OWB11g, DML Error Logging and Data Rules

One of the new features in OWB 11g (and back-ported to OWB 10.2.03) is support for DML Error Logging. DML Error Logging is a database feature introduced with Oracle 10gR2 that lets you add a LOG ERRORS clause to your DML statement, so that DML operations that would normally fail due to a constraint violation will instead move the erroneous rows into a log table and complete the load as normal. DML Error Logging is particulary interesting to data warehouse people as it means we can safely load our tables using direct path INSERTS rather than having to write complex PL/SQL to catch any exceptions.

I wrote about DML Error Logging in a blog post last year and in this article for Oracle Magazine. One of the drawbacks in the 10gR2 implementation of DML Error Logging was when you tried to use it in conjunction with conventional path inserts, which for whatever reason caused the statement to run a lot slower than if you didn't include the LOG ERRORS clause, and so I was interested to find out whether that issue still occured with 11g. Looking back at the article and in particular the blog post, even direct path loads using LOG ERRORS came in a little bit slower than writing your own exception handler in PL/SQL, so I was also interested to know whether OWB mappings that used DML Error Logging were faster or slower than mappings that used data rules, which switch the mapping over to row-based mode and load the table using PL/SQL.

To start off then, I thought I'd recreate the tests used in the Oracle Magazine article and see how the timings compare when using the 11g version of Oracle rather than 10gR2. I wasn't really looking at absolute times - I'm running Oracle on a different machine to the one I used then - but instead at relative times between the running inserts using conventional path and direct path inserts using, and then not using, the LOG ERRORS clause, and to compare this with a PL/SQL row-by-row insert using the SAVE EXCEPTIONS clause.

I started off by re-creating the tables in the two articles and ran a direct path insert using DML Error Logging:

SQL> insert /*+ APPEND */ 2 into sales_target_con 3 select * 4 from sales_src 5 log errors 6 reject limit unlimited 7 ;918834 rows created.

Elapsed: 00:00:03.13

OK, around 3 seconds, which compares well with the 5 seconds in my 10gR2 test. What if I run the insert in conventional path?

SQL> insert 2 into sales_target_con 3 select * 4 from sales_src 5 log errors 6 reject limit unlimited 7 ;918834 rows created.

Elapsed: 00:00:29.67

So that's around 30 seconds compared to 3 seconds. But how much of that is down to just running in conventional path mode? Let's run the first one again, take off the LOG ERRORS clause but remove the rows that could cause an error.

SQL> insert /*+ APPEND */ 2 into sales_target_con 3 select * 4 from sales_src 5 where promo_id is not null 6 and amount_sold > 0 7 ;918834 rows created.

Elapsed: 00:00:04.05

That's interesting, it's slightly slower to filter out the bad rows rather than have LOG ERRORS deal with it. It's within a margin of error though so I wouldn't read too much into this.

What about if we run the conventional path insert without the LOG ERRORS clause?

SQL> insert 2 into sales_target_con 3 select * 4 from sales_src 5 where promo_id is not null 6 and amount_sold > 0 7 ;918834 rows created.

Elapsed: 00:00:04.21

OK, it's back to four seconds. So there's definately a performance hit when you do a conventional path insert and choose the LOG ERRORS clause, so that issue is still there with 11g.

Before going on to the OWB test I also ran the four insert statements with primary key constraints on the table being inserted in to - the original test didn't have a primary key on this table, but as in real life I'm likely to require one, and the data rule feature in OWB requires that we have one in place, I gave it a go.

The timings for the four inserts with a primary key present were

- Direct path insert with DML Error logging - 22 seconds

- Conventional path insert with DML Error logging - 43 seconds

- Direct path insert with no error logging - 19 seconds

- Conventional path insert with no error logging - 27 seconds

Given the non-scientific nature of the tests I wouldn't read too much into differences of a few seconds, but certainly running DML Error Logging in conventional path still adds quite a big overhead to your table insert.

In the original article, I also compared DML Error Logging with custom PL/SQL code that used the SAVE EXCEPTIONS clause. How did this perform in 11g?

Running the code without a primary key constraint on the target table came in as follows:

SQL> DECLARE

2 TYPE array IS TABLE OF

sales_target_con_plsql%ROWTYPE

3 INDEX BY BINARY_INTEGER;

4 sales_src_arr ARRAY;

5 errors NUMBER;

6 error_mesg VARCHAR2(255);

7 bulk_error EXCEPTION;

8 l_cnt NUMBER := 0;

9 PRAGMA exception_init

10 (bulk_error, -24381);

11 CURSOR c IS

12 SELECT *

13 FROM sales_src;

14 BEGIN

15 OPEN c;

16 LOOP

17 FETCH c

18 BULK COLLECT

19 INTO sales_src_arr

20 LIMIT 100;

21 BEGIN

22 FORALL i IN 1 .. sales_src_arr.count

23 SAVE EXCEPTIONS

24 INSERT INTO sales_target_con_plsql

VALUES sales_src_arr(i);

25 EXCEPTION

26 WHEN bulk_error THEN

27 errors :=

28 SQL%BULK_EXCEPTIONS.COUNT;

29 l_cnt := l_cnt + errors;

30 FOR i IN 1..errors LOOP

31 error_mesg :=

SQLERRM(-SQL%BULK_EXCEPTIONS(i).ERROR_CODE);

32 INSERT INTO SALES_TARGET_CON_PLSQL_ERR

33 VALUES (error_mesg);

34 END LOOP;

35 END;

36 EXIT WHEN c%NOTFOUND;

37 END LOOP;

38 CLOSE c;

39 DBMS_OUTPUT.PUT_LINE

40 ( l_cnt || ' total errors' );

41 END;

42 /

9 total errors

PL/SQL procedure successfully completed.

Elapsed: 00:00:11.03

Running it again with the primary key in place brought the time up to around 19 seconds. If we take the most realistic scenario - inserting into a table that has a primary key - the timings now come out like this:

- Direct path without DML Error Logging - 19 seconds

- PL/SQL with SAVE EXCEPTIONS - 19 seconds

- Direct path insert with DML Error Logging - 22 seconds

- Conventional path insert without error logging - 27 seconds

- Conventional path insert with error logging - 43 seconds

which would seem to suggest that, if you can strip out the erroneous rows beforehand and then run your insert in direct path mode, or alternatively write your own PL/SQL error handler, that's the fastest, otherwise do a direct path insert with error logging turned on but whatever you do, don't use error logging with a conventional path insert otherwise your load will grind to a halt.

So, how does this all relate to Warehouse Builder then? As a quick recap, Warehouse Builder from 10.2.0.3 onwards lets you enter an "Error Table" name for each target table in a mapping, wherapon it will insert a LOG ERRORS clause into the DML statement that loads the table. If you've licensed the Data Quality Option for OWB, as an alternative you can define a data rule and attach it to a table, which again makes warehouse builder direct all the error rows into an error table (albeit one that OWB defines for you automatically). The kicker though is that mappings using data rules then run only in row-based, PL/SQL mode, which when all things are considered usually runs a bit slower than set-based code. I wonder though, whether these latest versions of OWB actually use DML Error Logging for the data rule mappings and then run in set-based mode as well, and to find this out I put a couple of mappings together. For all these tests onwards, I've added a primary key to the target table as this is the most realistic scenario.

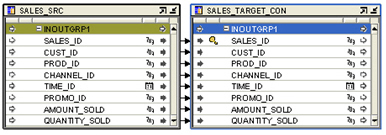

The mapping itself performed the same load logic as the SQL statements beforehand. For the mapping that was going to use DML Error Logging, I just copied the columns from the source table into the target table, like this:

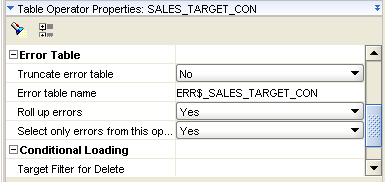

To turn on the DML Error Logging feature, I selected the target table and entered the log table name (created previously from SQL*Plus using DBMS_ERRLOG.CREATE_ERROR_LOG).

Taking a look at the mapping code that OWB generates for this, you can see the LOG ERRORS clause in place:

INSERT

/*+ APPEND PARALLEL("SALES_TARGET_CON") */

INTO

"SALES_TARGET_CON"

("SALES_ID",

"CUST_ID",

"PROD_ID",

"CHANNEL_ID",

"TIME_ID",

"PROMO_ID",

"AMOUNT_SOLD",

"QUANTITY_SOLD")

(SELECT

"SALES_SRC"."SALES_ID" "SALES_ID",

"SALES_SRC"."CUST_ID" "CUST_ID",

"SALES_SRC"."PROD_ID" "PROD_ID",

"SALES_SRC"."CHANNEL_ID" "CHANNEL_ID",

"SALES_SRC"."TIME_ID" "TIME_ID",

"SALES_SRC"."PROMO_ID" "PROMO_ID",

"SALES_SRC"."AMOUNT_SOLD" "AMOUNT_SOLD",

"SALES_SRC"."QUANTITY_SOLD" "QUANTITY_SOLD"

FROM

"SALES_SRC" "SALES_SRC"

)

LOG ERRORS INTO ERR$_SALES_TARGET_CON

(get_audit_detail_id) REJECT LIMIT 50

;

So I save the mapping and switch over to the Control Center Manager, deploy the mapping and then run it. Looking at the timings, it came in at around 18 seconds, a little bit faster than the hand-written insert statement, but again within a margin of error.

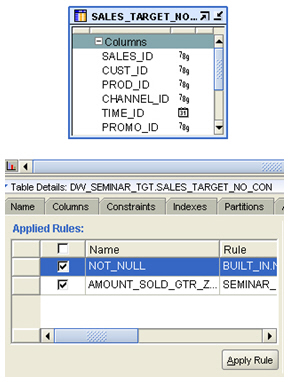

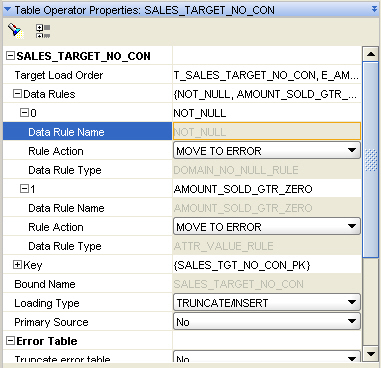

For the mapping that used a data rule to handle errors, I first created a couple of manual data rules, one for AMOUNT_SOLD > 0 and one for PROMO_ID is NOT NULL. I then added this to the target table using the Data Object Editor, like this:

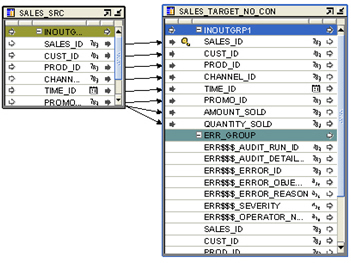

I then created the mapping again, which this time showed some "error rows" on the target table operator as I'd now associated this table with a set of data rules.

>

>

Over on the table operator properties panel, I turned on the two data rules and set them to move all errors to the error logging table.

Taking a look at the generated code, it's clear that the mapping isn't using DML Error Logging; it still creates a few test selections, works out whether any errors have occurred, in some cases inserts into the target table using regular (i.e. non-DML Error Logging) direct path insert statements, in some cases inserts using BULK COLLECT .. FORALL. This is not neccesarily a problem, as we saw from the first tests using PL/SQL can actually be as fast as direct path inserts using LOG ERRORS, but it'll be interesting to see how the load performs.

Running the test first time around was a bit of a surprise actually, as it still errored when trying to insert null values into the PROMO_ID column - this column has a NOT NULL constraint and is one of the constraints that causes the load otherwise to fail. When using DML Error Logging, the load carried on as normal though, whereas with a data rule, it must still be trying to insert the null value as the mapping errors at this point. Removing the NOT NULL constraint allows the mapping to run, which means that (and I remember this from when I first tried out data rules) it won't protect you from an actual, physical constraint violation, as data rules assume that all data checks are carried out "virtually" within the OWB repository.

Anyway, running the mapping again with the PROMO_ID constraint dropped gave a run time of between 2 and 3 minutes, which I checked a few times by re-running the mapping. Taking a look through the PL/SQL code generated shows that it's not using the SAVE EXCEPTIONS clause, and that's even with the "Bulk Processing Code" option checked in the mapping configuration, so whatever's going on there, on the face of it it's not as efficient a way of handling potential mapping errors as using the LOG ERRORS feature.

Of course with data rules, and the Data Quality Option in general, you get a lot more features, such as the ability to siphon off the error rows and correct them as part of the same mapping, plus of course your repository and mapping now contain metadata on the allowable values in your warehouse and how errors are corrected. Performance-wise though, as long as you ensure the table insert runs in direct path mode, using the LOG ERRORS clause is faster than using data rules, although of course clearing out potential errors prior to loading your tables, and then loading them without any additional error handling features, is usually faster still.

Anyway, support for DML Error Logging in OWB 11g and 10.2.0.3 looks like a pretty neat feature, and of course it doesn't require you to license the Data Quality Option. Whilst I wouldn't use it wholesale on all mappings - adding the clause slows down your inserts even if you don't hit any errors - it's a nice feature you can switch on fairly easily and it's about the fastest way you can gracefully handle errors in a mapping.