Incremental refresh of Exalytics Aggregates using TimesTen

Introduction

One of the key reasons that Exalytics can deliver sub-second response times is the use of TimesTen in-memory database to hold data pre-calculated to various levels of aggregation as required by the reports. Last year I wrote about Alternatives to the Summary Advisor when considering how to build and maintain these aggregates in TimesTen on Exalytics. This is something I also discussed in my recent UKOUG presentation, Exalytics - One Size Fits All?. In these, I outlined how the functionality “out of the box” works, and also why you might want to consider alternatives, the key reasons for which are:

- When Aggregate Persistence runs, it modifies the RPD online. In a Production environment this is often not desirable.

- Aggregate Persistence does not currently support incremental refresh. If the aggregates take a lot of time to build then the batch window may not be sufficient to do a complete rebuild.

Just to whet your appetite, using the method I describe below the refresh time for a single aggregate table can be cut from over 20 minutes (using the standard Aggregate Persistence method - full rebuild) to under two seconds to do an incremental refresh of the data…

NB Aggregate Persistence is the refresh technology that OBIEE uses, whether you generate the script using the Exalytics-only Summary Advisor or the standard Aggregate Persistence Wizard.

In this post I am going to show how to use a hybrid of Aggregate Persistence and new functionality in native TimesTen to implement incremental refresh as well as avoid the online update of the RPD.

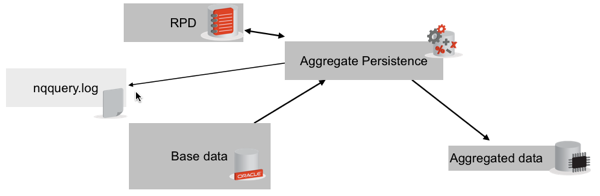

Overview

TimesTen 11.2.2.4 includes new “Load From Oracle” functionality which enables TimesTen to connect directly to Oracle and load data into TimesTen tables from it. This is not the same as In-Memory DataBase Cache (IMDB), which has existed for TimesTen and Oracle for a long time. This is new in 11.2.2.4, and provides an excellent way for loading data into TimesTen from Oracle, without much setup needed. For full details, see the documentation here

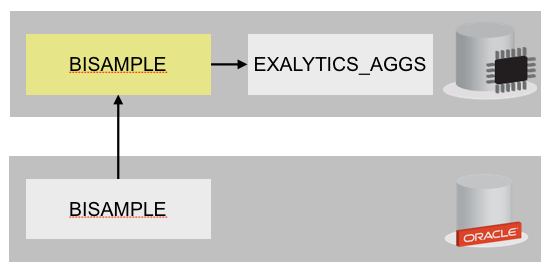

The method I am going to describe has two parts:

- The initial build. This is standard Exalytics, using Aggregate Persistence, from either the Summary Advisor or Aggregate Persistence Wizard. This step will do several things:

- Generate the SQL that we will subsequently use to populate the tables.

- Update the RPD with the aggregates in the Physical and BMM lays

- Create the physical tables in TimesTen and do the initial load.

This step needs to be run just once per set of aggregates.

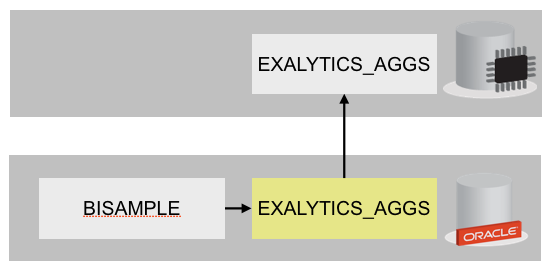

The data refresh, using the SQL extracted from Aggregate Persistence and TimesTen’s CreateAndLoadFromOraQuery command.

This step is run each time the aggregate data needs to be refreshed

Step-by-step - Part 1 - Initial build

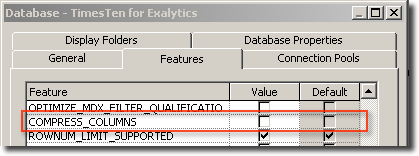

Make sure the Database Features for Exalytics in the your RPD has COMPRESS_COLUMNS disabled, since refresh of compressed tables using this method is not yet supported in TimesTen (11.2.2.4.1)

Run Summary Advisor (or Aggregate Persistence Wizard) to create the Aggregate Persistence script. In this example I’ve created a very simple aggregate using the standard SampleSales RPD:

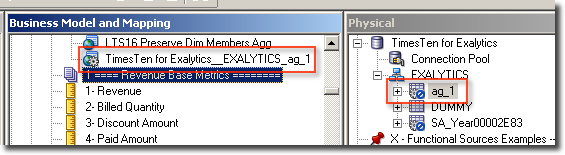

create aggregates "ag_1" for "01 - Sample App"."F0 Sales Base Measures"("1- Revenue") at levels ("01 - Sample App"."H0 Time"."Year") using connection pool "TimesTen for Exalytics"."Connection Pool" in "TimesTen for Exalytics".."EXALYTICS"Execute the Aggregate Persistence script. This will create and populate the initial aggregates on TimesTen. It will also do the appropriate ‘plumbing’ in the RPD so that the aggregates are available for use (adding into the Physical and Logical layers):

$ nqcmd -d AnalyticsWeb -u weblogic -p welcome1 -s sa.sql ------------------------------------------------------------------------------- Oracle BI ODBC Client Copyright (c) 1997-2011 Oracle Corporation, All rights reserved ------------------------------------------------------------------------------- Connection open with info: [0][State: 01000] [DataDirect][ODBC lib] Application's WCHAR type must be UTF16, because odbc driver's unicode type is UTF16 create aggregates "ag_1" for "01 - Sample App"."F0 Sales Base Measures"("1- Revenue") at levels ("01 - Sample App"."H0 Time"."Year") using connection pool "TimesTen for Exalytics"."Connection Pool" in "TimesTen for Exalytics".."EXALYTICS" create aggregates "ag_1" for "01 - Sample App"."F0 Sales Base Measures"("1- Revenue") at levels ("01 - Sample App"."H0 Time"."Year") using connection pool "TimesTen for Exalytics"."Connection Pool" in "TimesTen for Exalytics".."EXALYTICS" Statement execute succeeded Processed: 1 queries $Opening up the RPD shows that the physical tables have been created in TimesTen, and the Logical Table Sources have been added in the BMM layer.

Step-by-step - Part 2 - Aggregate refresh from TimesTen

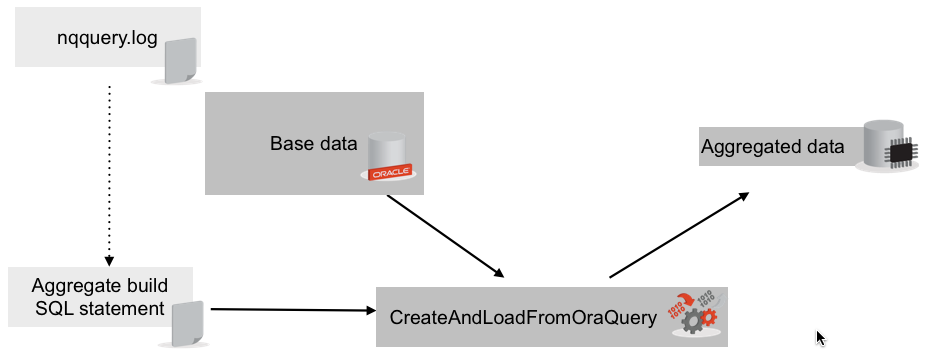

Having created the aggregate(s) we can now process the log file and put the refresh logic into TimesTen directly.

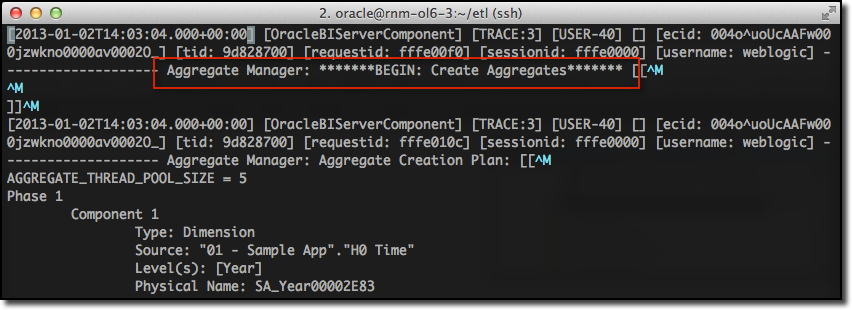

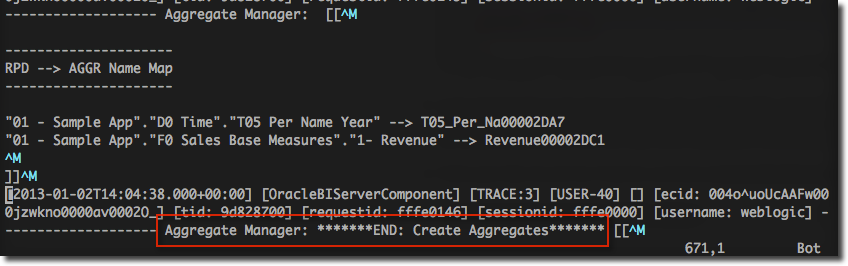

Load nqquery.log and extract the section that starts “BEGIN: Create Aggregates” and ends “END: Create Aggregates” (minus the quotation marks). Save this to a separate file, for example ag_1.nqquery.log.

In the extracted file (in the above example, ag_1.nqquery.log), extract the SQL that’s used to populate each table. Remember for each fact aggregate there is going to be at least one support aggregate dimension table too. Put each of the refresh statements in their own SQL file for subsequent manipulation.

Prefix each SQL file with the TimesTen command createandloadfromoraquery using the syntax:

createandloadfromoraquery <OWNER>.<TABLE>where <OWNER> is the Exalytics schema owner, and <TABLE> is the tablename being loaded

If rebuilding a table (i.e. not appending data) then prefix the file with:

TRUNCATE TABLE <OWNER>.<TABLE>;- For example:

Original extract: (as generated by OBIEE’s Aggregate Persistence execution, and subsequently extracted from the nqquery.log)

WITH SAWITH0 AS (select distinct T42406.PER_NAME_YEAR as c1 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ ) select distinct D1.c1 as c1, ROW_NUMBER() OVER ( ORDER BY D1.c1) as c2 from SAWITH0 D1With createandloadfromora prefix added:

createandloadfromoraquery EXALYTICS.SA_YEAR00002E83 WITH SAWITH0 AS (select distinct T42406.PER_NAME_YEAR as c1 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ ) select distinct D1.c1 as c1, ROW_NUMBER() OVER ( ORDER BY D1.c1) as c2 from SAWITH0 D1With a TRUNCATE statement first:

TRUNCATE TABLE EXALYTICS.SA_Year00002E83; createandloadfromoraquery EXALYTICS.SA_YEAR00002E83 WITH SAWITH0 AS (select distinct T42406.PER_NAME_YEAR as c1 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ ) select distinct D1.c1 as c1, ROW_NUMBER() OVER ( ORDER BY D1.c1) as c2 from SAWITH0 D1

- By this point you should have at least two (one fact and minimum one dimension) SQL files. To run them we first need to make sure that TimesTen can connect to Oracle. There are two things to setup:

Oracle connection details from TimesTen. This can be defined using a TNS entry - but you then need to make sure TimesTen is configured with the correct tnsnames.ora file- or more easily using EasyConnect syntax such as

localhost:1521/orcl- User credentials. Because of how the connection from TimesTen to Oracle works, the connection we’re going to create between TimesTen and Oracle needs to use a single username that exists on both. There are a few ways to do this:

- Create a new user on TimesTen using the Oracle username. Give it permission to create tables in the TimesTen schema we're using to store the aggregates.

or - Create a new user on Oracle, using the TimesTen username. Give it permission to select data from the Oracle schema where the base (unaggregated) is stored

or - Store data in the same schema name on Oracle and TimesTen

- Create a new user on TimesTen using the Oracle username. Give it permission to create tables in the TimesTen schema we're using to store the aggregates.

In my example I’ve used the first option - creating the Oracle username (BISAMPLE) on TimesTen and giving it permission on the target TimesTen schema (EXALYTICS). To do this, connect to TimesTen database and set up required username to match Oracle. Assign required privileges.

create user BISAMPLE identified by password; GRANT CREATE SESSION TO BISAMPLE; GRANT CREATE ANY TABLE, INSERT ANY TABLE, UPDATE ANY TABLE, DELETE ANY TABLE TO BISAMPLE;NB. If more granular security is required then see the documentation for information on the privileges that can be granted to individual objects

To test the connection between TimesTen and Oracle, use the ttIsql program, with the following connection string syntax:

ttIsql -connStr "DSN=TT_AGGR_STORE;UID=BISAMPLE;PWD=password;OracleNetServiceName=localhost:1521/orcl;OraclePWD=BISAMPLE"Where

- DSN - The DSN of your TimesTen database (as defined in sys.odbc.ini)

- UID - The username that exists on both TimesTen and Oracle

- PWD - The password for the TimesTen user (UID)

- OraclePWD - The password for the Oracle user (UID)

- OracleNetServiceName - either a TNS entry, or (easier) use EasyConnect syntax (host:port/SID)

When you've entered the ttIsql command you should get the Command> prompt – but note that this doesn’t prove that the connection to Oracle is working. To do this, we can take advantage of the ttTableSchemaFromOraQuery procedure. This runs a query against Oracle (and returns a CREATE TABLE statement, but for our purposes it just serves to validate a query can be executed against Oracle):

Command> call ttTableSchemaFromOraQueryGet('BISAMPLE','TMP','SELECT 1 FROM DUAL'); < CREATE TABLE "BISAMPLE"."TMP" ( "1" number ) > 1 row found.If you get an error at this point then you need to resolve it, since the next steps rely on having the connectivity between TimesTen and Oracle working.

- So, we have our aggregate SQL extracted and amended to run on TimesTen. We’ve set up our users and connection so that TimesTen can connect to Oracle. Now, to execute the aggregate refresh script, you can either:

- run it interactively from ttIsql

- pass it as a script option to ttIsql

For debugging, the former is easier, whilst to run as part of an ETL batch the latter is more sensible. Here’s running it using the script option to run it as part of a batch:

$ ttIsql -connStr "DSN=TT_AGGR_STORE;UID=BISAMPLE;PWD=password;OracleNetServiceName=localhost:1521/orcl;OraclePWD=BISAMPLE" -f ~/etl/SA_Year00002E83.sql Copyright (c) 1996-2011, Oracle. All rights reserved. Type ? or "help" for help, type "exit" to quit ttIsql. connect "DSN=TT_AGGR_STORE;UID=BISAMPLE;PWD=password;OracleNetServiceName=localhost:1521/orcl;OraclePWD=BISAMPLE"; Connection successful: DSN=tt_aggr_store;UID=BISAMPLE;DataStore=/u01/data/tt/tt_aggr_store;DatabaseCharacterSet=AL32UTF8;ConnectionCharacterSet=US7ASCII;DRIVER=/u01/app/oracle/product/TimesTen/tt1122/lib/libtten.so;PermSize=256;TempSize=256;TypeMode=0;OracleNetServiceName=localhost:1521/orcl; (Default setting AutoCommit=1) run "/home/oracle/etl/SA_Year00002E83.sql"; TRUNCATE TABLE EXALYTICS.SA_Year00002E83; createandloadfromoraquery EXALYTICS.SA_YEAR00002E83 WITH SAWITH0 AS (select distinct T42406.PER_NAME_YEAR as c1 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ ) select distinct D1.c1 as c1, ROW_NUMBER() OVER ( ORDER BY D1.c1) as c2 from SAWITH0 D1; Warning 2207: Table EXALYTICS.SA_YEAR00002E83 already exists 8 rows loaded from oracle. exit; Disconnecting... Done. [oracle@rnm-ol6-3 instance1]$

So at this point, you have a scripted method for refreshing aggregate tables held in TimesTen without touching the RPD.

Didn’t someone say INCREMENTAL?

For incremental refresh of fact tables, customise the WHERE clause as necessary to pick up just data that is expected to have changed. Here you have to understand the data you’re loading and particularly how it can be updated. Typically you will need to do two things for the incremental refresh:

- DELETE some data from the aggregate table for the latest date range (eg on a weekly aggregate table, current week)

- Use a WHERE clause in the aggregate refresh statement to only pull in data for the correct date range.

Things get more complicated if you consider there might be late data being updated in older date ranges etc, but the approach will remain the roughly same.

For example, if refreshing a yearly aggregate for 2010, the following SQL could be used:

delete from exalytics.ag_1 where T05_PER_NA00002DA7 = 2010; createandloadfromoraquery EXALYTICS.ag_1 select T42406.PER_NAME_YEAR as c1, sum(T42442.Revenue) as c2 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ , BISAMPLE.SAMP_REVENUE_FA2 T42442 /* F21 Rev. (Aggregate 2) */ where ( T42406.QTR_KEY = T42442.Bill_Qtr_Key ) and T42406.PER_NAME_YEAR = 2010 group by T42406.PER_NAME_YEARWhich in practice would work like this:

Data exists in the fact table:

Command> select * from ag_1; < 2008, 23500000.0000000 > < 2009, 23000000.0000000 > < 2010, 23500000.0000000 > 3 rows found.Run the incremental load for new data

$ ttIsql -connStr "DSN=TT_AGGR_STORE;UID=BISAMPLE;PWD=password;OracleNetServiceName=localhost:1521/orcl;OraclePWD=BISAMPLE" -f ~/etl/ag_1.incr.sql Copyright (c) 1996-2011, Oracle. All rights reserved. Type ? or "help" for help, type "exit" to quit ttIsql. connect "DSN=TT_AGGR_STORE;UID=BISAMPLE;PWD=password;OracleNetServiceName=localhost:1521/orcl;OraclePWD=BISAMPLE"; Connection successful: DSN=tt_aggr_store;UID=BISAMPLE;DataStore=/u01/data/tt/tt_aggr_store;DatabaseCharacterSet=AL32UTF8;ConnectionCharacterSet=US7ASCII;DRIVER=/u01/app/oracle/product/TimesTen/tt1122/lib/libtten.so;PermSize=256;TempSize=256;TypeMode=0;OracleNetServiceName=localhost:1521/orcl; (Default setting AutoCommit=1) run "/home/oracle/etl/ag_1.incr.sql"; delete from exalytics.ag_1 where T05_PER_NA00002DA7 = 2010; 1 row deleted. createandloadfromoraquery EXALYTICS.ag_1 select T42406.PER_NAME_YEAR as c1, sum(T42442.Revenue) as c2 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ , BISAMPLE.SAMP_REVENUE_FA2 T42442 /* F21 Rev. (Aggregate 2) */ where ( T42406.QTR_KEY = T42442.Bill_Qtr_Key ) and T42406.PER_NAME_YEAR = 2010 group by T42406.PER_NAME_YEAR; Warning 2207: Table EXALYTICS.AG_1 already exists 1 row loaded from oracle. exit; Disconnecting... Done. [oracle@rnm-ol6-3 etl]$Validate the loaded data - figures for 2010 have been updated

Command> select * from exalytics.ag_1; < 2008, 23500000.0000000 > < 2009, 23000000.0000000 > < 2010, 24766814.4734000 > 3 rows found.Compare to source data on Oracle:

select T42406.PER_NAME_YEAR as c1, sum(T42442.Revenue) as c2 from BISAMPLE.SAMP_TIME_QTR_D T42406 /* D03 Time Quarter Grain */ , BISAMPLE.SAMP_REVENUE_FA2 T42442 /* F21 Rev. (Aggregate 2) */ where ( T42406.QTR_KEY = T42442.Bill_Qtr_Key ) group by T42406.PER_NAME_YEAR order by 1 asc; C1 C2 ---------- --------------- 2008 23500000 2009 23000000 2010 24766814.4734

Running as a refresh batch

The above illustrates the concepts for using aggregate refresh in TimesTen directly from Oracle. To use it in Production one would need to consider

TimesTen statistics gathering (ttOptUpdateStats / ttOptEstimateStats) after refreshing the data

Error handling & Notification

Management and deployment of the refresh scripts

Scheduling and orchestration of the scripts

Updating the scripts when new aggregates are required

Updating the scripts if the source metadata changes

At which point, the question of right tools for the right job raises itself. Quite possibly, shell scripting is ultimately not the best vehicle through which to deploy an ETL batch. Interestingly, it’s no worse/better than the current vanilla Exalytics approach, which is also script batch (nqcmd to execute Summary Advisor scripts).

All of the above can be done through shell scripting (for example, use cron to schedule, bash scripting to parse return codes, write log files, etc), the question is more whether it should be.

Ultimately, the ease of support and development is not going to be as great as through an Enterprise-ready tool such as Oracle Data Integrator. At the moment, there is no TimesTen ODI Knowledge Module that takes advantage of the Load From Oracle option, but I wouldn’t be surprised if one were in the pipeline.

Support, Limitations & Troubleshooting

The 11.2.2.4 version of TimesTen, which provides the new CreateAndLoadFromOraQuery command, is only certified as part of the Exalytics patchset 2 (v1.0.0.2). For more details, see here

This method won’t directly work with Aggregate Persistence scripts configured to use using_surrogate_key, since the Logical SQL used to populate the aggregate fact table has multiple Physical statements needed to get the keys generated in the dimensions. That’s not to say a single TimesTen statement couldn’t be fashioned to achieve the same result, but you won’t be able to get it directly from nqquery.log.

TimesTen 11.2.2.4 doesn’t yet support loading data from Oracle if the target TimesTen table is compressed. If you try to you will get the error

805: Loading of read optimized tables using the Load From Oracle feature has not been implementedThe command createandloadfromoraquery will create a table if it doesn’t exist already. Since the table must be created with the column names as specified in the RPD, problems can arise if we let TimesTen implicitly create a table which should already be there. If it creates it implicitly it will label the columns as they are in the SELECT clause, i.e. c1, c2, etc. One way around this is to use the ttLoadFromOracle procedure instead, which will abort if the table does not exist.

The character set used on Oracle and TimesTen must be the same, otherwise you get the error:

8296: TimesTen and Oracle database character sets do not match. TimesTen: AL32UTF8, Oracle: WE8MSWIN1252 5109: Cache Connect general error: BDB connection not open.For more information about picking and setting a character set for your TimesTen datastore, see the documentation.

Be aware that the nqquery.log written by BI Server uses UTF–8 encoding with a BOM (Byte Order Marker) at the beginning; if you use the file (or a descendent of it) directly with TimesTen then you will get puzzling errors such as this:

1001: Syntax error in SQL statement before or at: ", character position: 1 ???truncate table exalytics.SA_Month00000487 ^^^^^^^^^^^ The command failed.The solution is to strip out the BOM, which can be easily done on linux (h/t) using

tail --bytes=+4 original_file.txt fixed_file.txt