Report Flexibility, or Split-Second Performance : Pick One or the Other

Kevin McGinley wrote an interesting post the other week reflecting on Oracle Endeca Information Discovery (OEID), and the role that it's come to play within Oracle's BI tools strategy. Kevin's view was that whilst Oracle positioned OEID originally as an "unstructured data", or even "big data" tool, where it's got the most take-up in Kevin's view was around fast, ad-hoc analysis with minimal up-front data modelling, something that traditionally OBIEE has been a bit weak at. I was reminded of Kevin's post last week whilst on-site with a customer's IT department, who were struggling with that age-old problem: how do we provide a reporting system to our users that puts no restriction on what they can do, but that also returns the results of all queries within seconds. Why is this so hard then?

OBIEE, and most of the rest of the "enterprise" BI platforms in my experience, are typically selected and then implemented by the IT department, because it ticks lots of boxes around integration with ERP systems, corporate middleware and security, and they can usually get a good deal license-wise if it's part of a wider EBS upgrade, for example. IT departments then sell OBIEE to the business as offering flexibility and self-service reporting, all without having to involve the IT department when you need a new report creating. Which is true of course, but there's also the pleasant side-effect for IT in that users are, in fact, quite severely constrained on the data they can use in their reports, and the way in which they can combine it, and it usually does involve IT when changes to the RPD are made, for example to bring in a new data source or add a new hierarchy.

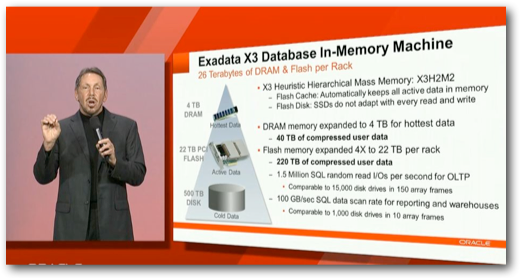

The reason for which, of course, is because the types of dataset typically reported against by OBIEE - large data warehouses, federated data marts, even transactional databases - will typically return results very slowly to users unless they've been indexed, summarised and otherwise optimized by the DBAs beforehand. Some of this is just basic physics - relational databases and disk-based storage is optimized for storing lots of data, in-detail, very safely, but you can't just throw any query at it and expect it to consistently return results in a split-second - not unless you bake-in some fairly constrained access paths, pre-compute and summarise in advance, or even use technologies such as Oracle Exalytics and TimesTen to replicate "hot spots" into an in-memory store.

So there you have it - you can either have flexibility, or speed, but not both. But should users accept this restriction?

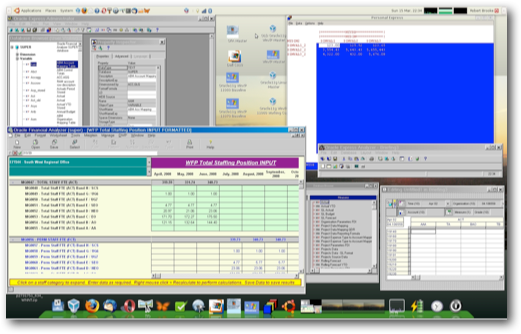

I first got into the BI game back in the late 90's, and back then there were systems you could deploy to users, that were both infinitely flexible, and fast; for example, the old Oracle Express Server and its "blue-screen" interface returned data in seconds albeit with fairly primitive client-server, or even DOS-based user interfaces as shown in the screenshot screenshot below (courtesy of the Independent Oracle OLAP Blog - brings back a few memories).

Even now if you go to certain client sites where they're major users of Excel, you'll see this sort of response time when they have Analysis Services providing the data, or more recently PowerPivot, Microsoft's new in-memory, column-store database.

So is it unreasonable for users to ask for both speed and flexibility, especially when they've spent millions on license fees for OBIEE, and they've got an Exadata server running in the background? I know Express Server and other OLAP tools have their own restrictions, but for sales analysis and other desktop BI applications, from the users' perspective have we really come all that far in the last ten to twenty years, or is it all excuses now?

Kevin makes the point in his post that perhaps Endeca Information Discovery fills this need now, with its "schema-less" data model and in-memory analysis, and we've certainly seen results that would support that - particularly when running Endeca on our Exalytics server, where the Endeca Server engine can leverage the entire 40 cores to massively-parellel query the in-memory data store. But Endeca though comes with its own limitations - there's no metadata layer, for example, and no obvious support for hierarchies or other "drill to detail" structures, though it'll be interesting to see how this pans out as Endeca gets integrated into the wider Oracle BI stack, perhaps working together with OBIEE's BI Server and using the same visualisation layer as the Presentation Server. Essbase of course could also meet this requirement too, but I'm not sure its integration with the OBIEE is quite at the point yet where end-users don't need to be aware that there's two different analysis engines running in the background.

So, do you think that it's fair to say "you can have report flexibility or performance, but not both", or is that just a limitation in our thinking, or the OBIEE toolset? Do you think having Endeca, or Essbase, in the background now makes this combination possible, or do you feel that, with the right amount of tuning, optimising and preparation, a decently set-up Exadata + Exalytics combination should be able to return results for users within a split-second, regardless of what they throw at it? I'd be interested in readers' opinions.