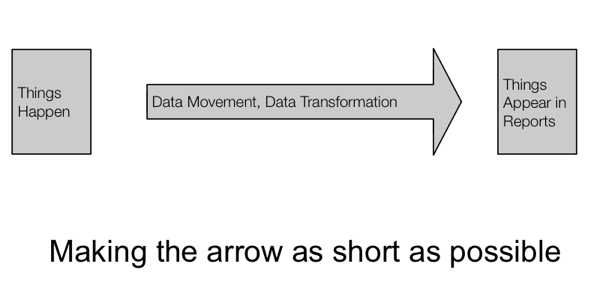

Tuning Philosophy - Tuning the Right Thing

My second presentation at this year's RMOUG Training Days was on tuning "realtime data warehouses"; as usual, this paper is now on the Rittman Mead Articles page. Perhaps more accurately my talk was more about my tuning philosophy rather than a cookbook of tuning "rules" to give optimal performance. I don't think there is a single recipe for guaranteed tuning success; the best we can come up with is set of principles for getting things as right as possible and to keep in our heads that each system has its own unique combinations of hardware, software and data and this interaction modifies the steps we should evaluate in our tuning process. The sub-title of my talk came from an early slide: "Making the Arrow as short as possible"

Another name for this arrow is "latency", the thing that stops "realtime" from actually being "same time". We will never have no arrow as the act of observing the event at source and reacting to output at target will always add some amount of delay to the data flow. I discussed this in a paper for the Evaluation Centre.

Rather than present my RMOUG talk here I will take a step back and write about how I go about improving performance in a data warehouse ETL.

Firstly, if we set out to improve performance we need to measure it, or else how can we be confident that we have "improved" things. This measurement can be as crude as clock time to run an ETL step, a throughput measure such as rows inserted per minute or we can really delve into performance and look fine detail such as number and type of database waits. I tend to start with coarse measurements. I do this for two simple reasons: execution time or throughput is often the business visible metric that is the basis of the problem perceived by the customer; and, from my experience of many data warehouses, the code as implemented may not be doing what the designers wanted, and there is little merit in tuning a process to do the "wrong thing" more quickly. I therefore take as my starting point what the business wants to achieve in the ETL step and not the query being run. Here I see four kinds of problems:

- The code used does not answer the business requirement.

- The query has a flaw that causes it to process too much data.

- The code uses inappropriate techniques.

- The process has redundant steps.

Too many times I have seen code created by developers that have insufficient understanding of what a relational database can do or what a particular vendor's database can do. I have seen people calculating the first of the month by taking the date, converting it to a string, then concatenate the "month and year" substring on to the literal "01 before converting it back to a date" because they don't know you can do this in a single function (TRUNC) in Oracle . I have seen developers re-inventing database joins as procedural steps (often they hand code nested loops!) rather than letting the database do it. I have seen others look for change in a row by computing the MD5 hash of the concatenated columns of the new row and comparing it to the previous MD5 hash for the original row. Don't get me wrong, MD5 hashing can work but it is so compute intensive that it can starve the database of vital CPU resource; For change detection I much prefer to use the mainstays of set based ETL: MINUS operators or simple outer joins between new and old data and looking for inequality between columns.

Once I am sure I am looking at the right problem I can go about optimising performance. I tend to start with OEM12c and the SQL Monitoring reports or good old fashioned EXPLAIN. Just getting the cardinalities right can help the CBO to do wonders. But accurate CBO costings are not always the solution, it is this stage that I start to look at the more physical aspects of design such as partitioning, parallel (assuming these are licensed), memory, concurrent activity, indexing and the way the tables are defined. More on this in another blog.