OBIEE, ODI and Hadoop Part 4: Hive Data Transformation & Integration via ODI 11g

In the previous three articles in this series (and well done for everyone that's still with us), we looked at how OBIEE 11.1.1.7 and ODI 11.1.1.6+ can access Hadoop data sources via a related technology called Hive, looking in the second article in more detail at the OBIEE aspect including how the OBIEE metadata import was set up, and how new HiveODBC drivers supplied by Oracle make this all possible. In the last posting in the series, we saw how ODI can be used to populate the Hive "warehouse" tables, stored on Hadoop's HDFS (Hadoop Distributed File System) storage grid, that are then analysed via HiveQL and MapReduce, and in this final post, we'll take a look at how ODI can go beyond simple loading from file into Hive tables and start to do some of the data transformation, and integration tasks that we regularly perform with more traditional data sources and targets.

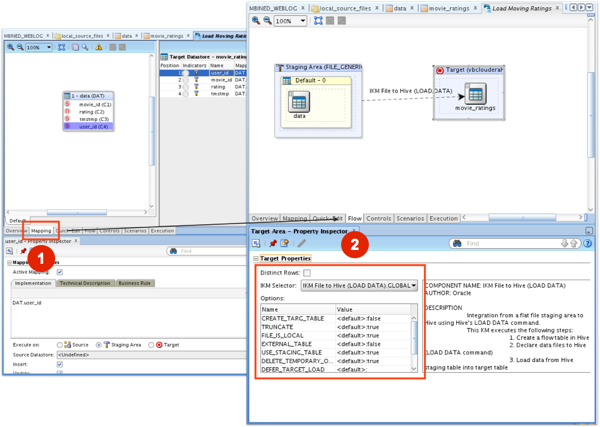

In the previous ODI and Hive/Hadoop example, we used an ODI interface to load data from a local file into a Hive table, using the IKM File to Hive knowledge module. In this first example columns in the source file were mapped 1:1 into "columns" in the Hive table source, like this:

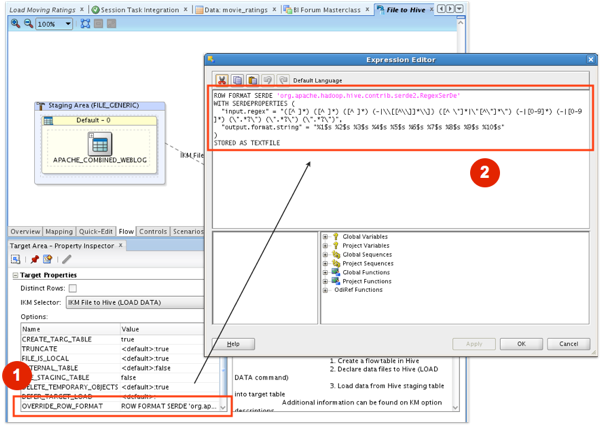

whilst in the second example, we used a Hadoop "SerDe" serializer-deserializer transformation to parse incoming weblog rows into the target Hive column format, again using this same IKM File to Hive knowledge module.

Which of course is great if you're loading data directly from files into corresponding Hive warehouse tables, but what if you've already got data in Hive but you want to "reshape" or transform it, creating the equivalent of a star schema, say, of a set of "normalised" tables?

Hive, compared to Oracle at least, is pretty simplistic in terms of the data manipulation you can do with it, and is more akin to working with Oracle external tables than a full insert-update-delete-ACID-compliant database (but then again, it was never intended to be that). You can only insert new data into Hive tables, not (ordinarily) update rows or delete them, with the only way to delete data in a Hive table being to drop it, then re-create it new. HiveQL has syntax for joins, group by and some functions, and you can drop in your own custom MapReduce scripts for the more complex stuff - which is not bad going and probably more than enough for your average Hadoop/Big Data analyst.

But the scenarios we're considering probably won't have a Hadoop expert around, and are typically something like:

- We've got some marketing or analyst-type users who want to access some behavioural, weblog or activity data sitting in Hadoop/HDFS/Hive, they're happy users of OBIEE, and we want to be able to connect OBIEE to this data so that they can report on it - which probably means "reshaping" it somewhat to fit OBIEE's preference for star-schema (or at least simplified, denormalized) source data models, or

- We've got some data sitting in Hadoop/HDFS that we'd like to add in as a new source into our data warehouse, and ODI is the preferred tool for bringing in new sources

In neither case do we have much in the way of Hadoop or MapReduce skills, so we can either (a) use OBIEE's BI Repository to do some source data reshaping, or even better (b) do the job properly, maybe as part of a much wider ETL process, using ODI. The key thing is fitting Hadoop into ODI's way of doing things, and giving ODI the ability to do Hadoop and Hive-specific tasks through its extensible "knowledge module" framework.

In the example above that I used to illustrate ODI's ability to work with Hive, I used the IKM File to Hadoop knowledge module that comes as part of the ODI Application Adaptor for Hadoop (ODIAAH); ODIAAH is one of a number of Fusion Middleware "application adapters", and is licensed separately (but in conjunction with) ODI EE costing around the same as GoldenGate (around $17000/processor, according to the current tech price list). For some more background into ODIAAH this self-study training session goes into the basics, but at a high-level this adapter actually ships four mainly Hive-based ODI KMs that enables data loading, transformation and integration between Hadoop/Hive/HDFS and the Oracle database (amongst other sources/targets);

- IKM File to Hive (Load Data) : what we've been using so far, used to load Hive tables from local and HDFS-stored files

- IKM Hive Control Append : used for loading data into Hive tables, with data sourced from one or more other Hive tables - the equivalent of loading one relational table by joining, filtering and transforming other tables

- IKM Hive Transform : a variation on the previous KM that allows incoming data to be transformed via Python or Perl scripts

- IKM File-Hive to Oracle : load data from either a Hive table, or from files stored on HDFS, into an Oracle database using the separately-licensed Oracle Loader for Hadoop

- CKM Hive : a check knowledge module for Hive (which because of its "schema on read" rather than "schema on write" approach, doesn't natively support keys or constraints)

- RKM Hive : a "reverse-engineering" KM that allows ODI to read table definitions from the Hive metastore, and use them to create ODI datastore definitions

A typical Oracle BI&DW project is going to use these KMs for a couple of main reasons; one, as stated before, is because their might be information sitting in Hadoop that the BI/DW system wants access to, and would otherwise have to start writing MapReduce, Pig, Sqoop etc code to get into their data warehouse. The other might be to leverage Hadoop's ability to crunch and count large sets of data massively parallel, at relatively low cost, with ODI then initiating and monitoring the process, then loading the results into a more traditional Oracle data store. Other technologies, some implemented or licensed by Oracle, also surround this process - Oracle NoSQL database for example - but for now lets concentrate on Hadoop and Hive, and see what else these KMs can do for us.

Starting off with IKM Hive Control Append, you might have a situation where you've already got some data in Hive tables (perhaps through loading them up using ODI's File to Hive (Load Data) KM, but the data needs joining, transforming, filtering or otherwise reshaping before you can connect a tool such as OBIEE to it. In this case it doesn't make sense to use ODI File to Hive (Load Data) as this KM is for when the data sits outside Hive in source files, so instead we can use IKM Hive Control Append to truncate/insert append new rows into an existing target Hive table.

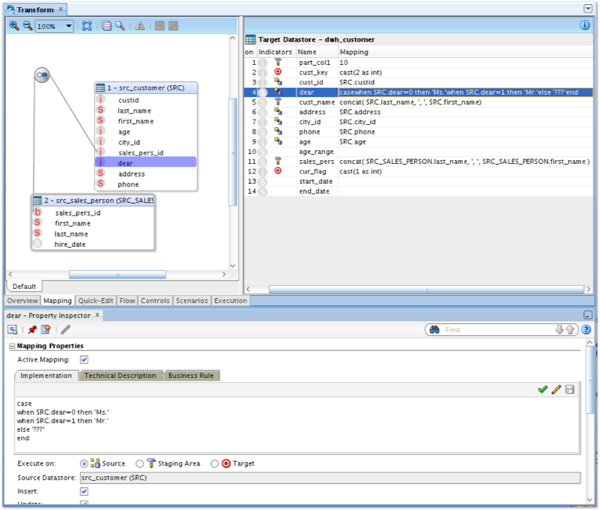

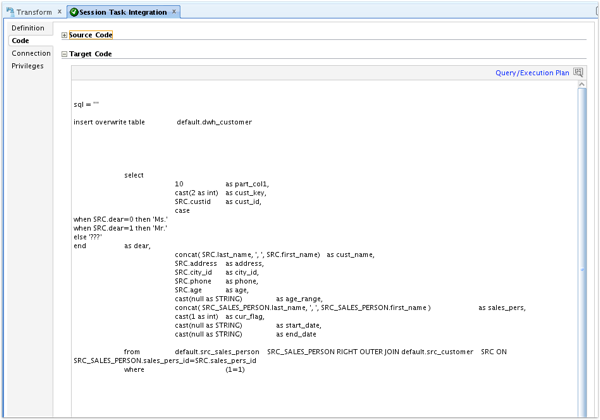

This process works very similar to regular table loading IKMs (except of course you can't insert/update into the target, only insert append); for example, in the screenshot below, two Hive tables, one for customer information and one for salesperson information, are joined and the results transformed using HiveQL with the results loaded into another Hive table.

Looking at the loading code generated by ODI for this interface, you can see that the HiveQL used to extract and load the data looks very similar to Oracle SQL, and in fact this KM is about the closest one to "regular" relational database ones out of the set of ODIAAH knowledge modules.

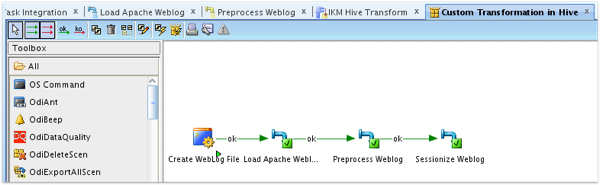

Things get more interesting with the IKM Hive Transform, a knowledge module that takes data from any source and loads it, via custom shell scripts, into a target Hive table. These transformation shell scripts are typically written in Python or Perl, and give you the ability to write your own custom pre-processing or transformation code that (via ODI's temporary interfaces feature) can then be used as "inline views" or multi-step processes when performing more complex data loading processes around Hive. In the example below, a three-step data loading process first creates and then loads an Apache Weblog file using IKM File to Hive (Load Data), then sessionizes (tags the log file with IDs to identify all activity within a particular browser session) using IKM Hive Transform:

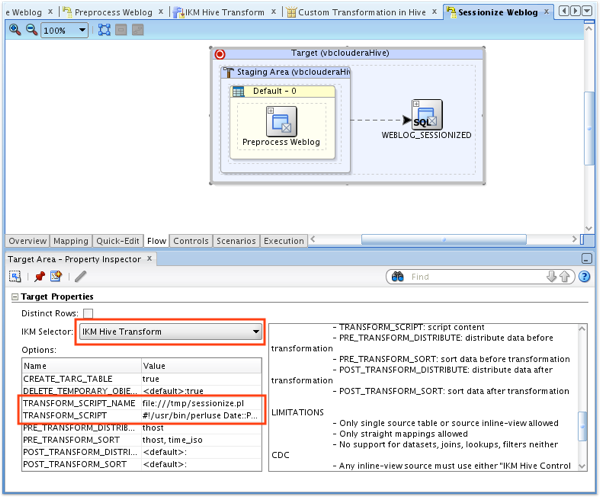

Taking a look at the final "Sessionize Weblog" step in more detail, you can see in the interface Flow tab that this step uses the IKM Hive Transform module, and a script called "sessionize.pl" to do the transform work.

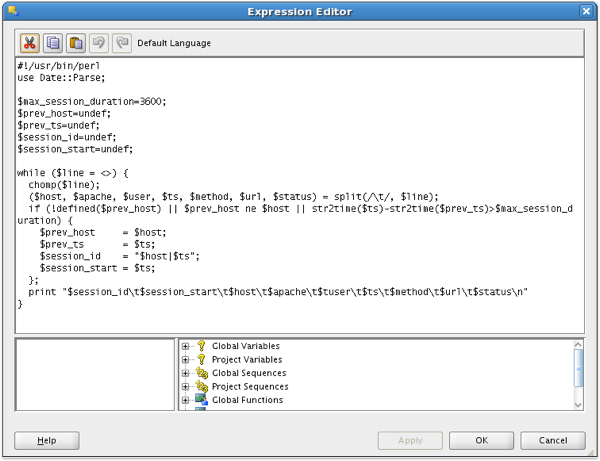

The perl script itself then parses through the log file information and works out the start, and stop points for each individual user session, outputting the results which are then transformed into the correct target columns by the PRE_TRANSFORM_DISTRIBUTION settings in the KM options.

Finally, the IKM File-Hive to Oracle knowledge module takes things in the other direction, extracting from Hive tables or HDFS files into an Oracle database, via the Oracle Loaded for Hadoop big data connector, but that's really a topic in itself and for another day, when I'd like to look in more detail at the big data connectors in general, and how you can leverage Hadoop and HFDS from within SQL and PL/SQL commands. For now though, this concludes my look at Hadoop connectivity from within OBIEE 11.1.1.7 and ODI.11.1.1.6, but if you're like me this brings up as many questions as it answers; for example:

- How would I go about setting up my own Hadoop/Hive/HDFS development environment, and can I use Windows or does only Linux make sense?

- Just what could I do with Hadoop as a data source that I can't do with regular Oracle and file sources; in terms of scale, and also complexity/speed?

- Can ODI also make use of Hadoop data loading/transformation tools like Pig, or Sqoop?

- Do any of the related/commercialized Hive/Hadoop technologies add anything to these scenarios - for example, Cloudera's Impala (for BI metadata/reporting) or Cloudera Manager (for Hadoop administration)?

- And - is there a time/volume threshold where Hadoop makes more sense as a data processing platform than an Oracle database?

If these sound interesting to you, they're exactly what I'll be covering during my part of the Data Integration Masterclass at the Rittman Mead BI Forum 2013 events in Brighton and Atlanta, running in just a few weeks time. We've still got a few places left, so if you're interested and want to see all this technology in action, sign-up now and I'll hopefully see you soon.