Conclusions on Storing Detail-Level Data in Oracle Exalytics

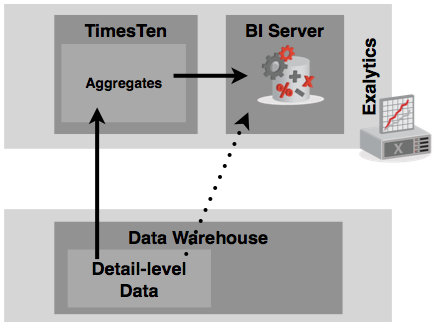

Last week I wrote a blog post on the topic of storing lots of detail-level data in Oracle Exalytics, not the primary use-case for the product but something we're increasingly asked about. Most implementations of Exalytics use OBIEE's Summary Advisor to recommend aggregations against large data warehouse datasets, with those aggregates then getting stored in TimesTen, but some customers are looking at their dataset, seeing that it's well under 1 or 2TB in size, and wondering whether it's possible to put the whole dataset in-memory, rather than just specific aggregations.

My initial conclusion on writing the article was that TimesTen, although fast for small datasets, wasn't particularly suited to querying large, sparse datasets because it lacked common VLDB features such as parallel query and partitioning. Instead, I recommended that readers look at the other database engine that comes with Exalytics - Essbase, and in particular the Aggregate Storage Option - as an alternative way of storing this volume of data.

And Essbase ASO performed very well at the task, rapidly loading 130m source rows of data into an ASO database, and then responding to queries typically within a couple of seconds. The source Oracle database took up to a minute to return queries (having disabled any materialised views in the background), and the TimesTen database performed as expected, typically taking 10 to 20 seconds to return answers. Except - having discussed the results with the TimesTen development team over the weekend, I'd missed out a crucial step, by not running TimesTen's Index Advisor after some queries had run, to create indexes that suited the dashboards I was analysing. In yesterday's post, then, I ran the Index Advisor on the two TimesTen databases, and as Oracle advised, the results were much, much better - in fact, faster than the Essbase ASO database that I'd been singing the praises of in the previous post.

But of course - now things aren't being fair on Essbase, as there's a similar step that you can perform on Essbase ASO databases, to run an advisor that recommends what are called "aggregate views", similar to Oracle database materialised views, based on the structure of your cube and the workload on your system. And there's also the Summary Advisor that I can run on the original Oracle source database, which should make certain queries against this source run faster. So which one is best?

Before we get into any more testing though, it's worth thinking about why we're looking at this scenario, and what Exalytics is designed for - at least, the OBIEE parts of Exalytics. When Exalytics was put together, Oracle's vision for the product was as a complement to technologies such as Exadata and Big Data Appliance, where the reporting dataset could typically be many, many terabytes in size. As such, you're never going to get all of the data into the 1TB (now 2TB) of RAM that's in the Exalytics server, so you've got two choices: either you take a detail-level subset of the entire dataset and put it into RAM (the SAP HANA approach, perhaps) or you take a slice of it - say, just the aggregates - and put those into memory instead. Going down the latter route means that Exalytics can work with source datasets many times the size of Exalytics' memory, and the approach fits with the general way that data warehouse systems are optimised, by pre-aggregating and pre-caching data.

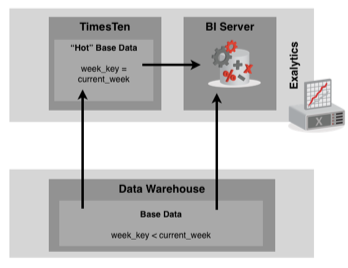

Some customers don't fit this model, though, but still want to take advantage of the power of Exalytics. Some might buy Exalytics to give them their own, departmental analytics server, in the same way that companies used to buy Hyperion Essbase or Oracle Express to be able to "own" their own analysis server. In this case, it's reasonable that they might want to put all the data required for analysis "in the Exalytics server", but this isn't a scenario catered for well by the Summary Advisor. Others might just have a reporting dataset that fits within the 1 or 2TB of RAM in the Exalytics server, and just say - let's put it all in there. Another scenario might involve copying just this month's data into TimesTen ("hot data"), and then using a feature such as OBIEE's LTS fragmentation to get the hot data from TimesTen, and the older data from Oracle. Whichever way, Exalytics has to be able to handle both summary-level queries, and retrievals of individual sets of rows, right down to the grain of the dataset.
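To make the "hot data" scenario a little more concrete, here's a minimal Python sketch of the routing decision that fragmentation implies. This is not how OBIEE itself is configured (that's done with fragmentation content in the RPD); the cutoff date, the source names and the `sources_for` function are all hypothetical, made up purely for illustration.

```python
from datetime import date
from typing import List

# Hypothetical cutoff: rows dated on or after this day sit in the in-memory
# "hot" fragment, anything earlier stays in the source database.
HOT_FROM = date(2013, 8, 1)


def sources_for(start: date, end: date) -> List[str]:
    """Return the fragments a query over the inclusive range [start, end] must read."""
    if start > end:
        raise ValueError("start must not be after end")
    sources = []
    if start < HOT_FROM:
        sources.append("oracle")    # older, on-disk fragment
    if end >= HOT_FROM:
        sources.append("timesten")  # current-month, in-memory fragment
    return sources
```

A query that only touches the current month would be answered entirely from the in-memory fragment, while one that spans the cutoff needs both fragments and a union of the results.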

So the way that TimesTen, with the Index Advisor, speeds up queries against large datasets actually serves this well - index access will suit retrieval of small sets of rows, and in fact the new indexes recommended by the Index Advisor in the example in yesterday's post even seemed to speed up aggregations as well, which was a bonus. The equivalent advisor within Essbase concentrates on aggregations though, not single "row" retrievals, so how does that work?
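As a rough illustration of why index access suits small row retrievals, here's a short Python sketch comparing a full scan with a lookup through a simple hash-style index. It's a toy model under stated assumptions, not TimesTen's actual index implementation; the `ROWS` data and the function names are invented for the example.

```python
from collections import defaultdict

# Invented detail rows standing in for a fact table.
ROWS = [
    {"order_id": 1, "cust": "A", "amount": 10.0},
    {"order_id": 2, "cust": "B", "amount": 25.0},
    {"order_id": 3, "cust": "A", "amount": 5.0},
    {"order_id": 4, "cust": "B", "amount": 40.0},
    {"order_id": 5, "cust": "C", "amount": 7.5},
]


def scan(rows, column, value):
    """Full scan: every row is inspected, however few of them match."""
    return [row for row in rows if row[column] == value]


def build_index(rows, column):
    """Build a value -> row positions map, paid for once up front."""
    index = defaultdict(list)
    for pos, row in enumerate(rows):
        index[row[column]].append(pos)
    return index


def lookup(rows, index, value):
    """Indexed access: only the matching row positions are touched."""
    return [rows[pos] for pos in index.get(value, [])]
```

Both routes return the same rows; the difference is that the scan's cost grows with the size of the table, while the indexed lookup's cost grows with the number of matching rows, which is why it matters more as the dataset gets larger.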

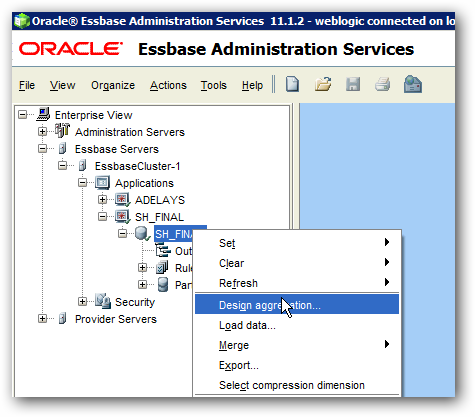

Whilst you can create ASO aggregate views manually, probably the easiest place to create them is within Essbase Administration Services Console, where there's a menu option against ASO databases for designing these aggregate views (remember, ASO databases automatically aggregate when queries run against non level-0 members, but this process is about pre-calculating more aggregates in order to speed up those queries).

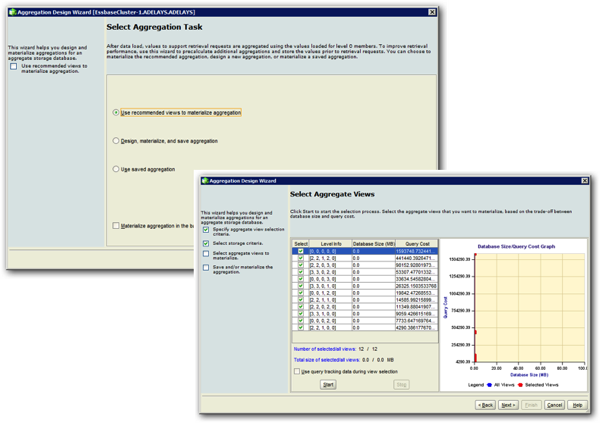

Selecting this menu option brings up a wizard that lets you either have it pick and materialise the aggregations for you, or select and materialise them individually, based either on the structure of the ASO database or on a query workload captured earlier.

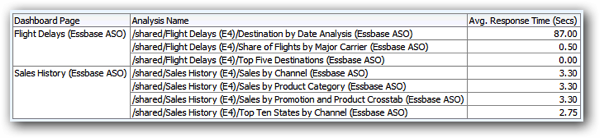

Creating the aggregates, for both the Sales History and the Airline Delays datasets, was surprisingly fast, and going back to the dashboards and running the queries again, response times dropped, as predicted.

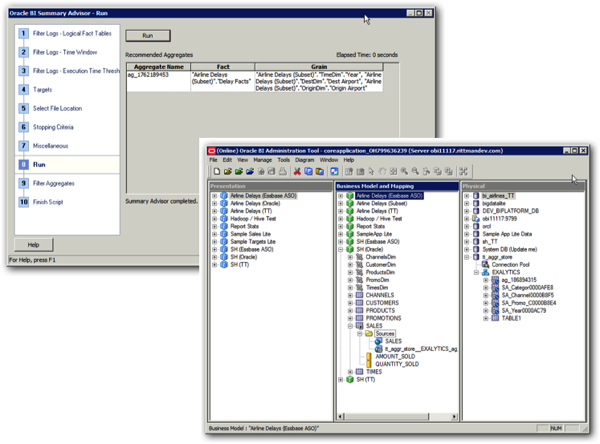

So in fact, from a purely response-time perspective, there's not really much in it between TimesTen (plus the Index Advisor) and Essbase ASO (and the Aggregation Design Wizard). For good measure I also created some in-memory aggregates for the Oracle source using the Summary Advisor, which recommended a couple for each, albeit with the TimesTen one coming in at a whopping 10GB.

So - to answer the question - if I want to put my entire dataset into Exalytics' RAM, either because in total it's less than 1TB in size, or because I want all my reporting data on my own server, in-memory, which option comes out best? In truth, there's no straight answer, with both TimesTen and Essbase ASO capable of doing the job, but each with their own limitations.

During the Essbase testing I'd been corresponding with Dan Pressman, author of the "How ASO Works and How to Design for Performance" chapter in the "Developing Essbase Applications" book, who was very bullish about Essbase Aggregate Storage Option in this type of scenario. In his opinion (and to paraphrase), Essbase ASO creates such compact databases (250MB vs. TimesTen's 10GB) and returns both detail-level and summary data so quickly because:

- "It effectively “compresses” the metadata when it creates the bitmap (which could be described as containing an efficient surrogate key for each piece of metadata)

- The bitmap not only identifies each piece of data at the lowest level but includes the full ancestry within the hierarchy.

- The full alternate hierarchy ancestry (for all alternates) of each data item is additionally represented in alternate bitmaps, which while not physically in the data file is available to the query evaluator

- This compressed metadata is very much like a column store index. Since the full identity all of the way up the hierarchy (and on alternate hierarchies) is included all possible “additive” queries can be answered from this one compact representation.

- ANY aggregate can be generated dynamically by querying the compressed bitmap. And if it fits in RAM then ANY aggregate can be calculated at RAM speeds. There is no need to use a summary advisor and hope that you got the right Aggregates.

- Even though there is no need to use a summary advisor, one exists: the aggregation wizard. It creates summary aggregations which have all the advantages of the original metadata compression – simply limited to the upper portions of the hierarchies not summarised in the aggregation process.

- This means that an aggregation can serve many queries."
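To get a feel for the idea in Dan's points, here's a small Python sketch in which each detail row carries its full ancestry, so any level can be aggregated in a single pass, and a stored mid-level aggregate can answer queries further up the hierarchy. It's a toy model only: a plain tuple stands in for the bitmap, this is not Essbase's actual storage format, and the hierarchy, data and function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical product hierarchy: leaf member -> (category, grand total).
ANCESTRY = {
    "laptop": ("hardware", "all"),
    "phone": ("hardware", "all"),
    "ebook": ("media", "all"),
}

# Invented detail rows: (leaf member, sales amount).
FACTS = [("laptop", 1200.0), ("phone", 600.0), ("ebook", 15.0), ("phone", 650.0)]

# Position of each hierarchy level within a row's full key.
LEVELS = {"leaf": 0, "category": 1, "total": 2}


def key_for(leaf):
    """Full key for a detail row: the leaf plus every ancestor above it."""
    return (leaf,) + ANCESTRY[leaf]


def aggregate(facts, level):
    """Roll the detail rows up to the named level in one pass over the data."""
    pos = LEVELS[level]
    totals = defaultdict(float)
    for leaf, amount in facts:
        totals[key_for(leaf)[pos]] += amount
    return dict(totals)


def rollup_from_category(category_totals):
    """Answer the 'total' level from a stored category-level aggregate."""
    parent_of = {category: top for category, top in ANCESTRY.values()}
    totals = defaultdict(float)
    for category, amount in category_totals.items():
        totals[parent_of[category]] += amount
    return dict(totals)
```

Because the ancestry travels with each row, `aggregate(FACTS, "category")` and `aggregate(FACTS, "total")` both work directly off the detail data, and `rollup_from_category` shows how one stored aggregation can go on to serve queries at the levels above it without touching the detail rows again.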

In addition, Dan observed (correctly) that the queries I ran from the various dashboards were pretty simple, and in his words "if instead you ran a more complicated grid type query that required results from all query categories, I believe you would find Essbase would win on the UNAGGREGATED cube - as TT would need results that would have to come from a number of indexed queries that would then have to be correlated. Essbase would be able to do all the levels in a single pass of the full 900k bitmapped rows."

But one thing that's also worth bearing in mind is that it was a lot harder to create the Essbase ASO dataset, even using Essbase Studio, than it was to replicate the tables into TimesTen using ttImportFromOracle. It's certainly possible to model a multiple-fact scenario in Essbase, but in practice reporting across multiple Essbase databases and modelling multiple "facts" is trickier in Essbase (within the OBIEE context), and I had to do lots of data-munging, such as prefixing member names to ensure uniqueness, that I didn't have to do with TimesTen. That said, the out-of-the-box performance with the Essbase ASO source was a lot better than the TimesTen one, and the RPD modelling part was easier as the import process creates all the RPD metadata out of the Essbase database outline.

And all of this assumes that you can get your full dataset into Exalytics' 1 or 2TB of RAM. With the Essbase ASO database, even with the additional aggregate views, the dataset was tiny, whereas with TimesTen the tables I imported came to around 10GB (with compression), fine for an Exalytics server but a slight warning that we'd have to carefully manage space when loading data into the server. And this is where the regular Summary Advisor comes into its own, taking just the aggregated data and storing that, leaving the detail in the source database with Exalytics just holding the aggregates.

Unfortunately, therefore, there's no black-and-white answer as to which technology is best when you want to store all of your reporting data, not just the aggregates, in Exalytics. The good news is that they both work (in the end), but each approach has its benefits - Essbase is easier to set up and model, gives the best initial results, but requires Essbase modelling skills and may not scale up to the point where you could reasonably model the entire BI Apps dataset in Essbase ASO, for example. TimesTen is a bit more fiddly, takes a bit of post-load tuning, but has the benefit of matching closely the structure and layout of the source Oracle database, making it perhaps a more realistic option when replicating a whole schema into RAM - as long as it all fits in.

For me though - in the short term, I'll be presenting this along with Stewart Bryson at next week's Enkitec Extreme Exadata Expo (E4) Conference in Dallas, Texas, as part of a wider "tips and tricks from the field" session on Monday afternoon. Beyond that, my next set of tests will be to revisit the work that Pete Scott and I did on accelerating an Oracle BI Apps dataset using Exalytics and TimesTen, this time with the Index Advisor and with the hope that we'll finally hit that goal of a data warehouse in-memory, with split-second response times. Watch this space, and maybe see one or two of you in Texas next week.