Using DAC11g to Replicate BI Apps DW Tables into TimesTen

A couple of months ago Peter Scott and I wrote a series of posts on using Exalytics with the Oracle BI Apps, with the idea being to try and use Exalytics' In-Memory database cache to speed up BI Apps dashboards. We looked at a number of approaches, including using OBIEE11g's Summary Advisor to replicate commonly-used BI Apps aggregates into TimesTen, and Pete looked at the more manual route of using TimesTen's ttimportfromOracle utility, which I expanded on a couple of weeks later. But there's another way you can replicate data into TimesTen that doesn't involve the Summary Advisor, scripts or indeed anything outside of a regular BI Apps installation - it's actually the DAC, which in the 11g release has a feature for replicating tables into the TimesTen in-memory database. So how does this work, and is it a viable solution for moving your BI Apps data warehouse in-memory?

As a bit of background for anyone who knows the BI Apps but is new to Exalytics and TimesTen, there are two typical use-cases for TimesTen when used in the context of OBIEE:

- As an in-memory aggregate cache, automatically populated and managed by OBIEE's Summary Advisor, as part of the Exalytics hardware and software package

- As a regular database but stored in-memory, used to hold detail-level data warehouse data plus any aggregate tables you choose to add-in

Strictly-speaking, the second use-case can be done outside of Exalytics, but you'll most probably need to use the TimesTen table compression feature only available within Exalytics if you want to store any significant volume of data in TimesTen. In fact, given the typical size of data warehouses including the BI Apps data warehouse, it's unlikely that you'll ever get your whole dataset into TimesTen as it has to store everything in-memory, so you've then got to choose either to:

- Just store aggregates in TimesTen, which is the route Oracle went down with Exalytics

- Only try this with data marts or other small data warehouses

- Put just the "hot" data from your data warehouse into TimesTen - for example, the last couple of years' data across the data warehouse, or a particular fact table and its dimensions

It's this third approach that the new DAC11g is most suited to, with functionality to mark one or more BI Apps DW tables as "in-memory", then replicate them into TimesTen using an ETL engine actually built-in to the DAC, so there's no need to add new Informatica routines or otherwise customise your main load routine. It does, however, come with some significant limitations, some of which you can work around but others you'd need to just live with. Let's go through what's involved though, using BI Apps 7.9.6.4 and DAC 11g as currently downloadable from OTN.

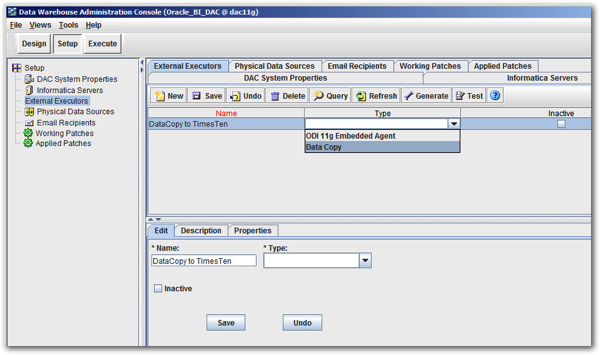

DAC 11g has a number of new concepts within this release, and one we're interested in for this example is the "external executor". What this allows is the DAC to use ETL engines other than Informatica to do work for it, so you could use ODI, for example, to do some ELT-centric work in support of a wider Informatica load if that made more sense. Another type of external executor that you can register though is called "Data Copy", and this is a special additional data movement feature that's actually built-in to DAC 11g and uses parallel Java processes to copy data from one table to another. The TimesTen replication feature in DAC 11g uses this to move data from the BI Apps data warehouse into TimesTen tables, so let's register this Data Copy external executor before we do anything else, by switching to the Setup view within the DAC console and creating a new entry within the External Executors section.

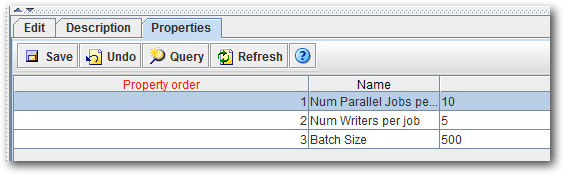

Notice the ODI 11g Embedded Agent option that's also there; for now, though, I give the external executor a name, press the Generate button to create the parameters for this particular external executor type, then review the default settings for the parallel data load engine. DAC 11g executes the data load using multiple Java JDBC calls that take subsets of the source table's data and copy them into the target table, and you'll most probably need to fiddle around with these settings for your own environment, to get the best mix of concurrent data load vs. overall capacity vs. avoiding TimesTen table locks.
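To make the idea of copying subsets of a table in parallel a bit more concrete, here's a minimal sketch, written in Python rather than the DAC's own Java. It isn't how the DAC is actually implemented: the `split_ranges` and `copy_in_parallel` names, the worker count and the plain lists standing in for the source and target tables are all assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_ranges(total_rows, num_chunks):
    """Split row positions 0..total_rows into contiguous (start, end) ranges."""
    if num_chunks < 1:
        raise ValueError("num_chunks must be at least 1")
    base, extra = divmod(total_rows, num_chunks)
    ranges = []
    start = 0
    for i in range(num_chunks):
        # The first `extra` chunks take one additional row each.
        size = base + (1 if i < extra else 0)
        if size == 0:
            continue  # fewer rows than chunks: skip empty ranges
        ranges.append((start, start + size))
        start += size
    return ranges


def copy_in_parallel(source_rows, num_workers):
    """Copy source_rows into a new list, one worker task per row range."""
    ranges = split_ranges(len(source_rows), num_workers)
    target = [None] * len(source_rows)

    def copy_chunk(bounds):
        start, end = bounds
        # Hypothetical stand-in: a real copier would SELECT this range from
        # the source and INSERT it into the target over its own connection.
        target[start:end] = source_rows[start:end]
        return end - start

    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        copied = sum(pool.map(copy_chunk, ranges))
    return target, copied


rows = list(range(162))
target, copied = copy_in_parallel(rows, num_workers=5)
```

The trade-off mentioned above shows up in `num_workers`: more workers means more concurrent loading, but in a real database it also means more sessions competing for the same target table.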

Now we can go and mark some tables as being "in-memory", ready for replicating them into TimesTen using this new feature.

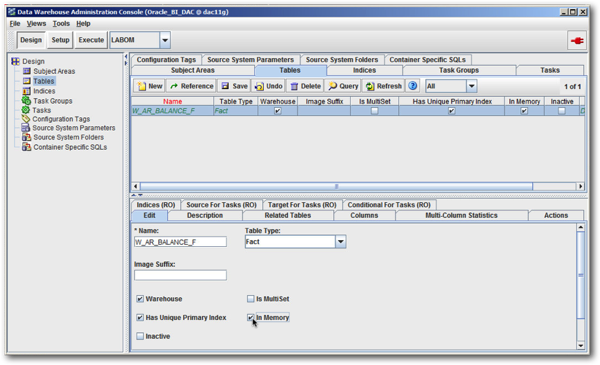

Space is of course at a premium with TimesTen, so it'd make sense to start off with a small source BI Apps DW table and try and replicate that, to get a sense of the space it takes up, and how that might extrapolate to the wider source data set. W_AR_BALANCE_F in my sample BI Apps data warehouse has just 62 rows in it, and it joins to a number of dimension tables, one of which (W_PROFIT_CENTER_D) has just 162 rows of data in it. So let's start with these two, and see where things go.
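The extrapolation itself can be simple arithmetic: take the measured size of the small table, work out bytes per row, and scale up to the row count of a bigger table. Here's a rough sketch of that calculation; the `estimate_bytes` function and all of the figures in it are made up for illustration, and it ignores indexes, fixed per-table overhead and any compression, so treat the result as a ballpark only.

```python
def estimate_bytes(sample_rows, sample_bytes, target_rows):
    """Scale the measured size of a small table linearly to a larger row count."""
    if sample_rows <= 0:
        raise ValueError("sample_rows must be positive")
    bytes_per_row = sample_bytes / sample_rows
    return int(bytes_per_row * target_rows)


# Hypothetical figures: a 62-row table measured at 31,000 bytes in memory,
# extrapolated to a 10-million-row fact table with similar columns.
estimate = estimate_bytes(sample_rows=62, sample_bytes=31_000, target_rows=10_000_000)
print(f"{estimate / 1024 ** 3:.2f} GiB")
```

With a table as small as 62 rows, fixed overheads will make up a large share of the measured size, so a bigger sample table would give a more trustworthy per-row figure.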

To select tables for replicating into TimesTen, switch to the Design view, then use the Tables tab to locate the tables, and check the In Memory checkbox, like this:

I then do the same for the W_PROFIT_CENTER_D table, with the DAC Console creating copies of the table metadata in the new source system container I'd set up prior to this exercise.

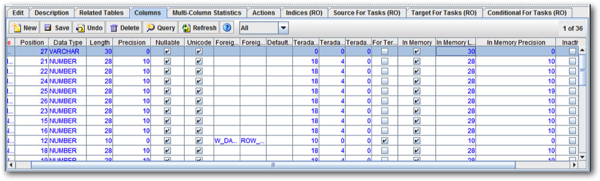

For each of these tables, I now also need to define the datatype lengths and precisions of each column. To do this, with each of the two tables selected, click on the Columns tab and then navigate to the In Memory Length and In-Memory Precision columns, and either copy across the default values from each column, or use a utility such as ttimportfromOracle (if your data warehouse source is the Oracle Database) to first generate optimal datatype lengths, then copy those settings into the in-memory column definitions.
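As a rough illustration of what "optimal" lengths might mean, the sketch below finds the longest value actually stored in each column of a sample of rows. This is only an assumption about the general idea, not a description of what ttimportfromOracle does internally, and the `observed_lengths` function, the column names and the sample data are all hypothetical.

```python
def observed_lengths(rows, columns):
    """Return the longest string length seen per column, ignoring NULLs."""
    lengths = {column: 0 for column in columns}
    for row in rows:
        for column, value in zip(columns, row):
            if value is not None:
                lengths[column] = max(lengths[column], len(str(value)))
    return lengths


# Hypothetical sample of two character columns from a dimension table.
sample = [
    ("PC-1001", "Northern Region"),
    ("PC-1002", None),
    ("PC-20417", "EMEA"),
]
lengths = observed_lengths(sample, ["PROFIT_CENTER_NUM", "PROFIT_CENTER_NAME"])
```

Lengths sized exactly to today's data can truncate or reject tomorrow's, so in practice you'd want to leave some headroom over whatever figures come out.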

Now that the TimesTen tables are defined in the DAC repository, I need to set up a connection from the DAC to the TimesTen server. To do so, I perform the following steps:

1. Ensure TimesTen 11.1.2.2.4+ (ideally 11.1.2.2.5+) is installed, either with the server on the machine running OBIA, or with the server installed somewhere else and the client installed on the OBIA server.

2. Copy across the TimesTen library files from $TIMESTEN_HOME/lib to $DAC_HOME/dac/lib - I just copied and pasted all of the .jar and .lib files from the TimesTen /lib directory into the DAC one.

3. If you're running the DAC server on a 64-bit machine, and you've got 64-bit TimesTen installed, alter the DAC config file so that it points to a 64-bit JVM rather than the default 32-bit one that gets installed with the DAC client - I pointed it to the one that ships with 64-bit OBIEE 11.1.1.7, as also installed on this server.

4. Create the required TimesTen client ODBC DSN on your OBIA server, and the TimesTen Data Manager server DSN if you've got both TimesTen server and client on your OBIA machine.

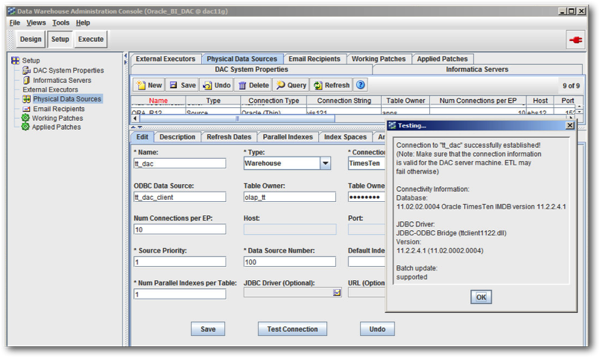

5. Go into the DAC client, select Setup, then the Physical Data Sources tab, then create a new data source that points through to the TimesTen Client ODBC DSN

At the end of these steps you should have an additional physical datasource connection registered in the DAC console, that successfully connects through to your TimesTen database, like this:

Now you need to create a logical data source that we'll use later to point to this physical connection. To do so, from the DAC Client select Tools > Seed Data > Logical Data Sources, then create a new logical data source called DBConnection_TimesTen.

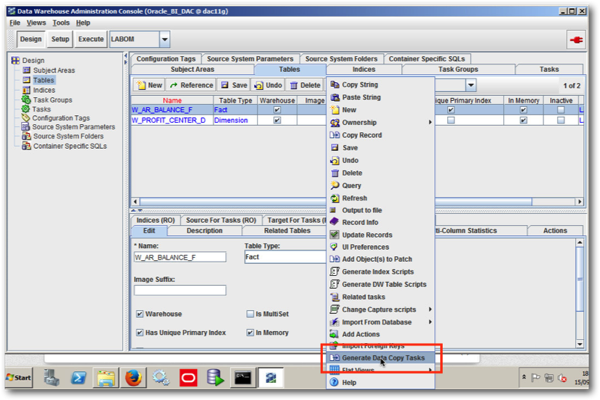

To create the data copy tasks, I do the following:

1. Switch to the DAC Client Design view, select the Tables tab, press Query, check the In Memory checkbox, then press Go. The list of tables marked as in-memory, to be replicated into TimesTen, is then displayed. In this case, it's the W_AR_BALANCE_F table and the W_PROFIT_CENTER_D one

2. Right-click anywhere in this list of tables, and select Generate Data Copy Tasks. When prompted, select the All Records in the List radio button.

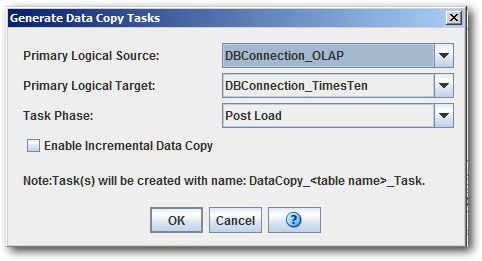

3. When the Generate Data Copy Tasks dialog is shown, select the following values:

Primary Logical Source: DBConnection_OLAP

Primary Logical Target: DBConnection_TimesTen

Task Phase: Post Load

Note that the Task Phase can be changed to suit where you want the data copying to happen in a real-life project, and that you can check the Enable Incremental Data Copy checkbox if you want to update just the new and changed data in your TimesTen tables - see the online docs on this feature for more details. Once done, press OK to have the DAC Client create the data copy tasks for you.

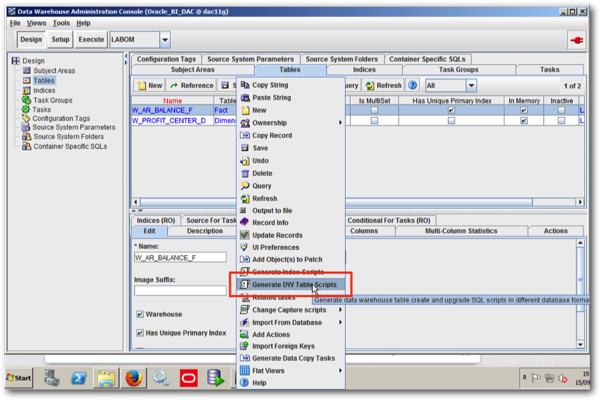
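To give a feel for what updating "just the new and changed data" involves in general, here's a minimal sketch of an incremental copy. It's not the DAC's implementation: it assumes each row carries a last-updated timestamp and a unique key (I've used W_UPDATE_DT and ROW_WID as the column names), and it uses SQLite in-memory databases and a made-up DEMO_F table as stand-ins for the data warehouse and TimesTen.

```python
import sqlite3


def incremental_copy(source, target, table, last_refresh):
    """Upsert rows changed since last_refresh from source into target."""
    # The table name is interpolated directly, so it must come from a trusted list.
    changed = source.execute(
        f"SELECT ROW_WID, BALANCE, W_UPDATE_DT FROM {table} WHERE W_UPDATE_DT > ?",
        (last_refresh,),
    ).fetchall()
    # INSERT OR REPLACE is SQLite syntax: new keys are inserted, existing ones replaced.
    target.executemany(
        f"INSERT OR REPLACE INTO {table} (ROW_WID, BALANCE, W_UPDATE_DT) VALUES (?, ?, ?)",
        changed,
    )
    target.commit()
    return len(changed)


def make_db(rows):
    """Create an in-memory stand-in table; the table and columns are illustrative."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE DEMO_F (ROW_WID INTEGER PRIMARY KEY, BALANCE REAL, W_UPDATE_DT TEXT)"
    )
    conn.executemany("INSERT INTO DEMO_F VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


source = make_db([(1, 100.0, "2013-01-01"), (2, 250.0, "2013-03-15"), (3, 75.0, "2013-03-20")])
target = make_db([(1, 100.0, "2013-01-01"), (2, 200.0, "2013-02-01")])
copied = incremental_copy(source, target, "DEMO_F", last_refresh="2013-03-01")
```

Note that a timestamp-based approach like this one picks up inserts and updates but not rows deleted from the source, which would need handling separately.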

You'll also need to create the corresponding TimesTen tables, a task you can also do from the DAC Client. With the same set of In Memory-flagged tables displayed, this time select the Generate DW Table Scripts option, like this:

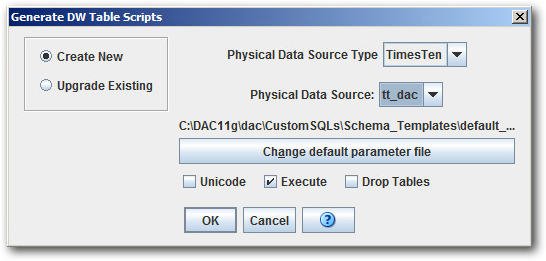

When the Generate DW Table Scripts dialog is shown, select TimesTen as the Physical Data Source Type, check the Execute checkbox, and then select the tt_dac option for the Physical Data Source, like this:

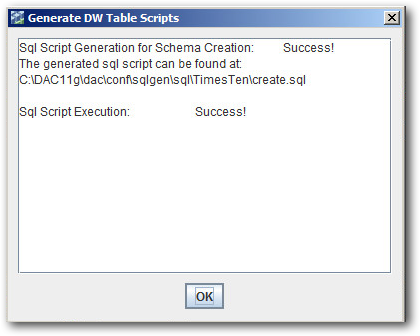

The DAC Client will now generate the TimesTen table DDL scripts and execute them, to create the tables to hold your replicated DW data. Assuming all is well, the Generate DW Table Scripts dialog should show a success message like this:

You should then repeat this to create the DW Index scripts, which the DAC Client will base on the indexes on the original BI Apps DW tables. As I talked about in my blog post on indexing TimesTen tables a few weeks ago, you should follow up this replication process with the use of the TimesTen Index Advisor, which generates further index recommendations based on the actual query workload on your system, and can dramatically improve the performance of queries against TimesTen tables compared to what you'd get with the default DAC-generated indexes.

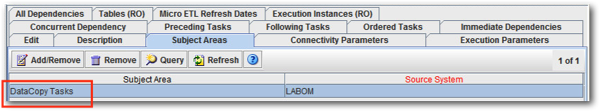

Now back to the data copy tasks. These new tasks now need to be added to an existing, or a new, subject area so that you can then include them in an execution plan. In this instance, I'm going to create a new subject area for the tasks, then add the new tasks to this subject area - in reality, you might choose to include these tasks in with your existing DAC subject areas, so that the TimesTen replication happens as the rest of the load takes place, but in my example I'm going to keep this replication separate from my main data load to keep things simple. To create this subject area and add the data copy tasks to it, I do the following:

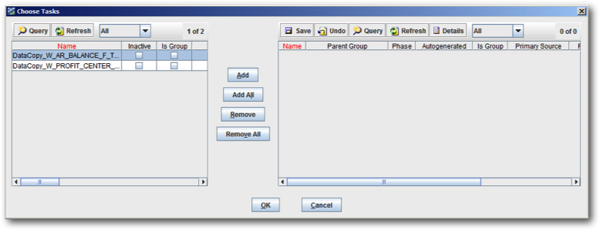

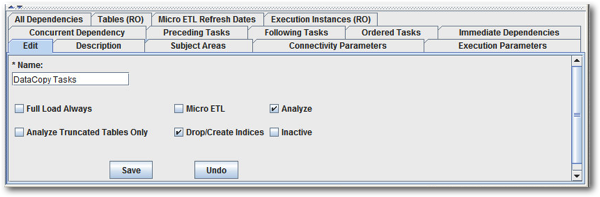

1. From the DAC Client, select the Design view, then the Subject Areas tab. Press New to create a new subject area and call it Data Copy Tasks, then press Save.

2. With the subject area still open for editing, select the Tasks tab, press the Add / Remove button, then type in "DataCopy*" into the Name field and press Go. The two data copy tasks should then be displayed. Press the Add All button to add these tasks to the right-hand list of selected tasks, then press OK.

Now it's a case of either adding this subject area to an existing DAC execution plan or creating a new execution plan just for this data copy subject area. I'm going to do the latter.

1. Switch to the Execute view, select the Execution Plans tab, then press New, and call the new execution plan DataCopy Tasks, and press Save.

2. Now with that execution plan still open for editing, switch to the Subject Areas tab and use the Add / Remove button to add the new subject area you just created to this execution plan.

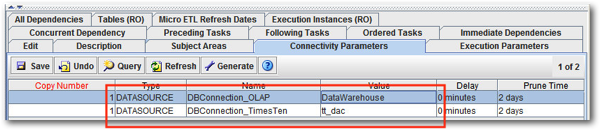

3. Now switch to the Connectivity Parameters tab and press the Generate button. Once the parameter generation process completes, select the following values for the two parameters:

DBConnection_OLAP: DataWarehouse

DBConnection_TimesTen: tt_dac

This connects the tasks' logical data source connections to the physical ones, including the physical TimesTen database connection I created earlier in the process.

4. Finally, for this stage, press the Build button above the list of execution plans with this one still selected, to create the list of ordered tasks for this new execution plan.

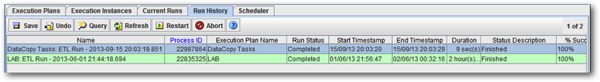
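The connectivity parameters set in step 3 are really just a lookup from the logical names the tasks refer to, onto the physical connections registered in the DAC. Sketched as a small lookup, using the values chosen in this walkthrough, it looks something like the following; the `resolve` function and the task name are hypothetical, and none of this is the DAC's own code.

```python
# Logical names are what tasks reference; physical names are the registered
# connections. The values mirror the ones selected in this walkthrough.
CONNECTIVITY_PARAMETERS = {
    "DBConnection_OLAP": "DataWarehouse",
    "DBConnection_TimesTen": "tt_dac",
}


def resolve(task, parameters):
    """Return the (source, target) physical data sources for a task."""
    try:
        return parameters[task["source"]], parameters[task["target"]]
    except KeyError as missing:
        raise LookupError(f"No physical data source mapped for {missing}") from None


data_copy_task = {
    "name": "DataCopy_W_AR_BALANCE_F",  # hypothetical task name
    "source": "DBConnection_OLAP",
    "target": "DBConnection_TimesTen",
}
physical_source, physical_target = resolve(data_copy_task, CONNECTIVITY_PARAMETERS)
```

The benefit of the indirection is that the same tasks can be pointed at a different TimesTen database just by changing the execution plan's parameters, without touching the tasks themselves.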

Now we're ready to run the data copy process, and replicate these two DW tables into the TimesTen database. To do so, again within the Execute view and with the new execution plan selected, press Run. Once the execution plan has completed, you should see it listed under the Run History tab, hopefully with a success message next to it.

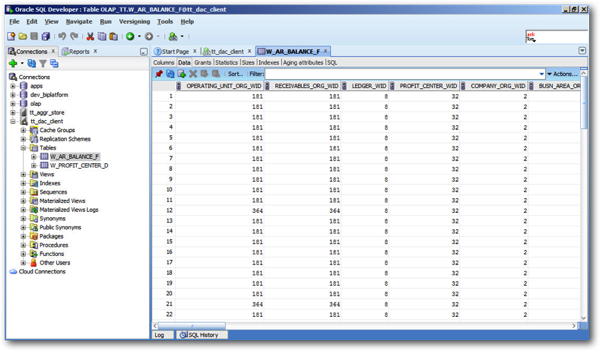

Finally, if you move over to SQL Developer and create a connection through to the TimesTen database, you should be able to see the replicated data in these two tables.
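If you'd rather check the result with a script than by eye, comparing row counts between source and target is a quick sanity test. The sketch below works with any Python DB-API connection; the `row_counts` and `find_mismatches` helpers are my own names, and the SQLite in-memory database is only a stand-in for a real connection to the TimesTen DSN.

```python
import sqlite3


def row_counts(conn, tables):
    """Return {table: row count} using any DB-API 2.0 connection."""
    counts = {}
    for table in tables:
        cursor = conn.cursor()
        # Table names are interpolated directly, so pass only trusted names.
        cursor.execute(f"SELECT COUNT(*) FROM {table}")
        counts[table] = cursor.fetchone()[0]
        cursor.close()
    return counts


def find_mismatches(source_counts, target_counts):
    """List tables whose row counts differ between source and target."""
    return sorted(
        table
        for table in source_counts
        if source_counts[table] != target_counts.get(table)
    )


# Stand-in database populated with the row counts from this walkthrough.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE W_AR_BALANCE_F (ROW_WID INTEGER)")
demo.executemany("INSERT INTO W_AR_BALANCE_F VALUES (?)", [(i,) for i in range(62)])
demo.execute("CREATE TABLE W_PROFIT_CENTER_D (ROW_WID INTEGER)")
demo.executemany("INSERT INTO W_PROFIT_CENTER_D VALUES (?)", [(i,) for i in range(162)])
counts = row_counts(demo, ["W_AR_BALANCE_F", "W_PROFIT_CENTER_D"])
```

Matching counts don't prove the data is identical, but a mismatch is a cheap early warning that a copy task skipped or duplicated rows.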

So there you have it - replication of BI Apps DW data into equivalent TimesTen tables, all handled by the DAC and its internal JDBC-based ETL engine. But … what happens next, and how do you get these tables into the BI Apps repository and make them available to the BI Apps dashboards, so that they can be used in-place of the regular Oracle tables to speed up queries? Well - you'll have to wait for the follow up…. ;-)