Connecting OBIEE 11.1.1.7 to Cloudera Impala

A few months ago I posted a series of articles about connecting OBIEE 11.1.1.7, Exalytics and ODI to Apache Hadoop through Hive, an SQL-interface layer for Hadoop. Hadoop/Hive connectivity is a cool new feature in OBIEE 11g but suffers from the problem common to Hive - it’s actually quite slow, particularly when you’re used to split-second response times from your BI tool. The reason it’s slow is because Hive, in the background, generates MapReduce jobs which in-turn query Hadoop, batch processing-style, for each of your queries; each one of these MapReduce jobs requires a Java program to be written, and then submitted to the Hadoop job control framework and run within a Java JVM, which adds latency to your query. It’s not uncommon for a Hive query to take a minute or so to retrieve even a small set of “rows” from the Hadoop cluster, which isn’t really what it’s for - Hive and Hadoop are typically used with very large datasets spread over multiple servers - but you can’t help thinking there must be a better way to query Hadoop data.

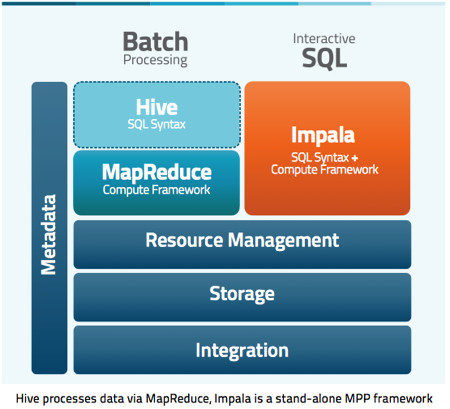

And there is a better way - several organisations and companies have come up with improvements to Hive, the idea being to take the central idea of SQL-access-over-Hadoop, but remove the dependency on writing MapReduce jobs on the fly, and instead create separate, typically in-memory, server processes that provide similar functionality but with much improved response times. Probably the most well-known product like this is Cloudera’s “Impala”, an open-source but commercially-sponsored Hive replacement that’s available as part of Cloudera’s “Quickstart” demo VM, downloadable for free from the Cloudera website. The architecture image below is from the Cloudera Impala datasheet, which nicely explains the differences between Hive and Impala, and where it fits in as part of the overall Hadoop framework.

Now whilst Impala isn’t officially supported by Oracle as a data source, Hive is, so I thought it’d be interesting to see if we could swap-out Hive for Impala and connect more efficiently to a Hadoop datasource. I managed to get it working, with a couple of workarounds, so I thought I’d share it here - note that in a real-world installation, where the server is on Linux/Unix, or where your server isn't on the same machine as your BI Administration client, it's a bit more involved as you'll need both server-side and client-side ODBC driver install and configuration.

Keeping it simple for now though, to get this working you’ll need:

- OBIEE 11.1.1.7, for either Windows or Linux - in my case, I’ve used Windows. Oracle’s recommendation is you use Linux for Hadoop access but Windows seems to work OK.

- The Cloudera Quickstart CDH4 VM - you’ll need to add some data to Impala, I’ll leave this to you - if this article makes sense to you, I’m sure you can add some sample data ;-)

- The Cloudera ODBC Driver for Impala - the Windows 64-bit ones are here, and the rest of the drivers are on this page.

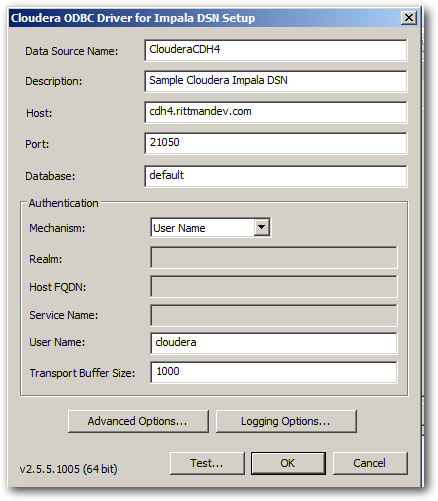

Once you’ve downloaded the Quickstart VM and got Impala up and running, and set up OBIEE 11.1.1.7 on a separate server, start by installing the ODBC drivers so you’re ready to configure them. In my case, my Impala tables were held in the standard “default” schema, and my Quickstart VM was running on the hostname cdh4.rittmandev.com, so my ODBC configuration settings looked like this:

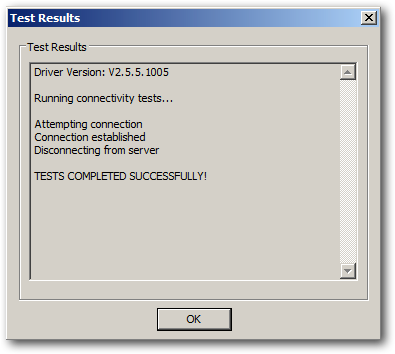

“cloudera” is the default username on the Quickstart VM, with a password also of “cloudera”, so when I press the Test… button and put in the password, I see the success message:

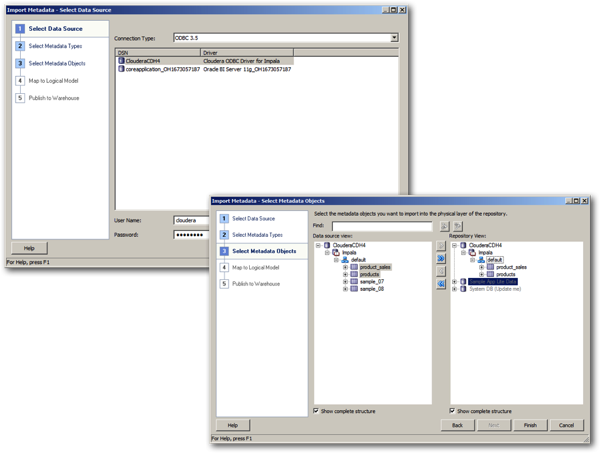

So far so good. So now over to the BI Administration tool, where the process to import the Impala table metadata is the same as with Hive. First, select the new Impala DSN from the list of ODBC connections, then bring in the Impala tables that you want to include in the RPD - in this case, two tables called “product” and “product_sales” that I added myself to Impala.

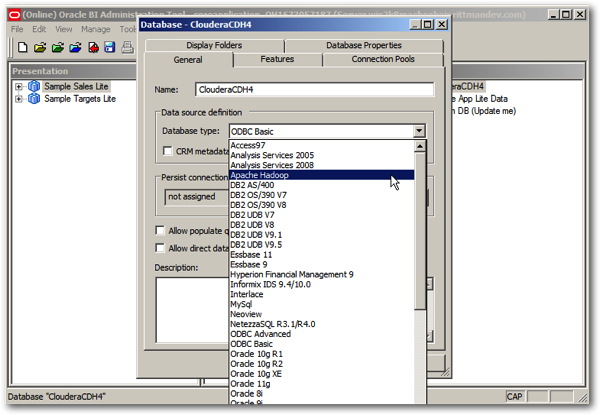

Next, double-click on the new physical database entry that the import just created, and set the Database type from ODBC Basic to Apache Hadoop, like this:

When you’re prompted to change the connection pool settings as well - ignore this and press No, and leave them as they are.

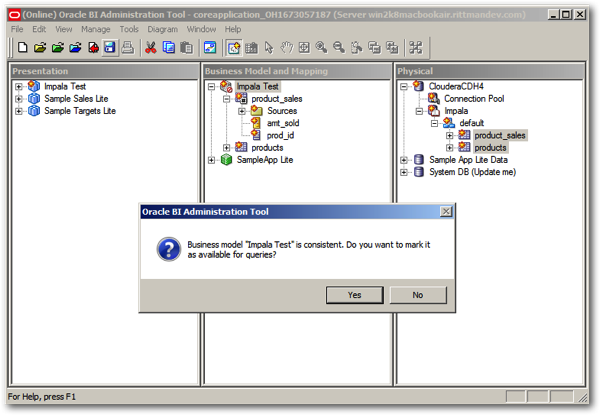

Then, create your business model and presentation layer subject area as you would do normally - in my case, I add a primary key to the products table, join it in the physical layer to the product_sales fact table, and then create corresponding BMM and Presentation Layer models so that it’s then ready to report on.

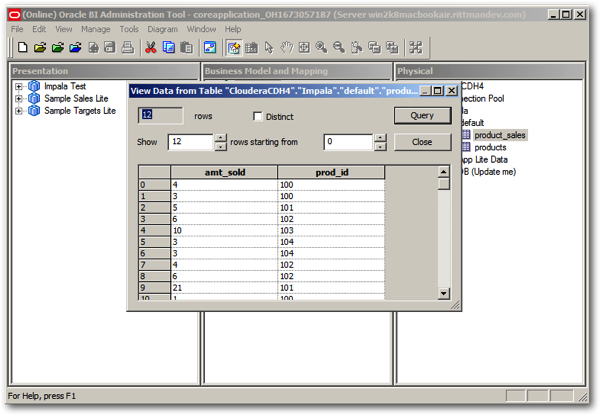

Running a quick test on the datasource, displaying some sample rows from the Impala tables, indicates it’s working OK.

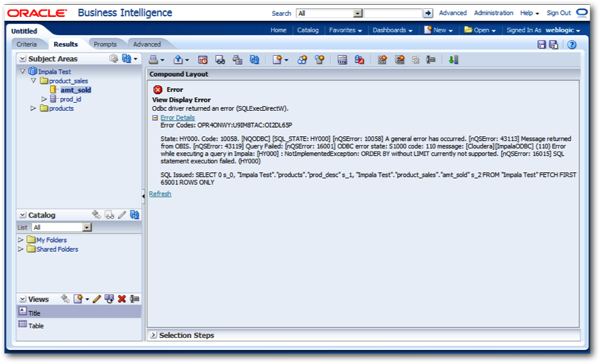

So over to the dashboard. I run a simple query that sums up sales by product, and … it doesn’t work.

If you take a look at the logical and physical SQL that the BI Server is generating for the query, it all looks OK ...

]]

[2013-11-11T19:20:17.000+00:00] [OracleBIServerComponent] [TRACE:2] [USER-0] [] [ecid: ea4e409c7d956b38:-112518e2:14248823f42:-8000-00000000000001b4] [tid: c] [requestid: 60460014] [sessionid: 60460000] [username: weblogic] ############################################## [[

-------------------- SQL Request, logical request hash:

2f2b87c8

SET VARIABLE QUERY_SRC_CD='Report';SELECT

0 s_0,

"Impala Sample"."products"."prod_desc" s_1,

"Impala Sample"."products"."prod_id" s_2,

"Impala Sample"."product_sales"."amt_sold" s_3

FROM "Impala Sample"

ORDER BY 1, 3 ASC NULLS LAST, 2 ASC NULLS LAST

FETCH FIRST 65001 ROWS ONLY

]]

[2013-11-11T19:20:17.000+00:00] [OracleBIServerComponent] [TRACE:2] [USER-23] [] [ecid: ea4e409c7d956b38:-112518e2:14248823f42:-8000-00000000000001b4] [tid: c] [requestid: 60460014] [sessionid: 60460000] [username: weblogic] -------------------- General Query Info: [[

Repository: Star, Subject Area: Impala Sample, Presentation: Impala Sample

]]

[2013-11-11T19:20:17.000+00:00] [OracleBIServerComponent] [TRACE:2] [USER-18] [] [ecid: ea4e409c7d956b38:-112518e2:14248823f42:-8000-00000000000001b4] [tid: c] [requestid: 60460014] [sessionid: 60460000] [username: weblogic] -------------------- Sending query to database named ClouderaCDH4 (id: <<10894>>), connection pool named Connection Pool, logical request hash 2f2b87c8, physical request hash ee7aff05: [[

select 0 as c1,

D1.c2 as c2,

D1.c3 as c3,

D1.c1 as c4

from

(select sum(T43766.amt_sold) as c1,

T43769.prod_desc as c2,

T43769.prod_id as c3

from

products T43769 inner join

product_sales T43766 On (T43766.prod_id = T43769.prod_id)

group by T43769.prod_id, T43769.prod_desc

) D1

order by c3, c2

]]

[2013-11-11T19:20:17.000+00:00] [OracleBIServerComponent] [TRACE:2] [USER-34] [] [ecid: ea4e409c7d956b38:-112518e2:14248823f42:-8000-00000000000001b4] [tid: c] [requestid: 60460014] [sessionid: 60460000] [username: weblogic] -------------------- Query Status: [nQSError: 16015] SQL statement execution failed. [[

[nQSError: 16001] ODBC error state: S1000 code: 110 message: [Cloudera][ImpalaODBC] (110) Error while executing a query in Impala: [HY000] : NotImplementedException: ORDER BY without LIMIT currently not supported.

[nQSError: 43119] Query Failed:

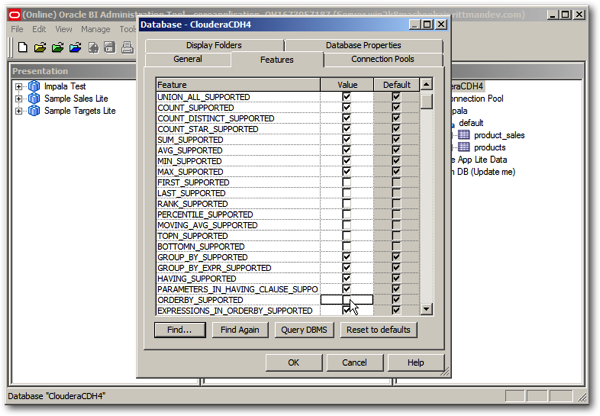

But the problem is that Impala doesn’t like ORDER BY clauses without a corresponding LIMIT clause, something the product insists on presumably because of the potential size of Impala/Hadoop datasets and the need to contain the rows returned in-memory. If you’re running Impala queries directly within the Impala shell, you can issue the command set DEFAULT_ORDER_BY_LIMIT = x; to provide a default LIMIT value when one isn’t specified, but I can’t as yet see how to provide that setting over an ODBC connection, so the workaround I used was to uncheck the ORDERBY_SUPPORTED database setting in the physical database properties dialog, so that the BI Server leaves-off the ORDER BY clause entirely, and does the result ordering itself after Impala returns the (now un-ordered) result set.

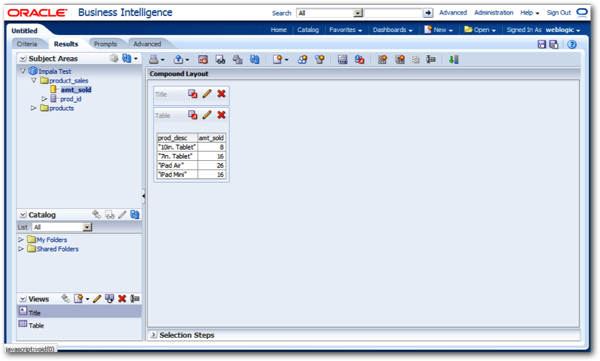

Saving the RPD again now, and refreshing the results, brings back the query as expected - and in under a second or so, rather than the 30, 60 seconds etc that Hive would have taken.

So - there you go. As I said, Impala’s not officially supported which means it may work, but Oracle haven’t tested it properly and you won’t be able to raise SRs etc - but it’s an interesting alternative to Hive if you’re serious about connecting OBIEE 11g to your Hadoop datasources.