Creating a Multi-Node Hadoop/Impala Cluster as a Datasource for OBIEE 11.1.1.7

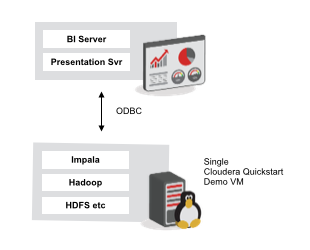

The other day I posted an article on the blog about connecting OBIEE 11.1.1.7 to Cloudera Impala, a new “in-memory” SQL engine for Hadoop that’s much faster than Hive for interactive queries. In that example, I connected OBIEE 11.1.1.7 to the Cloudera Quickstart CDH4 VM, which comes with all the Hadoop and Cloudera tools pre-installed and configured, making it easy to get going with the Hadoop platform.

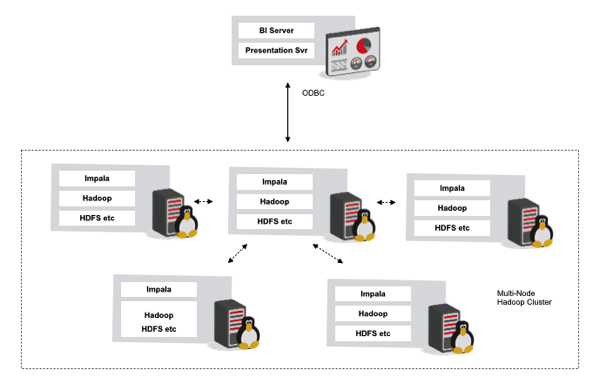

Whilst the example worked, though, I couldn’t help thinking that using Impala against a single-node Hadoop install isn’t really how it’d be used in real life; in reality, if you used OBIEE in this way, you’d be much more likely to be connecting to a full Hadoop cluster, with multiple server nodes handling the incoming queries and potentially gigabytes, terabytes or petabytes of data being processed. So is it possible to set up a Hadoop cluster that gets a bit nearer to this multi-node architecture, so we can practice connecting to a cluster rather than a single server, and see Hadoop process our queries across all of the nodes - as we’d see in real life, given that this low-cost MPP processing is the key benefit of Hadoop as a whole?

Hadoop, as you’re probably aware, was designed from the ground up to run across multiple nodes, with those nodes typically either being small, low-cost servers, or in many cases servers running in the “cloud”. As such, you’re as likely to see Hadoop running on a cluster of Amazon EC2 servers as running on physical servers in a datacenter, and in most cases the underlying OS running on those servers is Linux - most usually, Ubuntu 64-bit. So if we want to set up our own Hadoop cluster, there are a few options open to us:

1. Get hold of a bunch of physical servers (maybe, old PCs or blade servers), install Linux and Hadoop on them, and then do the configuration and setup manually.

2. Buy a preconfigured solution - Oracle’s Big Data Appliance, for example, which has all the software pre-installed along with connectivity to ODI, Oracle Database etc.

3. Spin-up a bunch of servers in the Cloud, or

4. Spin-up a bunch of Linux VMs, for example using VirtualBox or VMWare Fusion/Workstation

In the past I’ve done the Hadoop setup myself, manually, using the Hadoop distribution files available on the Hadoop website, but more recently vendors such as Hortonworks, MapR and Cloudera have put together their own Hadoop + added value tools distributions, and it’s Cloudera I’ve been looking at in most detail recently (if only because there’s a lot of ex-Oracle people there who I know, and it’s the bundled Hadoop distribution that comes with Oracle Big Data Appliance). What’s particularly good about Cloudera’s Hadoop offering is their “Cloudera Manager” utility - of which there’s a free version - and which simplifies the whole process of setting up a cluster by automating most of the process.

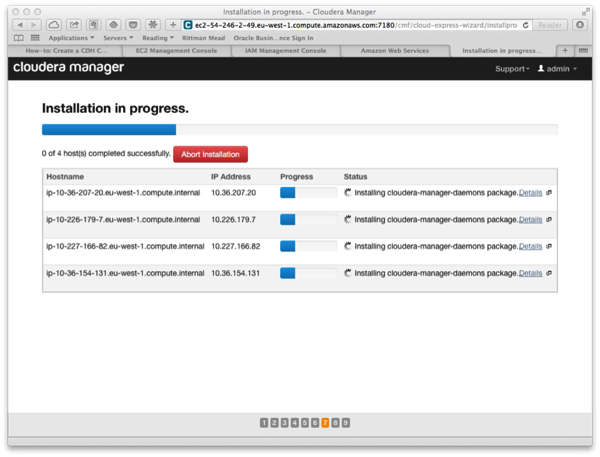

What’s also particularly interesting about Cloudera and Cloudera Manager is that there are a number of solutions available out there that automate the process of spinning up clusters. One of them, described in this blog post on Cloudera’s website, involves using a built-in feature in Cloudera Manager to automatically create, provision and configure X number of Amazon EC2 virtual servers, with these servers then able to handle your queries in parallel and you just paying by the hour for the compute resource you need. So let’s give it a try.

I won’t go into a tutorial or explanation of Amazon Web Services and their EC2 (“Elastic Compute Cloud”) service here; suffice to say that you can create on-demand cloud-based VMs, paying by the hour and with pricing based on the size of instance, amount of memory needed, OS needed and so forth. We use Amazon AWS and EC2 extensively within Rittman Mead for development work, training environments and so forth, and what’s particularly interesting about AWS is the fact that it’s all scriptable through a public API. It’s this public API that Cloudera Manager uses to provision and spin up the cluster VMs, something Cloudera Manager will automatically offer to do if it detects it’s running on Amazon EC2. Once you’ve provisioned the basic VMs, Cloudera Manager will automatically install the required Hadoop software on each of the VMs, meaning you can just sit back and watch the install, and then at the end, log in and check it’s all working.

And it did work - compared with the problems I’d had with earlier versions of Cloudera Manager, where I’d set up the servers and installed Linux and Cloudera Manager myself, it all went amazingly well - to the point where I could upload some data into Impala itself, run some queries, and make use of my cloud-based Hadoop cluster.

And for one-off tasks, particularly where you need a very large amount of compute resource for a relatively small amount of time, Amazon AWS is great, but the cost soon starts to mount up if you leave it running for too long - reckon on a cost of around $200-$400 for a reasonably high-specced instance for a month, multiplied by the number of servers in your cluster.
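To put a rough number on that, here's a minimal sketch of the arithmetic. The $200-$400 range is the ballpark quoted above; the six-node cluster size is purely an illustrative assumption, and the function name is my own.

```python
def monthly_cluster_cost(nodes, low_per_node=200, high_per_node=400):
    """Return the (low, high) monthly cost in dollars for `nodes` instances.

    The per-node defaults are the rough $200-$400 figure from the text,
    not a quote from Amazon's price list.
    """
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return nodes * low_per_node, nodes * high_per_node


# Hypothetical six-node cluster left running for a whole month.
low, high = monthly_cluster_cost(6)
print(f"6 nodes: ${low:,} to ${high:,} per month")
```

Run for a day or two the same cluster costs a small fraction of that, which is why EC2 suits short bursts of heavy processing better than an always-on sandbox.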

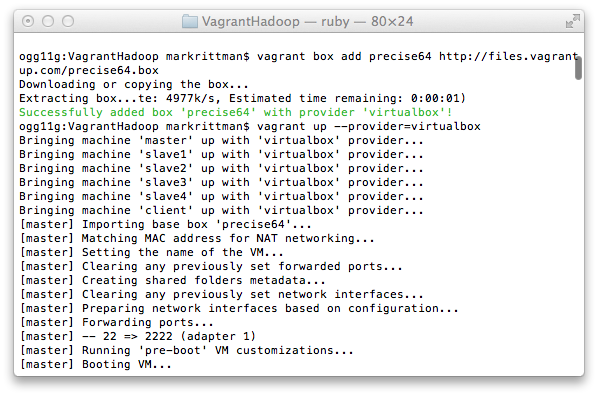

So what are the alternatives? Well, as I said before, you could set up a cluster yourself, installing the various bits of software, and potentially creating lots of VMs on a test server to host the Hadoop nodes. But another route you could take is to use one of the various “devops” tools out there to automate the build of a number of Hadoop nodes, using virtualisation tools such as VMWare or VirtualBox and a reasonably well-specced desktop or laptop. Back on the Cloudera website again, I saw a blog post and how-to along just these lines - one that used a devops tool called Vagrant to script and automate the build of the cluster, including setting up Ubuntu Linux on the VMs, and downloading and installing Cloudera Manager, just leaving the job of configuring the Hadoop cluster to us afterwards. This is the approach I went with in the end, and to do the same you’d need the following bits of kit and software:

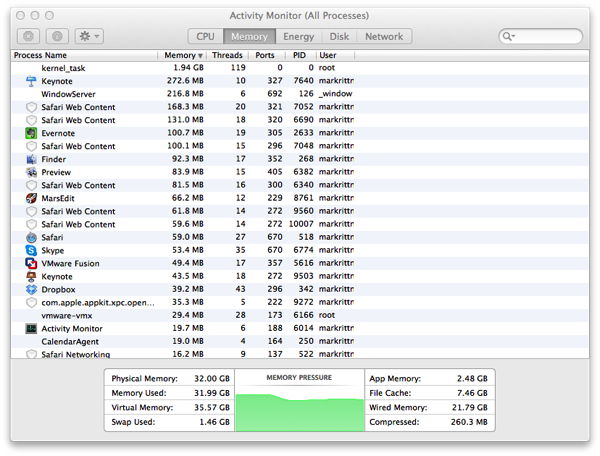

- A desktop or server with a lot of RAM - I’ve used my iMac which has 32GB RAM; you could use a 16GB server or laptop but you’ll need to alter the Vagrant scripts to reflect this

- Either VirtualBox (supported by default by Vagrant) or VMWare Fusion / Workstation (which require an extra-cost plugin for Vagrant)

- Vagrant itself - a free download with install instructions here

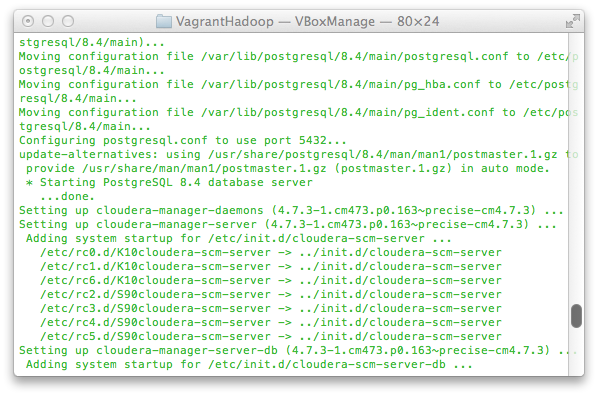

Vagrant itself is an easy install and is available for OS X, Windows, Linux etc, and you can download the Vagrant configuration file for setting everything up from the Cloudera blog post. Then, it was a case of running the Vagrant script, and watching it create my VMs.

In the terminal screenshot above, you can see the VMs being created (I started off using VirtualBox, later on I switched to VMWare Fusion), and in the screenshot below, you can see the various Cloudera packages being downloaded and installed.

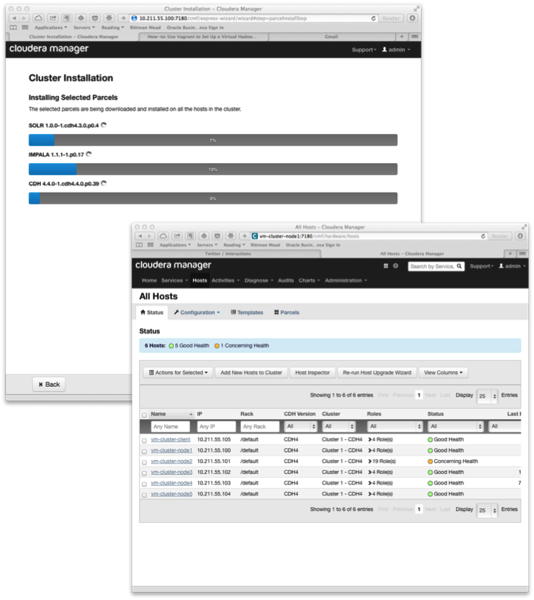

Once the Vagrant set-up of the VMs was complete, I then logged into the Cloudera Manager website, and similar to how I’d done it with the EC2-based install, I just selected the other VMs to configure, chose the software components, and let the configuration complete.

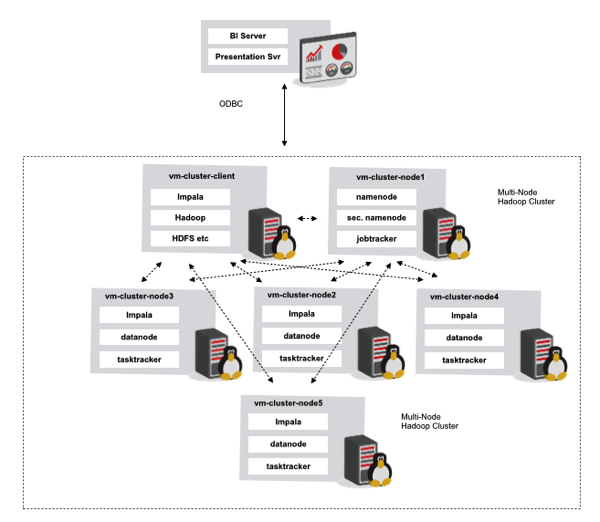

At the end of the install process, I had six VMs running to provide my Hadoop cluster, each one using about 4GB of memory, and playing the following roles:

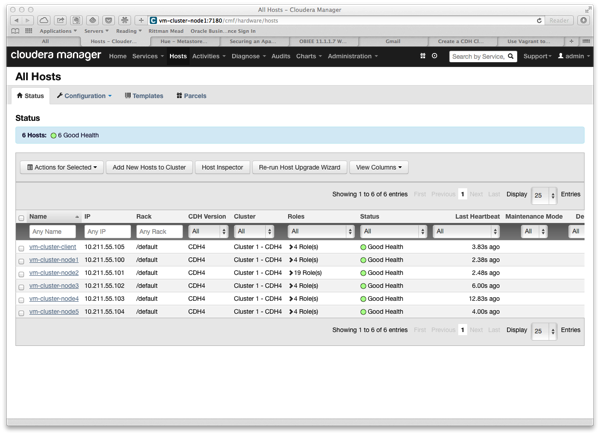

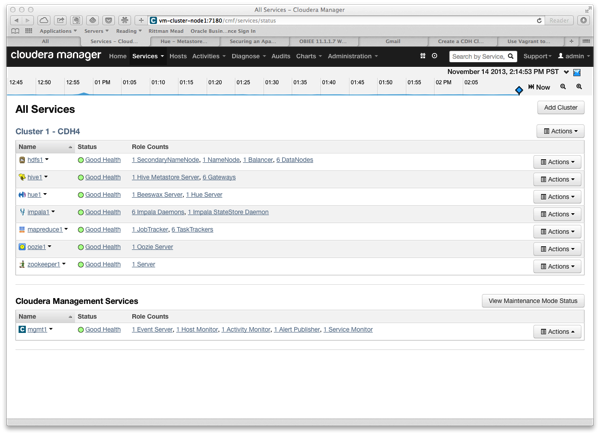

To be honest - you still need to know a bit about Hadoop, what the various bits do and so on to get it working - but then you also do to get Oracle installed, SQL Server, and so on. I guess the real barrier is having a machine big enough to run multiple Hadoop server nodes - too few and you don’t really see how the query processing works - so I guess this is why the cloud / EC2 route is so popular. But for me, I’ve got the six nodes working now, along with an OBIEE Windows VM with 11.1.1.7 installed to test out the connectivity. The screenshot below shows Cloudera Manager listing out the nodes in the cluster:

whilst the screenshot below this shows the various Hadoop platform elements listed out alongside the cluster nodes (a.k.a. VirtualBox/VMWare VMs) they’re running on.

and with OS X’s Activity Monitor showing they’re (just about) comfortably running within the overall 32GB RAM in the iMac.

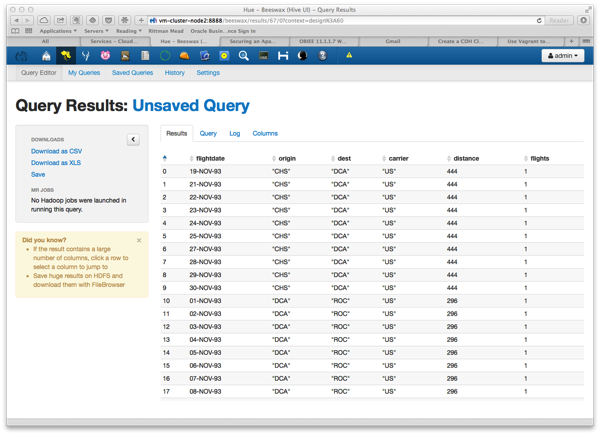
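If you're wondering whether your own machine is big enough, the memory budget is simple to sketch. The 32GB host, six VMs and roughly 4GB per VM are the figures from this setup; the 4GB host reserve in the second call is an assumption of mine, and neither function accounts for any other VMs you might run alongside the cluster.

```python
def memory_headroom_gb(host_ram_gb, vm_count, ram_per_vm_gb):
    """Return the RAM left over for the host once every VM has its allocation."""
    return host_ram_gb - vm_count * ram_per_vm_gb


def max_vms(host_ram_gb, ram_per_vm_gb, host_reserve_gb):
    """Return how many VMs of the given size fit while keeping a reserve for the host."""
    usable = host_ram_gb - host_reserve_gb
    return max(usable // ram_per_vm_gb, 0)


# The setup described here: a 32GB iMac running six VMs of about 4GB each.
print(memory_headroom_gb(32, 6, 4))

# A 16GB machine, assuming (hypothetically) 4GB is held back for the host OS.
print(max_vms(16, 4, 4))
```

On a smaller machine the options are fewer nodes or less memory per node, which is the kind of change you'd then make in the Vagrant scripts.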

So - the moment of truth - let’s try out some queries. I’ll start with Hive first of all, as Hue (Hadoop’s web-based UI) has some nice tools for uploading files and creating Hive tables out of them - or of course you can use ODI and its Hadoop Adapter and upload some data to the cluster as part of an ETL process. To use a more meaningfully large dataset, I unloaded some of the tables from the full Airline Delays dataset to CSV files (around 180m rows of flight leg data), and then created Hive tables out of those - the screenshot below shows data from the main flight leg fact table.

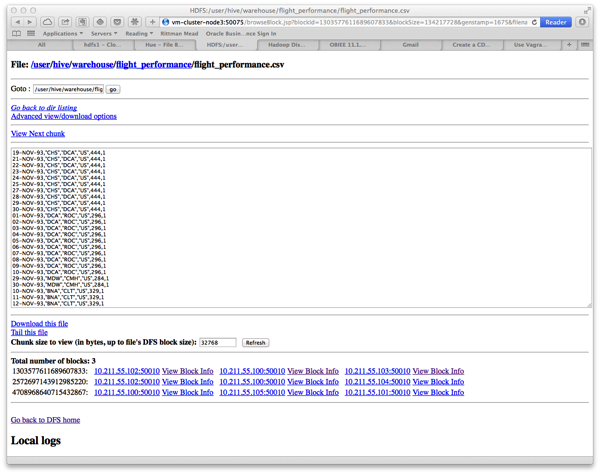

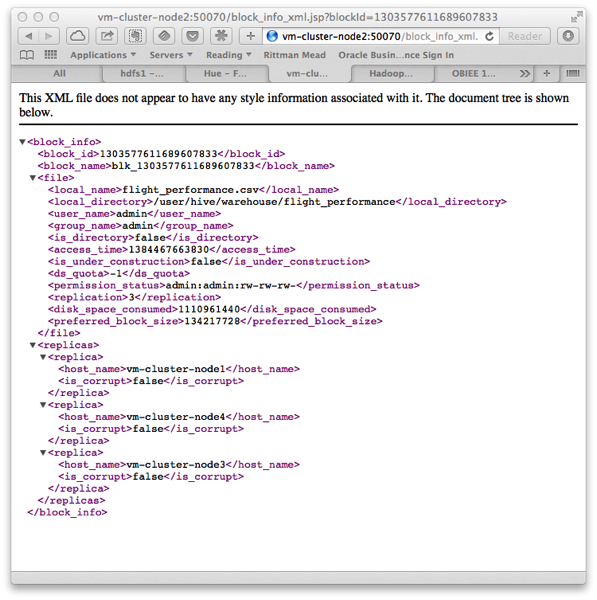

In the background, two things happen when you upload new data into Hive; first, the file containing the data is stored in Hadoop’s filesystem, called HDFS (Hadoop Distributed File System), a unix-like distributed filesystem that breaks data down into blocks, and stores the blocks redundantly across the nodes in the cluster. If we take a look at the file I uploaded with the flight delays fact table data in it, you can see that it’s been broken down into blocks as shown at the bottom of the page:

If you click on an individual block, you can also see that the block is stored primarily on one node, and then redundantly on three other nodes in the cluster.

HDFS does this for two reasons; first, by spreading the data file over multiple servers, it can take advantage of the parallel processing provided by the Hadoop framework. Second, though, this redundancy means that if any node goes down, there are copies of the data blocks elsewhere in the cluster, giving you the ability to use low-cost, commodity hardware (or cloud-based servers) whilst still protecting uptime, and your data.
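To make the block-and-replica idea concrete, here's a toy model in Python. It's a sketch under stated assumptions rather than how HDFS is implemented: the 128MB block size and replication factor of 3 are just parameter defaults (check what your own cluster is configured with), the node names are made up, and the simple round-robin placement stands in for the rack-aware placement that the real NameNode decides.

```python
def split_into_blocks(file_size_mb, block_size_mb=128):
    """Return the sizes (in MB) of the blocks a file would be cut into."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block only holds what's left
    return sizes


def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin (toy policy)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def surviving_blocks(placement, failed_node):
    """Return the blocks that still have a replica once one node is lost."""
    return [
        block for block, holders in placement.items()
        if any(node != failed_node for node in holders)
    ]


nodes = [f"node{i}" for i in range(1, 7)]       # hypothetical six-node cluster
blocks = split_into_blocks(1000)                # a hypothetical 1000MB file
placement = place_replicas(len(blocks), nodes)
print(len(blocks), "blocks;", len(surviving_blocks(placement, "node1")), "survive losing node1")
```

Because every block lives on more than one node, losing any single node still leaves a readable copy of each block, and different nodes can work on different blocks at the same time.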

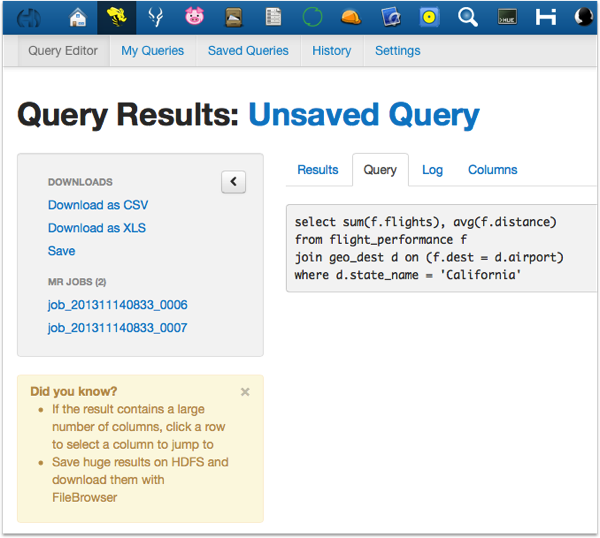

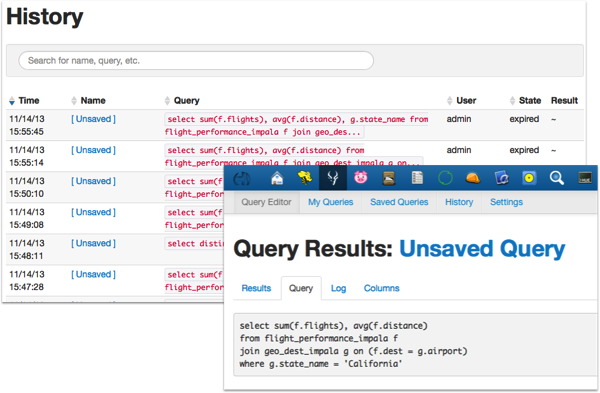

So let’s run a query via the Hue UI, using Hive first of all. I put together a simple query that sums up flights, and averages distances, for all flights with California as the destination. As you can see from the Hue screenshot below, the query triggered two MapReduce jobs, one to find all flights with Californian destinations (the “map”), and one to aggregate the results (the “reduce”).

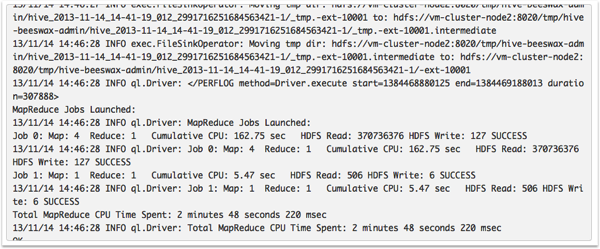
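The query text itself only appears in the screenshot, so what follows is a guess at its general shape rather than the query I actually ran. The table and column names (`flight_legs`, `airports`, `dest`, `state` and so on) are hypothetical, the three rows are made-up sample data, and SQLite is used only so the example runs anywhere with Python installed; the same SELECT uses nothing beyond a join, a filter and two aggregates.

```python
import sqlite3

# In-memory database standing in for the Hive tables (names are hypothetical).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE airports (code TEXT PRIMARY KEY, state TEXT);
    CREATE TABLE flight_legs (dest TEXT, flights INTEGER, distance REAL);
    INSERT INTO airports VALUES
        ('SFO', 'California'), ('LAX', 'California'), ('JFK', 'New York');
    INSERT INTO flight_legs VALUES
        ('SFO', 10, 300.0), ('LAX', 20, 500.0), ('JFK', 5, 2500.0);
""")

# Sum flights and average distance for legs whose destination is in California.
QUERY = """
    SELECT SUM(f.flights) AS total_flights,
           AVG(f.distance) AS avg_distance
    FROM flight_legs f
    JOIN airports a ON f.dest = a.code
    WHERE a.state = 'California'
"""

total_flights, avg_distance = conn.execute(QUERY).fetchone()
print(total_flights, avg_distance)
conn.close()
```

Against the sample rows only the SFO and LAX legs pass the filter, so the JFK row contributes to neither the sum nor the average.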

Looking at the MapReduce jobs being spun-up, run and then results gathered in, you can see that the MapReduce element (i.e., query time) took just under three minutes.

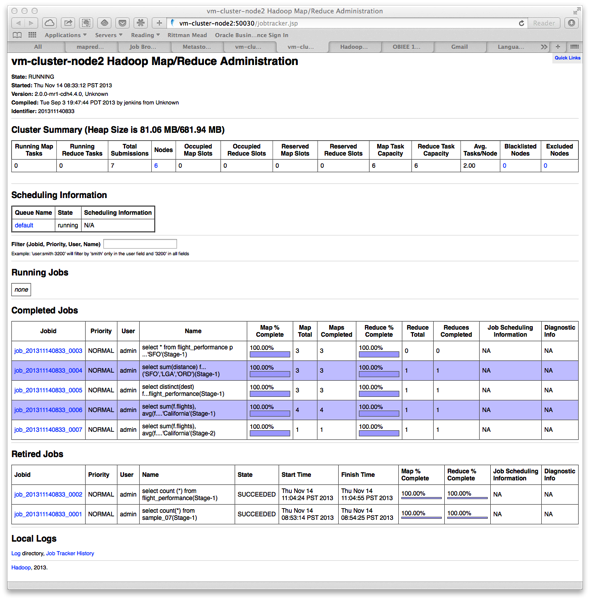

Going over to the Job Tracker / MapReduce admin pages in Cloudera Manager, you can see the MapReduce jobs that were triggered by the Hive query - see how it handles the join, and how the filtering (mapping) is handled separately to the aggregating (reducing).
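As a rough illustration of that filter-then-aggregate split, here's a single-process Python sketch of the map, shuffle and reduce steps. It is not the code Hive generates, it skips the join by assuming each record already carries its destination state, and the record layout and sample values are invented for the example.

```python
from collections import defaultdict


def map_phase(records):
    """Filter: emit (state, (flights, distance)) only for Californian destinations."""
    for rec in records:
        if rec["dest_state"] == "California":
            yield rec["dest_state"], (rec["flights"], rec["distance"])


def shuffle(pairs):
    """Group the mapped values by key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Aggregate: total the flights and average the distances for one key."""
    total_flights = sum(flights for flights, _ in values)
    avg_distance = sum(distance for _, distance in values) / len(values)
    return key, total_flights, avg_distance


# Made-up sample records; on a real cluster each node would map its own blocks.
records = [
    {"dest_state": "California", "flights": 10, "distance": 300.0},
    {"dest_state": "California", "flights": 20, "distance": 500.0},
    {"dest_state": "New York", "flights": 5, "distance": 2500.0},
]

grouped = shuffle(map_phase(records))
results = [reduce_phase(key, values) for key, values in grouped.items()]
print(results)
```

On the cluster the map step runs in parallel on the nodes holding the data blocks, and only the much smaller filtered output has to travel across the network to be reduced.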

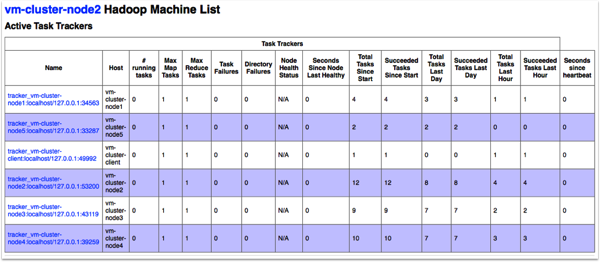

You can also bring up the Hadoop task tracker page, to see how the task tracker gave out chunks of the work to the various nodes in the cluster, and then got the results back in the end.

So - what about the Impala equivalent of the same query, then? Let’s give it a go. Well the query is more or less the same, but this time the results come back in around ten seconds, as we’d expect with Impala.

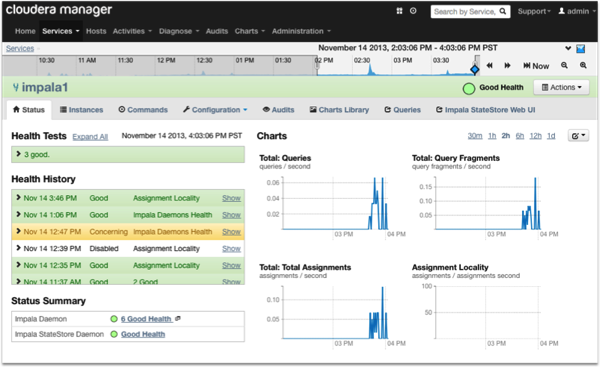

Looking inside Cloudera Manager, you can see the various Impala server processes working in the background, bypassing the need to generate MapReduce code and instead, using their own in-memory MPP framework to query the HDFS files and return the results.

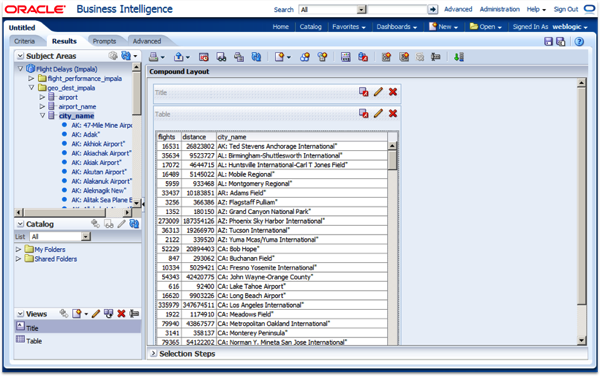

And finally - the $64,000 question - can we connect OBIEE to the cluster? To do this, you’ll need to download the Cloudera Impala ODBC drivers, as I outlined in my previous blog post on the subject, but once you do, it should work - see the screenshot below where I’m querying the flight delays data using OBIEE 11.1.1.7.

So there you have it - a couple of ways you can spin-up your own multi-node Hadoop cluster, and confirmation that it should all still work with OBIEE once it’s put together.