Looking at the ODI12c Hadoop Demos in the New Oracle BigDataLite VM

In a post earlier today I took a look at the new Oracle BigDataLite virtual machine that’s now downloadable from OTN, and walked through some of the Cloudera Hadoop tools that come with the VM. At the end of the post I mentioned that there is also an install of ODI12c on the VM, which comes with a couple of Hadoop integration examples already set up for you. So what do these examples do, and how do they use the Hadoop tools and servers on the VM?

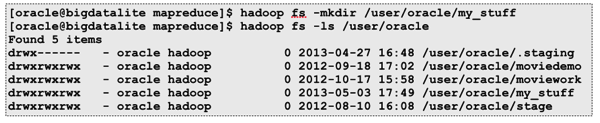

Let’s start with some background first. Hadoop is a framework for executing simple selection, filtering and aggregation batch jobs in a fault-tolerant way across horizontal clusters of servers (the BigDataLite VM is just a single-node cluster, but the process is the same). When you load data into a Hadoop cluster for analysis, it’s put into HDFS (Hadoop Distributed File System), a Unix-like filesystem that spreads data across all nodes in the cluster and has built-in redundancy and fault tolerance. Hadoop clusters are designed with cheap, higher-failure-rate hardware in mind, so the framework detects failed nodes and works around them. In ODI terms, you’d often find data of interest sitting in HDFS, most probably because someone has done some prior processing or analysis using a tool like R, and now you want to load the results into a regular Oracle data warehouse.
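To make the redundancy idea a bit more concrete, here is a toy sketch in Python of how replicating each block onto several nodes lets a file survive a node failure. The round-robin placement and the node names are assumptions for illustration only; real HDFS placement is rack-aware and considerably more involved.

```python
def place_blocks(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    This is a simplified stand-in for HDFS block placement, not the
    actual algorithm.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds node count")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def readable_blocks(placement, failed):
    """Return the blocks that still have at least one replica on a live node."""
    return [
        block
        for block, replicas in placement.items()
        if any(node not in failed for node in replicas)
    ]


# Hypothetical four-node cluster holding a six-block file.
nodes = ["node1", "node2", "node3", "node4"]
placement = place_blocks(6, nodes, replication=3)

# Losing one node leaves every block readable from another replica.
survivors = readable_blocks(placement, failed={"node2"})
print(len(survivors) == len(placement))
```

On the single-node BigDataLite VM there is of course nowhere else to put a replica, so this only matters once you move to a real cluster.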

Then, so that SQL-based tools such as ODI can access these files, another technology called Hive provides a SQL-like access layer over the files, very similar to how Oracle accesses files through external tables, with a Hive metastore playing the role of the Oracle data dictionary in terms of arranging files into tables, columns and databases.
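The key idea here is that the table definition lives apart from the data and is only applied when the file is read. A minimal Python sketch of that idea follows; the `METASTORE` dictionary, the `movie_ratings` table and its columns are all hypothetical stand-ins, not anything shipped on the VM.

```python
import csv
import io

# Hypothetical table definition, standing in for a Hive metastore entry.
METASTORE = {
    "movie_ratings": {
        "columns": [("cust_id", int), ("movie_id", int), ("rating", int)],
        "delimiter": "\t",
    }
}


def read_table(table_name, raw_text):
    """Apply the table's column definitions to raw file content at read time."""
    table = METASTORE[table_name]
    reader = csv.reader(io.StringIO(raw_text), delimiter=table["delimiter"])
    rows = []
    for fields in reader:
        if not fields:
            continue  # skip blank lines
        rows.append(
            {name: cast(value) for (name, cast), value in zip(table["columns"], fields)}
        )
    return rows


# The "file" is just delimited text; the structure comes from the metastore.
raw = "101\t7\t4\n102\t7\t5\n"
rows = read_table("movie_ratings", raw)
print(rows)
```

The file itself never changes; dropping or redefining the table only changes the metadata, much as with an Oracle external table.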

Then, in the background, when you query the Hive tables, the Hive Server creates MapReduce jobs on the fly to return your data, splitting the job into various mapper and reducer activities which then run across the Hadoop cluster. Hive isn’t really (these days) designed for BI-type ad-hoc queries, but it’s great for batch access to Hadoop data which is why ODI uses it.

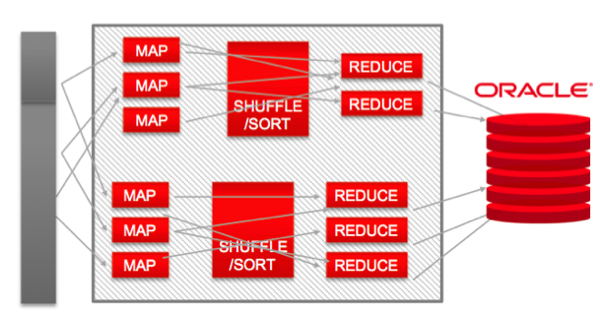
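As a rough illustration of what those mapper and reducer activities do, here is a single-process Python sketch of the map, shuffle and reduce phases for something like a count of log records per movie. It is a toy model under assumed record and column names, not the code Hive actually generates.

```python
from collections import defaultdict


def mapper(record):
    """Map phase: emit a (key, 1) pair for each input record."""
    yield record["movie_id"], 1


def shuffle(pairs):
    """Shuffle phase: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(key, values):
    """Reduce phase: collapse each key's values into a single count."""
    return key, sum(values)


def run_job(records):
    """Run the three phases in sequence in one process."""
    mapped = (pair for record in records for pair in mapper(record))
    grouped = shuffle(mapped)
    return dict(reducer(key, values) for key, values in grouped.items())


# Hypothetical log records; movie_id is an assumed column name.
records = [{"movie_id": 7}, {"movie_id": 9}, {"movie_id": 7}]
counts = run_job(records)
print(counts)
```

On a real cluster the mappers and reducers run as separate tasks on different nodes, which is where the batch-oriented latency comes from.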

In addition, Oracle provides a set of utilities for connecting Hadoop to the Oracle database, collectively called Oracle Big Data Connectors. One of them, Oracle Loader for Hadoop (OLH), loads data from Hadoop into Oracle tables, pushing all of the data transformation work into MapReduce jobs so that you leverage the power of the Hadoop cluster. One of the ODI Hadoop knowledge modules uses this utility, along with another one called Oracle Direct Connector for HDFS (ODCH, renamed Oracle SQL Connector for HDFS, or OSCH, in later releases). The diagram below shows the architecture behind Oracle Loader for Hadoop, and how it leverages MapReduce to do the “heavy lifting” around the data transformation.

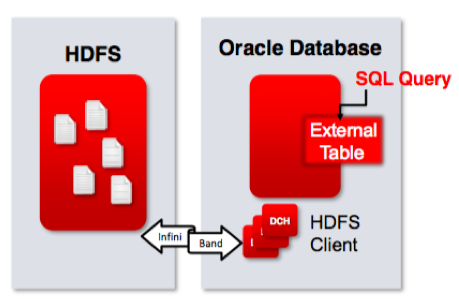

Oracle Direct Connector for HDFS is conceptually even more familiar: it lets you create a special type of external table in Oracle that connects to HDFS files, as opposed to regular filesystem files.

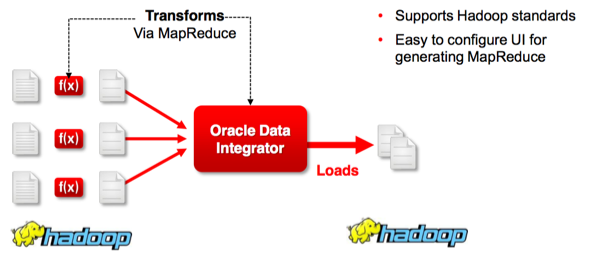

The last piece of the puzzle is an add-in to Oracle Data Integrator, called Oracle Data Integrator Application Adapter for Hadoop. Available for both ODI11g and 12c, this provides a number of new knowledge modules designed for accessing Hadoop data along with connectivity to Hive and HDFS, and is a prerequisite for the connectivity we’ll see in this post.

The knowledge modules that this application adapter provides are:

- IKM File to Hive (Load Data) - for loading file data into an existing Hive table

- IKM Hive Control Append - for loading data to-and-from Hive tables, for in-Hive-database ETL

- IKM Hive Transform - for transforming Hive data using more complex expressions and SerDes

- IKM File-Hive to Oracle (OLH) - for loading data into an Oracle table from Hive, using OLH/ODCH

- CKM Hive - for applying static and flow controls to Hive tables

- RKM Hive - for reverse-engineering Hive metadata into the ODI repository

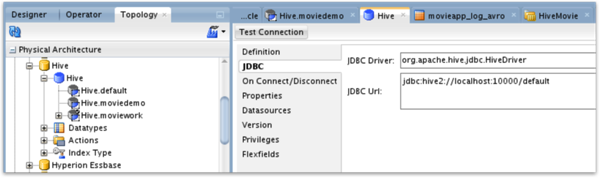

So let’s take a closer look at what’s in the ODI12c examples in the BigDataLite VM, starting with the Topology. If you take a look at the Topology tab in ODI Studio you’ll see the Hive technology, and if you drill into it further, connections to the Hive server on the VM and the various Hive databases.

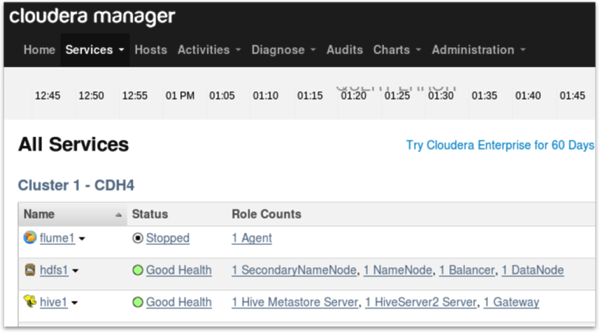

What this connects to is a service within the Hadoop cluster called HiveServer2. This is an improvement over the old HiveServer1 that came with earlier distributions of Cloudera Hadoop, which could only reliably support a single connection, whereas HiveServer2 can support many concurrent connections. If you go over to Cloudera Manager and look at the Services tab, you’ll see it listed alongside the Hive Metastore server under the main Hive service.

Note that most out-of-the-box Cloudera Hadoop 4 distributions don’t have HiveServer2 enabled and running, so you’ll need to add it from the Services menu if you’re creating your own Hadoop setup.

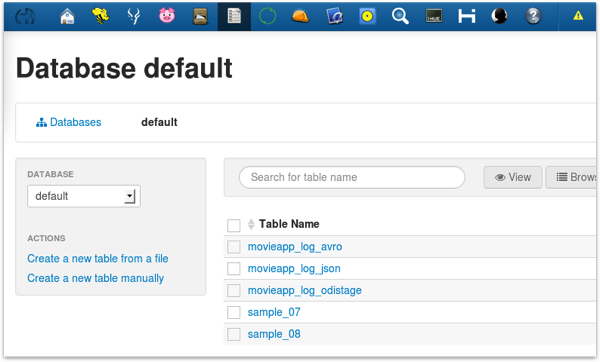

HiveServer2 listens on port 10000 by default, and ODI connects to it via a JDBC connection. The files and tables that ODI is then going to work with exist in a Hive database, which you can see by looking at Hue and clicking on the Metastore Manager icon. The tables ODI will be working with are movieapp_log_avro and movieapp_log_odistage. In the background, these Hive tables map onto HDFS files, with the movieapp_log_avro one using the Apache Avro data serialisation format to parse log data into separate “columns” of data.

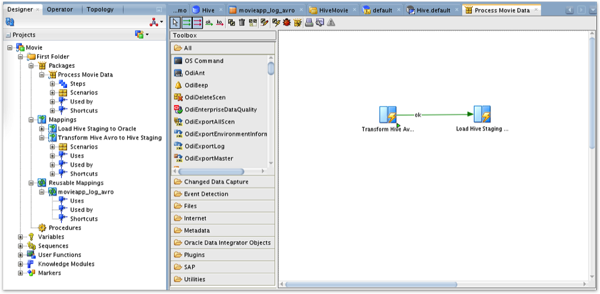
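For reference, a HiveServer2 JDBC URL uses the `jdbc:hive2://` prefix, with `org.apache.hive.jdbc.HiveDriver` as the driver class. The small Python helper below just assembles such a URL; the host name and database are assumptions, so check the Topology entry on the VM for the values actually used there.

```python
# Driver class for HiveServer2 JDBC connections.
HIVE_JDBC_DRIVER = "org.apache.hive.jdbc.HiveDriver"


def hive2_jdbc_url(host, port=10000, database="default"):
    """Build a HiveServer2 JDBC URL of the form jdbc:hive2://host:port/database."""
    return f"jdbc:hive2://{host}:{port}/{database}"


# Assumed values: a local HiveServer2 on its default port, default database.
url = hive2_jdbc_url("localhost")
print(url)
```

The older HiveServer1 uses a `jdbc:hive://` prefix instead, so the URL is a quick way to tell which service a connection points at.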

So the ODI project contains two mappings, plus a package that ties them together:

1. The first uses the reusable mapping feature to load data from the Avro-format log file into a staging table, also in Hive

2. The second then takes that Hive data and loads it into an Oracle database table

Both are then wrapped up into an ODI package, which calls the first step (and the reusable mapping), and then the second step.

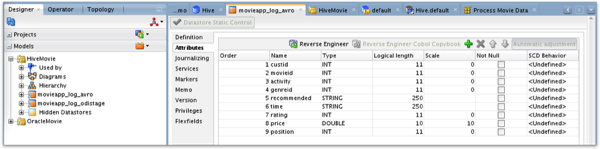
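The control flow of that package can be sketched in a few lines of Python: run the Hive staging step, and only if it succeeds run the Oracle load. Everything here is a stand-in; the dictionaries play the part of the tables, the column names, the filter and the `hypothetical_target` table name are made up, and a real package would call ODI mappings rather than Python functions.

```python
# In-memory stand-ins for the Hive and Oracle tables (all rows are made up).
hive = {
    "movieapp_log_avro": [
        {"custid": 1, "movieid": 7},
        {"custid": 2, "movieid": None},
    ],
    "movieapp_log_odistage": [],
}
oracle = {"hypothetical_target": []}


def stage_in_hive():
    """Step 1: Hive-to-Hive load, here dropping rows without a movie id."""
    hive["movieapp_log_odistage"] = [
        row for row in hive["movieapp_log_avro"] if row["movieid"] is not None
    ]


def load_to_oracle():
    """Step 2: copy the staged rows into the Oracle-side table."""
    oracle["hypothetical_target"] = list(hive["movieapp_log_odistage"])


def run_package(steps):
    """Run the steps in order and stop at the first failure."""
    completed = []
    for name, step in steps:
        try:
            step()
        except Exception as exc:
            return completed, f"{name} failed: {exc}"
        completed.append(name)
    return completed, None


completed, error = run_package([
    ("stage_in_hive", stage_in_hive),
    ("load_to_oracle", load_to_oracle),
])
print(completed, error)
```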

The Model section within the Designer navigator shows the two Hive tables as data sources and targets we can work with, with the Avro file’s parsing specification turning the log file into a set of columns we can extract from.

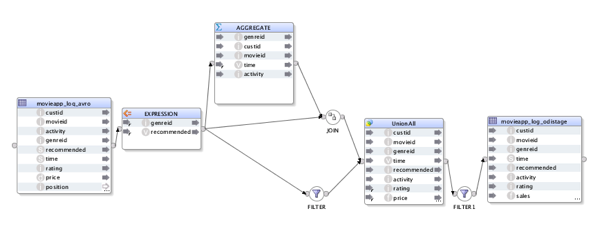

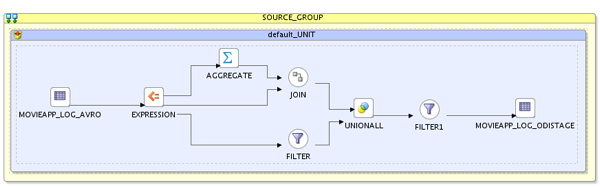

Looking at the first mapping, it reads from the reusable mapping over the Avro table, then transforms and loads the data into another Hive table.

Switching to the Physical tab in the mapping editor, you can see that all the work is taking place within a single execution unit - because the transformation is all internal to Hive.

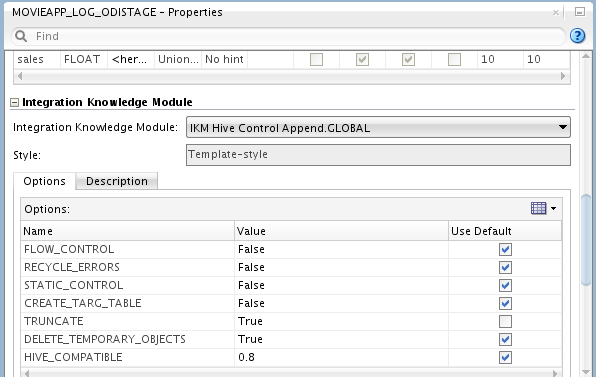

Looking at the target properties, you can see the IKM Hive Control Append knowledge module was used.

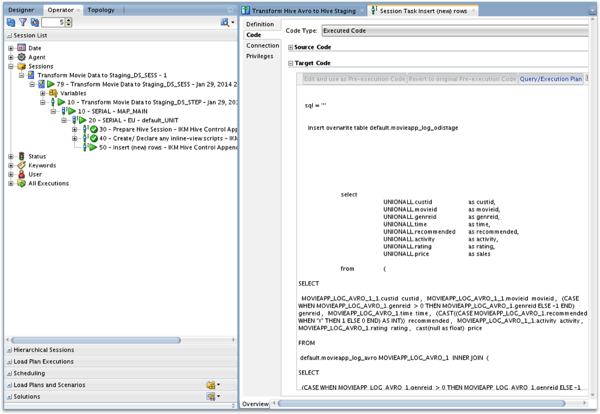

Running the mapping shows you the various steps in the process, and as this is an internal Hive transformation, all you see is HiveQL - the SQL dialect used by Hive.

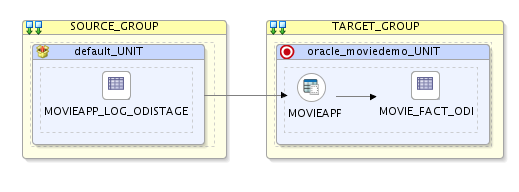
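The generated code is essentially an insert-select between the two Hive tables. As a hedged sketch of the general shape, the Python helper below assembles such a statement; the column names and the filter are assumptions, and the real knowledge module emits several more steps (work tables, optional flow control and so on) than this single statement.

```python
def insert_select_hiveql(target, source, columns, where=None):
    """Assemble a simple HiveQL INSERT INTO ... SELECT statement as text."""
    column_list = ", ".join(columns)
    statement = f"INSERT INTO TABLE {target}\nSELECT {column_list}\nFROM {source}"
    if where:
        statement += f"\nWHERE {where}"
    return statement


# Table names are from the demo; the columns and predicate are hypothetical.
hiveql = insert_select_hiveql(
    target="movieapp_log_odistage",
    source="movieapp_log_avro",
    columns=["custid", "movieid"],
    where="movieid IS NOT NULL",
)
print(hiveql)
```

Because both tables live in Hive, a statement like this runs entirely inside the cluster, which is why the mapping needs only one execution unit.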

The second mapping then takes this Hive staging table and loads its data into Oracle, with two execution units shown in the Physical mapping view.

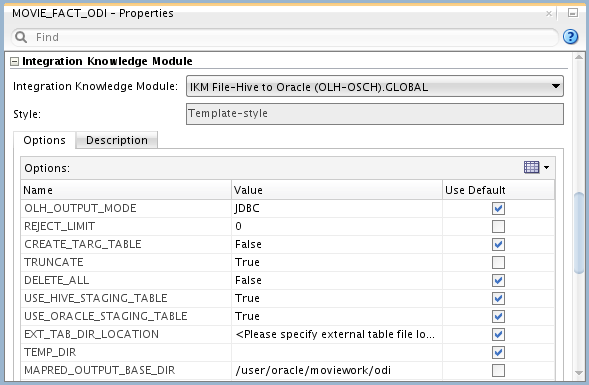

Then, looking at the target object’s properties, you can see that the IKM File-Hive to Oracle (OLH-OSCH) knowledge module is used to move data out of Hadoop and into Oracle.

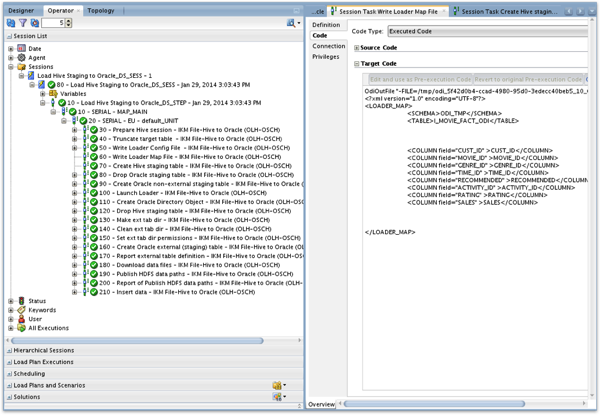

And when you execute the mapping, you can see the Oracle Loader for Hadoop mapping file being created, the utility run, and then the data moved through the usual staging table and into the target Oracle table.

So there you have it. There’s more you can do with ODI and the ODI Application Adapter for Hadoop, but these are two nice examples - take a look if you get a chance.