OBIEE 11.1.1.7, Cloudera Hadoop & Hive/Impala Part 1 : Install and Set-up an EC2 Hadoop Cluster

I’ve been over in San Francisco this last week for BIWA Summit 2014, and one of the things I demo’d during the week was OBIEE 11.1.1.7 connecting to a Hadoop cluster running on Amazon EC2, and analysing the flight delays dataset that ships with recent SampleApps and Exalytics. There are quite a few interesting steps and concepts in setting this up, so I thought I’d go through them on the blog so that others can have a try if they’re interested. Don’t take this as a definitive, 100%-complete set of steps for setting up the example - I’m writing this in the BA lounge at SFO, trying to get it finished before my flight leaves, and I might have inadvertently missed a couple of steps - but it should give you the gist of what’s involved and show what’s possible.

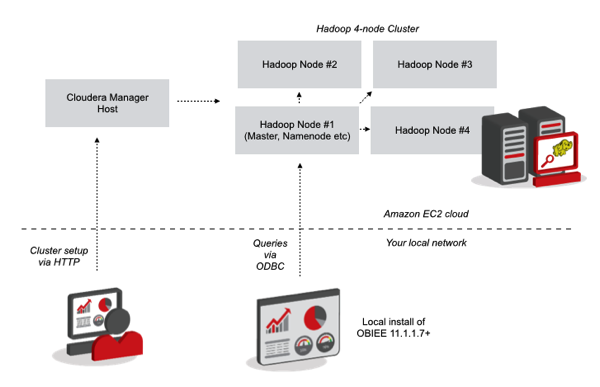

What the example will do is create the following setup:

In this setup, we’ll initially create an Amazon EC2 instance and install the free version of Cloudera Manager 4.5 onto it. Cloudera are a company that have created a distribution of Hadoop, which they sell alongside their own management tools (similar to how Red Hat took Linux, made it “enterprise” and sold software and services around it). They also provide a freely-downloadable version of their tools (“Cloudera Standard”) that has special setup routines when run on Amazon EC2.

We’ll then use this install of Cloudera Manager to automatically create and provision four Amazon EC2 instances and install Hadoop onto them, along with other tools like Impala (for in-memory SQL access over the cluster), Hive, HDFS and so on. Then, in the second part of this two-part series, we’ll upload some data from the Flight Delays dataset into the cluster, connect OBIEE to it via the Cloudera Impala ODBC drivers, and analyse it from Answers. I’m assuming that you’ve got some familiarity with Amazon AWS, EC2 and the rest of their cloud platform, and that you’ve got yourself set up with an account, your secret access keys and so on - if not, do that first before you try any of these steps.

Let’s start by setting up the initial EC2 virtual server instance onto which we’ll install Cloudera Manager.

Installing the EC2 Hadoop Cluster

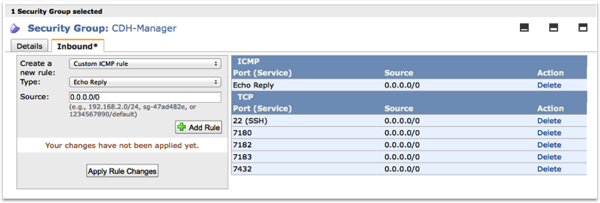

1. The first thing we’re going to do is create what’s called a “security group”, a collection of firewall settings that we’ll apply to the Cloudera Manager virtual server so that it can connect out to the nodes it’s going to set up to run Hadoop (and so that we can connect to it to run the web interface). To do this, log into the AWS Management Console, and from the Amazon Web Services menu navigate to EC2 > Network & Security > Security Groups.

Then when the Security Groups page is displayed, press the Create Security Group button, then enter the following details when prompted:

Name : CDH-Manager

Description : Security group for CDH4 Manager instance

VPC : No VPC

Then, with this new security group selected, use the Add Rule button to add the following inbound rules:

SSH : 0.0.0.0/0

7180 : 0.0.0.0/0

7182 : 0.0.0.0/0

7183 : 0.0.0.0/0

7432 : 0.0.0.0/0

Custom ICMP rule : Echo Reply 0.0.0.0/0

Once you’ve done this, the security group area should look like this:

Then, press the Apply Rule Changes button to register the security settings.
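If you’d rather script the security group than click through the console, the same rules can be sketched with the AWS command-line tools. This is only a rough sketch, not something I ran as part of the demo: it assumes you have the AWS CLI installed and configured, and that you’re working without a VPC as above (with a VPC you would most likely need to pass a group ID rather than a group name). The DRY_RUN switch and the helper function names are my own additions, and by default the script only prints the commands it would run.

```shell
#!/bin/sh
# Sketch only: the security group from step 1, expressed as AWS CLI calls.
set -eu

GROUP="CDH-Manager"
# DRY_RUN=1 (the default) prints each command; set DRY_RUN=0 to really call the AWS CLI.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_group() {
  run aws ec2 create-security-group --group-name "$GROUP" \
    --description "Security group for CDH4 Manager instance"
}

open_tcp_port() {
  # $1 is the TCP port to open to the whole internet (0.0.0.0/0), as in the list above.
  run aws ec2 authorize-security-group-ingress --group-name "$GROUP" \
    --protocol tcp --port "$1" --cidr 0.0.0.0/0
}

allow_echo_reply() {
  # For ICMP rules, FromPort carries the ICMP type and ToPort the ICMP code;
  # Echo Reply is type 0, code 0.
  run aws ec2 authorize-security-group-ingress --group-name "$GROUP" \
    --ip-permissions 'IpProtocol=icmp,FromPort=0,ToPort=0,IpRanges=[{CidrIp=0.0.0.0/0}]'
}

create_group
# 22 is SSH; the remaining ports are the ones listed for Cloudera Manager above.
for port in 22 7180 7182 7183 7432; do
  open_tcp_port "$port"
done
allow_echo_reply
```

Run as-is, it just echoes the seven commands so you can check them against the list above before running it again with DRY_RUN=0.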

2. Next we’ll create an Amazon EC2 virtual server instance to run Cloudera Manager on, using this security group’s settings to ensure the right ports are open - then we’ll use that instance, and its install of Cloudera Manager, to set up the Hadoop cluster.

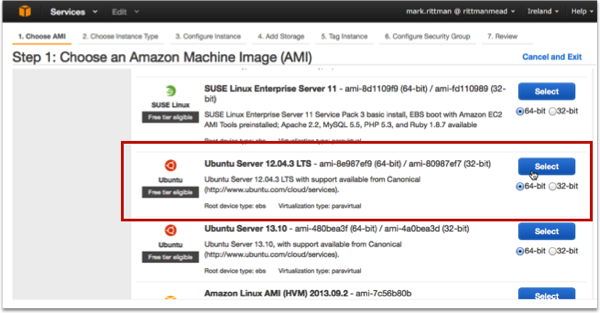

To do this with the EC2 Dashboard web page still open, click on the Instances menu item on the left-hand side of the page, then press Launch Instance, noting the EC2 region you’ll be working in at the same point (for me, it’s the EU Ireland region).

For this initial virtual server instance, use the Ubuntu Server 12.04 LTS 64-bit image - Cloudera Manager 4.5 Free can install onto either Ubuntu or CentOS, and will adjust what it installs accordingly, so for now let’s select Ubuntu.

Then, when prompted, select the m1.medium instance type, and on the Step 7: Review Instance Launch page, select the security group you created a moment ago for the instance’s security group settings. Once done, press the Launch button, create or select an SSH key pair and then download that key pair to your local laptop or PC so you can connect to the virtual server once it’s spun up.

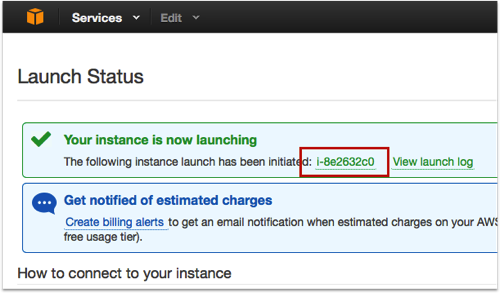

3. Now you need to SSH into this new EC2 virtual server and download the Cloudera Manager software to it, to then create the Hadoop cluster. To do this, first make a note of the instance name that the EC2 launch instance process gave you, like this:

Click on that link to then show the status of the virtual server, and more importantly, its public DNS address. Once the virtual server shows a status of “running”, you can then SSH into it and download and run the Cloudera Manager software; note that “EC2-cluster.pem” is the name of the keypair I created in the previous steps, and this file will need to be “chmod 400”-protected before EC2 and SSH will let you use it - see this blog article on setting up EC2 command-line access on the Mac for example details.
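As a quick sketch of that key-file protection step: the snippet below sets the key file to owner read-only and then prints the resulting permission bits so you can confirm it worked. The function names are just ones I’ve made up for illustration, and it assumes the key file is in your current directory; swap in your own file name via KEY_FILE.

```shell
#!/bin/sh
# Sketch only: protect a downloaded EC2 key pair file before using it with ssh.
set -eu

KEY_FILE="${KEY_FILE:-EC2-cluster.pem}"

protect_key() {
  # Owner read-only; ssh refuses private keys that other users can read.
  chmod 400 "$1"
}

key_mode() {
  # Print the permission bits (e.g. 400): GNU stat first, then the BSD/macOS form.
  stat -c '%a' "$1" 2>/dev/null || stat -f '%Lp' "$1"
}

if [ -f "$KEY_FILE" ]; then
  protect_key "$KEY_FILE"
  echo "$KEY_FILE now has mode $(key_mode "$KEY_FILE")"
else
  echo "Key file $KEY_FILE not found; download it from the EC2 console first" >&2
fi
```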

To SSH into the virtual server and install and run Cloudera Manager, type in the following (using your own SSH key file name and virtual server DNS address):

ssh -i EC2-cluster.pem ubuntu@ec2-54-216-126-144.eu-west-1.compute.amazonaws.com

Then, once you’re connected, download and install Cloudera Manager like this:

wget http://archive.cloudera.com/cm4/installer/latest/cloudera-manager-installer.bin

chmod +x cloudera-manager-installer.bin

sudo ./cloudera-manager-installer.bin

You’ll then be walked through a wizard that will get you to agree to a couple of licenses, and then download and install the Cloudera Manager software for your instance type. Note that this is something CM does when it detects it’s running on Amazon EC2 - for other types of install it’s a slightly different process.

4. Once the Cloudera Manager software install has completed, give it a couple of minutes and then use your web browser to navigate to the Cloudera Manager website, at machine-name:7180, in my case:

http://ec2-54-216-126-144.eu-west-1.compute.amazonaws.com:7180
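Rather than refreshing the browser while you wait, you could poll the port from your laptop until something answers. This is a minimal sketch that assumes curl is installed locally; the wait_for_cm function, its retry count and its delay are my own illustration rather than anything Cloudera provides.

```shell
#!/bin/sh
# Sketch only: poll the Cloudera Manager web port until it starts answering.
set -eu

# Replace with your own instance's public DNS address.
CM_URL="${CM_URL:-http://ec2-54-216-126-144.eu-west-1.compute.amazonaws.com:7180}"

wait_for_cm() {
  # $1 = URL to poll, $2 = maximum number of attempts, $3 = seconds between attempts
  url="$1"
  attempts="$2"
  delay="$3"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP errors (status 400 and above).
    if curl --silent --fail --max-time 5 --output /dev/null "$url"; then
      echo "Cloudera Manager is answering at $url"
      return 0
    fi
    echo "Attempt $i of $attempts: not ready yet" >&2
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example call (commented out so the script does nothing until you point it at your own server):
# wait_for_cm "$CM_URL" 30 10
```

With the example call uncommented, it tries every ten seconds for around five minutes, then gives up with a non-zero exit code.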

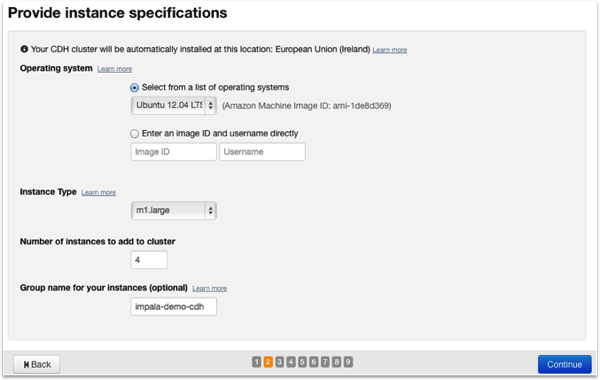

Log in as “admin/admin” and when prompted, select the free Cloudera Standard option. Press Continue so that you’re then presented with the Provide instance specification page. Using this page, you can select the EC2 instance size and type, the number of nodes in your cluster, and a group name for your instances. In this example, we’ll create a four-node cluster using the Ubuntu 12.04 LTS 64-bit image, select m1.large as the instance type, and call it “impala-demo-cdh”.

Then, on the Provide Credentials page, paste in your AWS access key ID and Secret Access Key, let Cloudera Manager generate a new key pair for use with the cluster (or upload your own one from before), and then press the Start Installation button on the next page to have Cloudera Manager start provisioning the cluster instances. Once the instances are created, download the additional key file and place it with the other one, “chmod 400”-ing it as before so it’ll work with SSH into EC2.

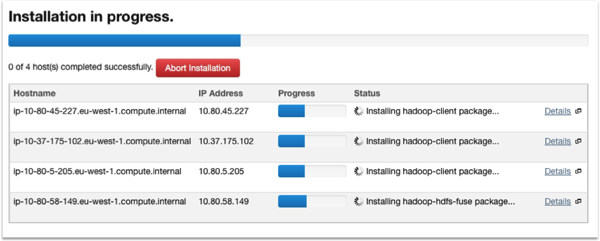

5. Once the instance provisioning completes, Cloudera Manager will then install the relevant software onto the different nodes. The Installation in Progress page will show you the progress of these installs, with the screenshot below showing it mid-way through the process.

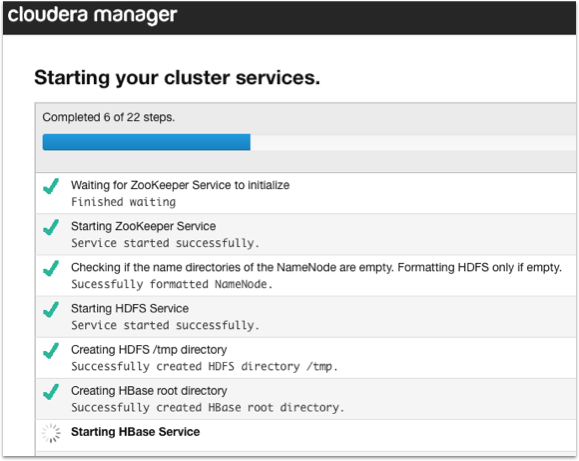

Assuming all the cluster nodes install properly, walk through the rest of the steps to confirm what’s installed where, check all of the services are running OK and complete the process.

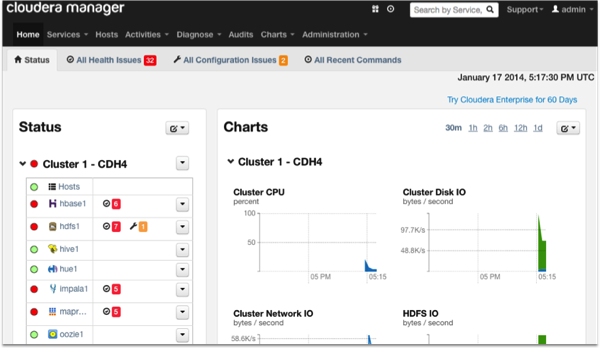

Configuring and Setting up Hadoop

So assuming all of the install and service startup steps went OK, what you have now is a four-node Hadoop cluster running on Amazon EC2, with additional management tools and services provided by Cloudera - think of it like a Linux distribution from Red Hat or SUSE, where the core is standard open-source software and the vendor adds complementary tools, including ones they write themselves, to enhance the product. The screenshot below is the overall summary page for your cluster, as provided by Cloudera Manager - don’t worry too much about the warnings, they’re down to log file disk space and can be ignored for this particular exercise.

If you select Services > All Services from the Cloudera Manager menu, you’ll see what’s been installed on your cluster:

Some of the key services are:

- HDFS - the cluster filesystem that Hadoop processes data on, and that we’ll use later on to upload text files containing the flight delays data we’re going to analyse. HDFS is Unix-like in how you work with it, but it stores data redundantly across multiple nodes in the cluster, enabling parallel operations and providing fault-tolerance.

- HBase - a NoSQL database that we won’t use here, but that stores data in key/value pairs using the HDFS filesystem

- Hive - a SQL-like access layer over Hadoop, typically used for ETL access, and currently what OBIEE uses to connect to Hadoop

- Impala - a faster alternative to Hive that runs queries in-memory and bypasses the MapReduce code creation that slows Hive down

- Hue - a web UI that we’ll be using later on to run Hive and Impala queries, and create tables in Hive’s HCatalog

- MapReduce - the framework and server within Hadoop that typically crunches, filters and transforms the data
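To give a flavour of what “Unix-like” means for HDFS in the list above, here’s a rough sketch using the standard hadoop fs commands, which mirror mkdir, cp and ls. The directory and file names are hypothetical placeholders rather than the ones used later in the series, and the script defaults to printing the commands rather than running them, since you’d need to be on a cluster node (or a machine with the Hadoop client installed) for them to work.

```shell
#!/bin/sh
# Sketch only: Unix-style file operations against HDFS.
set -eu

# Hypothetical names, for illustration only.
HDFS_DIR="${HDFS_DIR:-/user/ubuntu/flight_delays}"
LOCAL_FILE="${LOCAL_FILE:-flight_delays.csv}"
# DRY_RUN=1 (the default) prints each command; set DRY_RUN=0 on a cluster node to run them.
DRY_RUN="${DRY_RUN:-1}"

hdfs_run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "hadoop fs $*"
  else
    hadoop fs "$@"
  fi
}

hdfs_run -mkdir "$HDFS_DIR"               # like mkdir
hdfs_run -put "$LOCAL_FILE" "$HDFS_DIR/"  # copy a local file into the cluster
hdfs_run -ls "$HDFS_DIR"                  # like ls
```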

Before we go into Hue to create some Hive tables, there’s one task we need to do if we’re to access this cluster via Hive - we need to install something called “Hiveserver2”, a server process that the Hive ODBC drivers OBIEE uses will need in order to connect to the cluster, but that isn’t installed by default.

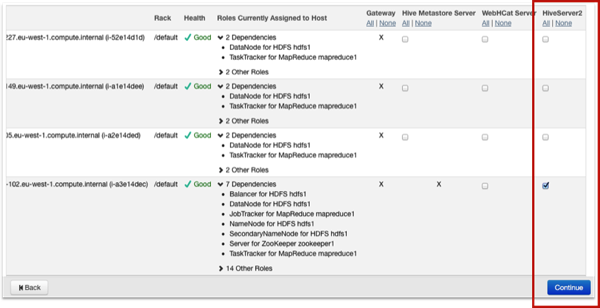

To install Hiveserver2, from the Cloudera Manager website select Services > hive1, and then click on the Instances tab. Then, scroll across so that you can see the HiveServer2 column, locate the cluster node with the majority of services and the Hive Metastore Server installed on it, and check the checkbox to select that service for install.

Press Continue, and then back on the Role Instances page, select the new hiveserver2 service, and select Actions for Selected > Start to start the service.
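If you want to check the new service is answering before going any further, one option is to connect to it with Beeline, the command-line client for HiveServer2. Treat this as a sketch under a few assumptions: that Beeline is available on the node you run it from, that HiveServer2 is listening on its usual default port of 10000, and that the host name and user name below (both placeholders of mine) are replaced with your own. As written it only prints the command.

```shell
#!/bin/sh
# Sketch only: a quick connectivity check against HiveServer2 using Beeline.
set -eu

# Hypothetical host name; use the node you added the HiveServer2 role to.
HS2_HOST="${HS2_HOST:-your-hiveserver2-node.example.com}"
HS2_PORT="${HS2_PORT:-10000}"   # assumed default HiveServer2 port
HS2_USER="${HS2_USER:-ubuntu}"  # assumed user name
# DRY_RUN=1 (the default) prints the command; set DRY_RUN=0 to run it.
DRY_RUN="${DRY_RUN:-1}"

hs2_url() {
  # Build the JDBC URL that HiveServer2 clients connect with: $1 = host, $2 = port.
  echo "jdbc:hive2://$1:$2/default"
}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run beeline -u "$(hs2_url "$HS2_HOST" "$HS2_PORT")" -n "$HS2_USER" -e "SHOW DATABASES;"
```

If the service started properly, running it for real should list at least the “default” database.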

Now we’re at the point where we can use Hue to set up a Hive database, upload some files and create some tables for analysis. Check back tomorrow for the second part in this series, where we’ll do just that.