Building an Amazon Redshift Data Warehouse using ODI and Attunity Part 2: Using ODI and OBIEE with Redshift as a Target/Source

In my post yesterday we stepped through the initial set up and staging load for a data warehouse using Amazon Redshift and Attunity, for eventual use with OBIEE and ODI. Now that we have our source data in Redshift, let's look at transforming it into a star schema using ODI, starting with how we set up the connection to Redshift in ODI’s Topology Navigator.

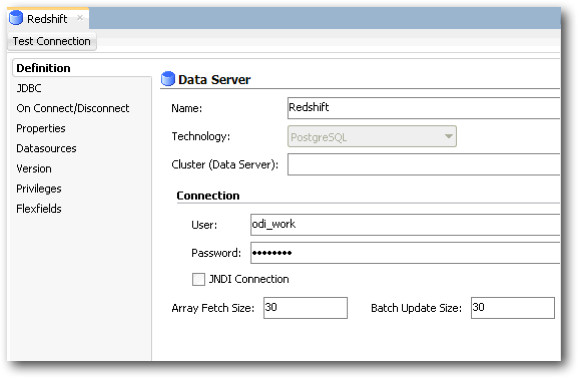

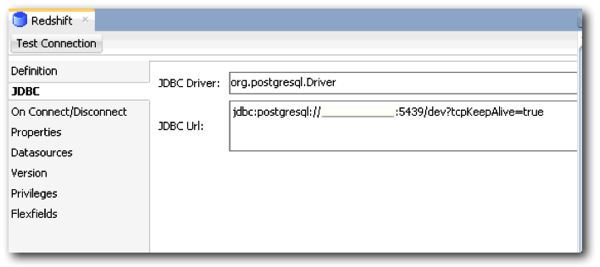

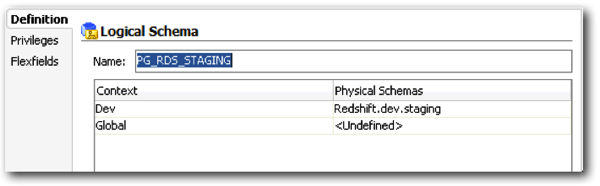

As I mentioned in yesterday’s post and on a blog article we wrote on non-Oracle databases a few months ago, Redshift is based on ParAccel technology, but you connect to it using PostgreSQL ODBC and JDBC drivers. Therefore, we can create a Physical Architecture connection to it in ODI using the PostgreSQL technology type, like this:

For the Redshift JDBC URL you’ll need, refer to the AWS Cluster summary page back on the Amazon AWS Console.
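
As a rough sketch of what that URL looks like when using the PostgreSQL JDBC driver, the helper below assembles one from a cluster endpoint and database name. The endpoint and database name shown are made-up placeholders (not values from this build), and 5439 is Redshift's default port; substitute whatever your own Cluster summary page shows.

```python
def redshift_jdbc_url(endpoint: str, database: str, port: int = 5439) -> str:
    """Build a PostgreSQL-driver JDBC URL pointing at a Redshift cluster.

    endpoint and database are placeholders for the values shown on the
    AWS Cluster summary page; 5439 is the default Redshift port.
    """
    return f"jdbc:postgresql://{endpoint}:{port}/{database}"


# Hypothetical endpoint and database name, for illustration only.
url = redshift_jdbc_url(
    "examplecluster.abc123xyz.eu-west-1.redshift.amazonaws.com", "dw"
)
print(url)
```

The resulting string is what goes into the JDBC URL field of the ODI data server definition.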

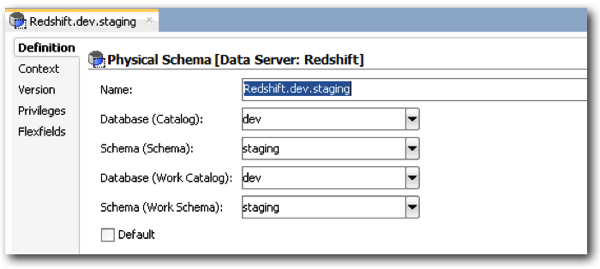

We can then add our schemas:

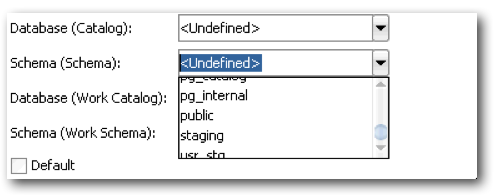

Then, if your connection to the Redshift database is working correctly, you should see a list of schemas in the drop down menus:

Once all the schemas have been added, we then go through the familiar ODI process of logical architecture and context mapping:

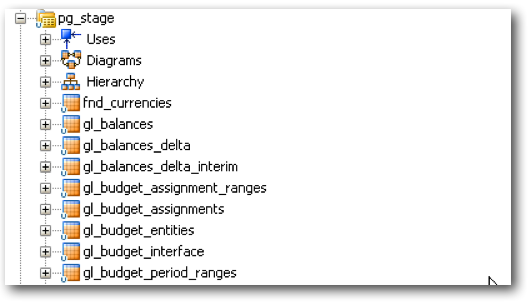

Next, we can create a model and reverse engineer our data stores:

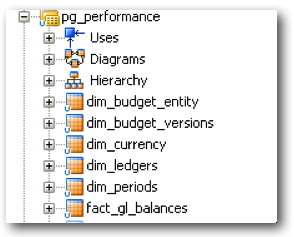

It's a good idea at this point to review each datastore and ensure that all attributes have been correctly assigned a datatype. To save a little reading time, a set of dimension and fact tables were created in the performance schema, and these were also reverse engineered in:

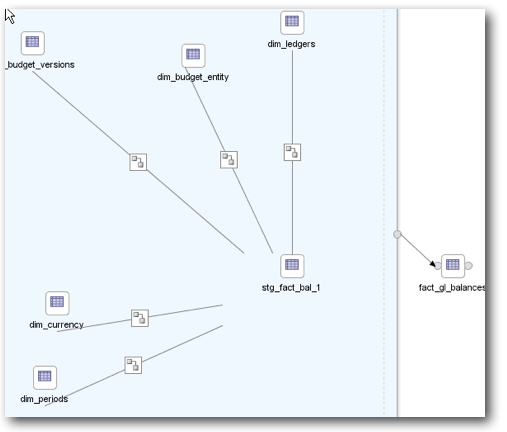

So from here on out, the process of creating and using mappings, packages, variables etc to populate your target tables is fairly straightforward, one which ODI Developers will be familiar with. You add your source and target data stores and implement the transformation logic.

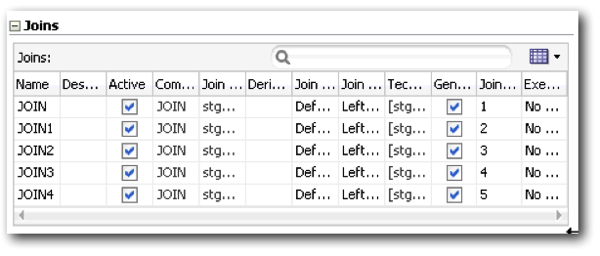

For the initial load, the SQL Control Append IKM was used and for the most part, this KM worked well without customisation. However, one of the early issues encountered during execution was with unordered outer joins - this appears to be an issue with the PostgreSQL technology. This was resolved by amending the mapping and setting an order for the joins:

Merging / Incremental loading

One of the things to be aware of with Redshift at the time of writing is the absence of Merge functionality (we're so spoilt in Oracle DBMS land ;) ). You can of course achieve the same result by issuing separate update and insert statements. There is also the SQL Incremental Update IKM in ODI, but you may want to review the KM task steps against your requirements before proceeding, as invariably some customisation will be required to improve performance. The same applies to SCD functionality - you should consider using analytic window functions (Redshift supports several aggregate and ranking window functions) to achieve your desired format.
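
To illustrate the separate update-then-insert approach, here is a minimal runnable sketch. It uses Python's built-in SQLite as a stand-in for Redshift so that it can be executed anywhere, and the `stg_customer` and `dim_customer` tables are hypothetical rather than taken from this build. The SQL is deliberately plain; on Redshift you would probably wrap both statements in a single transaction and might prefer an `UPDATE ... FROM` join for the first step.

```python
import sqlite3

# SQLite stands in for Redshift here; table names and data are hypothetical.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.executescript("""
CREATE TABLE stg_customer (customer_id INTEGER, customer_name TEXT);
CREATE TABLE dim_customer (customer_id INTEGER, customer_name TEXT);
INSERT INTO dim_customer VALUES (1, 'Acme'), (2, 'Globex');
INSERT INTO stg_customer VALUES (2, 'Globex Corp'), (3, 'Initech');
""")

# Step 1: update target rows that have a matching row in staging.
cur.execute("""
UPDATE dim_customer
SET customer_name = (SELECT s.customer_name
                     FROM stg_customer s
                     WHERE s.customer_id = dim_customer.customer_id)
WHERE EXISTS (SELECT 1
              FROM stg_customer s
              WHERE s.customer_id = dim_customer.customer_id)
""")

# Step 2: insert staging rows that are not yet in the target.
cur.execute("""
INSERT INTO dim_customer (customer_id, customer_name)
SELECT s.customer_id, s.customer_name
FROM stg_customer s
WHERE NOT EXISTS (SELECT 1
                  FROM dim_customer d
                  WHERE d.customer_id = s.customer_id)
""")
conn.commit()

rows = cur.execute(
    "SELECT customer_id, customer_name FROM dim_customer ORDER BY customer_id"
).fetchall()
print(rows)
```

Running the update before the insert matters: if the insert ran first, the newly inserted rows would be needlessly touched again by the update.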

Additionally, as mentioned previously, Attunity supports a variety of replication tasks - you can do a one-off initial load, an initial load plus apply changes, and/or collect deltas into a change tracking table in the Redshift database. This in itself means you have the basic building blocks to create and maintain a Foundation layer as part of your Data Warehouse design.

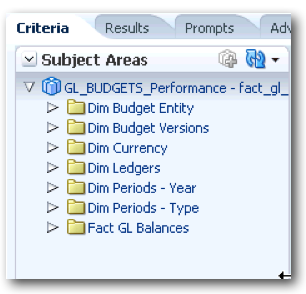

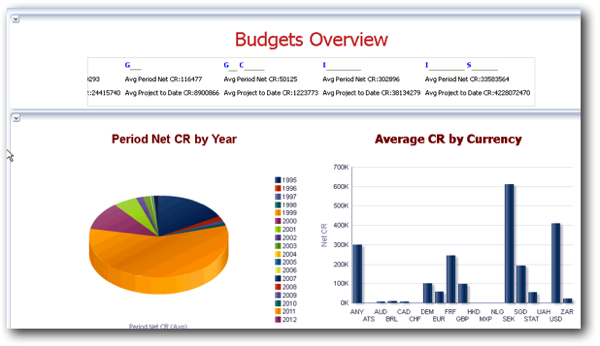

So, once we have data in our target fact and dimension tables, we can model these in OBIEE and create some analyses.

OBIEE Configuration

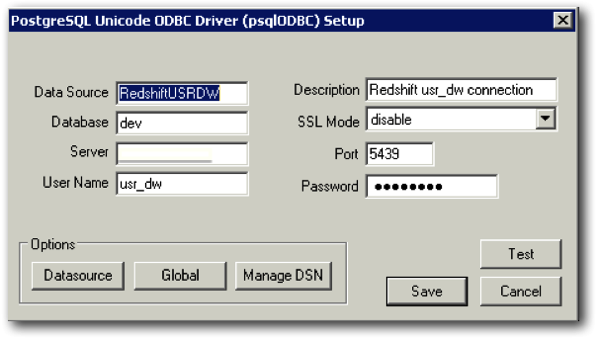

As per the AWS documentation for Redshift, the recommended ODBC driver was downloaded from the Amazon AWS website, and an ODBC data source was created on the OBIEE server:

For the server entry, you can use either the public or private leader node IP, depending on your network/security configuration. Just backtracking a little here - you may recall that the usr_dw account was created after the initial Redshift cluster build. In order for it to be able to access the tables in the performance schema, we need to grant the required select privileges.
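
A sketch of the grants involved is shown below, generated from the `performance` schema and `usr_dw` account names used in this post. Treat it as an assumption about what "the required select privileges" amounts to in your environment: the reporting account typically needs usage on the schema as well as select on its tables, and you may prefer to grant table by table instead.

```python
from typing import List


def read_only_grants(schema: str, user: str) -> List[str]:
    """Return the GRANT statements giving `user` read access to `schema`."""
    return [
        # Without USAGE on the schema, the user cannot reference its objects.
        f"GRANT USAGE ON SCHEMA {schema} TO {user};",
        # Covers tables that exist at the time the statement is run.
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {schema} TO {user};",
    ]


statements = read_only_grants("performance", "usr_dw")
for statement in statements:
    print(statement)
```

Note that tables created after the grant is issued are not covered, so the statements need re-running (or default privileges setting up) when new dimension or fact tables are added.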

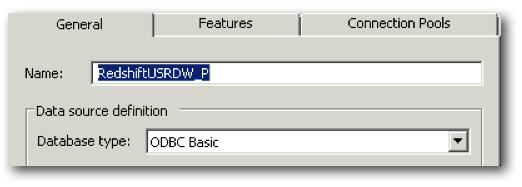

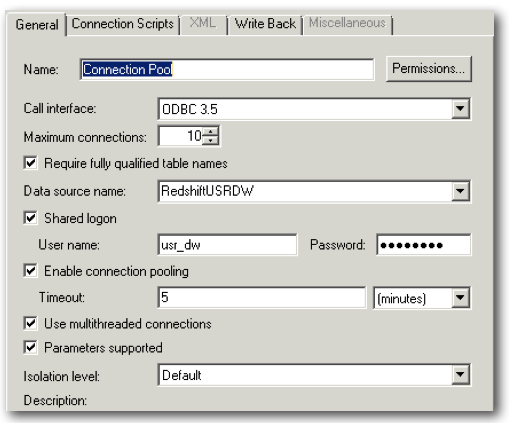

Once the ODBC connection is defined, we can create an RPD, define our database connection properties, import our tables and start modelling.

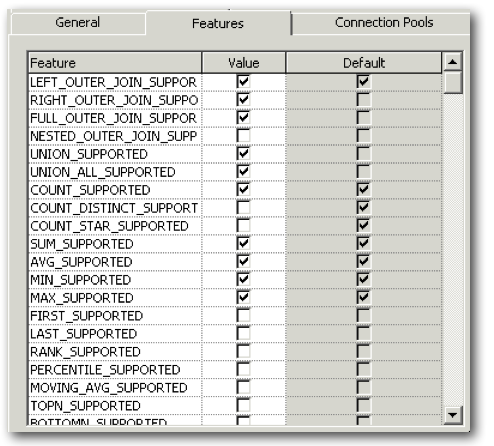

When it came to setting the features I used the query DBMS function and then went with what it set. Bear in mind that because PostgreSQL and Redshift aren’t supported source databases for OBIEE, depending on the analyses you are creating you may or may not hit functionality issues, so you may find yourself tweaking these settings.

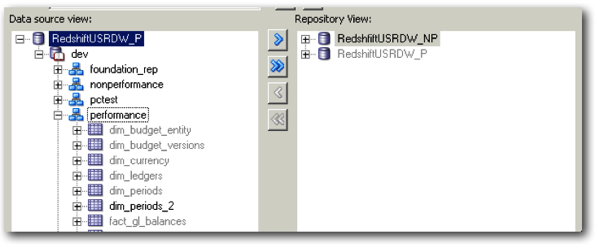

So if your connection is defined correctly and there are no other issues, you should now be able to import the tables:

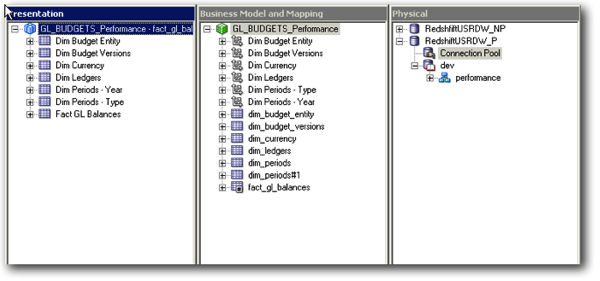

Once you've made your selection, you can then define the relationships between the fact and dimension tables (if you have primary and foreign keys defined these should propagate through), create any aliases etc. and then you're about ready to start building up the Business Model and Presentation areas.

Once the RPD modelling is completed, we can upload it and create some content.

Admittedly the analyses created for this dashboard were fairly basic, so no functionality issues were encountered during creation and execution - you might hit some with more “edge cases” such as analytic functions or nested subtotals, or you might end up hitting performance issues when you’ve got lots of concurrent users, as the SQL issued by OBIEE might not be as efficient as it would be for a supported data source.

Performance Considerations for Redshift

Pete Scott will be covering the ins and outs of designing for performance in a blog post tomorrow, and I’ll add a link to it here once it’s up. However, one thing that should be mentioned is the importance of setting sort and distribution keys appropriately on the fact and dimension tables, as well as Primary and Foreign keys (whilst these constraints are not enforced, they are used by the optimiser when determining execution plans). The sort and distribution keys determine how the data is stored on disk and how it is distributed across the compute nodes, and they can have a big impact on query response times, in addition to any issues arising from the lack of official support in OBIEE for Redshift as a data source.
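
To make the sort and distribution key settings concrete, the sketch below generates Redshift `CREATE TABLE` statements for a hypothetical fact table and dimension table. The table and column names are invented for illustration, and the choices shown (distributing the fact table on a join key, sorting on the date key, copying a small dimension to every node with `DISTSTYLE ALL`) are one common starting point, not a recommendation for this particular schema.

```python
from typing import Optional, Sequence, Tuple


def build_create_table(
    table: str,
    columns: Sequence[Tuple[str, str]],
    distkey: Optional[str] = None,
    sortkeys: Sequence[str] = (),
) -> str:
    """Return Redshift CREATE TABLE DDL.

    With a distkey the table uses DISTSTYLE KEY; without one this sketch
    falls back to DISTSTYLE ALL (a full copy on every node).
    """
    column_sql = ",\n".join(f"    {name} {dtype}" for name, dtype in columns)
    lines = [f"CREATE TABLE {table} (", column_sql, ")"]
    if distkey:
        lines += ["DISTSTYLE KEY", f"DISTKEY ({distkey})"]
    else:
        lines.append("DISTSTYLE ALL")
    if sortkeys:
        lines.append(f"SORTKEY ({', '.join(sortkeys)})")
    return "\n".join(lines) + ";"


# Hypothetical fact table: distributed on a join key, sorted by date.
fact_ddl = build_create_table(
    "performance.fact_sales",
    [
        ("date_key", "INTEGER NOT NULL"),
        ("customer_key", "INTEGER NOT NULL"),
        ("sales_amount", "DECIMAL(18,2)"),
    ],
    distkey="customer_key",
    sortkeys=["date_key"],
)

# Hypothetical small dimension: replicated to all nodes.
dim_ddl = build_create_table(
    "performance.dim_customer",
    [("customer_key", "INTEGER NOT NULL"), ("customer_name", "VARCHAR(100)")],
    sortkeys=["customer_key"],
)

print(fact_ddl)
print(dim_ddl)
```

Because these attributes are part of the table definition, they are best decided before the tables are reverse engineered into ODI and loaded.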

So to conclude - whilst not officially supported, it is possible to create and maintain a Data Warehouse in Redshift and use the Oracle product set to drive ETL and reporting. Some of the features that make Redshift an attractive DB platform are its ease of management, its scalability and sizing options, and the ability to get a cluster up and running in hours rather than days. Combined with ODI and OBIEE and therefore a transferable skills base, it makes for an intriguing DW solution.