Testing Oracle Direct Connector for HDFS as an Alternative to Hive ODBC for OBIEE11g

In a post on the blog a couple of weeks ago, I looked at loading up a set of flight delays data into Apache Hadoop, then analysing it using Apache Hive, Cloudera Impala and OBIEE. In this scenario, OBIEE connects to the Hadoop cluster using Hive and Impala ODBC drivers, and then either Hive (through MapReduce jobs) or Impala (through its in-memory distributed query engine) returns the data to OBIEE, for display on the dashboard.

In my initial tests, as you’d expect Hive was fairly slow (as its optimised towards large (TB+) batch jobs), whilst Impala was fast, on a par with regular Oracle database queries albeit with a much more limited set of SQL functions supported. One of the questions I got asked after I posted the blog article though, was how this approach compared to accessing the Hadoop data via Oracle’s “big data connectors” - either Oracle Loader for Hadoop (OLH) or Oracle Direct Connector for HDFS? Could these give us similar performance to Hive or Impala, but make the Hadoop data more "Oracle-compatible” and suitable for querying with OBIEE?

For anyone not all that familiar with Oracle’s big data connectors, there’s two that are particularly relevant to BI/DW use-cases; Oracle Loader for Hadoop (OLH) is a bulk-loader between Hadoop and the Oracle Database that uses MapReduce on the Hadoop-side to prepare, sort and transform data before efficiently loading it into an Oracle database. There’s already lots of ways you can move data in and out of Oracle from a Hadoop source - sqoop for example - but presumably Oracle feel OLH is a particularly-efficient way of doing it, exploits Oracle direct/bulk-loading capabilities and parallelism, and so forth - but it’s a loader, not a transparent reading mechanism, so it’s only really appropriate for ETL-type situations where you want to copy the data out of Hadoop and into an Oracle data warehouse.

Oracle Direct Connector for HDFS (ODCH) is a bit different though, in that it uses the Oracle external table feature to map an Oracle data dictionary table onto HDFS files, or optionally Apache Hive tables (and therefore onto HDFS files, NoSQL databases or whatever). So you could potentially use ODCH to create Oracle database table representations of your Hive tables / HDFS files, then map these into the OBIEE repository and work with them just like any other Oracle (external) table. What this means then is that you’re not reliant on Hive or Impala ODBC drivers, or the BI Server having to generate HiveQL or ImpalaQL queries (with Impala of course not yet being officially supported), making your work from the OBIEE side a lot easier than if you’re trying to work with Hive or Impala directly.

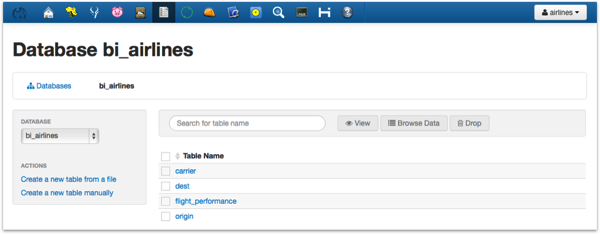

So how well does it work then? I decided to give it a try using the new BigDataLite VM I mentioned on the blog last week, along with the Airline Delays dataset I used in the post on Impala and Hive the other week. My starting point then was a Hive database with four tables - flight performance (19m rows), dest and origin (2k rows each) and carrier (1.5k rows), like this:

Now obviously Oracle’s big data connectors aren’t installed as part of Hadoop by default, but the BigDataLite VM does have them pre-installed, along with an Oracle Database 12c database, so a lot of the work in setting things up is done for you. By default, an install of the big data connectors connects to the Hadoop environment on the same machine, so all of the setup commands I use will assume that the Hive server and so on are on the same server - localhost in this case - although of course you can configure them to connect to a remote Hadoop server or cluster.

The way ODCH works is that it uses the Oracle external table “pre-processor” feature to stream HDFS file content into your query session, with the pre-processor in this scenario being a utility provided by the big data connectors to connect the two environments together. This blog post from Oracle explains the process in a bit more detail, but the key things to understand are that it’s conceptually similar to accessing regular file data via external tables, giving us the benefit of fairly seamless access to this external data (vs. using SQL*Loader, or OLH in this case), but it’s also not “real” Oracle table data so there’s no indexes or any other performance structures that can make queries run faster - thought like regular external tables you can run ODCH loading in-parallel.

So let’s use the setup in the BigDataLite VM to create some external tables in the Oracle database that map to my Hive tables, and underlying HDFS data files. Before we do this though we need to set up a few things; first, we need to create a temporary directory on the Linux filesystem to hold the metadata files created by ODCH, and we also need to specify where the ODCH HDFS file streamer utility can be found. Logging in as oracle/welcome1 on the VM, I first create the temporary directory, and then set an environment variable the rest of the process will be looking for:

mkdir bi_airlines_dir

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*

Now I log into SQL*Plus and create a database user that I’ll create the external tables in, and grant it access to the USERS tablespace:

sqlplus / as sysdba

create user bi_airlines identified by bi_airlines quota unlimited on users;

grant connect, resource to bi_airlines;

Next, I need to create two database directory objects; one to point to where the ODCH HDFS stream pre-processor lives, and the other to point to my temporary directory:

create or replace bi_airlines_dir as ‘/home/oracle/bi_airlines_dir’;

grant read, write on directory bi_airlines_dir to bi_airlines;

create or replace directory osch_bin_path as ‘/u01/connectors/osch/bin’;

grant read, write, execute on directory osch_bin_path to bi_airlines;

Now, re-purposing the scripts on the BigDataLite VM provided to create external tables over the Moviework Hive database, each of my tables requires two setup files; one, a shell script, to call the ODCH utility and reference an XML parameter file, and the second, the XML file, which contains the definition of the external table. To take an example, the shell script (genloc_origin_hive.sh) to create an external table over my “origin” Hive table looks like this:

# Add HIVE_HOME/lib* to HADOOP_CLASSPATH. This cannot be done

# in the login profiles since this breaks Pig in the previous lab.

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*

hadoop jar $OSCH_HOME/jlib/orahdfs.jar

oracle.hadoop.exttab.ExternalTable

-conf /home/oracle/movie/moviework/osch/origin_hive.xml

-createTable

whereas the accompanying XML file (origin_hive.xml) looks like this:

<Configuration>

<property>

<name>oracle.hadoop.exttab.tableName</name>

<value>BI_AIRLINES.ORIGIN_EXT_TAB_HIVE</value>

</property>

<property>

<name>oracle.hadoop.exttab.sourceType</name>

<value>hive</value>

</property>

<property>

<name>oracle.hadoop.exttab.hive.tableName</name>

<value>origin</value>

</property>

<property>

<name>oracle.hadoop.exttab.hive.databaseName</name>

<value>bi_airlines</value>

</property>

<property>

<name>oracle.hadoop.connection.url</name>

<value>jdbc:oracle:thin:@localhost:1521:orcl</value>

</property>

<property>

<name>oracle.hadoop.connection.user</name>

<value>BI_AIRLINES</value>

</property>

<property>

<name>oracle.hadoop.exttab.defaultDirectory</name>

<value>bi_airlines_dir</value>

</property>

</configuration>

Within the XML file, we specify the name of the external table that the utility will create in Oracle for you (ORIGIN_EXT_TAB_HIVE within the BI_AIRLINES schema); that it’s a Hive (rather than HDFS file) source, and the Hive database name and table name to source data from. With the operating system directory that you pass to it, the utility stores metadata within this to point to the individual HDFS files that contain your data, which means that whilst the HDFS data itself isn’t copied to Oracle, a hard reference to the file is, which means if you add more files to the Hive table’s HDFS directory, you’ll need to re-run the utility to register them - so its not completely automatic beyond this point, but more or less OK if you’ve got a single file providing the data, as I have in this slightly non-representative case.

So let’s run the shell script and create one of the external tables, passing in the Oracle database password for the “bi_airlines” user when prompted:

[oracle@bigdatalite osch]$ sh genloc_origin_hive.sh

Oracle SQL Connector for HDFS Release 2.3.0 - Production

Copyright (c) 2011, 2013, Oracle and/or its affiliates. All rights reserved.

[Enter Database Password:]

14/02/06 11:59:58 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.

14/02/06 11:59:58 INFO hive.metastore: Trying to connect to metastore with URI thrift://bigdatalite.localdomain:9083

14/02/06 11:59:58 INFO hive.metastore: Waiting 1 seconds before next connection attempt.

14/02/06 11:59:59 INFO hive.metastore: Connected to metastore.

The create table command succeeded.

CREATE TABLE "BI_AIRLINES"."ORIGIN_EXT_TAB_HIVE"

(

"ORIGIN" VARCHAR2(4000),

"ORIGIN_DEST" VARCHAR2(4000),

"ORIGIN_CITY" VARCHAR2(4000),

"ORIGIN_STATE" VARCHAR2(4000),

"AIRPORT_ID" VARCHAR2(4000)

)

ORGANIZATION EXTERNAL

(

TYPE ORACLE_LOADER

DEFAULT DIRECTORY "BI_AIRLINES_DIR"

ACCESS PARAMETERS

(

RECORDS DELIMITED BY 0X'0A'

CHARACTERSET AL32UTF8

PREPROCESSOR "OSCH_BIN_PATH":'hdfs_stream'

FIELDS TERMINATED BY 0X'7C'

MISSING FIELD VALUES ARE NULL

(

"ORIGIN" CHAR(4000) NULLIF "ORIGIN"=0X'5C4E',

"ORIGIN_DEST" CHAR(4000) NULLIF "ORIGIN_DEST"=0X'5C4E',

"ORIGIN_CITY" CHAR(4000) NULLIF "ORIGIN_CITY"=0X'5C4E',

"ORIGIN_STATE" CHAR(4000) NULLIF "ORIGIN_STATE"=0X'5C4E',

"AIRPORT_ID" CHAR(4000) NULLIF "AIRPORT_ID"=0X'5C4E'

)

)

LOCATION

(

'osch-20140206120000-1947-1'

)

) PARALLEL REJECT LIMIT UNLIMITED;

The following location files were created.

osch-20140206120000-1947-1 contains 1 URI, 150606 bytes

150606 hdfs://bigdatalite.localdomain:8020/user/hive/warehouse/bi_airlines.db/origin/geog_origin.txt

Note the HDFS file reference at the end - this is the metadata created by the utility, which we’d need to update if more data files are added to the underlying Hive table.

So let’s fire-up SQL*Plus and take a look at the tables:

[oracle@bigdatalite osch]$ sqlplus bi_airlines/bi_airlines

SQL*Plus: Release 12.1.0.1.0 Production on Thu Feb 6 13:20:12 2014

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Last Successful login time: Thu Feb 06 2014 12:21:52 -05:00

Connected to:

Oracle Database 12c Enterprise Edition Release 12.1.0.1.0 - 64bit Production

With the Partitioning, OLAP, Advanced Analytics and Real Application Testing options

SQL> select table_name from user_tables;

TABLE_NAME

FLIGHT_PERF_EXT_TAB_HIVE

ORIGIN_EXT_TAB_HIVE

DEST_EXT_TAB_HIVE

CARRIER_EXT_TAB_HIVE

SQL> set timing on

SQL> select count(*) from flight_perf_ext_tab_hive;

COUNT(*)

19648958

Elapsed: 00:01:09.74

Not bad, but just over a minute to do a quick row-count on the table. Let’s try the same thing using Hive itself - note that ODCH and Hive aren’t using the same access technique to the underlying data, and one might more sense than the other for particular query situations.

[oracle@bigdatalite ~]$ hive

Logging initialized using configuration in jar:file:/usr/lib/hive/lib/hive-common-0.10.0-cdh4.5.0.jar!/hive-log4j.properties

Hive history file=/tmp/oracle/hive_job_log_2b7797b2-1ef8-4ffc-b8b1-ab4f77b19cea_423831297.txt

hive> select count(*) from bi_airlines.flight_performance;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_201402052208_0001, Tracking URL = http://bigdatalite.localdomain:50030/jobdetails.jsp?jobid=job_201402052208_0001

Kill Command = /usr/lib/hadoop/bin/hadoop job -kill job_201402052208_0001

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2014-02-06 13:26:48,223 Stage-1 map = 0%, reduce = 0%

2014-02-06 13:27:23,402 Stage-1 map = 51%, reduce = 0%

2014-02-06 13:27:40,487 Stage-1 map = 75%, reduce = 0%, Cumulative CPU 16.59 sec

2014-02-06 13:27:41,512 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:42,523 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:43,535 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:44,549 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:45,558 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:46,569 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:47,582 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 34.0 sec

2014-02-06 13:27:48,596 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 35.05 sec

2014-02-06 13:27:49,608 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 35.05 sec

2014-02-06 13:27:50,616 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 35.05 sec

2014-02-06 13:27:51,625 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 35.05 sec

2014-02-06 13:27:52,641 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 35.05 sec

MapReduce Total cumulative CPU time: 35 seconds 50 msec

Ended Job = job_201402052208_0001

MapReduce Jobs Launched:

Job 0: Map: 2 Reduce: 1 Cumulative CPU: 35.05 sec HDFS Read: 524501756 HDFS Write: 9 SUCCESS

Total MapReduce CPU Time Spent: 35 seconds 50 msec

OK

19648958

Time taken: 76.525 seconds

Ok, a bit longer, 76 seconds vs. 69 seconds - but as I said, they’re different access mechanisms and you’d probably get different results if you joined the data, filtered it etc.

Let’s try it in Impala now:

[oracle@bigdatalite ~]$ impala-shell

Starting Impala Shell without Kerberos authentication

Connected to bigdatalite.localdomain:21000

Server version: impalad version 1.2.3 RELEASE (build 1cab04cdb88968a963a8ad6121a2e72a3a623eca)

Welcome to the Impala shell. Press TAB twice to see a list of available commands.

Copyright (c) 2012 Cloudera, Inc. All rights reserved.

(Shell build version: Impala Shell v1.2.3 (1cab04c) built on Fri Dec 20 19:39:39 PST 2013)

[bigdatalite.localdomain:21000] > select count() from bi_airlines.flight_performance;

Query: select count() from bi_airlines.flight_performance

+----------+

| count(*) |

+----------+

| 19648958 |

+----------+

Returned 1 row(s) in 1.09s

[bigdatalite.localdomain:21000] >

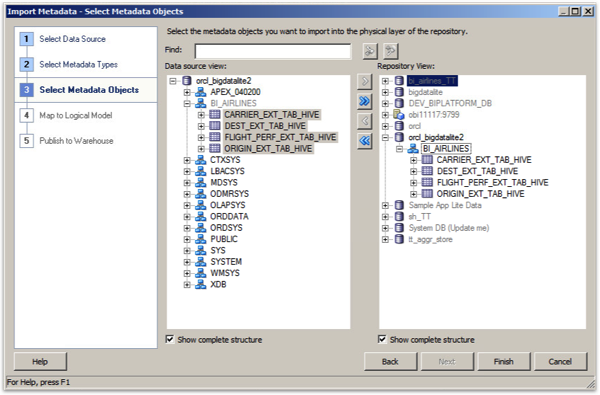

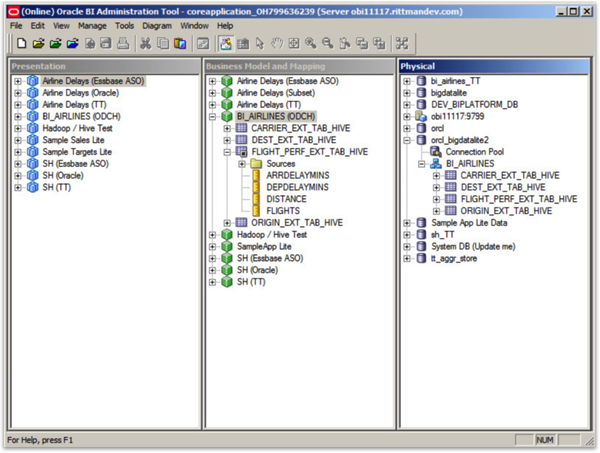

Ah, that’s more like it - the count this time was returned in just over a second, which is what you’d expect as Impala is optimised for ad-hoc queries, Hive for larger, batch-style SQL access and transformations. Moving over to OBIEE though, after creating a TNSNAMES connection through to the BigDataLite Oracle Database 12c database, I can import the external tables in just like any regular Oracle source:

Building the RPD against these tables is thereafter the same as any Oracle database source - no need to worry about Hive drivers, SQL dialects, Impala not being supported and so on.

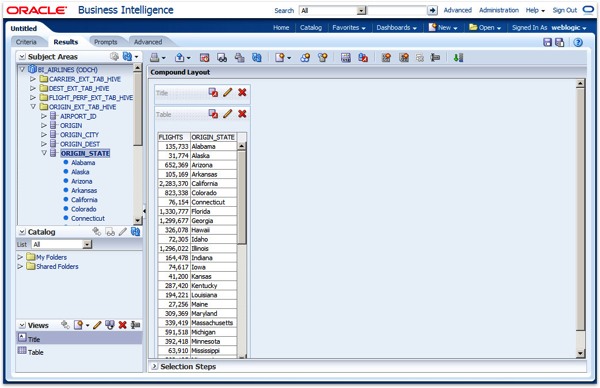

But … when you come to run queries, it’s slow. As slow as accessing Hadoop data via Hive, in the order of minutes to return a result set to the dashboard.

And this is what you’d expect if you connected OBIEE to a file data source, or an Oracle external table. There’s no indexes on the underlying tables, no aggregates and so forth, and you’re not benefiting from the query optimisations you’d get with technologies such as Impala, Stinger from Hortonworks or whatever. In reality, if what you’re after is the convenience of connecting to Oracle tables rather than Hive or Impala ones, you’d just use the external tables you created to then load the data into regular Oracle tables, and just query those.

What ODCH is really for is data extraction and ETL, it’s not a high-performance ad-hoc query tool, and realistically you’d only really use it like this for initial prototyping or if your use-case really suited direct query access to HDFS file data. So - back to Impala then for this type of Hadoop access, though it’s a neat feature to be aware of, particularly in the context of ODI and bulk-loading Hadoop data into Oracle.