Oracle Enterprise Data Quality Revisited

Recently I have be working with Oracle Enterprise Data Quality (EDQ) combined with Oracle Data Integrator (ODI), we will tell you more about exactly what we have been doing in another blog, but for now I would like to revisit our earlier EDQ blogs. I am going to keep to the batch ETL paradigm and not really look at the Real-Time aspects of Oracle EDQ.

My colleague, Koen Vantomme, blogged about EDQ back in August 2012, and Mark Rittman wrote about EDQ and ODI in December 2012. New versions of ODI (12c) and EDQ (11.1.7.3) have been released and this has introduced a few changes in the way we use the two products together. Before discussing that I will give a brief overview of what EDQ can do and three typical use cases we may wish to use in a data integration / data warehousing project.

The product name says it all. "Oracle Enterprise Data Quality” is Oracle’s Data Quality toolset for Enterprises. It provides a comprehensive set of functionality for data stewardship, data profiling and data correction (both realtime by use of web services and, more traditionally, in batch operations). Additional, added-cost, components can be added to provide functionality such as address verification, CRM address services and watch-list screening.

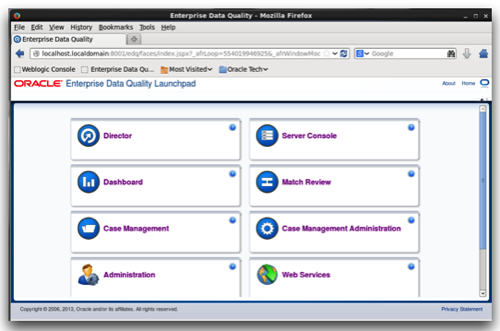

Out-of-the-box we get a web front end giving access to various applets.

As developers, we will probably use the Director applet most often. Director is a web-launch Oracle ADF applet and is the IDE for creating and managing EDQ processes and jobs. One or more processes can be chained together in an EDQ job and this job can be executed from the Director GUI, the command line or, as I will cover later, from inside an ODI package (and hence from an ODI scenario or loadplan).

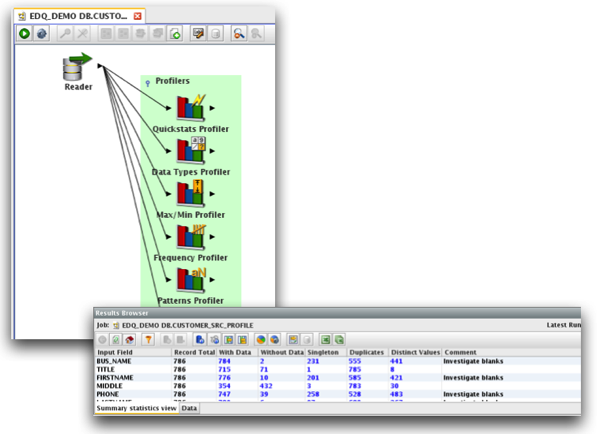

In the data warehousing world success is usually measured by whether we give the data users what they want. This usually boils down to being ’timely’ (are the data recent enough for their purposes?) and ‘correct’. Timely is about ETL process and load design. Correct, however, is mainly about data quality (although ETL logic flaws also play a part). Typically we see three kinds of issue:

- Incomplete: attributes or values that are missing. For example an order in process will not have a shipping date until the order is shipped; and in the case of a website data feed non-mandatory fields on a web form may be left blank.

- Incorrect: I have seen a e-retailer with a disproportionate number of customers from Albania, where Albania just happened to be the first country listed on the web-form country selector on the customer registration page.

- Inconsistent: the same data being presented in different ways so that we don’t always spot multiple records referring to the same entity. This can be simply an inconsistent use of letter case in a description or it can be more complex such as the use of synonyms and variant spellings; for example my father was William Scott, but he could appear as ‘Will Scott’, ‘Bill Scott’, ‘Wm Scott’, ‘W Scott’ or ‘W G Scott’ . However we can’t just blindly convert all cases of Billy to William as Billy could be a legitimate data value; we need to build more complex data rules about this. Sometimes inconsistent data is more physical in nature, for example one of our data sources may use a different character set and we see unexpected character conversions in our data.

Having profiled our data we are in a position to decide whether our ETL processes need to be written to handle anticipated data anomalies (of course we should always code defensively) or that we go back to the data source owners for improved data feeds. If we adopt the "handle anomalies" route we can use the EDQ tool set to help with this. Notice I say handle and not “fix” I am a strong believer that a data warehouse is the single source of truth and not the "place where truth is created” If we receive data that needs fixing we should separate it from the data suitable for loading and ideally return it to the data owner for fixing. We may do some automatic fix ups but only if the rules can be firmly agreed with the business and that we always maintain the original source data along side so we can go back if we find our ‘rule’ was incorrect.

Having profiled our data we are in a position to decide whether our ETL processes need to be written to handle anticipated data anomalies (of course we should always code defensively) or that we go back to the data source owners for improved data feeds. If we adopt the "handle anomalies" route we can use the EDQ tool set to help with this. Notice I say handle and not “fix” I am a strong believer that a data warehouse is the single source of truth and not the "place where truth is created” If we receive data that needs fixing we should separate it from the data suitable for loading and ideally return it to the data owner for fixing. We may do some automatic fix ups but only if the rules can be firmly agreed with the business and that we always maintain the original source data along side so we can go back if we find our ‘rule’ was incorrect.

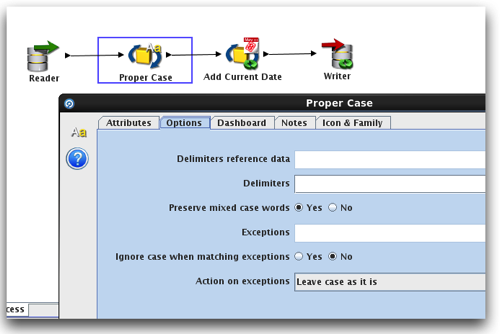

In the EDQ sample data (available on OTN for download) we find a customer CSV file where amongst other things the LastName column is highly inconsistent; some names in lowercase, some in uppercase, some in proper case (first character of words is uppercase). We can build out a simple process that does a proper case conversion on all of the data. We can be even smarter, and get it to ignore embedded uppercase so we don’t mess up names like McDonnell and DiCampo. In the real world we would possibly add an exceptions list to handle prefix words such as van, de and von which are traditionally in lower case. In my data correction task I have added another processor to add the current date to outward-bound data so we have a trail of when data was processed. The data written out also has both the original last name and the amended version.

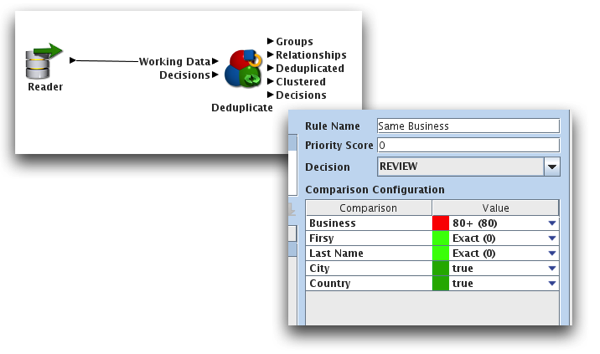

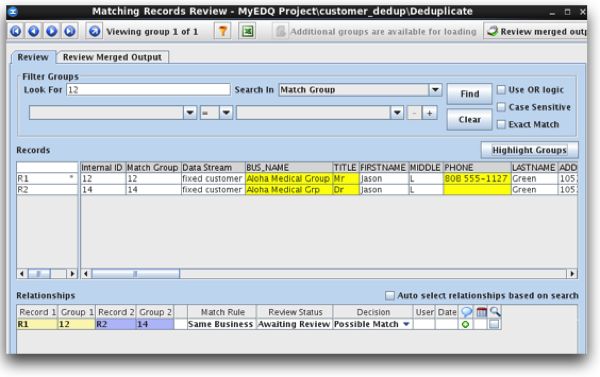

Sometimes (I’d say “mostly!") fixing data in the ETL is the wrong thing to do. In cases like these we can build a process using the Data Match processors and based on defined data criteria elect to process data, reject data or flag for further investigation. We can use these match operators as a trigger for instigating Oracle EDQ case management workflows or just use the inbuilt Director functionality to split good and rejected data and raise an email alert using the email processor. Using my customer data as an example we have several customers that are likely to be duplicates, with either variant versions of company name or address. We can build match rules and assign weights to the rule components so that certain conditions trigger matching, non-matching or review. Oracle EDQ supports some very sophisticated match algorithms, such as "within a given number of character substitutions” (aka typo matching) and "matching a percentage of words in a string".

We can review our results from the data match tool, in my case I had one record that my rules considered to be an exact match and 52 records that were flagged for human review a likely matches. Note we can highlight the discrepancies

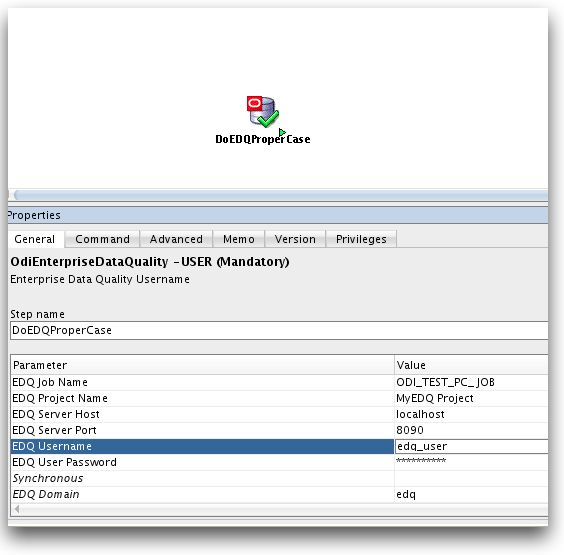

As I mentioned above we can call these EDQ jobs from the command line or as part of ODI packages. The simplest way is probably using the EDQ tool that has been available since ODI 11.1.1.6.

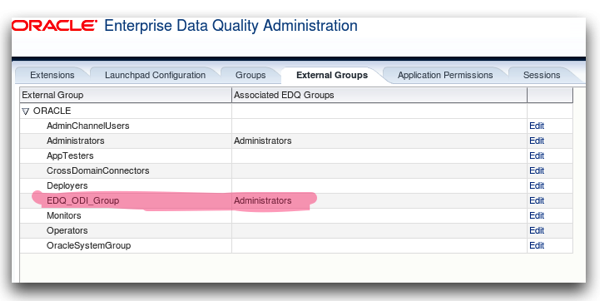

However, calling the EDQ command line interface from ODI is also feasible for earlier ODI versions or for more complex use cases that may not be supported by the tool (for example the use of execution parameters although this restriction can be worked around in other ways). To use the ODI tool we need to know some information about the EDQ instance, particularly the host, EDQ JMX server port (this is not the same as the browser url port) the user name and password, EDQ project and job names, and the EDQ domain. There are a few gotchas that are not clear from the documentation, but setting thus up is relatively easy. The biggest of these gotchas is that the EDQ domain name (that is the base mbean to which you connect) has changed in the current EDQ release from the default name provided by ODI, it is now edq. We can verify this base mbean name by using Java Console on the server and drilling into the EDQ server process. The JMX server port number has also changed (8090 is now the default on the EDQ server). We need to explicitly set these values on the ODI EDQ tool General Tab (the ODI defaults no longer work). There are two further restrictions we need be aware of, but these are probably unchanged from earlier releases; the execution user for EDQ must be a full EDQ Administration user and also that user must not be currently logged into EDQ on another connection. For my testing I created a Weblogic EDQ-USER user and an EDQ_ODI_GROUP group in the WebLogic Security Realm and mapped the WebLogic group to the EDQ Administrators role on the edq Administration web page. That is I have pushed user administration down to the WebLogic server.

Installing EDQ

Oracle EDQ installs on to an application server such as Apache Tomcat, IBM WebSphere and of course Oracle WebLogic. For this blog post I have downloaded the latest available versions of EDQ and ODI. It should be noted that WebLogic 12 is not supported by the current Oracle EDQ release. For my Application Server I am using a basic WebLogic 10.3.6 install. Download and run the EDQ Repository creation assistant to create required meta data and schemas on the repository database and then run the EDQ provided Oracle Universal Installer. When the base install is complete run the WebLogic configuration utility and extend the domain to include Oracle EDQ. Startup the services from the WebLogic console. If the EDQ url does not bring up the home page it may be that you need to make a fix to the node manager configuration (see MOS note 1617687.1) If you are using the latest Java 1.7 you will also probably need to change some Java security setting to allow the Java Web Start applets to launch. Do to this connect to the Java Control Panel and add the EDQ server and port to the exception site list.In a follow-up post we will take a look at some of the Director functionality in detail.