Using Sqoop for Loading Oracle Data into Hadoop on the BigDataLite VM

This is old hat for most Hadoop veterans, but I’ve been meaning to note it on the blog for a while, for anyone whose first encounter with Hadoop is Oracle’s BigDataLite VM.

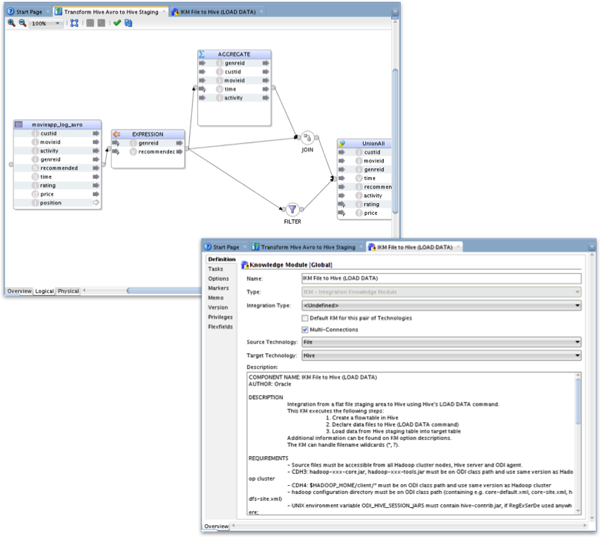

Most people looking to bring external data into Hadoop do so through flat-file exports that they then import into HDFS, using the “hadoop fs” command-line tool or Hue, the web-based developer tool in BigDataLite, Cloudera CDH, Hortonworks and so on. They then often create Hive tables over them, either from the Hive / Beeswax shell or through Hue, which can create a table for you out of a file you upload from your browser. ODI, through the ODI Application Adapter for Hadoop, also gives you a knowledge module (IKM File to Hive) that’s used in the ODI demos on BigDataLite to load data into Hive tables from an Apache Avro-format log file.

What a lot of people who’re new to Hadoop don’t know is that you can skip the “dump to file” step completely and load data into HDFS direct from the Oracle database, without an intermediate file export step. The tool you use for this comes as part of the Cloudera CDH4 Hadoop distribution that’s on BigDataLite, and it’s called “Sqoop”.

“Sqoop”, short for “SQL to Hadoop”, gives you the ability to do the following Oracle data transfer tasks, amongst others:

- Import whole tables, or whole schemas, from Oracle and other relational databases into Hadoop’s file system, HDFS

- Export data from HDFS back out to these databases - with the export and import being performed through MapReduce jobs

- Import using an arbitrary SQL SELECT statement, rather than grabbing whole tables

- Perform incremental loads, specifying a check column that determines which rows are new and need to be brought across

- Load directly into Hive tables, creating HDFS files in the background and the Hive metadata automatically

Documentation for Sqoop as shipped with CDH4 can be found on the Cloudera website here, and there are even optimisations and plugins for databases such as Oracle to enable faster, direct loads - for example OraOOP.

Normally, you’d need to download and install JDBC drivers for sqoop before you can use it, but BigDataLite comes with the required Oracle JDBC drivers, so let’s just have a play around and see some examples of Sqoop in action. I’ll start by importing the ACTIVITY table from the MOVIEDEMO schema that comes with the Oracle 12c database also on BigDataLite (make sure the database is running first, though):

[oracle@bigdatalite ~]$ sqoop import --connect jdbc:oracle:thin:@localhost:1521/orcl --username MOVIEDEMO --password welcome1 --table ACTIVITY

You should then see sqoop process your command in its console output, and then run the MapReduce jobs to bring in the data via the Oracle JDBC driver:

14/03/21 18:21:36 INFO sqoop.Sqoop: Running Sqoop version: 1.4.3-cdh4.5.0

14/03/21 18:21:36 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

14/03/21 18:21:37 INFO manager.SqlManager: Using default fetchSize of 1000

14/03/21 18:21:37 INFO tool.CodeGenTool: Beginning code generation

14/03/21 18:21:38 INFO manager.OracleManager: Time zone has been set to GMT

14/03/21 18:21:38 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM ACTIVITY t WHERE 1=0

14/03/21 18:21:38 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/lib/hadoop-0.20-mapreduce

14/03/21 18:21:38 INFO orm.CompilationManager: Found hadoop core jar at: /usr/lib/hadoop-0.20-mapreduce/hadoop-core.jar

Note: /tmp/sqoop-oracle/compile/b4949ed7f3e826839679143f5c8e23c1/ACTIVITY.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

14/03/21 18:21:41 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-oracle/compile/b4949ed7f3e826839679143f5c8e23c1/ACTIVITY.jar

14/03/21 18:21:41 INFO manager.OracleManager: Time zone has been set to GMT

14/03/21 18:21:41 INFO manager.OracleManager: Time zone has been set to GMT

14/03/21 18:21:42 INFO mapreduce.ImportJobBase: Beginning import of ACTIVITY

14/03/21 18:21:42 INFO manager.OracleManager: Time zone has been set to GMT

14/03/21 18:21:44 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

14/03/21 18:21:45 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(ACTIVITY_ID), MAX(ACTIVITY_ID) FROM ACTIVITY

14/03/21 18:21:45 INFO mapred.JobClient: Running job: job_201403111406_0015

14/03/21 18:21:47 INFO mapred.JobClient: map 0% reduce 0%

14/03/21 18:22:19 INFO mapred.JobClient: map 25% reduce 0%

14/03/21 18:22:27 INFO mapred.JobClient: map 50% reduce 0%

14/03/21 18:22:29 INFO mapred.JobClient: map 75% reduce 0%

14/03/21 18:22:37 INFO mapred.JobClient: map 100% reduce 0%

...

14/03/21 18:22:39 INFO mapred.JobClient: Map input records=11

14/03/21 18:22:39 INFO mapred.JobClient: Map output records=11

14/03/21 18:22:39 INFO mapred.JobClient: Input split bytes=464

14/03/21 18:22:39 INFO mapred.JobClient: Spilled Records=0

14/03/21 18:22:39 INFO mapred.JobClient: CPU time spent (ms)=3430

14/03/21 18:22:39 INFO mapred.JobClient: Physical memory (bytes) snapshot=506802176

14/03/21 18:22:39 INFO mapred.JobClient: Virtual memory (bytes) snapshot=2714157056

14/03/21 18:22:39 INFO mapred.JobClient: Total committed heap usage (bytes)=506724352

14/03/21 18:22:39 INFO mapreduce.ImportJobBase: Transferred 103 bytes in 56.4649 seconds (1.8241 bytes/sec)

14/03/21 18:22:39 INFO mapreduce.ImportJobBase: Retrieved 11 records.
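The WARN line near the top of that output is worth acting on: -P makes sqoop prompt for the password rather than taking it on the command line. Here’s a minimal sketch of the same import done that way, assuming the same connection details as above. The --target-dir path is a made-up name for illustration, and the wrapper function (also my own naming) only prints the command unless you set RUN=1, so you can check it before running it on the VM.

```shell
# Sketch only: prints the sqoop command by default; set RUN=1 to execute it.
activity_import() {
  # -P prompts for the password instead of passing it as an argument.
  # --target-dir is optional; /user/oracle/ACTIVITY_COPY is a hypothetical path.
  set -- sqoop import \
    --connect jdbc:oracle:thin:@localhost:1521/orcl \
    --username MOVIEDEMO -P \
    --table ACTIVITY \
    --target-dir /user/oracle/ACTIVITY_COPY

  if [ "${RUN:-0}" = "1" ]; then
    "$@"          # really run sqoop (needs the VM and a running database)
  else
    echo "$*"     # dry run: just show what would be executed
  fi
}

activity_import
```

Run with RUN=1 in the environment once the printed command looks right.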

By default, sqoop will put the resulting file in your user’s home directory in HDFS. Let’s take a look and see what’s there:

[oracle@bigdatalite ~]$ hadoop fs -ls /user/oracle/ACTIVITY

Found 6 items

-rw-r--r-- 1 oracle supergroup 0 2014-03-21 18:22 /user/oracle/ACTIVITY/_SUCCESS

drwxr-xr-x - oracle supergroup 0 2014-03-21 18:21 /user/oracle/ACTIVITY/_logs

-rw-r--r-- 1 oracle supergroup 27 2014-03-21 18:22 /user/oracle/ACTIVITY/part-m-00000

-rw-r--r-- 1 oracle supergroup 17 2014-03-21 18:22 /user/oracle/ACTIVITY/part-m-00001

-rw-r--r-- 1 oracle supergroup 24 2014-03-21 18:22 /user/oracle/ACTIVITY/part-m-00002

-rw-r--r-- 1 oracle supergroup 35 2014-03-21 18:22 /user/oracle/ACTIVITY/part-m-00003

[oracle@bigdatalite ~]$ hadoop fs -cat /user/oracle/ACTIVITY/part-m-00000

1,Rate

2,Completed

3,Pause

[oracle@bigdatalite ~]$ hadoop fs -cat /user/oracle/ACTIVITY/part-m-00001

4,Start

5,Browse

What you can see there is that sqoop has imported the data as a series of “part-m” files, CSV files with one per MapReduce map task (the import is a map-only job, which is why the log above never gets past “reduce 0%”). There are various options in the docs for specifying compression and other performance features for sqoop imports, but the basic format is a series of CSV files, one per mapper.
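If you’d rather control how many part files you get, or only want a subset of the rows, something along these lines should work. Treat it as a sketch rather than a tested recipe: the WHERE clause and target directory are made up for illustration, ACTIVITY_ID is the split column sqoop picked by itself in the log above, and the wrapper function (my own naming) just prints the command unless RUN=1 is set. With a free-form --query, sqoop requires the literal $CONDITIONS token in the WHERE clause and an explicit --target-dir.

```shell
# Sketch only: prints the sqoop command by default; set RUN=1 to execute it.
activity_query_import() {
  # Single quotes keep $CONDITIONS literal so that sqoop, not the shell,
  # replaces it with each task's ACTIVITY_ID range.
  # -m sets the number of parallel tasks (and so the number of part files);
  # --compress writes compressed output. The target path is hypothetical.
  set -- sqoop import \
    --connect jdbc:oracle:thin:@localhost:1521/orcl \
    --username MOVIEDEMO -P \
    --query 'SELECT * FROM ACTIVITY WHERE ACTIVITY_ID < 5 AND $CONDITIONS' \
    --split-by ACTIVITY_ID \
    -m 2 \
    --compress \
    --target-dir /user/oracle/ACTIVITY_SUBSET

  if [ "${RUN:-0}" = "1" ]; then
    "$@"          # really run sqoop (needs the VM and a running database)
  else
    echo "$*"     # dry run; note the printed form drops the quotes around the query
  fi
}

activity_query_import
```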

You can also import Oracle and other RDBMS data directly into Hive, with sqoop creating equivalent datatypes for the data coming in (basic datatypes only, none of the advanced spatial and other Oracle ones). For example, I could import the CREW table in the MOVIEDEMO schema in like this, directly into an equivalent Hive table:

[oracle@bigdatalite ~]$ sqoop import --connect jdbc:oracle:thin:@localhost:1521/orcl --username MOVIEDEMO --password welcome1 --table CREW --hive-import

Taking a look at Hive, I can then see the table that has been created, describe it and count the number of rows it contains:

[oracle@bigdatalite ~]$ hive

Logging initialized using configuration in jar:file:/usr/lib/hive/lib/hive-common-0.10.0-cdh4.5.0.jar!/hive-log4j.properties

Hive history file=/tmp/oracle/hive_job_log_effb9cb5-6617-49f4-97b5-b09cd56c5661_1747866494.txt

hive> desc CREW;

OK

crew_id double

name string

Time taken: 2.211 seconds

hive> select count(*) from CREW;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_201403111406_0017, Tracking URL = http://bigdatalite.localdomain:50030/jobdetails.jsp?jobid=job_201403111406_0017

Kill Command = /usr/lib/hadoop/bin/hadoop job -kill job_201403111406_0017

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2014-03-21 18:33:40,756 Stage-1 map = 0%, reduce = 0%

2014-03-21 18:33:46,797 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.74 sec

2014-03-21 18:33:47,812 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.74 sec

2014-03-21 18:33:48,821 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.74 sec

2014-03-21 18:33:49,907 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.74 sec

2014-03-21 18:33:50,916 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.74 sec

2014-03-21 18:33:51,929 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 1.75 sec

2014-03-21 18:33:52,942 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 1.75 sec

2014-03-21 18:33:53,951 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 1.75 sec

2014-03-21 18:33:54,961 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 1.75 sec

MapReduce Total cumulative CPU time: 1 seconds 750 msec

Ended Job = job_201403111406_0017

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 1.75 sec HDFS Read: 135111 HDFS Write: 5 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 750 msec

OK

6860

Time taken: 20.095 seconds
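Notice that crew_id came through as a double, which is how sqoop maps an Oracle NUMBER by default. If you’d prefer an integer type in Hive, the --map-column-hive option lets you override the mapping per column. The sketch below assumes CREW_ID only ever holds whole numbers (I haven’t checked that against the MOVIEDEMO data), uses --hive-overwrite to replace the rows already loaded, and, as a convenience of my own, only prints the command unless you set RUN=1.

```shell
# Sketch only: prints the sqoop command by default; set RUN=1 to execute it.
crew_hive_import() {
  # --hive-overwrite replaces the existing contents of the Hive table.
  # --map-column-hive overrides the default SQL-to-Hive type mapping;
  # BIGINT is an assumption that CREW_ID contains whole numbers only.
  set -- sqoop import \
    --connect jdbc:oracle:thin:@localhost:1521/orcl \
    --username MOVIEDEMO -P \
    --table CREW \
    --hive-import \
    --hive-overwrite \
    --map-column-hive CREW_ID=BIGINT

  if [ "${RUN:-0}" = "1" ]; then
    "$@"          # really run sqoop (needs the VM and a running database)
  else
    echo "$*"     # dry run: just show what would be executed
  fi
}

crew_hive_import
```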

I can even do an incremental import to bring in new rows, appending their contents to the existing ones in Hive/HDFS:

[oracle@bigdatalite ~]$ sqoop import --connect jdbc:oracle:thin:@localhost:1521/orcl --username MOVIEDEMO --password welcome1 --table CREW --hive-import --incremental append --check-column CREW_ID

The data I’ve now loaded can be processed by a tool such as ODI, or you can use a tool such as Pig to do some further analysis or number crunching, like this:

grunt> RAW_DATA = LOAD 'ACTIVITY' USING PigStorage(',') AS

grunt> (act_type: int, act_desc: chararray);

grunt> B = FILTER RAW_DATA by act_type < 5;

grunt> STORE B into 'FILTERED_ACTIVITIES' USING PigStorage(',');

the output of which is another file on HDFS. Finally, I can export this data back to my Oracle database using the sqoop export feature:

[oracle@bigdatalite ~]$ sqoop export --connect jdbc:oracle:thin:@localhost:1521/orcl --username MOVIEDEMO --password welcome1 --table ACTIVITY_FILTERED --export-dir FILTERED_ACTIVITIES

So there’s a lot more to sqoop than just this, including features and topics such as compression, transforming data and so on, but it’s a useful tool and also something you could call from the command-line, using an ODI Tool if you want to. Recent versions of Hue also come with a GUI for Sqoop, giving you the ability to create jobs graphically and also schedule them using Oozie, the Hadoop scheduler in CDH4.