End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.3 : Enhance with Oracle Reference Data via Sqoop, and CKMs

In the first two posts in this series, I used the software on the Oracle Big Data Appliance 3.0 to ingest web log data from the Rittman Mead blog server, parse and load that data into a Hive table, and then join that table to another to add details on post and author. Links to the posts in this series are below, and I’ll complete them as the series is posted this week:

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.1 : Flume to Initial Hive Table

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.2 : Hive Table Joins, Aggregation and Loading

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.3 : Enhance with Oracle Reference Data via Sqoop, and CKMs

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.4 : Transforming Data using Python & Hive Streaming

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.5 : Bulk Unload to Oracle

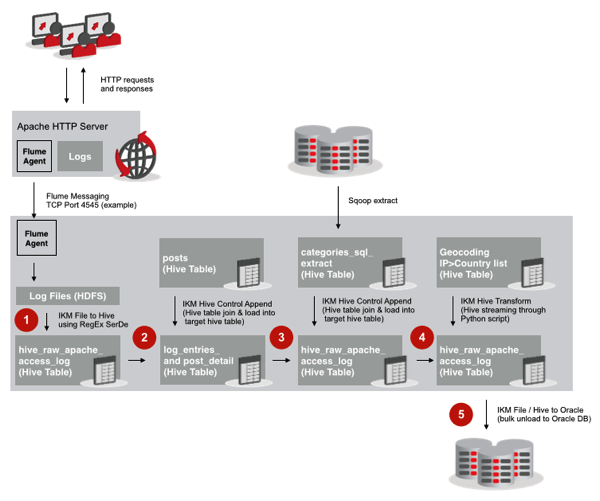

In this next step, I’ve got some data sitting in an Oracle database that I’d like to use to enhance the data in the Hadoop cluster; as a reminder, the diagram above shows how I’m looking to move data through the system, and we’re currently at step number three:

The data in the Oracle tables contains categories that I can join to the page entries that have come through the ETL process, and the join is pretty simple: POST_ID = POST_ID. But how do I get access to the Oracle data? I need to register the Oracle source in the ODI topology and create a model to represent the table, but can I then just join that table to the Hive table and load the results into another Hive table? Let’s try.

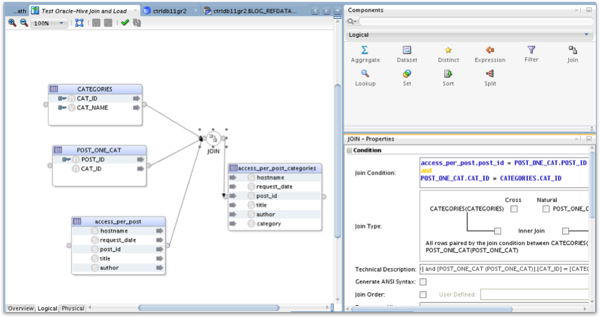

The screenshot below shows the mapping that would use this approach, with two Oracle tables joining to the Hive table and another Hive table as the target; on the right-hand side of the screen is the join condition, across both sources:

The Execution view shows how ODI intends to run the transformation, with LKM SQL to SQL KMs used to load the Oracle tables and stage their data in Hive, and then IKM Hive Control Append used to load the joined dataset into another Hive table.

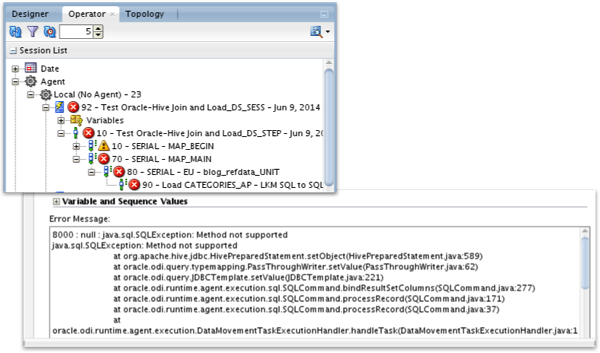

If you try to execute the mapping, though, it fails, because LKM SQL to SQL can’t work with Hive tables yet.

In fact, the various IKMs that come with Oracle Data Integrator Application Adapter for Hadoop (IKM File to Hive, IKM Hive Control Append etc.) are a bit of a mix of IKMs and LKMs, in that they contain extraction code and integration code in the same KM, and none of the regular LKMs and IKMs will otherwise work with Hadoop sources. What we need to do with ODI at this point is land the Oracle data in Hive first and then do the join, which raises a second question: how do we do that?

Currently, the only way to get Oracle data into Hadoop using the supplied KMs is via IKM File to Hive (LOAD DATA), which involves an unnecessary extra step of exporting the Oracle data to a file, and then loading that file into HDFS and Hive. What we can do though is use Sqoop, a tool in the Hadoop ecosystem for extracting from, and loading into, relational databases, something I covered on the blog a few weeks ago. Sqoop creates data loading and unloading jobs that run in parallel on the Hadoop cluster, and can use native JDBC drivers or even additional plugins such as Oraoop to make the process run faster (though Oracle Loader for Hadoop is considered the fastest way to unload to Oracle, if you’ve licensed the Big Data Adapters).

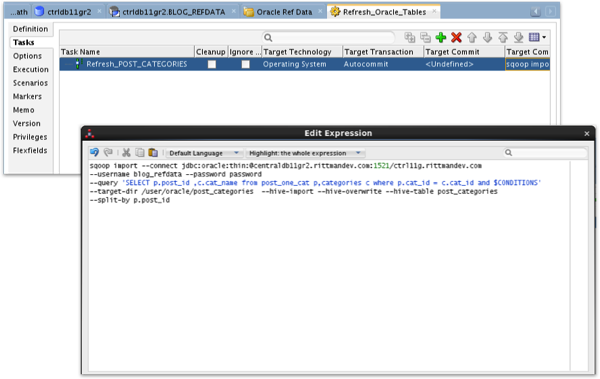

The only problem is that there’s no official support for Sqoop in ODI, so there are no KMs that make use of it. What you can do though is create a command-line script to run Sqoop and include that in an ODI procedure, which is what I’ll now do to bring my Oracle data into Hive. To do this, I create an ODI procedure and add a single task, using the Operating System (command-shell) technology type, and use it to tell Sqoop to create me a Hive table based on an SQL SELECT statement against my Oracle database:

(note that I had to format the sqoop command, in practice, as one line, to get it to run - the above listing is so that you can see all of the code.)
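To illustrate the one-line formatting point, here is a rough Python sketch that assembles a Sqoop import of that general shape from a readable argument list and collapses it to a single line suitable for pasting into an ODI procedure task. It is not the command used in this post: the host, service name, username, password file, table and column names, and HDFS path are all hypothetical placeholders. The Sqoop options shown (`--query`, `--split-by`, `--target-dir`, `--hive-import`, `--hive-table`, `--hive-overwrite`) are standard `sqoop import` arguments, and `shlex.join` needs Python 3.8 or later.

```python
import shlex

# Every value below (host, service, user, tables, columns, paths) is a
# hypothetical placeholder, not a value taken from this post.
sqoop_args = [
    "sqoop", "import",
    "--connect", "jdbc:oracle:thin:@//oracle-host.example.com:1521/orcl",
    "--username", "blog_refdata",
    "--password-file", "/user/oracle/.sqoop_pwd",
    # With --query, Sqoop expects the literal $CONDITIONS token in the
    # WHERE clause so it can split the work across mappers.
    "--query",
    "SELECT p.post_id, c.category_name "
    "FROM posts p JOIN categories c ON p.category_id = c.category_id "
    "WHERE $CONDITIONS",
    "--split-by", "p.post_id",
    # A free-form query also needs an explicit HDFS staging directory.
    "--target-dir", "/user/oracle/post_categories",
    # Create and load a Hive table from the imported files.
    "--hive-import",
    "--hive-table", "post_categories",
    "--hive-overwrite",
]

# shlex.join quotes each argument for the shell and joins them with spaces,
# so the SQL (including $CONDITIONS) survives as one single-quoted argument.
one_line_command = shlex.join(sqoop_args)
print(one_line_command)
```

Keeping the arguments in a list makes the command easy to read and edit, while the printed string is the single-line form that the command-shell task would actually run.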

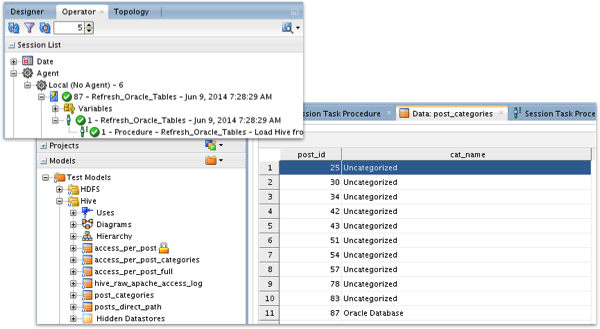

Executing this procedure works OK, and thereafter I’ve got a single Hive table containing the joined dataset from Oracle.

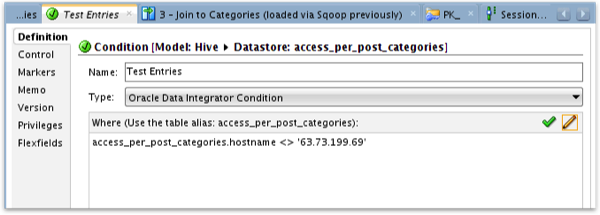

Joining this new Hive table to the previous one containing the distinct set of page views is then fairly straightforward, but something I’d also like to do is stop any entries going into the rest of the ETL process where the calling IP address is a test one we use, “63.73.199.69”. The way I can do this is to use the CKM Hive knowledge module and put a constraint on the hostname in the table I’ll be loading from the join, so that I can then use ODI’s flow control feature to divert those rows to an error table.
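Conceptually, flow control checks each incoming row against the constraint before it reaches the target, and sends failures to an error table instead. The Python sketch below only illustrates that idea; it is not the code CKM Hive generates (which runs as HiveQL on the cluster), and the sample rows and every column name other than the hostname are made up for the example.

```python
TEST_IP = "63.73.199.69"  # the test IP address mentioned in the post


def hostname_constraint(row):
    """Return True when the row satisfies the constraint (not the test IP)."""
    return row["hostname"] != TEST_IP


def flow_control(rows, constraint):
    """Split rows into (target_rows, error_rows) according to the constraint."""
    target_rows, error_rows = [], []
    for row in rows:
        if constraint(row):
            target_rows.append(row)
        else:
            error_rows.append(row)
    return target_rows, error_rows


# Hypothetical joined rows: weblog entries enriched with a category.
joined_rows = [
    {"hostname": "63.73.199.69", "post_id": 101, "category": "Oracle BI"},
    {"hostname": "203.0.113.10", "post_id": 101, "category": "Oracle BI"},
    {"hostname": "198.51.100.7", "post_id": 202, "category": "Big Data"},
]

target, errors = flow_control(joined_rows, hostname_constraint)
print(len(target), "rows for the target table;", len(errors), "diverted to the error table")
```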

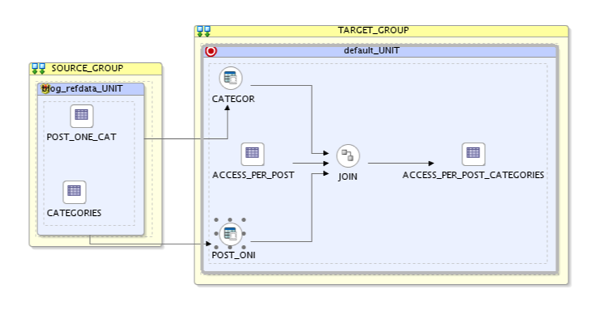

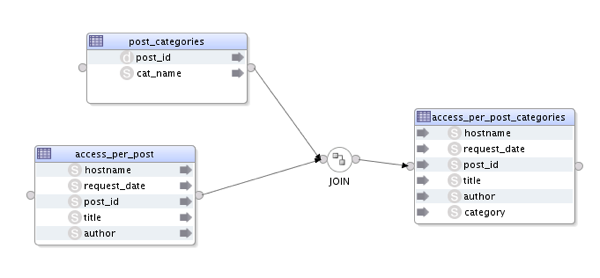

I also need to define a primary key for this table, something that’s mandatory when flow control is used. So let’s put the mapping together, joining the table I just brought in from Sqoop with the latest version of the weblog entries Hive table, loading into the Hive table I’ve just enabled the constraint for:

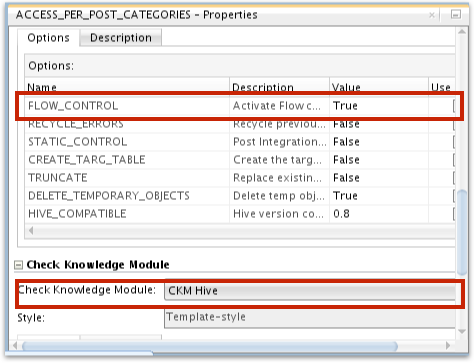

I then enable flow control, and the CKM Hive check knowledge module, in the Physical mapping settings.

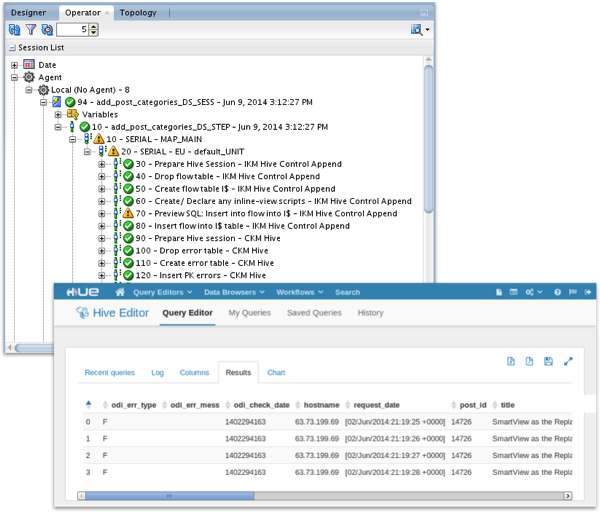

This is of course one of the benefits of using ODI to do your Hadoop data loading - you’ve got access to the data quality and error handling features that come with the tool. Then, when I execute the mapping and check with the Operator navigator, I can see the error handling process running, and afterwards in Hue I can see the contents of the new error table, which now contains those log entries where my test IP address was used, removing them from the target Hive table where they’d ordinarily have gone.

So that’s the third step in the ODI BDA ETL process complete. The next one’s a bit trickier though - I need to geocode the entries in the log table, assigning country names to each row based on where the IP address is located. More tomorrow.