Oracle OpenWorld 2015 Roundup Part 2: Data Integration and Big Data (in the Cloud...)

In yesterday’s part one of our three-part Oracle OpenWorld 2015 round-up, we looked at the launch of OBIEE12c just before OpenWorld itself, and the new Data Visualisation Cloud Service that Thomas Kurian demo’d in his mid-week keynote. In part two we’ll look at what happened around data integration both on-premise and in the cloud, along with big data - and as you’ll see they’re two topics that are very much linked this year.

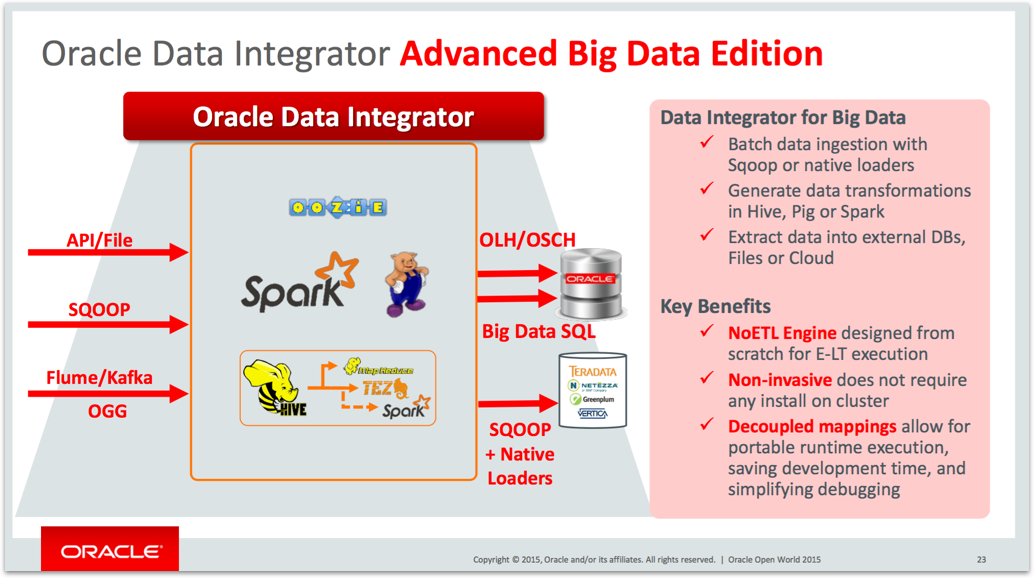

First off, data integration - and like OBIEE12c, ODI 12.2.1 got released a day or so before OpenWorld as part of the wider Oracle Fusion Middleware 12c Release 2 platform rollout. Some of what was coming in ODI 12.2.1 was back-ported to ODI 12.1 earlier in the year in the form of the ODI Enterprise Edition Big Data Options, and we covered the new capabilities it gave ODI for generating Pig and Spark mappings in a series of posts at the time. Adding Pig as an execution language gives ODI the ability to create dataflow-style mappings to go with Hive’s set-based transformations, whilst also opening up access to the wide range of Pig-specific UDF libraries such as DataFu for log analysis. Spark, in the meantime, can be useful for smaller in-memory data transformation jobs and, as we’ll see in a moment, lays the foundation for streaming and real-time ingestion capabilities.

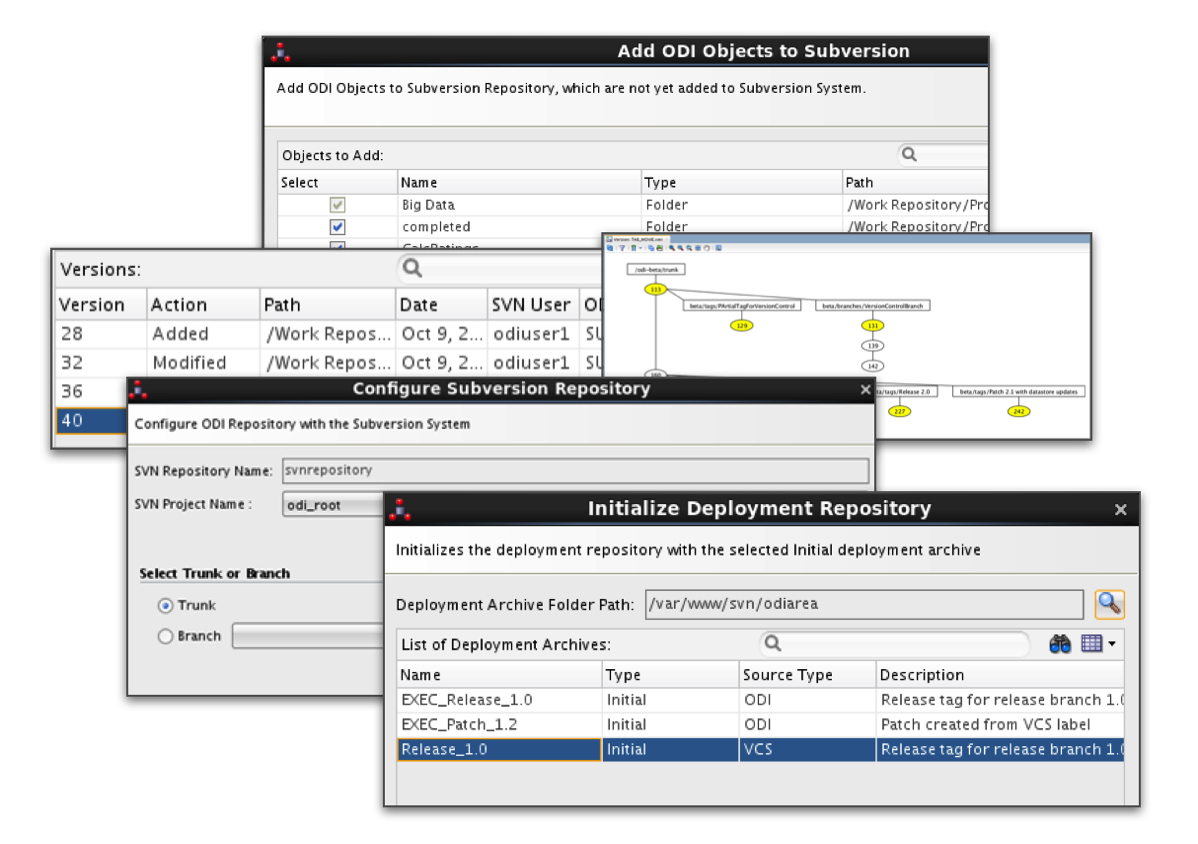
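
To make the dataflow-style versus set-based distinction a little more concrete, here is a minimal sketch in plain Python. It is not ODI, Pig or Hive code: the sample log records and both function names are made up for illustration, and SQLite simply stands in for a set-based SQL engine. The first function builds the result as a chain of named intermediate steps, much as a Pig script would, while the second expresses the same result as a single declarative query.

```python
import sqlite3
from collections import defaultdict

# Hypothetical web-log records: (user_name, url, bytes_sent)
LOGS = [
    ("alice", "/home", 120),
    ("bob", "/home", 300),
    ("alice", "/cart", 80),
    ("bob", "/cart", 0),
    ("carol", "/home", 50),
]


def dataflow_style(records):
    """Pig-like: a pipeline of named intermediate relations, one step at a time."""
    # Step 1: FILTER out empty responses
    filtered = [r for r in records if r[2] > 0]
    # Step 2: GROUP the filtered records by user
    grouped = defaultdict(list)
    for user_name, _url, bytes_sent in filtered:
        grouped[user_name].append(bytes_sent)
    # Step 3: for each group, generate (user, total bytes)
    return {user_name: sum(sizes) for user_name, sizes in grouped.items()}


def set_based_style(records):
    """Hive/SQL-like: one declarative statement over the whole set."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE logs (user_name TEXT, url TEXT, bytes_sent INTEGER)"
    )
    conn.executemany("INSERT INTO logs VALUES (?, ?, ?)", records)
    rows = conn.execute(
        "SELECT user_name, SUM(bytes_sent) FROM logs "
        "WHERE bytes_sent > 0 GROUP BY user_name"
    ).fetchall()
    conn.close()
    return dict(rows)


if __name__ == "__main__":
    print(dataflow_style(LOGS))
    print(set_based_style(LOGS))
```

Both functions return the same totals per user; the difference is only in how the transformation is expressed. The stepwise form makes it easy to branch off or inspect an intermediate result, which is the kind of thing a dataflow-style mapping is good at.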

The other key feature that ODI 12.2.1 provides though is better integration with external source control systems. ODI already has some element of version control built in, but as it’s based around ODI’s own repository database tables it’s hard to integrate with more commonly-used enterprise source control tools such as Subversion or Git, and there’s no standard way to handle development concepts like branching, merging and so on. ODI 12.2.1 adds these concepts into core ODI and initially focuses on SVN as the external source control tool, with Git support planned in the near future.

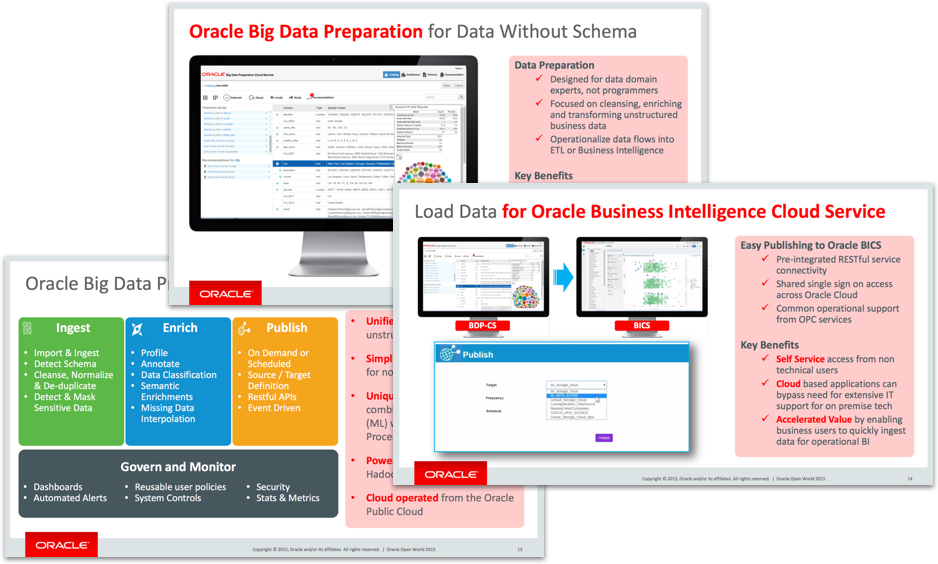

Updates to GoldenGate, Enterprise Data Quality and Enterprise Metadata Management were also announced, whilst Oracle Big Data Preparation Cloud Service got its first proper outing since its release earlier in the year. Big Data Preparation Cloud Service (BDP for short) to my mind suffers a bit from confusion over what it does and what market it serves - at some points it’s been positioned as a tool for the “citizen data scientist”, as it enables data domain experts to wrangle and prepare data for loading into Hadoop, whilst at other times it’s labelled a tool for production data transformation jobs under the control of IT. What is misleading is the “big data” label - it runs on Hadoop and Spark but it’s not limited to big data use-cases, and as the slides below show it’s a great option for loading data into BI Cloud Service as an alternative to more IT-centric tools such as ODI.

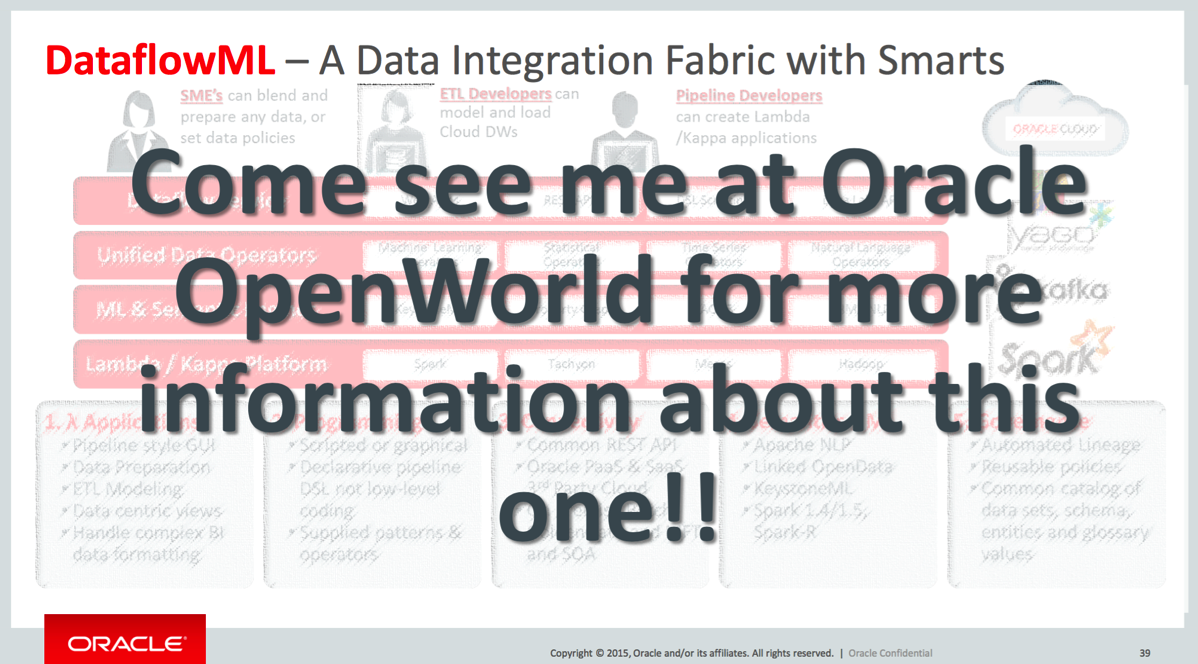

It was another announcement at OpenWorld though that made Big Data Prep Service suddenly make a lot more sense - a new initiative called Dataflow ML, something Oracle describe as “ETL 2.0”, with an entirely cloud-based architecture and heavy use of machine learning (the “ML” in “Dataflow ML”) to automate much of the profiling and discovery process - the key innovation in Big Data Prep Service.

It’s early days for Dataflow ML but clearly this is the direction Oracle will want to take as applications and platforms move to the cloud. I called out ODI’s unsuitability for running in the cloud a couple of years ago and contrasted its architecture with that of cloud-native tools such as SnapLogic, and Dataflow ML is obviously Oracle’s bid to move data integration into the cloud. Couple that with innovations around Spark as the data processing platform and machine learning to automate routine tasks and it sounds like it could be a winner - watch this space, as they say.

So the other area I wanted to cover in this second of three update pieces was big data. All of the key big data announcements from Oracle came at last year’s OpenWorld - Big Data Discovery, Big Data SQL and Big Data Prep Service (or Oracle Data Enrichment Cloud Service as it was called back then) - and this year’s event saw updates announced to Big Data SQL (Storage Indexes) and Big Data Discovery (general fit-and-finish enhancements). What is probably more significant though is the imminent availability of all this - plus Oracle Big Data Appliance - in Oracle’s Public Cloud.

Most big data PoCs I see outside of Oracle start on Amazon AWS and build out from there - starting at very low cost and moving from Amazon Elastic MapReduce to Cloudera CDH (via Cloudera Director), for example, or going from cloud to on-premise as the project moves into production. Oracle’s Big Data Cloud Service takes a different approach - rather than using a shared cloud infrastructure and potentially missing the point of Hadoop (single-user access to lots of machines, vs. cloud’s timeshared access to slices of machines), Oracle effectively lease you a Big Data Appliance along with a bundle of software; the benefit is performance, but with quite a high startup cost vs. starting small with AWS.

The market will tell which approach gets most traction over time, but where Big Data Cloud Service does help tools like Big Data Discovery is that there are many more opportunities for integration, and customers will be much more open to an Oracle tool solution than those building on commodity hardware and community Hadoop distributions. To my mind every Big Data Cloud Service customer ought to buy BDD and most probably Big Data Prep Service, so as customers adopt cloud as a platform option for big data projects I’d expect an uptick in sales of Oracle’s big data tools.

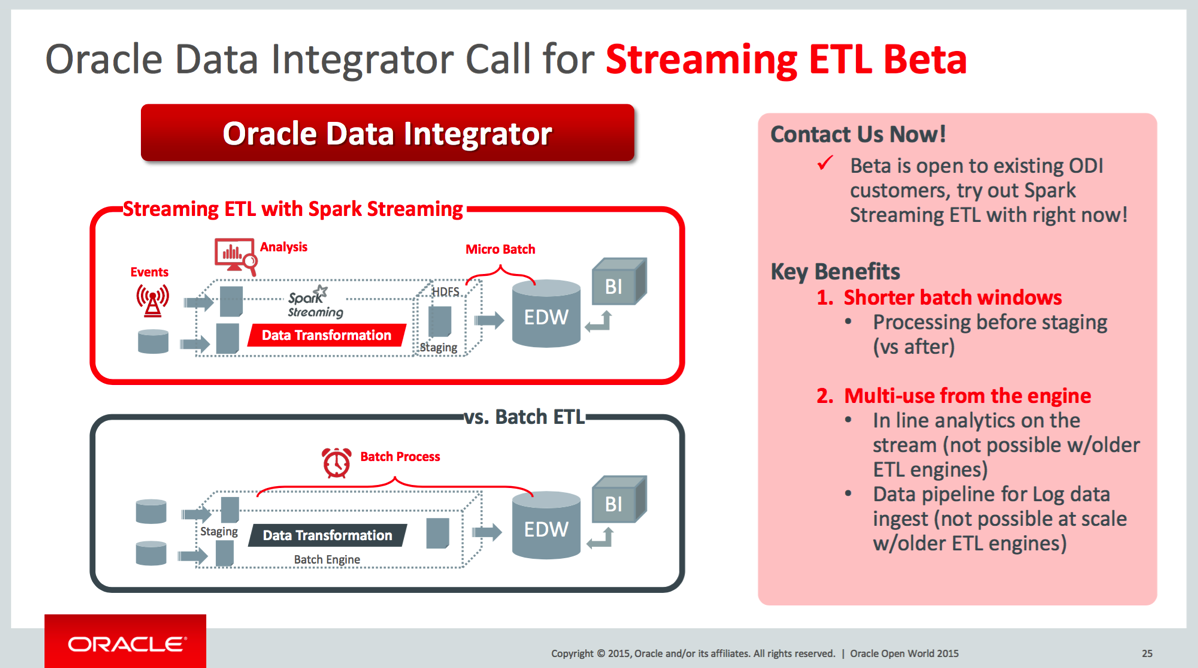

On a related topic and looping back to Oracle Data Integration, the other announcement in this area that was interesting was around Spark Streaming support in Oracle Data Integrator 12c.

ODI12c has got some great batch-style capabilities around Hadoop, but as I talked about earlier in the year in an article on Flume, Morphlines and Cloudera Search, the market is all about real-time data ingestion now; batch is more for one-off historical data loads. Again, like Dataflow ML, this feature is in beta and probably won’t be out for many months, but when it comes out it’ll complete ODI’s capabilities around big data ingestion - we’re hoping to take part in the beta so keep an eye on the blog for news as it comes out.

So that’s it for part 2 of our Oracle OpenWorld 2015 update - we’ll complete the series tomorrow with a look at Oracle BI Applications, Oracle Database 12cR2 “sharding” and something very interesting planned for a future Oracle 12c database release - “Analytic Views”.