Source Control and Automated Code Deployment Options for OBIEE

It's Monday morning. I've arrived at a customer site to help them - ironically enough - with automating their OBIEE code management. But, on arrival, I'm told that the OBIEE team can't meet with me because someone did a release on the previous Friday and has now gone on holiday - and the wrong code was released, but nobody knows which version. All hands on deck, panic stations!

This actually happened to me, and in recent months too. In this kind of situation hindsight gives us 20:20 vision, and of course there shouldn't be a single point of failure, of course code should be under version control, of course it should be automated to reduce the risk of problems during deployments. But in practice, these things often don't get done - and it's understandable why. In the very early days of a project, it will be a manual process because that's what is necessary as people get used to the tools and technology. As time goes by, project deadlines come up, and tasks like this are seen as "zero sum" - sure we can automate it, but we can also continue doing it manually and things will still get done, code will still get released. After a while, it's just accepted as how things are done. In effect, it is technical debt - and this is your reminder that debt has to be paid, sooner or later :)

I'll not pretend that managing OBIEE code in source control, and automating code deployments, is straightforward. But, it is necessary, so in this post I'll walk through why you should be doing it, and then importantly how.

Why Source Control?

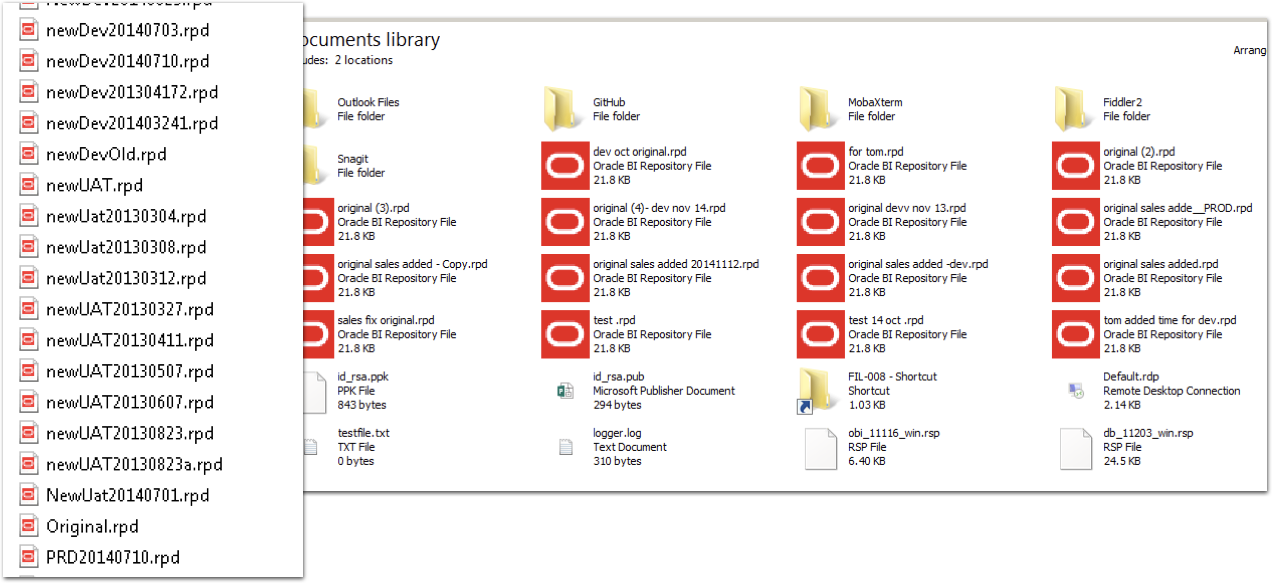

Do we really need source control for OBIEE? After all, what's wrong with the tried-and-tested method of sticking it all in a folder like this?

What's wrong with this? What's right with this? Oh lack of source control, let me count the number of ways that I doth hate thee:

- No audit trail of who changed something

- No audit of what was changed, and when

- No enforceable naming standards for versions

- No secure way of identifying deployment candidates

- No distributed method for sharing code (don't tell me that a network share counts!)

- No way of reliably identifying the latest version of code

These range from the immediately practical through to the slightly more abstract but necessary in a mature deployment.

Of immediate impact is the simple ability to identify the latest version of code on which to make new changes. Download the copy from the live server? Really? No. If you're tracking your versions accurately and reliably then you simply pull the latest version of code from source control, in the knowledge that it is the version that is live. No monkeying around trying to figure out if it really is (just because it's called "PROD-091216.rpd" how do you know that's actually what got released to Production? And was that on 9th December or 12th September? Who knows!).
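One way to make "which version is live?" answerable is to tag each release in source control. The following is a minimal sketch using git, assuming the RPD is committed as `obiee.rpd` at the root of the repository. The function names and the `<environment>-<ISO date>` tag convention are illustrative assumptions, not something OBIEE or git prescribes.

```shell
# Sketch only: the tag convention and the file name obiee.rpd are assumptions.

# Build an unambiguous tag name such as prod-2016-12-09.
# $1 = environment, $2 = optional ISO date (defaults to today)
release_tag() {
  printf '%s-%s\n' "$1" "${2:-$(date +%Y-%m-%d)}"
}

# Record that the current commit is what was released to an environment.
# $1 = environment
tag_release() {
  git tag -a "$(release_tag "$1")" -m "Released to $1"
}

# Print the most recent release tag for an environment reachable from HEAD.
# $1 = environment
latest_release() {
  git describe --tags --match "$1-*" --abbrev=0
}

# Write the RPD exactly as it was at a given release tag to a file.
# $1 = tag, $2 = output file
rpd_at_release() {
  git show "$1:obiee.rpd" > "$2"
}
```

After a release you would run `tag_release prod`, and before starting new work `latest_release prod` tells you which tag is live. With this naming, two releases to the same environment on the same day would need a suffix, since git refuses to create a tag that already exists.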

Longer term, having a secure and auditable code line simply makes it easier and less risky to manage. It also gives you the ability to work with it in a much more flexible manner, such as genuine concurrent development by multiple developers against the RPD. You can read more about this in my presentation here.

Which Source Control?

I don't care. Not really. So long as you are using source control, I am happy.

For preference, I always advocate using git. It is a modern platform, with strong support from many desktop clients (SourceTree is my favourite, along with the command line too, natch). Git is decentralised, meaning that you can commit and branch code locally on your own machine without having to be connected to a server. It supports a powerful fork and pull process too, which is part of the reason it has almost universal usage within the open source world. The most well known of git platforms is GitHub, which in effect provides git as a Platform-as-a-service (PaaS), in a similar fashion to Bitbucket too. You can also run git on its own locally, or more pragmatically, with GitLab.

But if you're using Subversion (SVN), Perforce, or whatever - that's fine. The key thing is that you understand how to use it, and that it is supported within your organisation. For simple source control, pretty much all the common platforms work just fine. If you get onto more advanced use, such as feature-branches and concurrent development, you may find it worth ensuring that your chosen platform supports the workflow that you adopt. Even then, whilst I'd choose git for preference, at Rittman Mead we've helped clients develop very powerful concurrent development processes with Subversion providing the underlying source control.

What Goes into Source Control? Part 1

So you've drunk the Source Control koolaid, and accepted that really there is no excuse not to use it. So what do you put into it? The RPD? The OBIEE 12c BAR file? What if you're still on OBIEE 11g? The answer here depends partially on how you are planning to manage code deployment in your environment. For a fully automated solution, you may opt to store code in a more granular fashion than if you are simply migrating full BAR files each time. So, read on to understand about code deployment, and then we'll revisit this question again after that.

How Do You Deploy Code Changes in OBIEE?

The core code artefacts are the same between OBIEE 11g and OBIEE 12c, so I'll cover both in this article, pointing out as we go any differences.

The biggest difference with OBIEE 12c is the concept of the "Service Instance", in which the pieces for the "analytical application" are clearly defined and made portable. These components are:

- Metadata model (RPD)

- Presentation Catalog ("WebCat"), holding all analysis and dashboard definitions

- Security - Application Roles and Policy grants, as well as OBIEE front-end privilege grants

Part of this is laying the foundations for what has been termed "Pluggable BI", in which 'applications' can be deployed with customisations layered on top of them. In the current (December 2016) version of OBIEE 12c we have just the Single Service Instance (ssi). Service Instances can be exported and imported to BI Archive files, known as BAR files.

The documentation for OBIEE environment migrations (known as "T2P" - Test to Production) in 12c is here. Hopefully I won't be thought too rude for saying that there is scope for expanding on it, clarifying a few points - and perhaps making more of the somewhat innocuous remark partway down the page:

PROD Service Instance metadata will be replaced with TEST metadata.

Hands up who reads the manual fully before using a product? Hands up who is going to get a shock when they destroy their Production presentation catalog after importing a service instance?...

Let's take a walk through the three main code artefacts, and how to manage each one, starting with the RPD.

The RPD

The complication with deployments of the RPD is that the RPD differs between environments because of different connection pool details, and occasionally repository variable values too.

If you are not changing connection pool passwords between environments, or if you are changing anything else in your RPD (e.g. making actual model changes) between environments, then you probably shouldn't be. It's a security risk to not have different passwords, and it's bad software development practice to make code changes other than in your development environment. Perhaps you've valid reasons for doing it... perhaps not. But bear in mind that many test processes and validations are based on the premise that code will not change after being moved out of dev.

With OBIEE 12c, there are two options for managing deployment of the RPD:

- BAR file deploy and then connection pool update

- Offline RPD patch with connection pool updates, and then deploy

  - This approach is valid for OBIEE 11g too

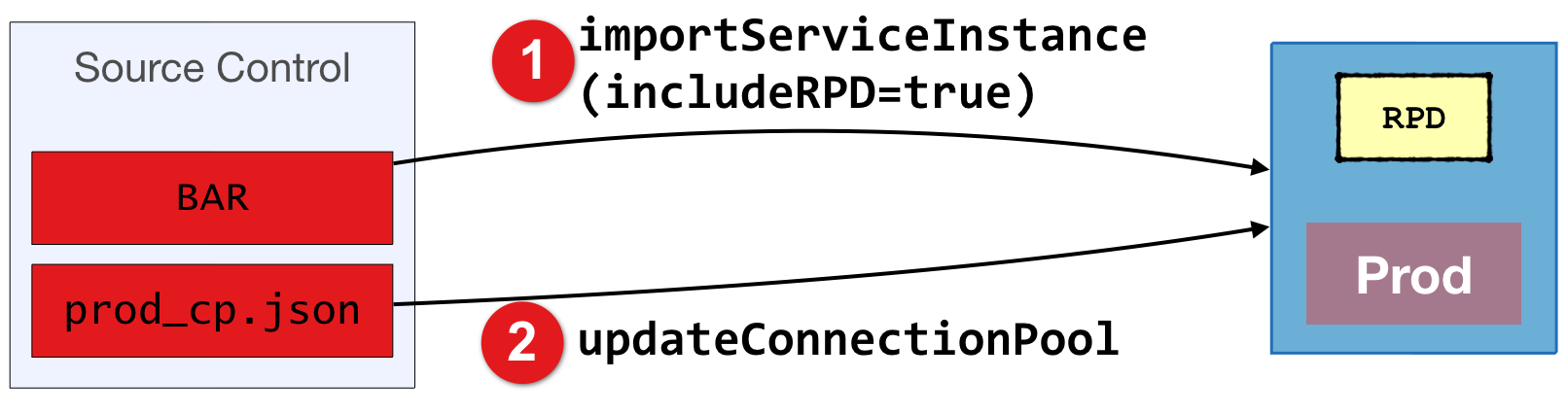

RPD Deployment in OBIEE 12c - Option 1

This is based on the service instance / BAR concept. It is therefore only valid for OBIEE 12c.

1. One-off setup: Using `listconnectionpool` to create a JSON connection pool configuration file per target environment. Store each of these files in source control.

2. Once code is ready for promotion from Development, run `exportServiceInstance` to create a BAR file. Commit this BAR file to source control.

       /app/oracle/biee/oracle_common/common/bin/wlst.sh <<EOF
       exportServiceInstance('/app/oracle/biee/user_projects/domains/bi/','ssi','/home/oracle','/home/oracle')
       EOF

3. To deploy the updated code to the target environment:

   1. Checkout the BAR from source control

   2. Deploy it with `importServiceInstance`, ensuring that the `importRPD` flag is set.

          /app/oracle/biee/oracle_common/common/bin/wlst.sh <<EOF
          importServiceInstance('/app/oracle/biee/user_projects/domains/bi','ssi','/home/oracle/ssi.bar',true,false,false)
          EOF

   3. Run `updateConnectionPool` using the configuration file from source control for the target environment to set the connection pool credentials

          /app/oracle/biee/user_projects/domains/bi/bitools/bin/datamodel.sh updateconnectionpool -C ~/prod_cp.json -U weblogic -P Admin123 -SI ssi

      Note that your OBIEE system will not be able to connect to source databases to retrieve data until you update the connection pools.

   4. The BI Server should pick up the new RPD after a few minutes. You can force this by restarting the BI Server, or using "Reload Metadata" from OBIEE front end.

Whilst you can also create the BAR file with includeCredentials, you wouldn't use this for migration of code between environments - because you don't have the same connection pool database passwords in each environment. If you do have the same passwords then change it now - this is a big security risk.

The above BAR approach works fine, but be aware that if the deployed RPD is activated on the BI Server before you have updated the connection pools (step 3 above) then the BI Server will not be able to connect to the data sources and your end users will see an error. This approach is also based on storing the BAR file as a whole in source control, when for preference we'd store the RPD as a standalone binary if we want to be able to do concurrent development with it.
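The Option 1 deployment steps can be strung together in a small wrapper script. This is a sketch under assumptions rather than a supported tool: the paths and flags are copied from the examples above, while the function names, the `DRY_RUN` switch, the `OBIEE_USER` / `OBIEE_PASSWORD` variables and the `<env>_cp.json` file naming are made up for illustration.

```shell
# Sketch only. Paths and flags come from the examples above; names are assumptions.
DOMAIN_HOME=${DOMAIN_HOME:-/app/oracle/biee/user_projects/domains/bi}
WLST=${WLST:-/app/oracle/biee/oracle_common/common/bin/wlst.sh}
DRY_RUN=${DRY_RUN:-1}   # 1 = print what would be run, anything else = run it

# Print the WLST statement that imports only the RPD from a BAR file
# (true,false,false as in the example above).
# $1 = path to the BAR file
import_rpd_statement() {
  printf "importServiceInstance('%s','ssi','%s',true,false,false)\n" \
    "$DOMAIN_HOME" "$1"
}

# Import the RPD from the BAR, then set the connection pools.
# $1 = environment name (e.g. prod), $2 = path to the BAR file
deploy_bar() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "wlst: $(import_rpd_statement "$2")"
    echo "datamodel.sh updateconnectionpool -C ${1}_cp.json -SI ssi"
    return 0
  fi
  # Assumes wlst.sh exits non-zero on failure; if it does, we stop before
  # touching the connection pools.
  import_rpd_statement "$2" | "$WLST" || return 1
  "$DOMAIN_HOME/bitools/bin/datamodel.sh" updateconnectionpool \
    -C "${1}_cp.json" -U "$OBIEE_USER" -P "$OBIEE_PASSWORD" -SI ssi
}
```

Running `deploy_bar prod /home/oracle/ssi.bar` with the default `DRY_RUN=1` only prints the two steps, which is a cheap way to review a release before setting `DRY_RUN=0`. Keeping both steps in one function narrows the window in which the new RPD is live without valid connection pools, but it does not remove it.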

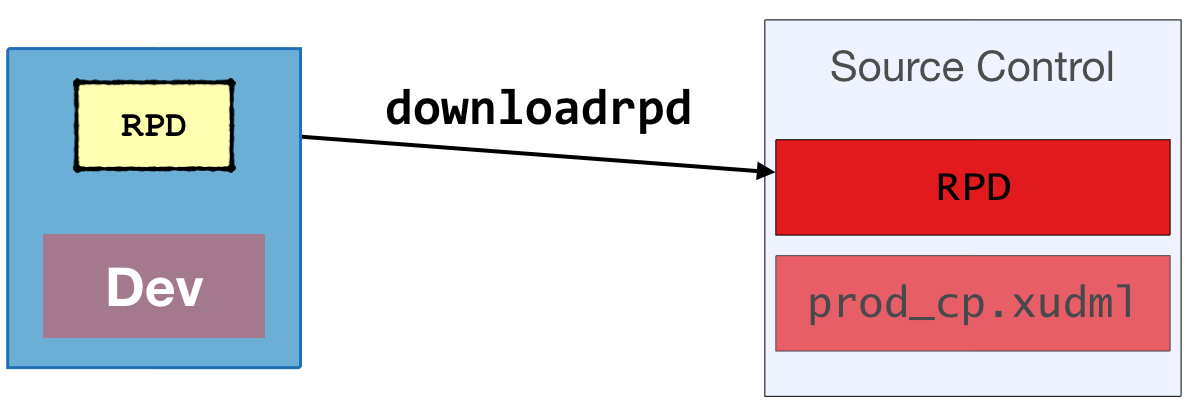

RPD Deployment in OBIEE 12c - Option 2 (also valid for OBIEE 11g)

This approach takes the RPD on its own, and takes advantage of OBIEE's patching capabilities to prepare RPDs for the target environment prior to deployment.

1. One-off setup: create a XUDML patch file for each target environment. Do this by:

   1. Take your development RPD (e.g. "DEV.rpd"), and clone it (e.g. "PROD.rpd")

   2. Open the cloned RPD (e.g. "PROD.rpd") offline in the Administration Tool. Update it only for the target environment - nothing else. This should be all connection pool passwords, and could also include connection pool DSNs and/or users, depending on how your data sources are configured. Save the RPD.

   3. Using `comparerpd`, create a XUDML patch file for your target environment:

          /app/oracle/biee/user_projects/domains/bi/bitools/bin/comparerpd.sh \
          -P Admin123 \
          -W Admin123 \
          -G ~/DEV.rpd \
          -C ~/PROD.rpd \
          -D ~/prod_cp.xudml

   Repeat the above process for each target environment

2. Once code is ready for promotion from Development:

   1. Extract the RPD

      - In OBIEE 12c use [`downloadrpd`](http://docs.oracle.com/middleware/1221/biee/BIEMG/planning.htm#BIEMG4668) to obtain the RPD file

            /app/oracle/biee/user_projects/domains/bi/bitools/bin/datamodel.sh \
            downloadrpd \
            -O /home/oracle/obiee.rpd \
            -W Admin123 \
            -U weblogic \
            -P Admin123 \
            -SI ssi

      - In OBIEE 11g copy the file from the server filesystem

   2. Commit the RPD to source control

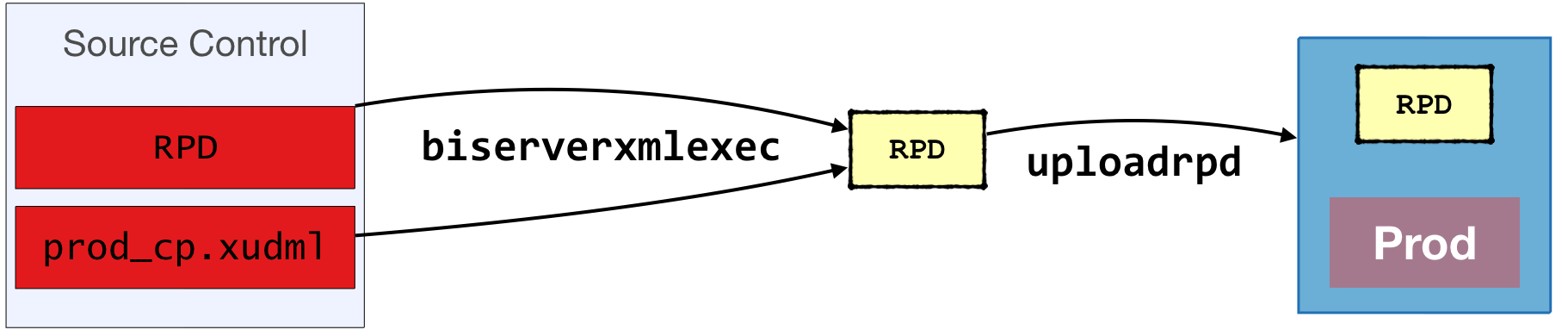

3. To deploy the updated code to the target environment:

   1. Checkout the RPD from source control

   2. Prepare it for the target environment by applying the patch created above

      1. Check out the XUDML patch file for the appropriate environment from source control

      2. Apply the patch file using `biserverxmlexec`:

             /app/oracle/biee/user_projects/domains/bi/bitools/bin/biserverxmlexec.sh \
             -P Admin123 \
             -S Admin123 \
             -I prod_cp.xudml \
             -B obiee.rpd \
             -O /tmp/prod.rpd

   3. Deploy the patched RPD file

      - In OBIEE 12c use `uploadrpd`

            /app/oracle/biee/user_projects/domains/bi/bitools/bin/datamodel.sh \
            uploadrpd \
            -I /tmp/prod.rpd \
            -W Admin123 \
            -U weblogic \
            -P Admin123 \
            -SI ssi \
            -D

        The RPD is available straightaway. No BI Server restart is needed.

      - In OBIEE 11g use WLST's `uploadRepository` to programmatically do this, or manually from EM.

        After deploying the RPD in OBIEE 11g, you need to restart the BI Server.

This approach is the best (only) option for OBIEE 11g. For OBIEE 12c I also prefer it as it is 'lighter' than a full BAR, more solid in terms of connection pools (since they're set prior to deployment, not after), and it enables greater flexibility in terms of RPD changes during migration since any RPD change can be encompassed in the patch file.

Note that the OBIEE 12c product manual states that uploadrpd/downloadrpd are for:

"...repository diagnostic and development purposes such as testing, only ... all other repository development and maintenance situations, you should use BAR to utilize BAR's repository upgrade and patching capabilities and benefits.".

Maybe in the future the BAR capabilities will extend beyond what they currently do - but as of now, I've yet to see a definitive reason to use them and not uploadrpd/downloadrpd.
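For Option 2, the one-off patch creation and the per-release patch-and-upload lend themselves to a pair of small shell functions. This is a rough sketch rather than a finished tool: the flags are exactly those used in the commands above, whereas the function names, the `DRY_RUN` switch, the `RPD_PASSWORD` / `OBIEE_USER` / `OBIEE_PASSWORD` variables and the `<env>_cp.xudml` naming are assumptions for illustration.

```shell
# Sketch only. Flags are those used in the examples above; names are assumptions.
BITOOLS=${BITOOLS:-/app/oracle/biee/user_projects/domains/bi/bitools/bin}
DRY_RUN=${DRY_RUN:-1}   # 1 = print commands instead of running them

# Run a command, or just print it when DRY_RUN=1.
# Note that the printed line includes any passwords passed as arguments.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# One-off: create the XUDML patch for an environment.
# $1 = environment name (e.g. prod), $2 = development RPD, $3 = edited clone
make_patch() {
  run "$BITOOLS/comparerpd.sh" -P "$RPD_PASSWORD" -W "$RPD_PASSWORD" \
    -G "$2" -C "$3" -D "${1}_cp.xudml"
}

# Per release (12c): patch the RPD for the environment, then upload it.
# $1 = environment name, $2 = RPD checked out from source control
patch_and_upload() {
  run "$BITOOLS/biserverxmlexec.sh" -P "$RPD_PASSWORD" -S "$RPD_PASSWORD" \
    -I "${1}_cp.xudml" -B "$2" -O "/tmp/${1}.rpd" || return 1
  run "$BITOOLS/datamodel.sh" uploadrpd -I "/tmp/${1}.rpd" \
    -W "$RPD_PASSWORD" -U "$OBIEE_USER" -P "$OBIEE_PASSWORD" -SI ssi -D
}
```

For example, `make_patch prod DEV.rpd PROD.rpd` would be run once per environment, and `patch_and_upload prod obiee.rpd` on each release. Because the patch is applied before the upload, a failed patch stops the deployment before anything reaches the target server.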

The Presentation Catalog ("WebCat")

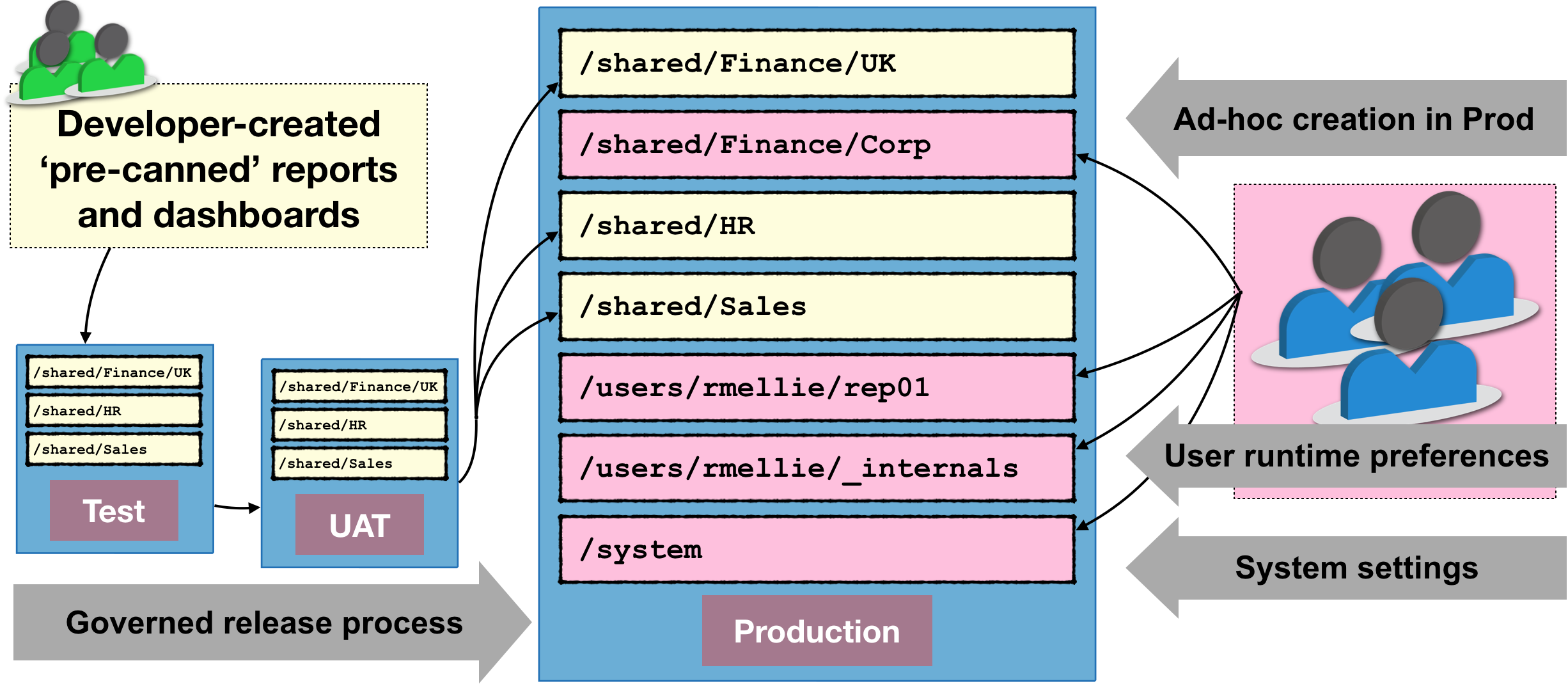

The Presentation Catalog stores the definition of all analyses and dashboards in OBIEE, along with supporting objects including Filters, Conditions, and Agents. It differs significantly from the RPD when it comes to environment migrations. The RPD can be seen in more traditional software development lifecycle terms, since it is built and developed in Development, and when deployed to a subsequent environment it overwrites in its entirety what is currently there. However, the Presentation Catalog is not so simple.

Commonly, content in the Presentation Catalog is created by developers as part of 'pre-canned' reporting and dashboard packs, to be released along with the RPD to end-users. Where things get difficult is that the Presentation Catalog is also written to in Production. This can include:

- User-developed content saved in one (or both) of:

- My Folders

- Shared, e.g. special subfolders per department for sharing common reports outside of "gold standard" ones

- User's profile data, including timezone and language settings, saved dashboard customisations, preferred delivery devices, and more

- System configuration data, such as default formatting for specific columns, bookmarks, etc

In your environment you maybe don't permit some of these (for example, disabling access to My Folders is not uncommon). But almost certainly, you'll want your users to be able to persist their environment settings between sessions.

The impact of this is that the Presentation Catalog becomes complex to manage. We can't just overwrite the whole catalog when we come to deployment in Production, because if we do so all of the above listed content will get deleted. And that won't make us popular with users, at all.

So how do we bring any kind of mature software development practice to the Presentation Catalog, assuming that we have report development being done in non-Production environments?

We have two possible approaches:

- Deploy the full catalog into Production each time, but backup first existing content that we don't want to lose, and restore it after the deploy

  - Fiddly, but means that we don't have to worry about which bits of the catalog go in source control - all of it does. This has consequences for if we want to do branch-based development with source control, in that we can't. This is because the catalog will exist as a single binary (whether `BAR` or `7ZIP`), so there'll be no merging with the source control tool possible.

  - Risky, if we forget to backup the user content first, or something goes wrong in the process

  - A 'heavier' operation involving the whole catalog and therefore almost certainly requiring the catalog to be in maintenance-mode (read only).

- Deploy the whole catalog once, and then do all subsequent deploys as deltas (i.e. only what has changed in the source environment)

  - Less risky, since not overwriting whole target environment catalog

  - More flexible, and more granular so easier to track in source control (and optionally do branch-based development).

  - Requires more complex automated deployment process.

Both methods can be used with OBIEE 11g and 12c.

Presentation Catalog Migration in OBIEE - Option 1

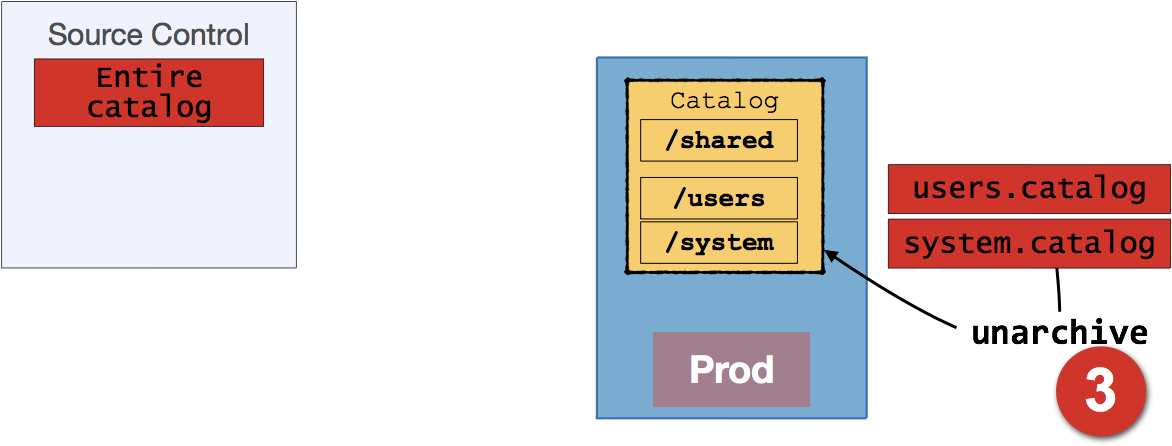

In this option, the entire Catalog is deployed, but content that we want to retain is backed up first, and then re-instated after the full catalog deploy.

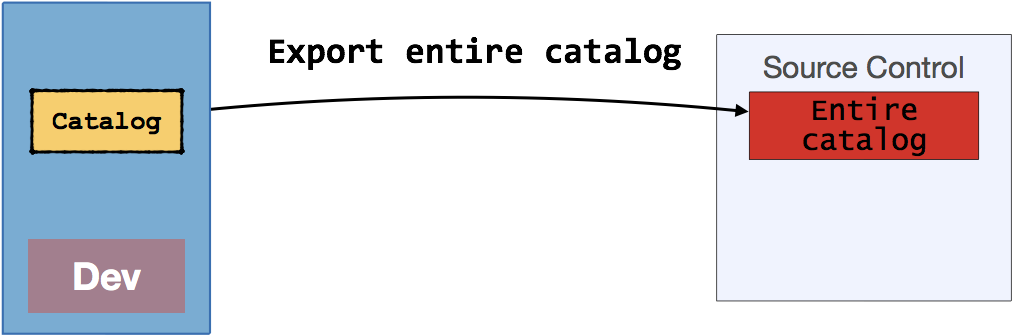

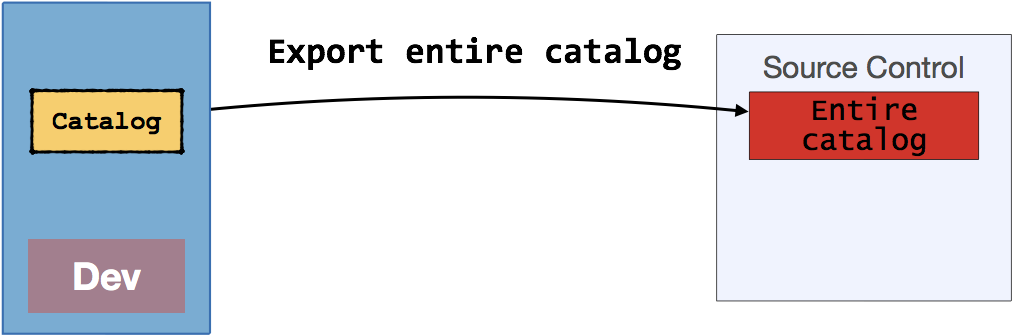

First we take the entire catalog from the source environment and store it in source control. With OBIEE 12c this is done using the exportServiceInstance WLST command (see the example with the RPD above) to create a BAR file. With OBIEE 11g, you would create an archive of the catalog at its root using 7-zip/tar/gzip (but not winzip).

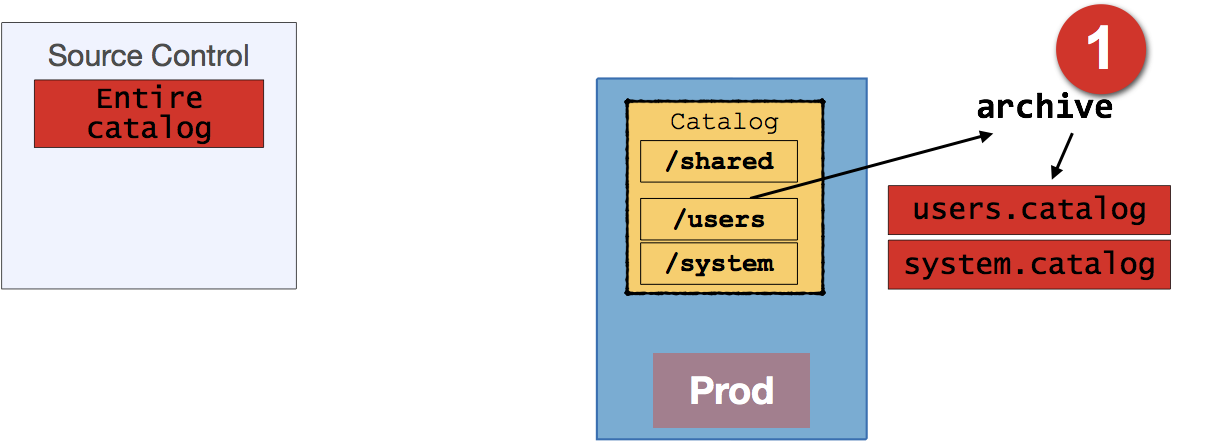

When ready to deploy to the target environment, we first backup the folders that we want to preserve. Which folders might we want to preserve?

- `/users` - this holds both objects that users have created and saved in `My Folders`, as well as user profile information (including timezone preferences, delivery profiles, dashboard customisations, and more)

- `/system` - this holds system internal settings, which include things such as authorisations for the OBIEE front end (`/system/privs`), as well as column formatting defaults (`/system/metadata`), global variables (`/system/globalvariables`), and bookmarks (`/system/bookmarks`).

  - See note below regarding the `/system/privs` folder

- `/shared/<…>/<…>` - if users are permitted to create content directly in the Shared area of the catalog you will want to preserve this. A valid use of this is for teams to share content developed internally, instead of (or prior to) it being released to the wider user community through a more formal process (the latter being often called 'gold standard' reports).

Regardless of whether we are using OBIEE 11g or 12c we create a backup of the folders identified by using the Archive functionality of OBIEE. This is NOT just creating a .zip file of the file system folders - which is completely unsupported and a bad idea for catalog management, except at the very root level. Instead, the Archive functionality creates a .catalog file which can be stored in source control, and unarchived back into OBIEE to restore content.

You can create OBIEE catalog archives in one of four ways, which are also valid for importing the content back into OBIEE too:

- Manually, through OBIEE front-end

- Manually, through Catalog Manager GUI

- Automatically, through Catalog Manager CLI (`runcat.sh`)

  - Archive:

        runcat.sh \
        -cmd archive \
        -online http://demo.us.oracle.com:7780/analytics/saw.dll \
        -credentials /tmp/creds.txt \
        -folder "/shared/HR" \
        -outputFile /home/oracle/hr.catalog

  - Unarchive:

        runcat.sh \
        -cmd unarchive \
        -inputFile hr.catalog \
        -folder /shared \
        -online http://demo.us.oracle.com:7780/analytics/saw.dll \
        -credentials /tmp/creds.txt \
        -overwrite all

- Automatically, using the `WebCatalogService` API (`copyItem2` / `pasteItem2`).

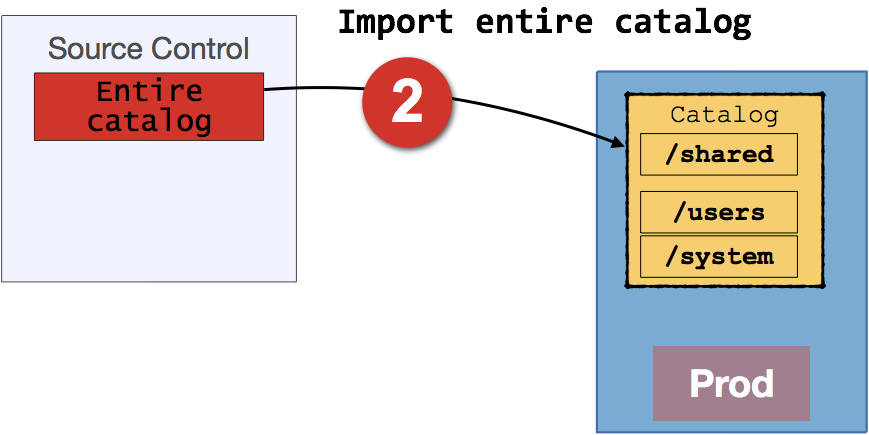

Having taken a copy of the necessary folders, we then deploy the entire catalog (with the changes from development included) taken from source control. Deployment is done in OBIEE 12c using importServiceInstance. In OBIEE 11g it's done by taking the server offline, and replacing the catalog with the contents of the 7zip filesystem archive of the entire catalog.

Finally, we restore the folders previously saved, using the Unarchive function to import the .catalog files.
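The backup and restore around a full catalog deploy can be sketched with the `runcat.sh` commands shown earlier. Treat this as an illustration under assumptions: the flags are the ones from the archive and unarchive examples, while the function names, the `DRY_RUN` switch, the `BACKUP_DIR` location and the file naming scheme are invented here. Whether unarchiving `/users` or `/system` into the catalog root behaves as you need is something to verify in a test environment first.

```shell
# Sketch only. runcat.sh flags are those shown above; names are assumptions.
CATALOG_URL=${CATALOG_URL:-http://demo.us.oracle.com:7780/analytics/saw.dll}
CREDS_FILE=${CREDS_FILE:-/tmp/creds.txt}
BACKUP_DIR=${BACKUP_DIR:-/tmp/catalog_backup}
DRY_RUN=${DRY_RUN:-1}   # 1 = print commands instead of running them

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Turn a catalog path into a file name: /shared/HR -> shared_HR.catalog
archive_name() {
  printf '%s.catalog\n' "$(printf '%s' "${1#/}" | tr '/' '_')"
}

# Archive each given catalog folder to BACKUP_DIR before the full deploy.
backup_folders() {
  for folder in "$@"; do
    run runcat.sh -cmd archive -online "$CATALOG_URL" \
      -credentials "$CREDS_FILE" -folder "$folder" \
      -outputFile "$BACKUP_DIR/$(archive_name "$folder")" || return 1
  done
}

# Unarchive each folder into its parent folder after the full deploy.
restore_folders() {
  for folder in "$@"; do
    run runcat.sh -cmd unarchive \
      -inputFile "$BACKUP_DIR/$(archive_name "$folder")" \
      -folder "$(dirname "$folder")" -online "$CATALOG_URL" \
      -credentials "$CREDS_FILE" -overwrite all || return 1
  done
}
```

A release would then call `backup_folders /users /system` before the full deploy and `restore_folders /users /system` after it. Stopping at the first failed archive matters here, because carrying on to overwrite the catalog without a complete backup is exactly the risk this option carries.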

Presentation Catalog Migration in OBIEE - Option 2

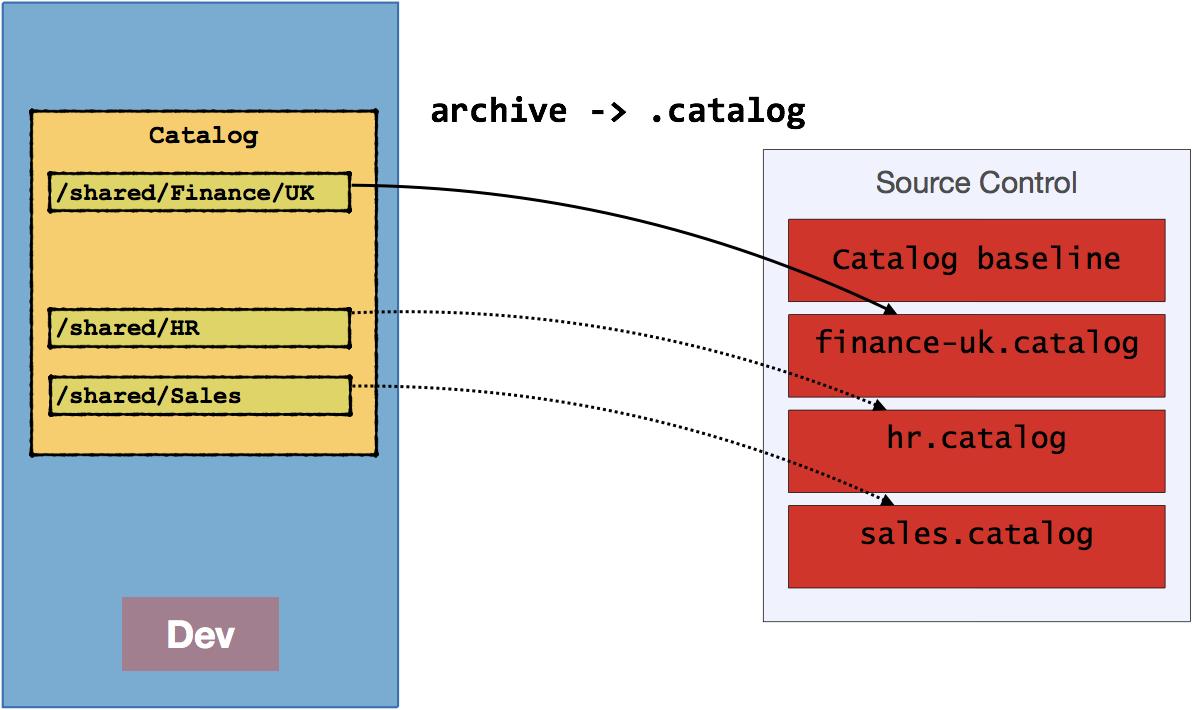

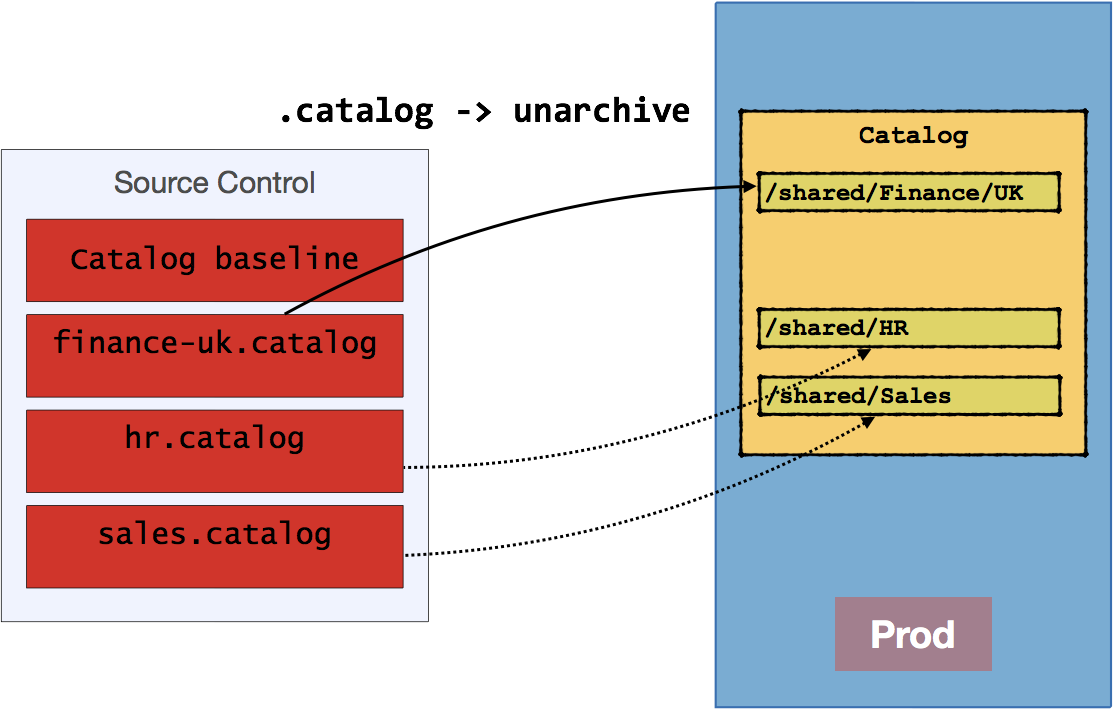

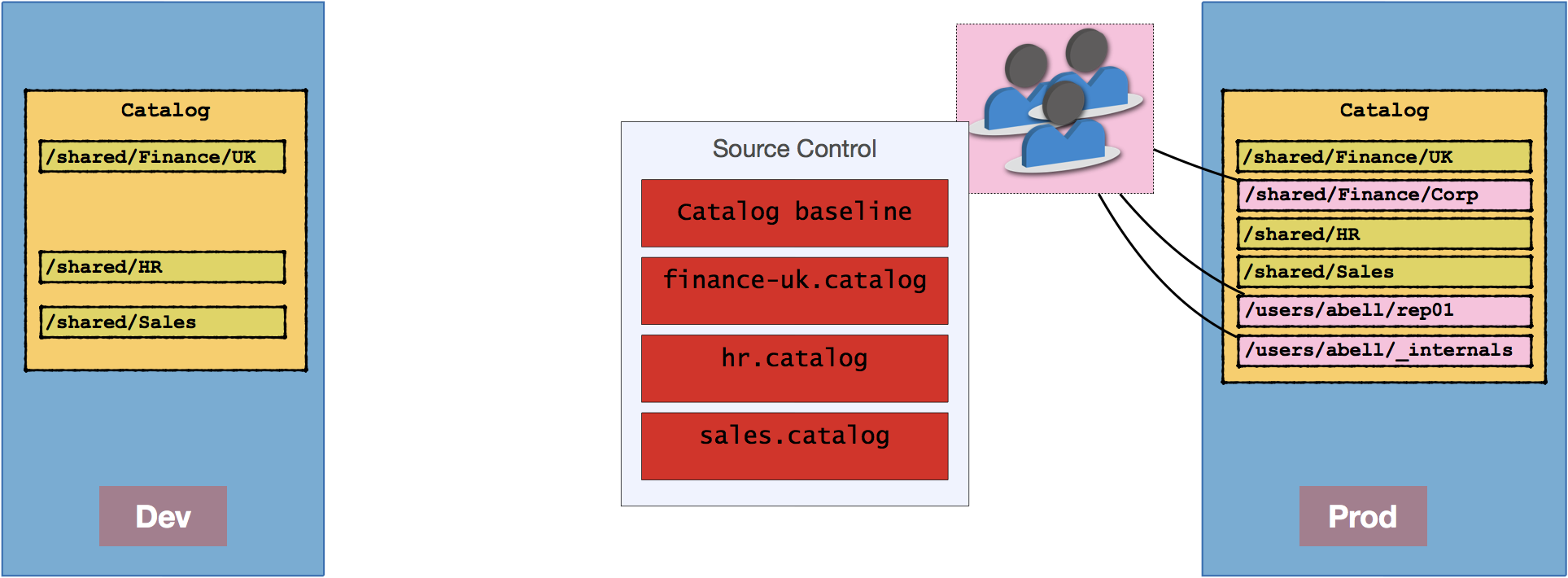

In this option we take a more granular approach to catalog migration. The entire catalog from development is only deployed once, and after that only .catalog files from development are put into source control and then deployed to the target environment.

As before, the entire catalog is initially taken from the development environment, and stored in source control. With OBIEE 12c this is done using the exportServiceInstance WLST command (see the example with the RPD above) to create a BAR file. With OBIEE 11g, you would create an archive of the catalog at its root using 7zip.

Note that this is only done once, as the initial 'baseline'.

The first time an environment is commissioned, the baseline is used to populate the catalog, using the same process as in option 1 above (in 12c, importServiceInstance/ in 11g unzip of full catalog filesystem copy).

After this, any work that is done in the catalog in the development environment is migrated through by using OBIEE's archive function against just the necessary /shared subfolder to create a .catalog file, and storing this in source control.

This is then imported to the target environment with the unarchive capability. See above in option 1 for details of using archive/unarchive - just remember that this is archiving with OBIEE, not using 7zip!

You will need to determine at what level you take this folder:

- If you archive the whole of `/shared` each time you'll never be able to do branch-based development with the catalog in which you want to merge branches (because the `.catalog` file is binary).

- If you instead work at, say, department level (`/shared/HR`, `/shared/sales`, etc) then the highest grain for concurrent catalog development would be the department. The lower down the tree you go the greater the scope for independent concurrent development, but the greater the complexity to manage. This is because you want to be automating the unarchival of these `.catalog` files to the target environment, so having to deal with multiple levels of folder hierarchy gets hard work.

It's a trade off between the number of developers, breadth of development scope, and how simple you want to make the release process.

The benefit of this approach is that content created in Production remains completely untouched. Users can continue to create their content, save their profile settings, and so on.
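To illustrate the automation that the folder-level trade-off refers to, here is one possible sketch of deploying delta `.catalog` files. It assumes, purely for illustration, that the source control working copy mirrors the catalog tree (so `catalog/shared/HR.catalog` holds the archive of `/shared/HR`). The function names, variables and `DRY_RUN` switch are likewise assumptions; the `runcat.sh` flags are those shown in option 1.

```shell
# Sketch only. The repository layout (catalog/<parent folders>/<name>.catalog)
# is an assumption; runcat.sh flags are those shown in option 1.
CATALOG_SRC=${CATALOG_SRC:-catalog}
CATALOG_URL=${CATALOG_URL:-http://demo.us.oracle.com:7780/analytics/saw.dll}
CREDS_FILE=${CREDS_FILE:-/tmp/creds.txt}
DRY_RUN=${DRY_RUN:-1}   # 1 = print commands instead of running them

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Map an archive's path in the working copy to the catalog folder it is
# unarchived into: catalog/shared/HR.catalog -> /shared
# Assumes archives sit at least one folder below CATALOG_SRC.
target_folder() {
  local rel
  rel=${1#"$CATALOG_SRC"/}
  printf '/%s\n' "$(dirname "$rel")"
}

# Unarchive every .catalog file found under CATALOG_SRC, in a stable order.
# The loop runs in a pipeline subshell, so "exit 1" ends the loop and
# becomes the function's return status.
deploy_deltas() {
  find "$CATALOG_SRC" -type f -name '*.catalog' | sort |
    while IFS= read -r archive; do
      run runcat.sh -cmd unarchive -inputFile "$archive" \
        -folder "$(target_folder "$archive")" -online "$CATALOG_URL" \
        -credentials "$CREDS_FILE" -overwrite all </dev/null || exit 1
    done
}
```

With this layout, going one level deeper in the tree is just a matter of committing archives further down (for example `catalog/shared/HR/Payroll.catalog`), and the script needs no changes. The cost shows up elsewhere: more files to keep in step, and more care needed over the order in which parent and child folders are unarchived.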

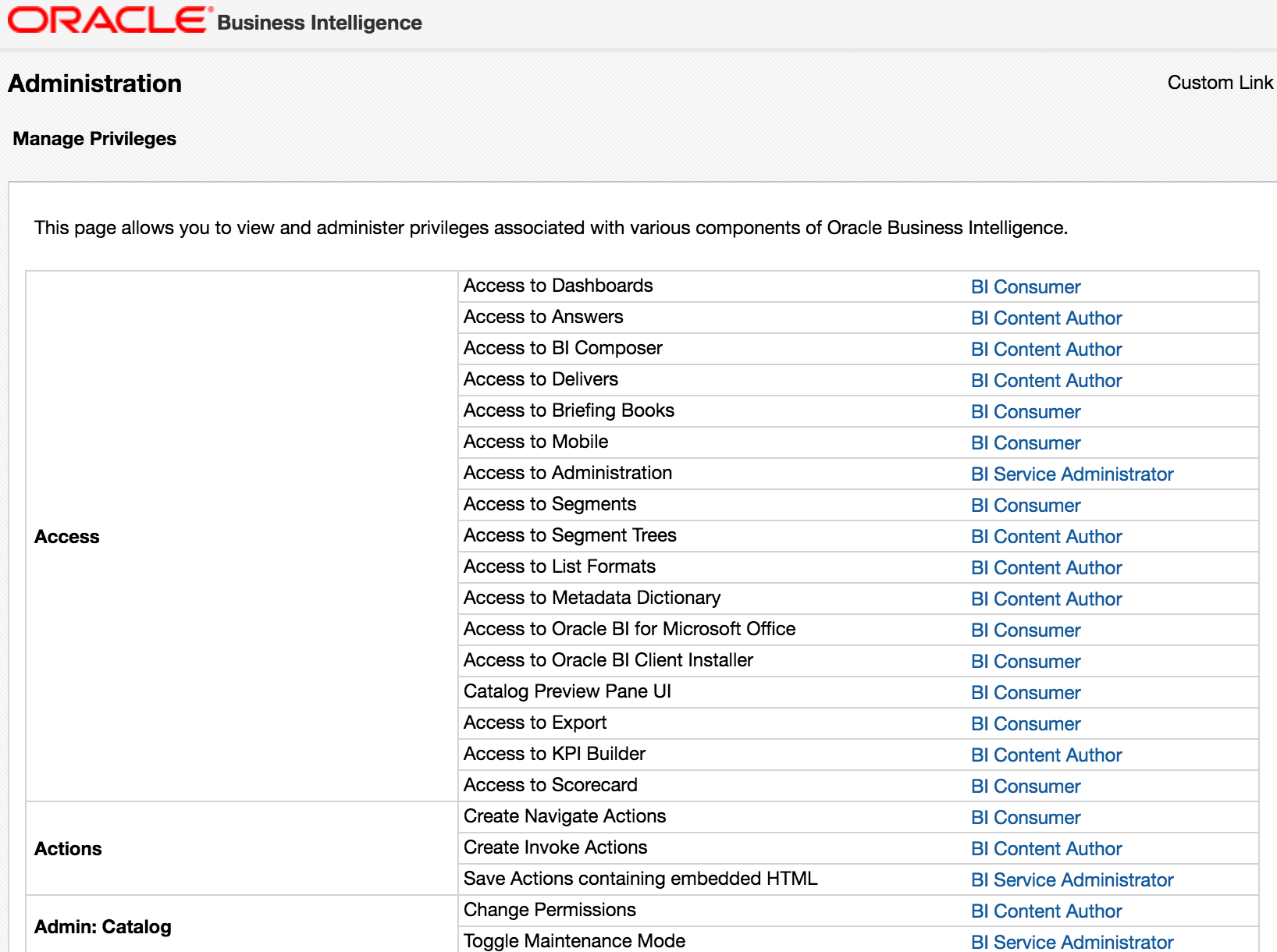

Presentation Catalog Migration - OBIEE Privilege Grants

Permissions set in the OBIEE front end are stored in the Presentation Catalog's /system/privs folder.

Therefore, how this folder is treated during migration dictates where you must apply your security grants (or conversely, where you set your security grants dictates how you should treat the folder in migrations). For me the "correct" approach would be to define the full set of privileges in the development environment and then migrate these, along with pre-built objects in /shared, through to Production. If you have a less formal approach to environments, or for whatever reason permissions are granted directly in Production, you will need to ensure that the /system/privs folder isn't overwritten during catalog deployments.

When you create a BAR file in OBIEE 12c, it does include /system/privs (and /system/metadata). Therefore, if you are happy for these to be overwritten from the source environment, you would not need to backup/restore these folders. If you set includeCatalogRuntimeInfo in the OBIEE 12c export to BAR, it will also include the complete /system folder as well as /users.

Agents

Regardless of how you move Catalog content between environments, if you have Agents you need to look after them too. When you move Agents between environments, they are not automatically registered with the BI Scheduler in the target environment. You either have to do this manually, or with the web service API: WebCatalogService.readObjects to get the XML for the agent, and then submit it to iBotService.writeIBot which will register it with the BI Scheduler.

Security

- In terms of the Policy store (Application Roles and Policy grants), these are managed by the Security element of the BAR and migration through the environments is simple. You can deploy the policy store alone in OBIEE 12c using the `importJazn` flag of `importServiceInstance`. In OBIEE 11g it's not so simple - you have to use the `migrateSecurityStore` WLST command.

- Data/Object security defined in the RPD gets migrated automatically through the RPD, by definition

- See above for a discussion of OBIEE front-end privilege grants.

What Goes into Source Control? Part 2

So, suddenly this question looks a bit less simple than when originally posed at the beginning of this article. In essence, you need to store:

- RPD

  1. BAR + JSON configuration for each environment's connection pools -- 12c only, simpler, but less flexible and won't support concurrent development easily

  2. RPD (.rpd) + XUDML patch file for each environment's connection pools -- works in 11g too, supports concurrent development

- Presentation Catalog

  1. Entire catalog (BAR in 12c / 7zip in 11g) -- simpler, but impossible to manage branch-based concurrent development

  2. Catalog baseline (BAR in 12c / 7zip in 11g) plus delta `.catalog` files -- More complex, but more flexible, and supports concurrent development

- Security

  1. BAR file (OBIEE 12c)

  2. system-jazn-data.xml (OBIEE 11g)

- Any other files that are changed for your deployment.

It's important that when you provision a new environment you can set it up the same as the others. It is also invaluable to have previous versions of these files so as to be able to rollback changes if needed, and to track what settings have changed over time.

This could include:

* Configuration files (`nqsconfig.ini`, `instanceconfig.xml`, `tnsnames.ora`, etc)

* Custom skins & styles

* writeback templates

* etc

Summary

I never said it was simple ;-)

OBIEE is an extremely powerful product, and just as you have to take care to build your data models correctly, you also need to take care to understand why and how to manage your code correctly. What I've tried to do here is pull together the different options available, and lay them out with their respective pros and cons. Let me know in the comments below what you think and how you manage OBIEE code at your site.

One of the key messages that it's important to get across is this: there are varying degrees of complexity with which you can embrace source control. All are valid, and in fact an incremental adoption of them rather than big-bang can sometimes be a better idea:

- At one end of the scale, you simply use source control to hold copies of all your code, and continue to deploy manually

- Getting a bit smarter, automating code deployments from source control. Code development is still done serially though.

- At the other end of the scale, you use source control with branch-based feature-driven concurrent development. Completed features are merged automatically with RPD conflicts managed by the OBIEE tooling from the command line. Testing and deployment are both automated.

If you'd like assistance with your OBIEE development and deployment practices, including fully automated source-control driven concurrent development management, please get in touch with us here at Rittman Mead. We would be delighted to use our extensive experience in this field to produce a flexible and customised process for your particular environment and requirements.

You can find the companion slide deck to this article, with further discussion on concurrent development, here.