Assessing the Performance of Looping ODI Package Tasks

Our SQL query extracts data from ODI Repository log tables and can be used to find performance bottlenecks in ODI Load Plans that execute Packages with loops by identifying the most time-consuming Package tasks.

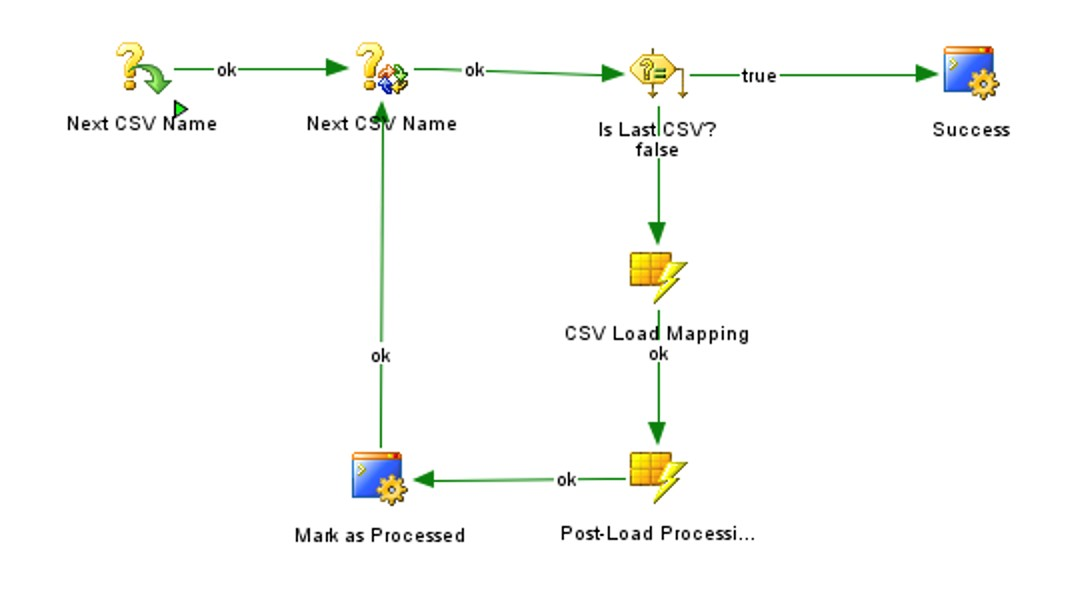

ODI Packages often use loops, especially to load CSV files or query APIs that have limits on how much data can be returned at once.

The problem with the loop in the example above, besides the fact that it loads data sequentially, is that the log will be very long and difficult to analyse if you are loading thousands of CSV files. It can also be difficult to identify the CSV files that are taking the longest to load. Now imagine a Load Plan with many loops like this! There must be a better way than scrolling through the log of endless task steps.

I found a blog post on the DEV EMP website that shows how to extract Load Plan Task Execution Steps. This was close to what I needed, but it only returned a single record per Package run. I wanted to see the Package Tasks that formed the loop and their execution times.

Below is the query I wrote to aggregate Package Task execution times, calculating the summary, average, minimum, and maximum execution times, and ordering the results by summary execution time in descending order. (Packages are called by Load Plan Steps.)

WITH

Q_LP_STEPS AS (

SELECT

LP_STEP.I_LOAD_PLAN

, LP.LOAD_PLAN_NAME

, LP_INSTANCE.I_LP_INST

, LP_RUN.STATUS LP_RUN_STATUS

, TO_CHAR(LP_RUN.START_DATE, 'YYYY-MM-DD HH24:MI:SS') LP_RUN_START_DATETIME

, TO_CHAR(LP_RUN.END_DATE, 'YYYY-MM-DD HH24:MI:SS') LP_RUN_END_DATETIME

, CAST(COALESCE(LP_RUN.end_date, SYSDATE) AS TIMESTAMP) - CAST(LP_RUN.start_date AS TIMESTAMP) LP_RUN_DURATION

, LP_STEP.LP_STEP_NAME

, LP_STEP.I_LP_STEP

, LP_STEP.PAR_I_LP_STEP

, LP_STEP.STEP_ORDER

, LP_STEP.IND_ENABLED

, SYS_CONNECT_BY_PATH(LP_STEP.LP_STEP_NAME, ' -> ') AS STEP_NAME_PATH

, SYS_CONNECT_BY_PATH(LP_STEP.STEP_ORDER, ' ') AS STEP_ORDER_PATH

, SYS_CONNECT_BY_PATH(LP_STEP.IND_ENABLED, ' ') AS IND_ENABLED_PATH

, SYS_CONNECT_BY_PATH(DECODE(LP_STEP.LP_STEP_TYPE, 'EX', 1, 0), ' ') AS EXCEPTION_STEP_PATH

, CONNECT_BY_ISLEAF IS_LEAF_STEP

, LEVEL AS STEP_LEVEL

FROM SNP_LOAD_PLAN LP -- Load Plan Definition

INNER JOIN SNP_LP_STEP LP_STEP ON -- Load Plan Step Definition

LP_STEP.I_LOAD_PLAN = LP.I_LOAD_PLAN

INNER JOIN SNP_LP_INST LP_INSTANCE ON -- Load Plan Instance

LP_STEP.I_LOAD_PLAN = LP_INSTANCE.I_LOAD_PLAN AND

---------------------------------------------------------------------------------------------------

-- Filter by LP Instance ID here (mandatory filter):

---------------------------------------------------------------------------------------------------

LP_INSTANCE.I_LP_INST = 123456

INNER JOIN SNP_LPI_RUN LP_RUN ON -- Load Plan Run

LP_RUN.I_LP_INST = LP_INSTANCE.I_LP_INST

CONNECT BY PRIOR LP_STEP.I_LP_STEP = LP_STEP.PAR_I_LP_STEP

START WITH LP_STEP.PAR_I_LP_STEP IS NULL

),

Q_LP_STEP_LOG AS (

SELECT

LP_STEP.I_LOAD_PLAN

, LP_STEP.LOAD_PLAN_NAME

, LP_STEP.I_LP_INST

, LP_STEP.LP_STEP_NAME

, LP_STEP.I_LP_STEP

, LP_STEP.STEP_LEVEL

, LP_STEP.IS_LEAF_STEP

, LP_STEP.STEP_NAME_PATH

, LP_STEP.STEP_ORDER_PATH

, CASE WHEN LP_STEP.IND_ENABLED_PATH LIKE '%0%' THEN 'N' ELSE 'Y' END ENABLED

, LP_STEP_LOG.SESS_NO AS SESS_NO

FROM Q_LP_STEPS LP_STEP -- LP Run with Step Definitions

LEFT JOIN SNP_LPI_STEP_LOG LP_STEP_LOG ON -- LP Step Run Log

LP_STEP.I_LP_INST = LP_STEP_LOG.I_LP_INST AND

LP_STEP.I_LP_STEP = LP_STEP_LOG.I_LP_STEP

WHERE

LP_STEP.IS_LEAF_STEP = 1 AND -- only analyse Leaf Steps

LP_STEP.EXCEPTION_STEP_PATH NOT LIKE '%1%' -- exclude Step hierarchies that include Exceptions

),

Q_TASK_DETAIL AS (

SELECT

STEP_LOG.I_LOAD_PLAN

, STEP_LOG.I_LP_INST

, STEP_LOG.I_LP_STEP

, STEP_LOG.LOAD_PLAN_NAME

, STEP_LOG.LP_STEP_NAME

, STEP_LOG.STEP_NAME_PATH

, STEP_LOG.SESS_NO

, CASE WHEN STEP_LOG.ENABLED = 'N' THEN 'Step has been disabled'

WHEN STEP_LOG.SESS_NO IS NULL THEN 'Step did not run'

WHEN SESS.SESS_STATUS = 'R' THEN 'Step currently running'

WHEN SESS.SESS_STATUS = 'D' THEN 'Step finished successfully'

WHEN SESS.SESS_STATUS = 'E' THEN 'Step failed'

WHEN SESS.SESS_STATUS = 'W' THEN 'Step finished with warnings'

ELSE 'Other status not relevant'

END STEP_STATUS

, TO_CHAR(SESS.SESS_BEG, 'DD-MON-YYYY HH24:MI:SS') AS SESS_BEG

, TO_CHAR(SESS.SESS_END, 'DD-MON-YYYY HH24:MI:SS') AS SESS_END

, SESS.SESS_DUR

, REPLACE(REPLACE(REPLACE(SUBSTR(SESS.ERROR_MESSAGE, 1, INSTR(SESS.ERROR_MESSAGE, CHR(9) || 'at ')), CHR(10), ' '), CHR(13), ' '), CHR(9), ' ') AS ERROR_MESSAGE

, SCEN.SCEN_NAME

, TASKLOG.NNO

, TO_CHAR(TASKLOG.TASK_BEG,'YYYY-MM-DD HH24:MI:SS') TASK_BEGIN_DT

, TO_CHAR(TASKLOG.TASK_END,'YYYY-MM-DD HH24:MI:SS') TASK_END_DT

, TASKLOG.TASK_DUR TASK_DURATION_SECONDS

, SUM(TASKLOG.TASK_DUR) OVER (PARTITION BY STEP_LOG.I_LP_INST) LP_TASK_DURATION_SECONDS

, SCENTASK.TASK_NAME2 TASK_NAME

FROM Q_LP_STEP_LOG STEP_LOG

LEFT JOIN SNP_SESSION SESS ON

STEP_LOG.SESS_NO = SESS.SESS_NO

LEFT JOIN SNP_SCEN SCEN ON

SESS.SCEN_VERSION = SCEN.SCEN_VERSION AND

SESS.SCEN_NAME = SCEN.SCEN_NAME

LEFT JOIN SNP_SESS_TASK_LOG TASKLOG ON

TASKLOG.SESS_NO = SESS.SESS_NO

LEFT JOIN SNP_SCEN_TASK SCENTASK ON

SCENTASK.SCEN_NO = SCEN.SCEN_NO AND

TASKLOG.SCEN_TASK_NO = SCENTASK.SCEN_TASK_NO

----------------------------------------------------------------------------------------------------

-- Filter by Task Name here (remove filter to get all Steps in your analysis):

----------------------------------------------------------------------------------------------------

WHERE LP_STEP_NAME in (

'Load Plan Step 1',

'Load Plan Step 2',

'Load Plan Step 3'

)

ORDER BY TASK_BEGIN_DT

)

SELECT

TASK_NAME,

COUNT(*) TASK_EXEC_COUNT,

REGEXP_SUBSTR(NUMTODSINTERVAL(SUM(TASK_DURATION_SECONDS), 'SECOND'), '\d{2}:\d{2}:\d{2}') SUM_TASK_DURATION,

ROUND(100.0 * SUM(TASK_DURATION_SECONDS) / MAX(LP_TASK_DURATION_SECONDS),1) SUM_TASK_DURATION_PERCENT,

REGEXP_SUBSTR(NUMTODSINTERVAL(AVG(TASK_DURATION_SECONDS), 'SECOND'), '\d{2}:\d{2}:\d{2}') AVG_TASK_DURATION,

REGEXP_SUBSTR(NUMTODSINTERVAL(MAX(TASK_DURATION_SECONDS), 'SECOND'), '\d{2}:\d{2}:\d{2}') MAX_TASK_DURATION,

REGEXP_SUBSTR(NUMTODSINTERVAL(MIN(TASK_DURATION_SECONDS), 'SECOND'), '\d{2}:\d{2}:\d{2}') MIN_TASK_DURATION

FROM Q_TASK_DETAIL

WHERE TASK_NAME IS NOT NULL

GROUP BY TASK_NAME

ORDER BY SUM(TASK_DURATION_SECONDS) DESC, TASK_NAME;The SQL query above takes two filter inputs:

- The Load Plan Instance ID, which is the number before the Load Plan name in the Load Plan run log.

- The names of the Load Plan Steps that we want to analyse. (Multiple tasks can be included in an analysis if their task names match.)

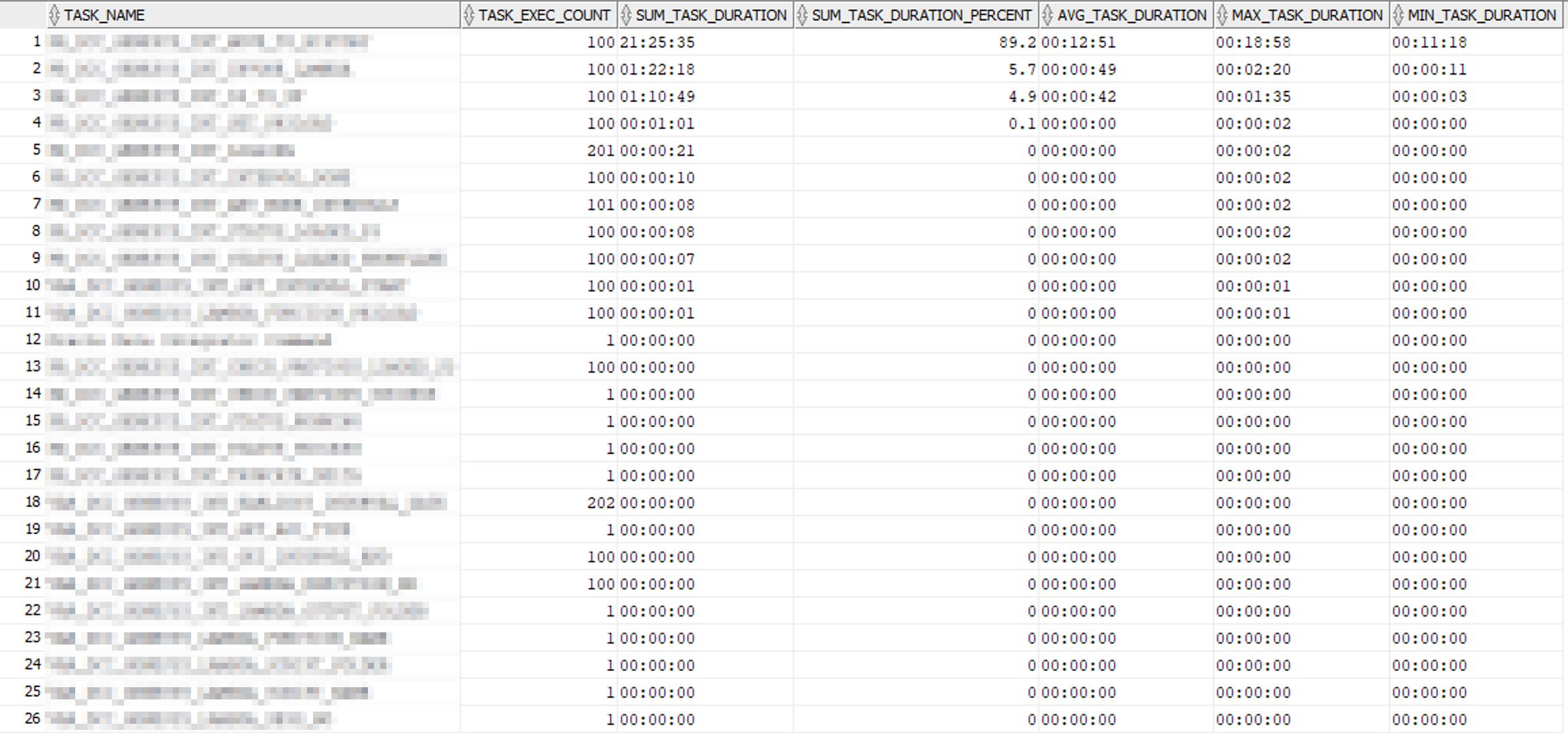

When run on our repository, The result looks like this:

The three most time-consuming tasks in the above analysis were:

- Data post-processing, taking more than 21 hours.

- API call, taking much less than the post-processing task but still a significant one-and-a-half hours.

- Copying API return files to the RDBMS server, taking just above one hour.

We redesigned our ODI Package to move the steps 1. and 3. out of the loop, which significantly improved performance.