Migrating OBIEE Logical Models to use a Data Warehouse : Part 1

A couple of weeks ago I posted an article about a next-generation Oracle BI architecture, where I advocated building a first cut OBIEE system against your operational applications, producing some "quick win" reports, and then over time copying your operational data into a data warehouse and re-pointing the OBIEE semantic model to point to this instead. The idea behind this is to allow you to realize some immediate benefits from OBIEE and the semantic model, whilst providing a method by which you can eventually move your data into an Oracle data warehouse without disturbing the reports you created earlier. I'm presenting on this at the upcoming UKOUG BI & Performance Management Special Event next week in London, you can download the slides from here.

All well and good, but how well does this work in practice? Well, there's no better way to find out than to give it a go, so what I'm going to do over the next three days is work through three stages of the type of project I'm suggesting:

- Firstly, we'll start off with three operational systems (Orders, CRM and HR), and create a single logical model that maps on top of them.

- We'll then create some reports against this data and save them for checking later.

- Then, we'll use OWB to extract this data from the source systems and create a data warehouse

- We'll then re-map the logical model I created earlier to point to this new data source

- Then, we'll check the same reports and see whether they still work ok.

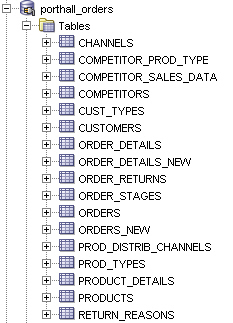

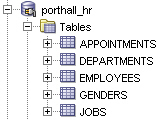

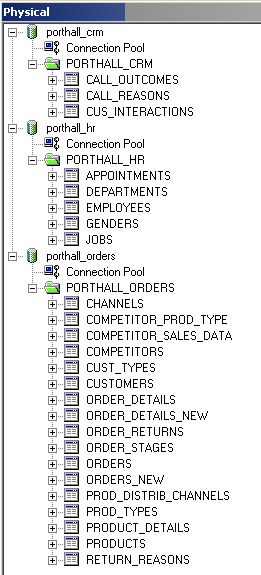

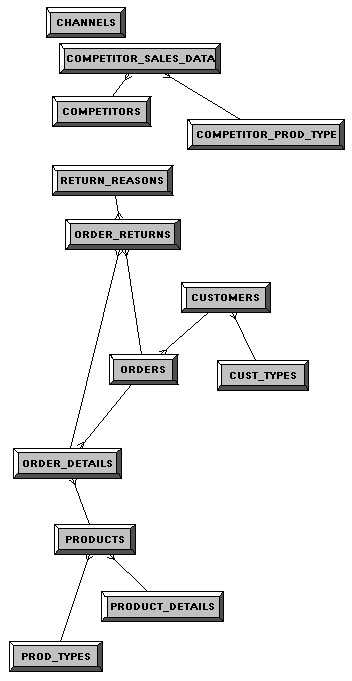

Starting up SQL Developer and taking a look at the three application data sources, the Orders schema contains a number of tables in a "normalized" design. It's got an orders table, an order details table, various product tables and so on.

The CRM schema is a bit simpler, and contains details on customer interactions, their reason and their outcome. The customers it refers to are in the Orders schema.

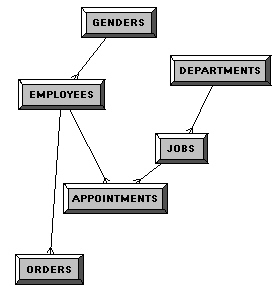

The HR Schema contains details of employees, appointments, jobs and departments, with details of employees being in the Orders schema to record salesperson activity.

So what we have here is a set of normalized tables that contains details on order activity, refunds, staff appointments and customer interactions. Out of these tables I therefore want to create a dimensional model, with the fact tables corresponding to the activities I just mentioned (orders, appointments, customer calls and so on) and the dimensions corresponding to the customer, product, employee and other lookup tables in the source schemas.

Starting off with a new repository then, the first thing I do is start creating this logical model. I'll start off by modeling the facts first, then I'll build out the dimensions, and only once that's complete will I start thinking about mapping them to the source data.

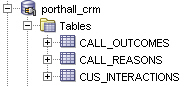

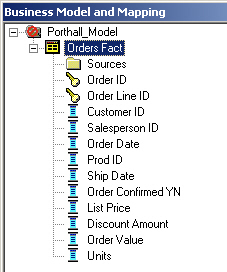

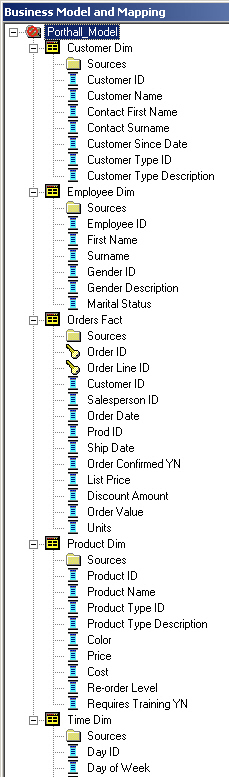

I start off them by creating my first fact table, which will model the orders that I receive.

At this initial stage, I just create the logical columns within the fact table; the datatypes for each column will be defined later once I map source columns to them. Then, I repeat the process for the dimension tables, again just creating the column names and leaving the definition of the datatypes until later on. The point here is that you are modeling what the logical model should be, later on we'll worry about mapping these objects to the source data.

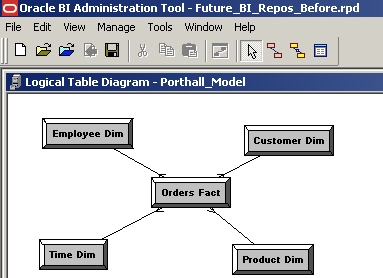

Now, I create logical(complex) joins between the dimensions and fact table that I've just created.

Then, it's a case of defining the dimensions for my logical model and setting the aggregation method for the measures, and the logical model is complete.

So I'm now at the point where I have a complete first cut of my logical model. At the moment, I've only got a single fact table, later on I'll add some more, but for now my next step is to map in the source tables. To do this, I use the Import ... From Database feature in Administrator and read in the source table metadata.

One of the most important things you have to do when importing source data into OBIEE is to make sure all the primary and foreign keys are registered in the physical model. I therefore go and create a physical database diagram for each schema, making sure all the tables are properly joined together - this for example is the Orders schema.

Then, I go and create joins between the different schemas, joining for example the tables in the HR schema to the Order schema via the Orders table, as employee data is references in orders via the Salesperson ID.

By repeating this across all of the source schemas, I make it possible for OBIEE to join together all the source data into a single, "virtual" source database.

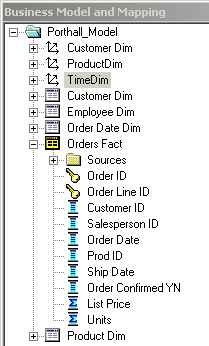

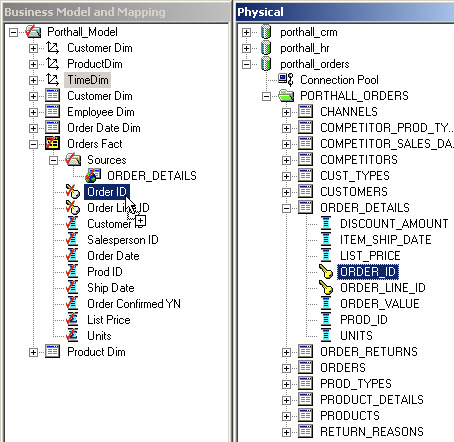

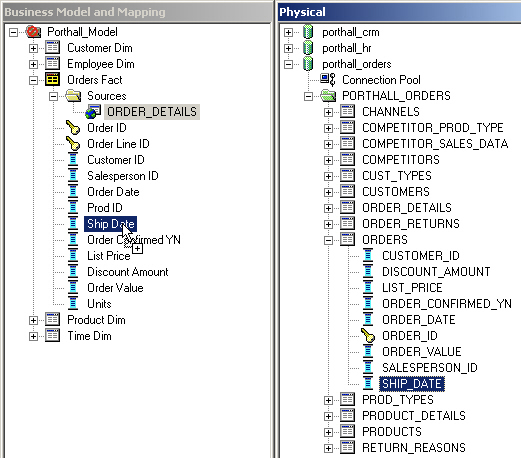

Now it's time to map the source data onto the logical model I created earlier. I start off by dragging the Order ID field from the Order Details physical table and dropping it on top of the Orders Fact logical table, which adds this physical table as a logical table source for the fact table and maps the Order ID logical column to the Order ID physical column.

I then repeat the process for the Order Line ID column, and the rest of the columns in this source table that have corresponding entries in the logical fact table.

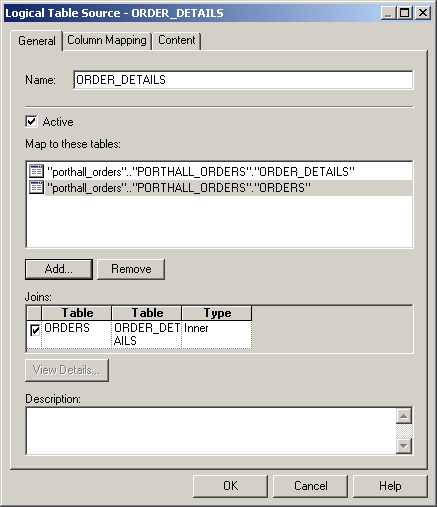

The Order Details physical table only contains a part of the order information I want to put into my logical fact table - the most granular part that details the individual line items in the order. I also want to map across some data from the actual Orders table as this contains the order "header" information, such as the date of the order and the customer who made the order. To do this, I first map the existing fact table logical table source to this additional table, which I can do because the Orders and Order Details tables are joined together in the physical model.

Now I can just map in the additional columns from the Orders table to finish off this logical table's mapping.

Once this step is complete, I've mapped in all the source columns for the logical fact table, with the fact table's logical table source pointing to two source tables, the Orders and Order Details tables.

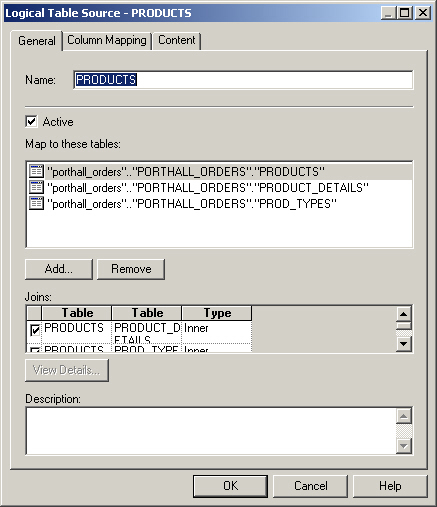

I then repeat the process for the logical dimension tables, editing the logical table sources as needed to provide access to additional lookup tables.

Once this is complete, I then edit the logical tables to add the primary keys, and then check the state of the logical model at this stage.

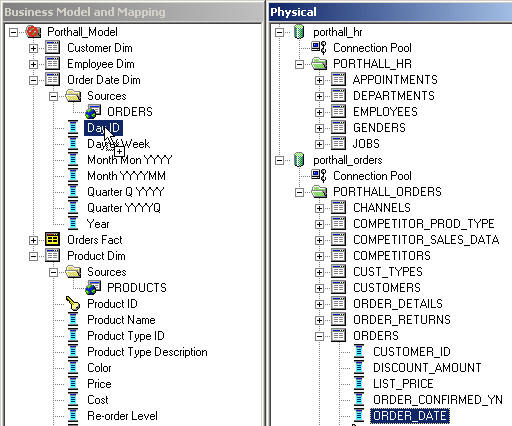

Everything is now complete except that I haven't yet provided source table mappings for the time dimension. There's a reason for this though, in that the source systems don't have a "Times" table, all dates are held as Date datatypes in the various transaction tables and it'll therefore be up to me to make a separate table out of them, together with derived fields such as Month, Day of Week and so on.

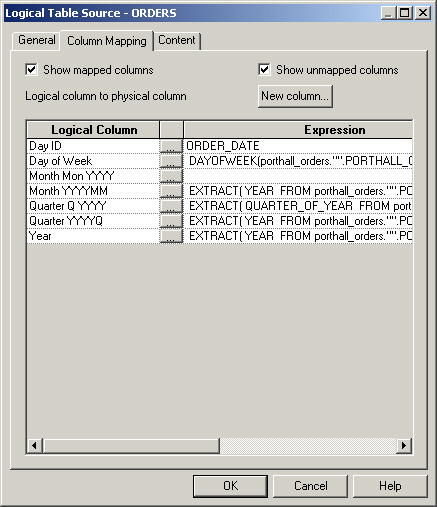

In my Orders fact table, time actually crops up a couple of times; there's an Order Date and a Ship Date, and to be able to analyze these dates independently, I'll need to create two time dimension tables, one for order data and one for ship date. I rename the Time dimension table I created earlier on to Order Date instead, and then map in the Order Date from the Orders table on to the Day ID logical column within it.

I then edit the logical table source for the Order Date logical table and use the Expression Editor to derive the various date components from the Order Date physical column.

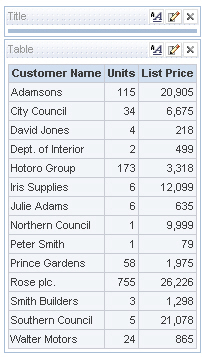

We're now ready to go. I create a simple Presentation model out of my Logical Model, start up Oracle BI Answers and run a couple of test reports.

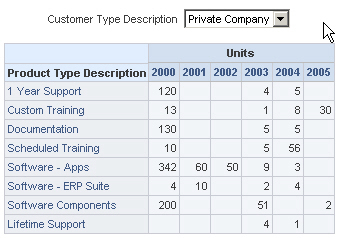

The tabular report comes back OK. What about one that involves dates and a bit more analysis, say a pivot table report?

Not bad, it looks OK. So, tomorrow we'll look at the next step - taking this operational data spread over three applications and copying it into a data warehouse, which we'll do using Oracle Warehouse Builder 10gR2.