Oracle BI EE 10.1.3.4.1 – Setting up Disconnected Analytics

Recently in the UKOUG, we exhibited a demo (more details in the coming blog entries) wherein we also showed the capabilities of the new iphone app, BI Indicators. This actually triggered a chain of thoughts for me on the pros and cons of 3 commonly used BI EE remote access techniques(or content delivery methods)

- iPhone app accessing the BI Server live

- Pushing reports/dashboards through iBots to remote mobile devices

- Using disconnected analytics

The first 2 are quite commonly used and pretty straightforward to setup as well. But the least used (only certain verticals like pharma etc seem to use this) component of BI EE amongst customers is the Disconnected Analytics. That too with everyone moving towards the cloud (we have internet even in the planes these days), the practical use of Disconnected analytics is actually reducing. Having said that, it is probably one of the components that has a lot of moving parts and reasonably a sound architecture. Today i will be covering the process of setting up disconnected analytics.

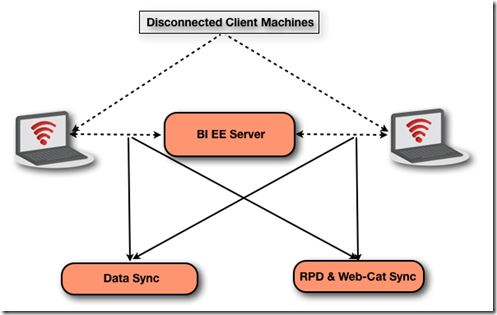

The high level architecture of Disconnected analytics is given below.

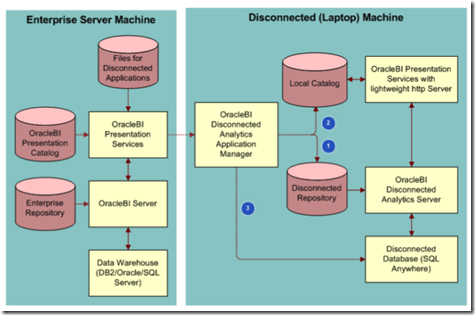

The basic premise behind disconnected analytics is the fact that none of the client machines will have access to the network(only sporadically for syncing). So, in order to enable access to BI EE dashboards/reports in a disconnected mode, every client machine will have a local install of a simplified version of BI EE called as disconnected analytics. This simplified version works exactly the same as the actual BI EE. The biggest difference is in enabling data access. Along with the simplified version of BI EE, a small database called Sybase SQLAnywhere gets installed as a component of disconnected analytics. So, in order to enable local access of data through disconnected analytics, small portions of the live data are copied on to the SQL Anywhere database through a process called as synchronization. This can be easily understood by looking at the screenshot below

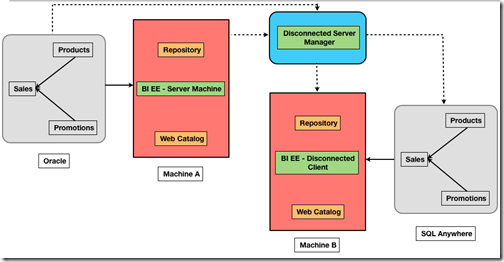

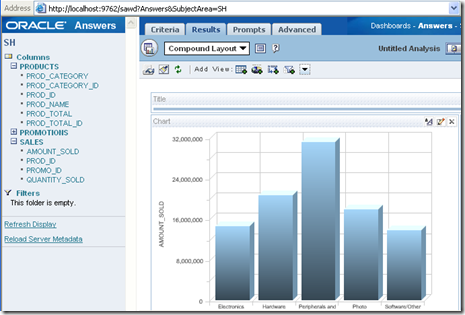

Though the architecture is pretty straightforward, there are quite a few number of steps that we need to go through to get this to work. I will start with a very simple model shown below

We basically have 3 tables – Products, Promotions and Sales in an Oracle database. We also have a repository designed against these 3 tables and a set of reports defined against this repository. All of these reside on Machine A which will be our server machine. The first step in the whole setup process is to define what will be needed by the client machine when its not on the network. The lowest possible unit that can be synchronized is a single subject area (variations are possible, but if we can keep it at subject area level, it will become easier to handle the synchronization). So, we start with defining an Application SH which will correspond to all the source tables, subset of the RPD contributing to this subject area and the set of reports created out of this subject area. Following are the steps that we need to do on the Server Machine A.

Server Machine:

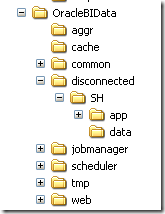

Once the application is identified, we start with creating this application as a folder in the {OracleBIData}\disconnected folder. Every application will consist of the following

- A sub-folder of the name app that will store the RPD to be synchronized, Archived Shared folder of the webcatalog to be synchronized and the set of SQL DDL statements which will be used to create the source tables in the SQLAnywhere database.

- A sub-folder of the name data that will be used by the server machine to extract data out of certain reports (which we will call sourcing reports) to populate our SQLAnywhere tables. This data will be a sub-set of the data that will be there in the actual source.

- A configuration XML file of the same name as the application folder, which will contain a set of tags defining how the synchronization for the application will happen.

Once the structure is created, we start with creating the DDL SQL statements for creating the source tables in the SQLAnywhere database. Remember, this is the SQL that will be used during synchronization. There are 2 kinds of data synchronization. Incremental and Overwrite. I will just be covering the latter which will overwrite the source tables every time during the synchronization. We need a SQL file for every table that needs to be created in the SQLAnywhere database on each client. Since we have just 3 tables we will create 3 SQL files containing the following DDL

DROP TABLE PRODUCTS; CREATE TABLE PRODUCTS( PROD_CATEGORY CHAR(50), PROD_CATEGORY_ID INTEGER, PROD_ID INTEGER, PROD_NAME CHAR(50), PROD_TOTAL CHAR(13), PROD_TOTAL_ID INTEGER);

DROP TABLE PROMOTIONS; CREATE TABLE PROMOTIONS( PROMO_CATEGORY CHAR(30), PROMO_CATEGORY_ID INTEGER, PROMO_ID INTEGER, PROMO_NAME CHAR(50), PROMO_TOTAL CHAR(15), PROMO_TOTAL_ID INTEGER);

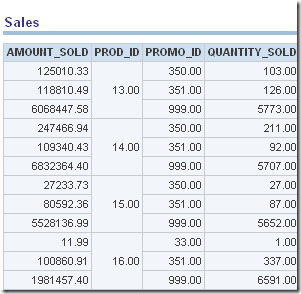

DROP TABLE SALES; CREATE TABLE SALES( AMOUNT_SOLD INTEGER, PROD_ID INTEGER, PROMO_ID INTEGER, QUANTITY_SOLD INTEGER);

Place the SQL files in the app folder. They can be given any name as these will be referred in the Application configuration xml file.

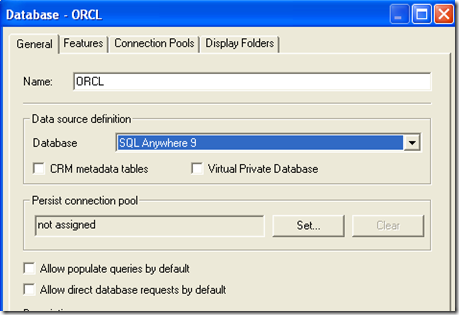

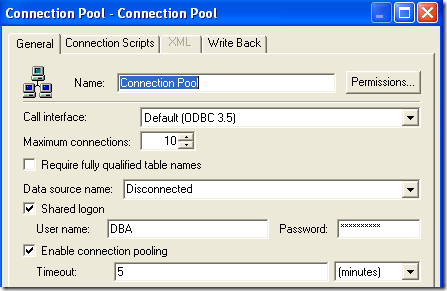

Once we have the SQL files, we need to create a subset of the RPD which will be used in each client machine. In our case, since we have only one subject area in our application, we will create an RPD with just that subject area alone. Remember this is the RPD that will be used by each client. And each client will only have SQLAnywhere database. So, ensure that you are changing the connection pool of the data source to point to the SQLAnywhere database

Copy this RPD over to the app folder.

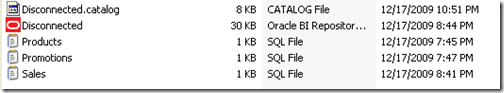

Once the RPD is copied as well, the next step is to copy the reports that we need to move over to the client machines. The best option is to archive the entire shared folder and then copy it onto app folder. The archive can be done either from the BI EE UI or through the catalog manager. In effect, the app folder should have the SQL DDL files, RPD and the web catalog in an archived format as shown below

Now that we have our app folder has been setup completely for the synchronization, we need to create a set of reports that will populate our tables in the SQLAnywhere database. The idea is, whenever client machines try to synchronize the applications, BI EE will first create the tables and then will run these sourcing reports to extract the data in a CSV or compressed DAT format. It will then import these back into the SQLAnywhere database. The sourcing reports should include all the columns chosen in the SQL DDL and the order of the columns should match as well. In our case we create one source report for each table.

These reports can contain filters that is relevant to each of your reports. This is the part that can be tricky. In most cases, we do not expose ID columns in the presentation layer. But for DA to work, you need to ensure that you have a report that will populate the IDs as well. Also, it will get a lot tricky when you have federation, fragmentation setup in your repository.

Once we have these reports created, the next step is to create an application config XML file. The XML file that i used is given below

<remotecfg> <application name="SH" displayname="SH" dir="app"> <repository name="Disconnected.rpd"/> <webcatalog name="Disconnected.catalog"/> <displayname lang="en" value="SH"/> </application> <data dir="data" catalogfolder="/shared/Disconnected/SH"> <dataset name ="SH" incremental="true" syncmode="online" subjectarea="SH"> <displayname lang="en" value="SH"/> <table name="Products"> <sourcingreport name="Products" file="Products.csv"/> <tablesql name="Products.sql"/> </table> <table name="Promotions"> <sourcingreport name="Promotions" file="Promotions.csv"/> <tablesql name="Promotions.sql"/> </table> <table name="Sales"> <sourcingreport name="Sales" file="Sales.csv"/> <tablesql name="Sales.sql"/> </table> </dataset> </data> </remotecfg>

This XML file is nothing but a set of instructions on how to parse the application during synchronization.

Client Machines:

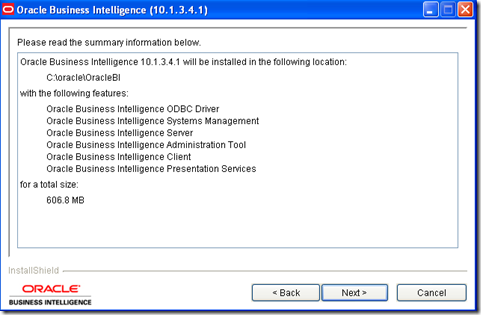

The above setup instructions were for the BI EE Server machine. On the client machines, the first thing that we would have to do is to install the Disconnected analytics client (an option during BI EE install)

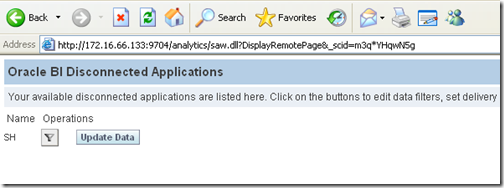

Once the install is done we need to first connect the machine on to the network for synchronization. Then navigate to the Server machine BI EE URL and click on Disconnected Analytics. This is what took me a while to figure out. I was of the impression that there would be some setting in the Disconnection Application manager to change the Server machine URL. Unfortunately, it does not work that way. What we need to do is to open the Server URL from the client machine and navigate to the Disconnected Analytics link.

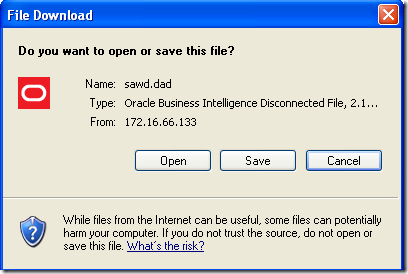

This will show the list of all the applications that have been setup on the Server machine. If we click on Update Data, it will automatically open up a Save/open window asking us to save/open a DAD file.

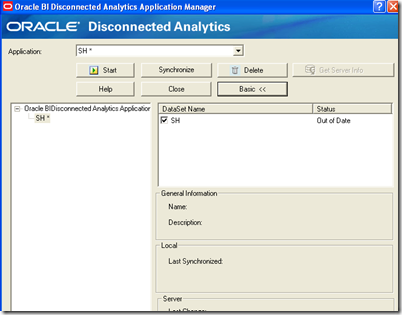

This DAD file basically provides the necessary configuration settings for the Disconnected application manager to work. Just click on open, it will automatically open up the Application Manager

From here on, the synchronization will work out of the box.

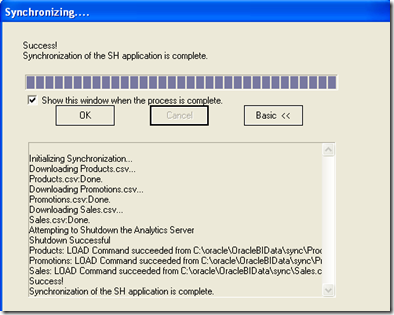

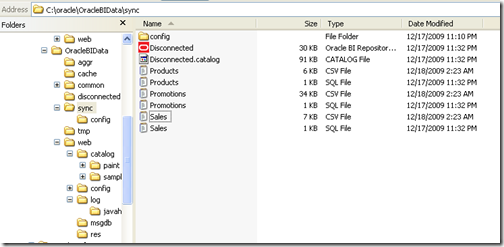

As you see, the synchronization process will first fire all the Logical SQL corresponding to our sourcing reports on the server machine. The results of these reports are then stored as compressed CSVs and are then moved over to the client machine. You can see the CSVs even after the synchronization

This can potentially raise security concerns as the data is stored in a csv format. But in this case, since the logical SQLs are fired as the person who is logging in, it will honor all the rules that we had setup in the repository and the database (if VPD is used). Also the synchronization will automatically update the NQSConfig.ini to new the repository etc.

To me there are quite a few moving parts. But one good thing about this there are quite a few things that we could do like incremental updates etc which could potentially make this very robust. Still i could not comprehend someone installing 600MB worth of software on a client machine and also go through the hassle of setting all these up for getting the same functionality as the BI Server (atleast not now considering the amount of connectivity that we have these days). One other thing that i could not understand is the reason behind Oracle sticking with SQL Anywhere. There are quite a few other databases like TimesTen, Berkeley DB, Sleepy Cat that Oracle owns which could potentially be a replacement for this (probably because these databases are not supported by BI EE).