Alternatives to the Summary Advisor for Refreshing Exalytics Aggregates

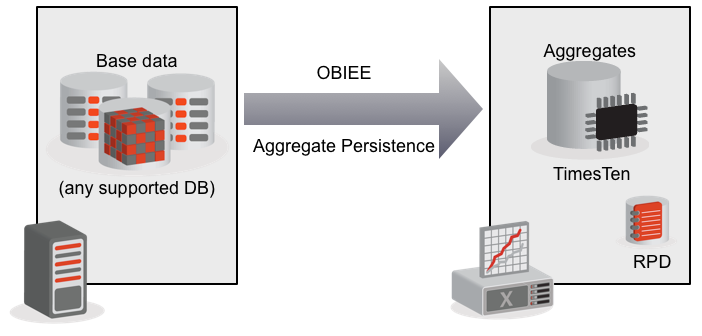

One of the several elements of Exalytics' power comes from it holding pre-calculated (aggregated) data in the TimesTen for Exalytics In-Memory Database. Exalytics comes with a tool called the Summary Advisor (which Mark Rittman has written about previously here) which suggests aggregates to build based on the reports which have been run on the system. It analyses which will benefit most from aggregation and at what level(s) of granularity.

The output from the Summary Advisor is a aggregate creation script, which is something that can also be generated in other ways, either through the standard OBIEE Aggregate Persistence Wizard, or just by hand.

Whichever way the aggregate script is created, it will look something like this:

delete aggregates "TT_AGGR_STORE".."DW_AGG"."ag_3110279699";This script is then executed via the nqcmd command line utility, and invokes the OBIEE Aggregate Persistence function. This function does three things:create aggregates "ag_3110279699"

for "Sales"."Fact Sales"("Sale Amount","Quantity")

at levels ("Sales"."Times (Level-Based, Time)"."Month")

using connection pool "TT_AGGR_STORE"."Connection Pool"

in "TT_AGGR_STORE".."DW_AGG";

- Create the physical aggregate table on the target database (TimesTen, in the case of Exalytics)

- Loads the data from the source into the aggregate

- Updates the RPD to include the new aggregate

There are two things in particular to note here which seem to be overlooked in some of the general understanding of Exalytics:

There are two things in particular to note here which seem to be overlooked in some of the general understanding of Exalytics:

- The base data can come from any source that OBIEE supports, including (but not limited to) Oracle, DB2, SQL Server, Teradata, generic ODBC, and so on.

- OBIEE uses Aggregate Navigation to automatically determine the most appropriate source to use for a user's query. The user does not need to specify where their data comes from; OBIEE determines the grain and then decides from which of the tables it will be most efficient to fetch it.

Refreshing aggregates

Lightening fast data from TimesTen aggregates is only useful when it's correct. When the source data changes - for example, a new day's data is loaded - the aggregates must also reflect this.Aggregate Persistence

Out of the box, the method suggested for keeping aggregates refreshed with the correct data is to do a complete refresh of the aggregate. What this does is:

- Delete the existing aggregate:

- Delete the aggregate table from the database

- Remove references to the aggregate from the RPD

- Create the aggregate from scratch:

- Create the physical aggregate table on the target database (TimesTen, in the case of Exalytics)

- Recalculate the whole aggregate from source and load it into the target (TimesTen)

- Update the RPD to include the new aggregate

Alternatives

OBIEE's Aggregate Persistence functionality (whether used through the Summary Advisor, or Aggregate Persistence Wizard) is a very useful tool for getting started with building aggregates and particularly in understanding how they are integrated into the RPD. Release 11.1.1.6.2 BP1 of OBIEE included the Model Checker in the Administration Tool which is very useful alongside the existing Consistency Checker, but instances can still arise when Aggregate Persistence fails with more complex RPDs and data models.In addition, as we've seen above, when OBIEE's Aggregate Persistence runs it modifies the RPD (online, i.e. in-place). In terms of minimising the number of moving parts (and thus scope for problems), it can make sense to leave the RPD untouched if possible.

An alternative approach is to instead to manually update the TimesTen aggregate with a complete refresh of data from base, using other methods. This could be options such as:

- Build the aggregate refresh into the base data ETL load

- Extract the Aggregate Persistence aggregate creation SQL and execute it manually through ODI

Aggregate Persistence can then be used either as an accelerator, to get the initial aggregates defined and built, or not at all. The best approach is to try using it, as it does speed things up, and then evaluate the different options for refreshing the aggregates that suit your particular RPD and deployment.

Limitations

However you implement it, doing a complete refresh of aggregates each time the base data changes is only viable if:- Your data volumes are small enough

and/or

- your time window in which to update the aggregates is large enough

The calculation of the aggregates will typically be pushed down on to your source database, so this also needs to have the resource capacity to recalculate the entire aggregates each time the base data has changed.

Incremental refresh

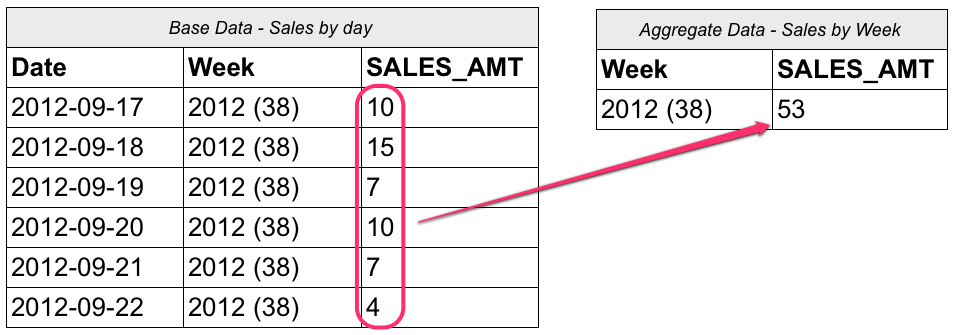

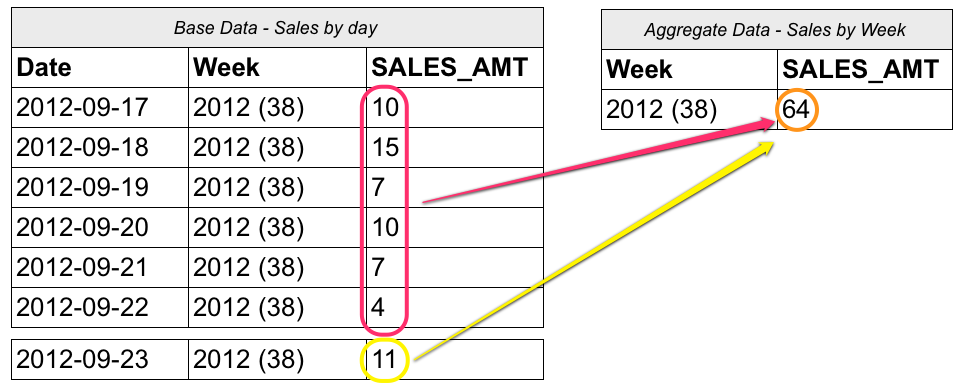

Consider an aggregate table which holds sales data as a weekly level instead of its source day level.

If a new day's data for an existing week is loaded, just that week in the aggregate needs updating:

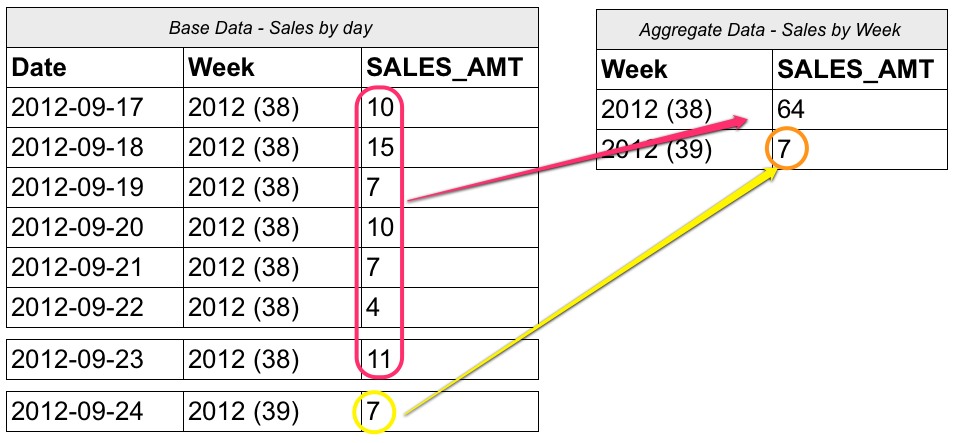

When a new day's data for a new week is loaded, a new row needs adding to the aggregate and the existing rows are untouched:

NB The above illustration is excluding consideration of late and updated data.

This approach is known as incremental refresh, and if you are working with any kind of serious data volumes it is the only properly scalable strategy for aggregates.

There are at least two ways in which we can implement incremental refresh of aggregates on Exalytics:

- Changed Data Capture (CDC) using Oracle GoldenGate (OGG)

- Custom ETL embedded in the base data load design

Incremental refresh using GoldenGate

Oracle's Fusion Middleware stack includes two components which we can utilise for incremental refresh:- Oracle GoldenGate (OGG) - Oracle's strategic real-time replication and change-data-capture tool

- Oracle Data Integrator (ODI) - Oracle's strategic ELT/ETL tool

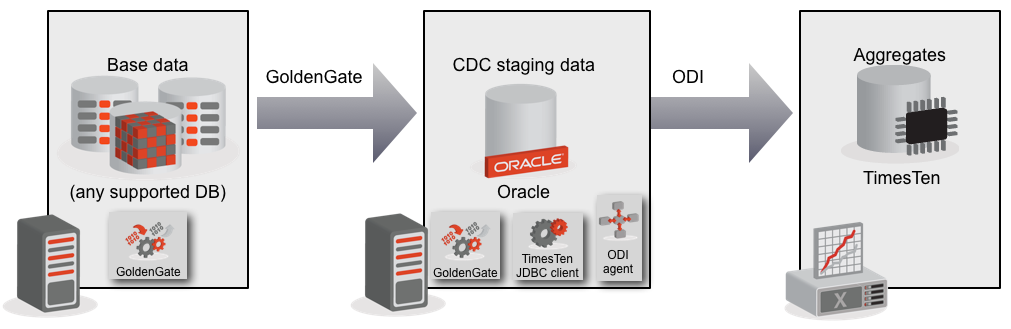

The design looks like this:

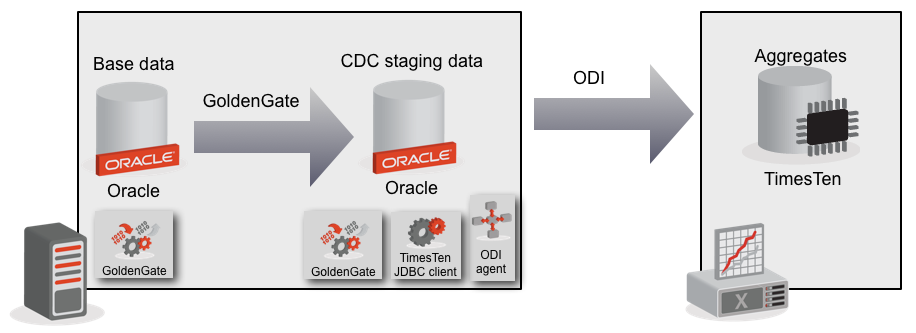

or if the source is Oracle already (and there is capacity on the server) it could be simplified to this:

or if the source is Oracle already (and there is capacity on the server) it could be simplified to this:

One key point to note in this design is that the impact on the source system holding the base data is almost none - simply the addition of GoldenGate to pick up the changes to the base data in the database logs.

One key point to note in this design is that the impact on the source system holding the base data is almost none - simply the addition of GoldenGate to pick up the changes to the base data in the database logs.

Looking at this in a bit more detail, it works like this:

- The initial aggregate creation is still done using the Summary Advisor (although doesn't have to be)

- GoldenGate replicates any changes made to the base data to the staging area

- ODI's CDC functionality is used to examine the changes and apply them incrementally to the TimesTen aggregates

Once the changes from the base data are captured and available on staging, it is over to standard ODI processing. Custom interfaces are built to apply logic to the captured changes and work out how the data should be applied to the aggregates. Here consideration needs to be given to the types of aggregate measure, and how they will be affected by changes to the base data. Simple SUM measures can be updated for the particular grain and dimension values by adding or subtracting new or deleted rows respectively. More complex measures, such as COUNT DISTINCTs might well need recalculating from base, but since the changed data will include the specific dimension values only the relevant subset of the aggregate will need recalculating.

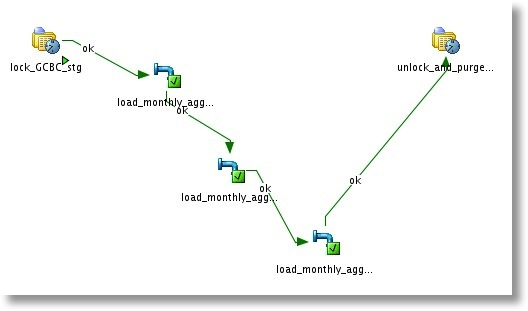

To round off the implementation, use ODI's support for a Model in CDC by adding it into a package to handle the CDC subscriber lock/unlock and journal purging.

Custom ETL incremental refresh

I mentioned that there are at least two options for incrementally refreshing aggregates held in TimesTen. An alternative to using GoldenGate/ODI would be a more invasive approach with the system that loads the base data. This in itself may be a non-starter for some implementations, and certainly detracts from the idea of Exalytics being a plug & play option.Since TimesTen support a variety of interfaces, including JDBC, ODBC, and load from flat file (ttBulkCp), whatever tool you use to load your base datawarehouse could be extended to also load the TimesTen aggregate. The key would be some form of custom control table and logic which records what dimension values have been updated in the base data, so that the corresponding aggregates can also be updated.

This is not a solution for the light-hearted, and would be best utilised in a new implementation where it could be designed in from the beginning. Retrofitting it to an existing datawarehouse may prove troublesome, and is why the above solution using GoldenGate may be a better option. Using GoldenGate, the source system is virtually untouched - only the installation of the GoldenGate agent is needed, and this operates at the low level of database logs.