OBIEE, ODI and Hadoop Part 1: So What Is Hadoop, MapReduce and Hive?

Recent releases of OBIEE and ODI have included support for Apache Hadoop, probably the most well-recognised technology within the "big data" movement, as a data source. Most OBIEE and ODI developers have probably heard of Hadoop and of MapReduce, a data-processing programming model that goes hand-in-hand with Hadoop, but haven't tried them themselves or really found a pressing reason to use them. So over this series of three articles, we'll take a look at what these two technologies actually are, and then see how OBIEE 11g and ODI 11g connect to them and make use of their features.

Hadoop is actually a family of open-source tools sponsored by the Apache Software Foundation that provides a distributed, reliable shared storage and analysis system. Designed around clusters of commodity servers (which may actually be virtual and cloud-based) and with data stored on the servers themselves, not on separate storage units, Hadoop came from the world of Silicon Valley social and search companies and has spawned a raft of Apache sub-projects such as Hive (for SQL-like querying of Hadoop clusters), HBase (a distributed, column-store database modelled on Google's "BigTable" technology), Pig (a procedural language for writing Hadoop analysis jobs that's PL/SQL to Hive's SQL) and HDFS (a distributed, fault-tolerant filesystem). Hadoop, being open-source, can be downloaded for free and run easily on most Unix-based PCs and servers, and also on Windows with a bit of mucking-around to create a Unix-like environment. The Hadoop code has also been extended, and to an extent commercialised, by companies such as Cloudera (who provide the Hadoop infrastructure for Oracle's Big Data Appliance) and Hortonworks, who can be thought of as the "Red Hat" and "SuSE" of the Hadoop world.

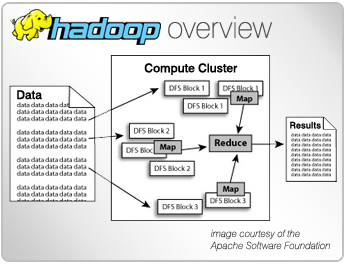

MapReduce, on the other hand, is a programming model or algorithm for processing data, typically in parallel. MapReduce jobs can be written, theoretically, in any language as long as they expose two particular methods, steps or functions to the calling program (typically, the Hadoop JobTracker):

- A "Map" function, that takes input data in the form of key/value pairs and extracts the data that you're interested in, outputting it again in the form of key/value pairs

- A "Reduce" function, which takes the "mapped" key/value pairs once they have been sorted and grouped by key, aggregates or summarises them, and then typically passes the results down the line to another MapReduce job for further processing
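To make the two steps a bit more concrete, here's a minimal, single-process sketch in Python of the classic word-count job. It only imitates the MapReduce pattern and doesn't use Hadoop at all; the function names (`map_fn`, `shuffle`, `reduce_fn`, `run_job`) and the sample lines are made up for illustration, and the `shuffle` step stands in for the sorting and grouping that a real cluster would do between the map and reduce phases.

```python
from collections import defaultdict


def map_fn(_key, line):
    """Map: take one (key, value) input pair and emit (word, 1) pairs."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group the mapped values by key, in sorted key order.

    On a real cluster this step happens between map and reduce;
    here it is simulated in memory.
    """
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_fn(key, values):
    """Reduce: collapse all the values for one key into a single output pair."""
    return key, sum(values)


def run_job(records):
    """Run map, shuffle and reduce over a list of (key, value) records."""
    mapped = [pair for key, value in records for pair in map_fn(key, value)]
    return [reduce_fn(key, values) for key, values in shuffle(mapped)]


# Hypothetical input: key = line number, value = line text.
lines = ["hadoop stores data", "hive queries hadoop data"]
result = run_job(list(enumerate(lines)))
print(result)
```

Because each call to `map_fn` only looks at one record, and each call to `reduce_fn` only looks at one key, both steps could in principle be farmed out to many servers at once, which is the part that Hadoop takes care of.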

Joel Spolsky (of Joel on Software fame, and one of the inspirations for Jon and me in setting up Rittman Mead) explains MapReduce well in this article from back in 2006, in which he tries to explain the fundamental differences between object-orientated languages like Java and functional languages like Lisp and Haskell. Ironically, most MapReduce functions you see these days are actually written in Java. But it's MapReduce's intrinsic simplicity, together with the way that Hadoop abstracts away the process of running individual map and reduce functions on lots of different servers while its job co-ordination tools make sense of all the chaos and return a result at the end, that has made it take off so well and allowed data analysis tasks to scale beyond the limits of a single server.

I don't intend to try and explain the full details of Hadoop in this blog post though, and in reality most OBIEE and ODI developers won't need to know how Hadoop works under the covers. What they will often want to do, though, is connect to a Hadoop cluster and make use of the data it contains and its data-processing capabilities, either to report against directly or, more likely, to use as an input into a more traditional data warehouse. An organisation might store terabytes or petabytes of web log data, details of user interactions with a web-based service, or other e-commerce-type information in HDFS, Hadoop's clustered, distributed, fault-tolerant file system. While they might be more than happy to process and analyse the data entirely using Hadoop-style data analysis tools, they might also want to load some of the nuggets of information derived from that data into a more traditional, Oracle-style data warehouse, or indeed make it available to less technical end-users more used to writing queries in SQL or using tools such as OBIEE.

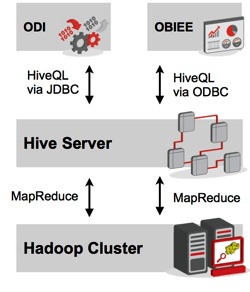

Of course, the obvious disconnect here is that distributed computing, fault-tolerant clusters and MapReduce routines written in Java can get really "technical", more technical than someone like myself generally gets involved in and certainly more technical than your average web analytics person will want to get. Because of this need to provide big-data style analytics to non-Java programmers, some developers at Facebook a few years ago came up with the idea of "Hive", a set of technologies that provides a SQL-type interface over Hadoop and MapReduce, along with supporting technologies such as a metadata layer that's not unlike the RPD that OBIEE uses. With Hive, non-programmers can query data held in Hadoop using SQL-like statements, with Hive actually creating the MapReduce routines for them. And for bonus points, because the HiveQL language that Hive provides is so like SQL, and because Hive also provides ODBC and JDBC drivers conforming to common standards, tools such as OBIEE and ODI can now access Hadoop/MapReduce data sources and analyse their data just like any other data source (more or less…).
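As a rough illustration of what "Hive creating the MapReduce routines for you" means, the sketch below takes a simple HiveQL-style aggregate query and shows, in plain Python, how its clauses could line up with a map step and a reduce step. The `weblog` table, its columns and the sample rows are all invented for the example, and the functions are hypothetical; the jobs that Hive really generates are more involved than this, so treat it as a mental model rather than a description of Hive's internals.

```python
from collections import defaultdict

# Illustrative HiveQL only; the table and column names are made up.
HIVEQL = """
SELECT page, SUM(bytes) AS total_bytes
FROM weblog
WHERE status = 200
GROUP BY page
"""

# Hypothetical rows from the weblog table: (page, status, bytes).
WEBLOG = [
    ("/home", 200, 512),
    ("/home", 200, 256),
    ("/cart", 404, 0),
    ("/cart", 200, 128),
]


def map_row(row):
    """Map: apply the WHERE filter, then emit (GROUP BY key, value to aggregate)."""
    page, status, nbytes = row
    if status == 200:
        yield page, nbytes


def reduce_group(page, byte_counts):
    """Reduce: apply the SUM() aggregate to all the values for one page."""
    return page, sum(byte_counts)


def run_query(rows):
    """Simulate the map, group-by-key and reduce phases in memory."""
    groups = defaultdict(list)
    for row in rows:
        for key, value in map_row(row):
            groups[key].append(value)
    return [reduce_group(key, values) for key, values in sorted(groups.items())]


totals = run_query(WEBLOG)
print(totals)
```

The point of the sketch is that the person writing the query only ever sees the four lines of HiveQL at the top; everything underneath is the sort of plumbing that Hive generates and Hadoop then runs across the cluster.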

So where this leaves us is that the 11.1.1.7 release of OBIEE can access Hadoop/MapReduce sources via a HiveODBC driver, whilst ODI 11.1.1.6+ can access the same sources via a HiveJDBC driver. We'll cover how OBIEE and then ODI access Hadoop/MapReduce data sources in the next two articles in this series, and also try to answer the question of why you'd want to do this, and what benefits OBIEE and ODI might provide over more "native" or low-level big data query and analysis tools such as Cloudera's Impala or Google's Dremel (for data analysis) or Hadoop technologies such as Pig or Sqoop (for data loading and processing). Check back tomorrow for the next instalment in the series.