Using Oracle TimesTen With Oracle BI Applications (Part 2)

Last time I posted an introductory blog to this series of posts on using Oracle TimesTen with Oracle BI Applications (OBIA). Today, I am going to look at some of the OBIA structures and then start to explore the ways we can use TimesTen with OIBA. As TimesTen may be a new topic for some readers, I will also talk about some of the features of TimesTen and some of the differences from Oracle 11g that we need to allow for in building a solution that incorporates TimesTen.

As I described, OBIA consists of three principal pieces: an ETL system to extract source data, stage, transform it and, finally, publish it to a data warehouse; a database to host both the staged data and the target data warehouse tables; and OBI to provide dashboards and other analysis. During the data load process the ETL extracts the propriety formatted data of the source systems (EBS, JD Edwards, SAP, whatever) and transforms it into a common model that is usable by the reporting layer. The OBIA product development team have done most of the legwork in mapping the complex ERP, HR and CRM application schemas through to the reporting database, however some work may be needed to deal with source customisations such as the use of flexfields. From our point of view we are only concerned with two areas, the OBIA database's warehouse layer tables and how they are mapped into the OBI physical and logical data models. How data is extracted and staged is not of so important for our TimesTen work

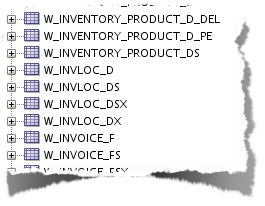

In OBIA we can readily find the tables of interest. The table names all begin with 'W_' for warehouse, have a content related name such as INVOICE and end with a suffix that indicates the usage. There are three suffixes that we need to consider for loading into Oracle TimesTen:

- _F for the fact tables

- _D for the dimension tables

- _A for aggregated fact tables

Looking at the structure of a fact table we can see that we have columns for the measures and key columns that are used to join to the related dimension tables keys, in other words a Star Schema. On the face of it it sounds like we can take the simple approach and copy the required tables to TimesTen or perhaps amend the ETL process to use TimesTen as the target database. Remember that TimesTen is an in-memory database and every object transferred to the database needs to be loaded into the TimesTen server RAM; we are thus unlikely to have enough RAM to store all of the OBIA warehouse tables. We must also remember that not all of the RAM on the server can be dedicated to TimesTen table storage. In addition to the RAM needed by the OS we may well need to dedicate memory for other processes running on the same platform - in the case of Exalyitics this could well be both Essbase and OBI. Even then not all of the memory allocated to TimesTen can be used for table storage. There is a significant amount need for working space (similar to the temporary tablespace in Oracle 11g) and space may be need for any other TimesTen database structures such as indexes, materialized view and PL/SQL packages.

Building our TimesTen Database

Having downloaded and installed the Oracle TimesTen software we need to create a TimesTen database server. This process is well described in the quickstart.html document in the The TimesTen install directory. We need to create both a TimesTen server and a TimesTen client. Both of these are configured as ODBC data sources; on Linux we will have to edit odbc.ini files but for Microsoft Windows we can use the Data Sources applet and create our connections in the GUI. Once the database is running we can connect to using ODBC or JDBC and start to create tables. I tend to use SQLDeveloper for this as I am used to the tool, if you like using the command line there is a program called ttisql which looks quite similar to SQL/Plus. We will need to use ttisql anyway to physically instantiate the database for the first timeMy TimesTen Server is called DW_PETE_TT. As it is running on a small testing environment I have kept the memory size allocations down to 1.5GB for both data and TEMP. A real implementation will have properly sized allocations. I have also created a user (A_TEST) and given the user the necessary grants to create sessions and tables

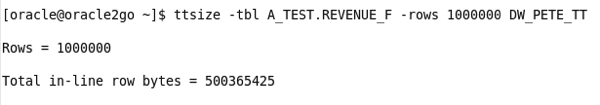

As I have already mentioned, Oracle TimesTen is a relational database so the basic CREATE TABLE syntax (less any storage specific features such as assigning tablespaces) will work. So by extracting the DDL from the OBIA W_xxxx_F and W_yyyy_D tables we can create empty tables of the same structure in TimesTen. We can then use the TimesTen ttsize utility to estimate the size of the table based on structure and expected row counts. Working with the REVENUE_F table in my sample source schema I have 1,000,000 rows. In Oracle 11gR2 this table occupies about 140MB of disk storage - the same table is estimated to be around 500MB when created like for like in Oracle TimesTen - that's about 3.5 times larger.

Being this much larger is clearly bad news, we may well struggle to fit our database into available RAM (don't forget we need TEMP space too) ; the initial load of data from disk to the database will take a longer as more bytes need to be read from disk, and our queries will need to trawl through a large amount of memory to find our results. We can however improve things. If we are on Exalytics we can use column compression and even if not, we can modify our table structures to use native TimesTen datatypes to save on space. The first thing to tackle is the datatype issue. We can do this manually by changing our create table statement or we can use a new Oracle TimesTen utility (ttImportFromOracle) that may be obtainable from Oracle's TimesTen product team. This utility will inspect the source table and content and generate SQL scripts to: create the TimesTen table with the appropriate column data types and if using Exalytics columnar compression, to extract the data from source and load the target and finally update optimiser stats.

Lets revise the structure of the TimesTen table to use native numeric types - I'll use TT_INTEGER for the key columns and BINARY_FLOAT for the numeric measures, doing this brings the size estimate down to 228313208 bytes, still larger than the original table in Oracle but less than half the size of our original straight copy of the structure. There is further scope for size reduction through optimising the VARCHAR2 columns.

-- Using Oracle Datatypes

create table A_TEST.REVENUE_F (

SHIPTO_ADDR_KEY NUMBER,

OFFICE_KEY NUMBER,

EMPL_KEY NUMBER,

PROD_KEY NUMBER,

ORDER_KEY NUMBER,

REVENUE NUMBER,

UNITS NUMBER,

DISCNT_VALUE NUMBER,

BILL_MTH_KEY NUMBER,

BILL_QTR_KEY NUMBER,

BILL_DAY_DT DATE,

ORDER_DAY_DT DATE,

PAID_DAY_DT DATE,

DISCNT_RATE NUMBER,

ORDER_STATUS VARCHAR2(20 BYTE) INLINE,

CURRENCY VARCHAR2(3 BYTE) INLINE,

ORDER_TYPE VARCHAR2(20 BYTE) INLINE,

CUST_KEY NUMBER,

SHIP_DAY_DT DATE,

COST_FIXED NUMBER,

COST_VARIABLE NUMBER,

SRC_ORDER_NUMBER VARCHAR2(20 BYTE) INLINE,

ORDER_NUMBER NUMBER);

-- using TimesTen Native Datatypes

CREATE TABLE "REVENUE_F_NATIVE"

( "SHIPTO_ADDR_KEY" TT_INTEGER,

"OFFICE_KEY" TT_INTEGER,

"EMPL_KEY" TT_INTEGER,

"PROD_KEY" TT_INTEGER,

"ORDER_KEY" TT_INTEGER,

"REVENUE" BINARY_FLOAT,

"UNITS" BINARY_FLOAT,

"DISCNT_VALUE" BINARY_FLOAT,

"BILL_MTH_KEY" TT_INTEGER,

"BILL_QTR_KEY" TT_INTEGER,

"BILL_DAY_DT" DATE,

"ORDER_DAY_DT" DATE,

"PAID_DAY_DT" DATE,

"DISCNT_RATE" BINARY_FLOAT,

"ORDER_STATUS" VARCHAR2(20 BYTE),

"CURRENCY" VARCHAR2(3 BYTE),

"ORDER_TYPE" VARCHAR2(20 BYTE),

"CUST_KEY" TT_INTEGER,

"SHIP_DAY_DT" DATE,

"COST_FIXED" BINARY_FLOAT,

"COST_VARIABLE" BINARY_FLOAT,

"SRC_ORDER_NUMBER" VARCHAR2(20 BYTE),

"ORDER_NUMBER" TT_INTEGER

) ;

/* Size comparison

[oracle@oracle2go ~]$ ttsize -tbl A_TEST.REVENUE_F -rows 1000000 DW_PETE_TT

Rows = 1000000

Total in-line row bytes = 500365425

Total = 500365425

[oracle@oracle2go ~]$ ttsize -tbl A_TEST.REVENUE_F_NATIVE -rows 1000000 DW_PETE_TT

Rows = 1000000

Total in-line row bytes = 228313201

*/

On Exalyitcs we have access to Columnar Compression. TimesTen Compression works by replacing data with tokens held in a dictionary. When we create a compressed table we specify a column (or group of columns) to compress and the token size (based on number of distinct values): 1 byte tokens support 256 distinct values, 2 byte up to 65,535 values, the largest token is 4 bytes. It is obvious that high compression is only possible for character strings longer than the replacement token. We can repeat the compression process on other columns or column groups, each compressed column has its own token dictionary table.

Next time I will look moving data into our TimesTen table and techniques we can use to boost query performance