Oracle Openworld 2013 : Reflections on Product Announcements and Strategy

I'm sitting writing this at my desk back home, with a steaming mug of tea next to me and the kids pleased to see me after I've been away for eight days (or at least my wife is pleased to hand them over to me after looking after them for eight days). It was an excellent Oracle Openworld - probably the best in the ten years I've been going in terms of product announcements, and if you missed any of my daily updates, here are the links to them:

- Oracle Openworld 2013 Day 0: Previewing the Week Ahead

- Oracle Openworld 2013 Day 1 : User Group Forum, News on Oracle Database In-Memory Option

- Oracle Openworld 2013 Day 2: Exalytics, TimesTen and Essbase Futures

- Oracle Openworld 2013 Days 3 & 4 : Oracle Cloud, OBIEE and ODI Futures

- Deep Dive into OBIA 11.1.1.7.1 – Overview (Mark Rittman)

- Deep Dive into OBIA 11.1.1.7.1 – Data Integration (Stewart Bryson)

- Agile BI Development using OBIEE, ODI and Golden Gate (Stewart Bryson)

- Hyperion Profitability & Cost Management – Integration of Standard & Detailed Profitability (Venkatakrishnan J)

- Make the Most of your Exalytics and BI Investments with Enterprise Manager 12c (Mark Rittman, Henrik Blixt, Dhananjay Papde)

- Birds of a Feather Session: Best Practices for TimesTen on Exalytics (Mark Rittman, Chris Jenkins, Tony Heljula)

- Oracle BI EE Integration with Hyperion Data Sources (Venkatakrishnan J)

- Oracle Data Quality Solutions, Oracle Data Integrator and Oracle GoldenGate on Exadata (Jérôme Françoisse and Gurcan Orhan)

- Innovation in BI: OBIEE against Essbase and Relational (Stewart Bryson and Edward Roske)

- How to Handle Dev/Test/Prod with Oracle Data Integrator (Jérôme Françoisse and Gurcan Orhan)

- Oracle Endeca User Experience Case Study at Barclays (James Knight and Kelvin Lau)

- Configuring OBIA 11.1.1.7.1 on ODI - Deep Dive (Mark Rittman, Kevin McGinley and Hari Cherukupalli)

First off - Exalytics. Clearly there's a lot of investment going into the Exalytics offering, both from the hardware and the software sides. For hardware, it's just really a case of Oracle keeping up with additions to Sun's product line, and with the announcement of the T5-8 model we're now up to 4TB of RAM and 128 SPARC CPU cores - aimed at the BI consolidation market, where 1 or 2TB of RAM quickly goes if you're hosting a number of separate BI systems. Cost-wise - it's correspondingly expensive, about twice the price of the X3-4 machine, but it's got twice the RAM, three times the CPU cores and runs Solaris, so you've got access to the more fine-grained workload separation and virtualisation that you get on that platform. Not a machine that I can see us buying for a while, but there's definitely a market for this.

With Exalytics though, you could argue that it's been the software that's underwhelmed so far, as opposed to the hardware. The Summary Advisor is good, but it doesn't really handle the subsequent incremental refresh of the aggregate tables, and TimesTen itself, whilst fast and powerful, hasn't had a great "out of the box" experience - in the wrong hands, it can give misleadingly-slow response times, something I found out for myself on the blog a few months ago. So it was interesting to hear some of the new features that we're likely to see in "Exalytics v2.0", probably late in calendar year 2014: an updated aggregate refresh mechanism based on DAC Server technology and with support for GoldenGate; new visualisations including data mash-up capabilities that I'm guessing we'll see as exclusives on Exalytics and Oracle's cloud products; enhancements coming for Essbase that'll make it easier to spin off ASO cubes from an OBIEE repository; and of course, improvements to TimesTen to match those coming in the core Oracle database - in-memory analytics.

And what an announcement that was - in-memory column-store technology within the Oracle database, not predicated on using Exadata, and all running transparently in the background with minimal DBA setup required. Now in reality, this isn't the first in-memory Oracle database offering - the Exadata boxes in previous Openworld presentations were also positioned as in-memory, although that was flash memory, not DRAM - and Oracle aren't the first vendor to offer an in-memory column-store as a feature. But given that it'll be available to all Oracle 12.1.2 databases that license the In-Memory option, and that it'll be so easy to administer - in theory - it's a potential industry game-changer.

Of course the immediate questions on my lips after the in-memory Oracle Database announcement were "what about TimesTen?" and "what about TimesTen's role in Exalytics?", but Oracle played this very well in the end. TimesTen will gain similar capabilities, implemented in a slightly different way as TimesTen already stores its data in memory, albeit in row-store format. In fact, TimesTen can then take on the role of a business-controlled, mid-tier analytic "sandbox", probably receiving new in-memory features faster than the core Oracle database as it's got fewer dependencies and a shorter release cycle, but complementing the Oracle database and its own, more large-scale in-memory features. And that's not forgetting those customers with data from multiple, heterogeneous sources, or those that can't afford to stump up for the In-Memory option for all of the processors in their data warehouse database server. So - fairly internally-consistent at least at the product roadmap level, and we'll be looking to get on any betas or early adopter programs to put both products through their paces.
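For anyone wondering why the row-store versus column-store distinction matters for analytics, here's a minimal Python sketch of the general idea. It's purely illustrative - the table, column names and functions are all made up for the example, and it says nothing about how Oracle or TimesTen actually implement their in-memory features. The point is just that an aggregate over one measure only has to touch one contiguous list in a column layout, whereas in a row layout every whole row gets visited.

```python
# Illustrative only: a toy table held in two layouts. All names and values
# here are hypothetical and unrelated to Oracle's or TimesTen's internals.

# Row-store layout: one record per row, all attributes kept together.
rows = [
    {"order_id": 1, "region": "EMEA", "revenue": 120.0},
    {"order_id": 2, "region": "APAC", "revenue": 80.0},
    {"order_id": 3, "region": "EMEA", "revenue": 250.0},
]


def to_columns(rows):
    """Pivot row-oriented records into one list per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def total_row_store(rows, measure):
    """Aggregate by visiting every row and picking out one attribute."""
    return sum(row[measure] for row in rows)


def total_column_store(columns, measure):
    """Aggregate by scanning only the single column that is needed."""
    return sum(columns[measure])


columns = to_columns(rows)
print(total_row_store(rows, "revenue"))
print(total_column_store(columns, "revenue"))
```

Both functions return the same total; the difference is how much data each has to walk through to get there, which is what makes the columnar layout attractive for the scan-and-aggregate queries that BI tools generate.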

The other major announcement that affects OBIEE customers is, of course, OBIEE in the Cloud - or "Reporting-as-a-Service" as Oracle referred to it during the keynotes. This is one of the components of Oracle's new "platform-as-a-service" or PaaS offering, alongside a new, full version of Oracle 12c based on its new multitenant architecture, identity-management-as-a-service, documents-as-a-service and so on. What reporting-as-a-service will give us isn't quite "OBIEE in the cloud", or at least, not as we know it now; Oracle's view on platform-as-a-service is that it should be consumer-level in terms of simplicity of setup and quality of user interface, it should be self-service and self-provisioning, and it should be simple to sign up for with no separate need to license the product. So in OBIEE terms, what this means is a simplified RPD/data model builder, simple options to upload and store data (also in Oracle's cloud), and automatic provisioning using just a credit card (although there'll also be options to pay by PO number etc. for the larger customers).

And there are quite a few things that we can draw out of this announcement; first, it's squarely aimed - at least at the start - at individual users, departmental users and the like looking to create sandbox-type applications, most probably also linking to Oracle Cloud Database, Oracle Java-as-a-Service and the like. It won't, for example, be possible to upload data to this service's datastore using conventional ETL tools, as the only datasource it will connect to, at least initially, will be Oracle's Cloud Database schema-as-a-service, which only allows access via ApEx and HTTP, because it's a shared service and giving you SQL*Net access could compromise other users. In the future, it may well connect to Oracle's full DBaaS which gives you a full Oracle instance, but for now (as far as I've heard) there's no option to connect to an on-premise data source, or Amazon RDS, or whatever. And for this type of use-case that may be fine - you might only want a single data source, and you can still upload spreadsheets which, if we're honest, is where most sandbox-type applications get their data from.

This Reporting-as-a-Service offering might well be where we see new user interface innovations coming through first, though. I get the impression that Oracle plan to use their Cloud OBIEE service to preview and test new visualisation types first, as they can iterate and test faster, and the systems running on it are smaller in scope and probably more receptive to new features. Similar to Salesforce.com and other SaaS providers, it may well be the case that there's a "current version" and a "preview version" available at most times, with the preview becoming the current after a while and the current being something you've got 6-12 months to switch from after that point. And given that Oracle will know there's an Oracle database schema behind the service, it's going to make services such as the proposed "personal data mashup" feature possible, where users can upload spreadsheets of data through OBIEE's user interface, with the data then being stored in the cloud and the metrics then being merged in with the corporate dataset, with the source of each bit of data clearly marked. All this is previews and speculation though - I wouldn't expect to see this available for general use until the middle of 2014, given the timetable for previous Oracle cloud releases.
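To make the "personal data mashup" idea a bit more concrete, here's a rough Python sketch of what merging user-uploaded metrics with a corporate dataset, while keeping the source of each value marked, might look like. To be clear, this is my own illustration and not anything Oracle showed: the `Metric` class, the `mash_up` function, the field names and the source labels are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sketch of a "personal data mashup": corporate metrics and
# user-uploaded spreadsheet rows end up in one dataset, with every value
# tagged by where it came from. Names and fields are assumptions.


@dataclass(frozen=True)
class Metric:
    region: str
    measure: str
    value: float
    source: str  # provenance tag, e.g. "corporate" or "user_upload"


def tag_rows(raw_rows, source):
    """Convert raw dict rows into Metric records carrying a source tag."""
    return [
        Metric(r["region"], r["measure"], float(r["value"]), source)
        for r in raw_rows
    ]


def mash_up(corporate_rows, uploaded_rows):
    """Return corporate rows followed by uploaded rows, each tagged by source."""
    return tag_rows(corporate_rows, "corporate") + tag_rows(uploaded_rows, "user_upload")


corporate = [{"region": "EMEA", "measure": "revenue", "value": 100}]
uploaded = [{"region": "EMEA", "measure": "forecast", "value": "110.5"}]

for metric in mash_up(corporate, uploaded):
    print(metric)
```

The design point is simply that the provenance travels with each value, so a report built on the merged data can always show which numbers are "official" and which came from somebody's spreadsheet.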

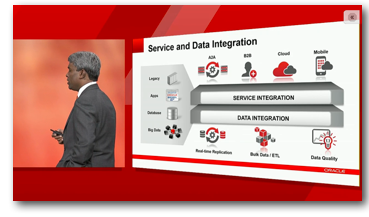

The final product area where I was particularly interested in hearing about future direction was Oracle's Data Integration and Data Quality tools. We've been on the ODI 12c beta for a while and we're long-term users of OWB, EDQ, GoldenGate and the other data integration tools; moreover on recent projects, and in our look at the cloud as a potential home for our BI, DW and data analytics projects, it's become increasingly clear that database-to-database ETL is no longer what data integration is solely about. For example, if you're loading a data warehouse in the cloud, and the source database is also in the cloud, does it make sense to host the ETL engine, and the ETL agents, on-premise, or should they live in the cloud too?

And what if the ETL source is not a database, but a service, or an application such as Salesforce.com that provides a web service / RESTful API for data access? What if you want to integrate data on-the-fly, like OBIEE does with data federation but in the cloud, from a wide range of source types including services, Hadoop, message buses and the like? And where do replication, quality-of-service management, security and so forth come in? In my view, ODI 12c and its peers will probably be the last of the "on-premise", "assumed-relational-source-and-target" ETL tools, with ETL instead following apps and data into the cloud, assuming that sources can be APIs, messages, big data sources and so forth as well as relational data, and it'll be interesting to see what Oracle's Fusion Middleware and DI teams come up with next year as their vision for this technology space. Thomas Kurian's keynote touched on this as a subject, but I think we're still a long way from working out what the approach will be, what the tooling will look like, and whether this will be "along with", or "instead of" tools like ODI and Informatica.

Anyway - that's it for Openworld for me, back to the real world now and time to see the family. Check back on the blog next week for normal service, but for now - laptop off, kids time.