Thoughts on ETL in the Cloud

In a few weeks’ time I’m presenting at the BIWA Summit 2014 on running OBIEE in the Cloud, with the idea being that I’ll go through the various options for deploying OBIEE in public clouds, including Amazon EC2 and Oracle’s own cloud offering. But the more I thought through the various architecture and product options, the more I began to think that ETL - getting data into the BI system, from wherever it is now - is the key enabling technology. The vast majority of BI systems use data that’s refreshed on a daily, weekly or even real-time basis, and on-premise we tend to use tools such as Oracle Data Integrator, Oracle Warehouse Builder, Informatica PowerCenter and the like to move data from source to target, transforming and cleansing it en route.

When you deploy a BI platform such as OBIEE into the cloud, you’ve got a few options around where you hold its data, and how you do the ETL:

- You can keep the data warehouse database on-premise, along with the ETL tool, and just run the BI part in the cloud

- You can move the database into the cloud as well (using a service such as Amazon RDS), but keep the ETL tool on-premise

- You can try and move the ETL into the cloud too - either hosting a tool like ODI in its own cloud VM, or making use of an ETL-as-a-service offering such as Informatica Cloud.

Things get more interesting when part of your IT infrastructure sits on-premise, and some sits in the cloud. It gets even more interesting when your data sources are also software-as-a-service (SaaS), for example Salesforce.com and Workday, where data access is via APIs rather than direct SQL*Net connections.
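To make the API point a bit more concrete, here’s a minimal Python sketch of what extraction from a SaaS source tends to look like: you page through results with a cursor rather than issuing one SQL query. The `fake_fetch_page` function and the `ACCOUNTS` data are purely hypothetical stand-ins for a real REST endpoint, not the Salesforce.com or Workday APIs themselves.

```python
from typing import Callable, Iterator, Optional

# Hypothetical in-memory stand-in for the records behind a SaaS REST endpoint.
ACCOUNTS = [{"id": i, "name": f"Account {i}"} for i in range(1, 8)]


def fake_fetch_page(cursor: Optional[int], page_size: int = 3):
    """Return one page of records plus the cursor for the next page.

    The returned cursor is None once the last page has been served.
    """
    start = cursor or 0
    page = ACCOUNTS[start:start + page_size]
    has_more = start + page_size < len(ACCOUNTS)
    return page, (start + page_size if has_more else None)


def extract_all(fetch_page: Callable) -> Iterator[dict]:
    """Keep following the cursor until the API reports no further pages."""
    cursor = None
    while True:
        records, cursor = fetch_page(cursor)
        yield from records
        if cursor is None:
            break


rows = list(extract_all(fake_fetch_page))
print(f"Extracted {len(rows)} rows")
```

In a real integration the fetch function would also have to deal with authentication, rate limits and retries, which is a large part of what the cloud application adapters in the ETL tools are doing for you.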

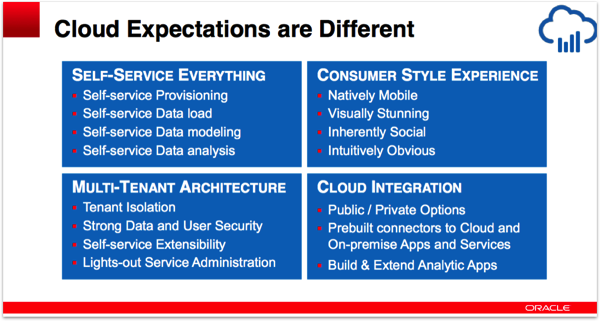

So where do we start with ETL-in-the-cloud? One place to start is Oracle’s own slide-deck on OBIEE in the cloud, where they set out what they see as the key differences between traditional on-premise software and software delivered via SaaS:

In this SaaS world, the expectation is that:

- The infrastructure, software etc is already in place, is patched regularly for you in the background, and new features emerge regularly

- You’re on a shared platform, but you won’t be aware of other tenants on a day-to-day basis

- The “innards” and workings are hidden from you - you just consume a service

- It’s cloud-native - from connectivity to cloud apps through to common social sign-ons, commenting and collaboration

- Everything is thin-client, web-based

- Everything is self-service, self-provisioning, right from creation of your user account

- Sign-up is on-demand, paid by the hour/day etc, and with no long setup process

What this means for data integration (and ODI) then, in my opinion, is:

- It must be available as a service - log in, create data source connections, define mappings, run code

- The internals typically would be hidden - so no need to know about agents, GoldenGate vs. ODI, choices of knowledge modules etc

- Web-based data flow modellers as well as admin tools

- Connectors through to Salesforce.com and other cloud apps

- Connectors through to Hadoop, Hive, JSON compatibility etc

- Instant sign-up and charging by time/use

With bonus points for:

- Low-cost or free option to get you started

- A consistent story that goes through from messaging, application integration through to large-scale data movement

- Easy clustering, scale-out, deployment on services such as Amazon EC2

- Options to deploy on-premise or cloud, and run integration tasks on-premise or cloud

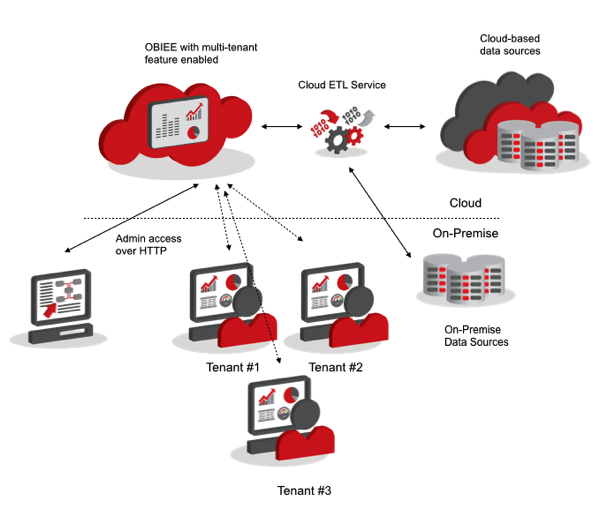

Looking at it architecturally, ETL-in-the-cloud would sit between sources and targets, both on-premise and in the cloud, providing the vital data movement capability between source systems and the BI Platform itself - most probably to its own database-in-the-cloud, running the data warehouse.

So what are the options then, if you want to use cloud-based ETL to load a cloud-based data warehouse and BI system? To my mind, there are four main options:

- If you’re using a cloud-based database service such as Amazon’s Redshift, or Oracle’s own public cloud “schema-as-a-service” database, you can use the ETL tools provided with the service

- You can try and put ODI in the cloud, maybe using an Amazon EC2 instance running WebLogic and a JEE agent, with another instance providing the metadata repository database

- Or, as an alternative to ODI, do the same for one of the open-source ETL tools

- You can use one of the new “cloud-native” ETL-as-a-service products, such as Informatica Cloud or SnapLogic

- Or - you can try and abstract away ETL altogether - more on this later on.

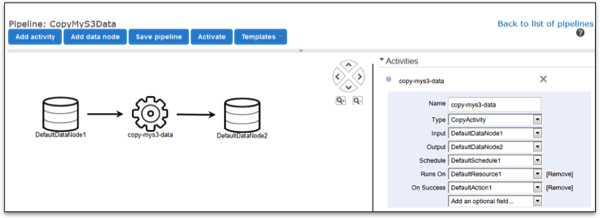

The first option really applies if (a) you’re using a service such as Amazon Web Services’ EC2, (b) your data also most probably sits in AWS cloud storage, (c) you want to move data from your source application or export into the main data warehouse database, and (d) you don’t really need to integrate data from different sources. AWS provides a number of options for loading data into EC2 and the various database and analytic services they provide, including AWS Data Pipeline (shown in the screenshot below), the most “ETL-like” of their loading services, along with a sneakernet service, and the new Amazon Kinesis service for real-time streaming data.

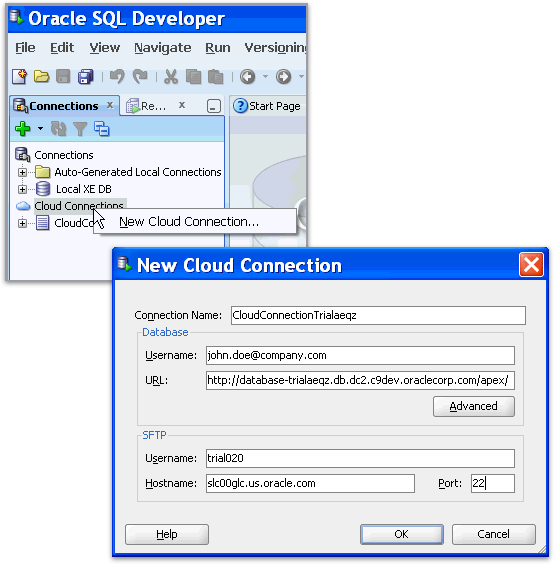

Oracle’s Cloud Database service at the moment restricts you to a single schema, and more importantly there’s no SQL*Net access, so uploading data is either through SQL Developer (which has a special, custom Oracle Cloud connector), or through utilities provided via APEX.

Clearly this sort of web-based data loading isn’t designed for data warehouse scenarios, and in this initial iteration Oracle’s cloud database is probably designed to support cloud applications running on Oracle’s cloud Java service, with OBIEE-in-the-cloud mainly designed for reporting against this data, and personal “sandbox” scenarios. What’s not immediately obvious when you use these cloud ETL tools is that each one has its own admin console, its own API, its own scheduler, its own metadata - which is usually the point at which you decide you need an enterprise ETL tool.

So what about the idea of running Oracle Data Integrator in the cloud? There are a few options here that I can think of:

- Create a cloud server instance that runs ODI, along with all of its metadata repositories, WebLogic instances and agents in a single location

- Run the repository database either on-premise or on another cloud server (or even Amazon’s RDS service), with agents running either in their own cloud servers, or on the cloud servers holding the target database

- Build ODI into the target platform, as Oracle have done with BI Apps 11.1.1.7.1, along with management tools to hide the complexity and workings of ODI

The first option sounds the “neatest” in terms of a wholly-cloud deployment, as all the parts of ODI are held in one place, and you can think of it as an appliance or service. But ODI’s Extract-Load-Transform approach ends up complicating things in a cloud-only deployment; the target platform (for example one of the Postgres-compatible cloud databases) might not support all of the target-layer transformations you want, and the integration part can’t really sit in the ODI cloud instance, unless you run it hub-and-spoke style and either use a local database for transformations, or use the in-memory feature within the agents. In fact where ODI makes most sense is in a hybrid on-premise/cloud setup, where most of your data resides on-premise, as does your ETL process, with the cloud being gradually migrated to and in the meantime used alongside on-premise applications.
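To show why the target database matters so much with this style of tool, here’s a rough Python sketch of the E-L-T pattern: rows are landed untouched in a staging table, and the transformation is then pushed down as set-based SQL running inside the target. It uses `sqlite3` only as a stand-in for the target data warehouse, and the table and column names are made up for illustration; it isn’t what ODI itself generates.

```python
import sqlite3

# sqlite3 is only a stand-in for the target data warehouse database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE stg_orders  (order_id INTEGER, customer TEXT, amount TEXT);
    CREATE TABLE fact_orders (order_id INTEGER, customer TEXT, amount REAL);
""")

# Extract + Load: source rows are landed in the staging table as-is.
source_rows = [(1, " acme ", "10.50"), (2, "Globex", "7"), (3, "ACME", "2.25")]
conn.executemany("INSERT INTO stg_orders VALUES (?, ?, ?)", source_rows)

# Transform: cleansing and type conversion happen inside the target database,
# so they depend entirely on the SQL functions that database supports.
conn.execute("""
    INSERT INTO fact_orders (order_id, customer, amount)
    SELECT order_id, UPPER(TRIM(customer)), CAST(amount AS REAL)
    FROM stg_orders
""")

totals = dict(conn.execute(
    "SELECT customer, SUM(amount) FROM fact_orders GROUP BY customer"
).fetchall())
print(totals)
```

If the target platform lacks a function or SQL construct that the transform step relies on, the mapping either has to be rewritten or the work moved to another engine, which is exactly the complication described above.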

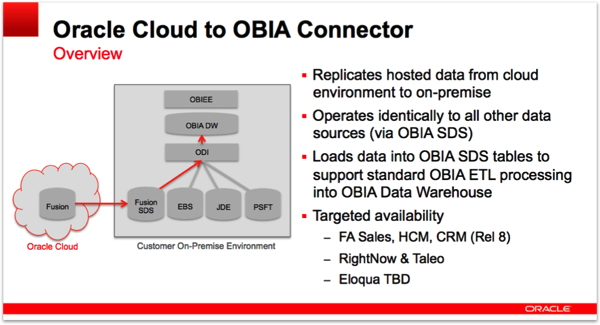

Oracle’s white paper on cloud data integration majors on this type of scenario, with Oracle GoldenGate also used to replicate data between the two environments. At this year’s Oracle OpenWorld the BI Apps team also announced cloud adapters for the BI Applications, initially used to extract data from Fusion Apps in the Cloud back to the on-premise BI Apps data warehouse, with other Oracle cloud application data sources following on.

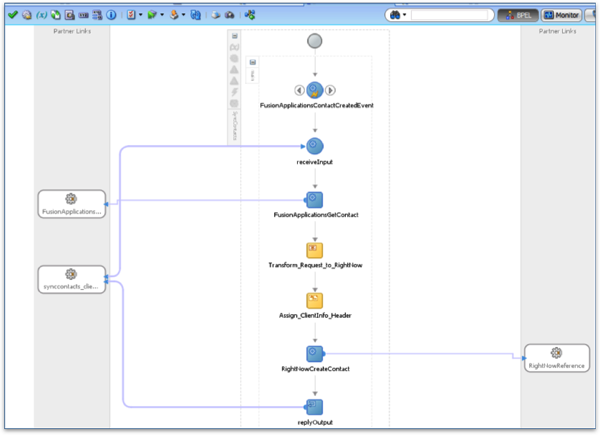

Where things get interesting with Oracle’s approach is when it’s non-Oracle cloud applications that we’re looking to integrate with. “Cloud Integration – A Comprehensive Solution”, another Oracle white paper, describes techniques to integrate with Salesforce.com, Workday and the Fusion Apps, but all of this takes place through Oracle SOA Suite and Oracle JDeveloper, products that are way too technical for the average ETL developer (or customer implementor).

In fact there are two obvious things missing here that are present in the Cloud Database and Cloud OBIEE offerings that Oracle recently launched:

- It’s not a “service” - you can’t just create an account, design some mappings, load a target - the assumption is that each system is single tenant, perpetual license, self-install

- It’s not consumer-level in terms of simplicity - there are bits of ODI, bits of GoldenGate, bits of SOA, bits of JDeveloper - all good bits, but not a single integration-platform-as-a-service

But it’s probably fine if you’re running a hybrid on-premise/cloud strategy, and as I’ll talk about later on, the ELT approach does still have some distinct advantages over cloud-native ETL tools.

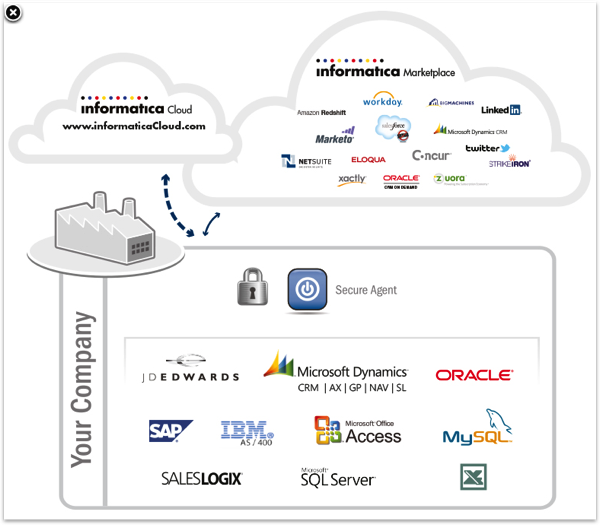

What about the more recent, “cloud-native” ETL tools such as SnapLogic and Informatica Cloud? Informatica Cloud is probably the easiest product to understand if, like me, you’re from an ODI background. What Informatica have done here in terms of architecture is move the metadata and application-layer parts of Informatica PowerCenter into the cloud, add a bunch of cloud application adapters (including a third-party marketplace for them), but still do the actual data integration on-premise, using a cut-down version of the main PowerCenter engine.

Some of the nicer features in this setup are its multi-tenant and “integration-as-a-service” nature, the way it deals with firewall issues (do the integration on-premise), and the interoperability with traditional Informatica PowerCenter, where you can publish custom mapplets from on-premise Informatica and push them into the cloud version. If Oracle came out with an ODI-in-the-cloud service, I think it’d look a lot like this.

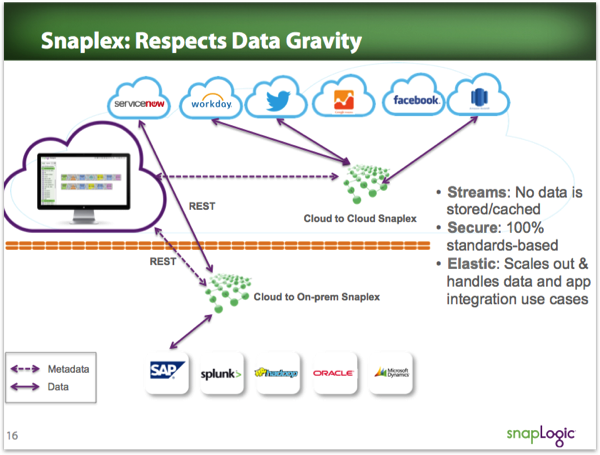

To my mind though, the most impressive of the cloud integration vendors is SnapLogic. Their SnapLogic Integration Cloud product looks like it was designed first and foremost to run “in the cloud”, it’s available as a service, and the data integration designer focuses on data paths and application integration rather than the low-level, database-centric approach traditional ETL tools take.

What’s particularly impressive to me is the way that they’ve taken concepts used in tools like ODI - agents that perform the data integration tasks, running as standalone servers - and built on them to create the concept of “snaplexes”, collections of JVMs that can sit in the cloud or on-premise, elastically scale up to handle larger workloads, and use Amazon’s EC2 and S3 compute and storage clouds to perform any data transformations en route. Data being transformed in SnapLogic streams through the system with low latency and using web protocols, and the whole thing is just neatly designed to run natively on the cloud.

Where tools like SnapLogic do fall short though is on the “last mile” into the data warehouse, where tools like ODI come with lots of templates, database integration features and so forth to handle slowly-changing dimensions, dimension lookups, analytic functions and the like, but cloud-native tools really focus on basic transformations and getting the data from source to target. Where this of course gets interesting is when SnapLogic is used to load databases such as Amazon Redshift, for example, in combination with Tableau, as some of the ETL tool features we’re used to using with tools such as ODI and Informatica just aren’t there yet. What this means in practice is that if you’re looking to move OBIEE into the cloud, along with an Oracle database, you’ll probably still want to use a tool like ODI to do your data load, as these tools are just so mature when it comes to loading relational data warehouses.
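For anyone who hasn’t had to hand-code one, here’s a simplified Python sketch of the kind of “last mile” logic I mean, in this case a Type 2 slowly-changing dimension load. The column names (`valid_from`, `valid_to`, `is_current`) and the `apply_scd2` function are my own illustrative assumptions, not what ODI or any other tool actually generates, and it leaves out surrogate keys, deletes and late-arriving data.

```python
from datetime import date


def new_version(customer_id, city, load_date):
    """Build an open-ended, current dimension row."""
    return {"customer_id": customer_id, "city": city,
            "valid_from": load_date, "valid_to": None, "is_current": True}


def apply_scd2(dimension, incoming, load_date):
    """Return a new list of dimension rows with Type 2 history applied.

    dimension: existing rows (dicts shaped like new_version() output)
    incoming:  dict of customer_id -> city from the latest source extract
    """
    result, current_keys = [], set()
    for row in dimension:
        row = dict(row)  # copy so the caller's rows are left untouched
        if row["is_current"]:
            current_keys.add(row["customer_id"])
            new_city = incoming.get(row["customer_id"])
            if new_city is not None and new_city != row["city"]:
                # Changed attribute: close the old version, open a new one.
                row["valid_to"] = load_date
                row["is_current"] = False
                result.append(row)
                result.append(new_version(row["customer_id"], new_city, load_date))
                continue
        result.append(row)
    # Keys never seen before simply get their first version.
    for customer_id, city in incoming.items():
        if customer_id not in current_keys:
            result.append(new_version(customer_id, city, load_date))
    return result


dim = [new_version(1, "London", date(2013, 1, 1)),
       new_version(2, "Paris", date(2013, 1, 1))]
loaded = apply_scd2(dim, {1: "Brighton", 2: "Paris", 3: "Oslo"}, date(2014, 1, 6))
for row in loaded:
    print(row)
```

Even this cut-down version has a fair amount of bookkeeping in it, which is why having it available as a pre-built template in the ETL tool is such a time-saver.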

So finally, then, onto what I probably think will be the future of ETL - “invisible ETL”, or ETL so abstracted away that you don’t even think of it as a separate product in itself. If you think about it, ETL is a necessary evil when it comes to BI - customers just want their data loaded, they don’t want to worry about how it’s transported, which data loading tool you use and so on. Oracle have had a number of initiatives over the past few years to automate the creation of ETL code from logical table source mappings in the OBIEE RPD, and one of the major drivers in the BI Apps product roadmap is to reduce the amount of manual work propagating application data model changes through the various layers of ETL and BI tool metadata.

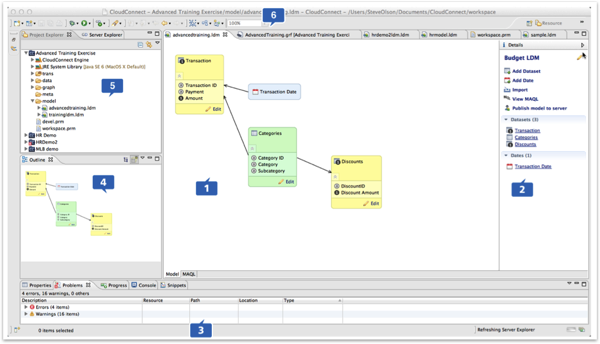

A really nice example though of taking this approach of hiding the complexity of ETL can be found with a company called GoodData, who sell a BI-in-the-cloud product that comes with data storage (via Vertica) and ETL all wrapped up in a consumer-like service that’s focused on analytics and visualisations but supports data loading from a number of database and cloud application sources. The screenshot below from the GoodData CloudConnect LDM Modeler Guide shows GoodData’s logical data model development environment, with this part of the product handling the facts, dimensions, attributes and other user-facing data elements, and a more traditional ETL tool and server (based on CloverETL, no less) doing the actual data heavy-lifting.

GoodData splits its data models into these logical and physical elements, which of course is exactly what ODI does - and what OBIEE does too. In fact, the more you look at GoodData, the more you think that all of the elements are already in place at Oracle to create a very similar product, with the added benefit of access to tools such as GoldenGate, EDQ and SOA Suite. Even SnapLogic’s Snaplex concept is very similar to ODI’s agents, but what they’ve done, and what GoodData, Informatica and others have done, is wrap the products up into a service, make it all consistent (at least on paper), architect it for cloud-only and hybrid on-premise/cloud environments, and build out all of the third-party adapters. It’ll be interesting to see what Oracle’s long-term response to this will be - honestly I’ve no special insight into this part of the product roadmap so I’m just as interested as anyone as to how it will turn out.

So - is any approach better, is Oracle’s approach the worst, are vendors like SnapLogic the future, and what should we use if deploying OBIEE in the cloud? Well to my mind there’s no black-and-white answer, and the choice comes down to a number of factors including:

- Where are your sources and targets; the more that still reside on-premise, the more a traditional on-premise tool such as ODI makes sense

- To what extent are you interacting with non-Oracle targets and sources; the more non-Oracle ones you’re using, the more a non-Oracle tool will probably make sense

- How complex will your end data transformations be - if you’re expecting to do lots of SCD2 transformations, analytic queries, clever DW-stuff, a tool like ODI will make sense

- How much up-front investment do you want to make? Services like Informatica Cloud are far easier to get provisioned on, and involve far lower up-front license costs, than an on-premise install

- Or are you just loading data from a single source into a managed cloud database - if so, one of the vendor-supplied utilities will probably do the job

Anyway - I’d be interested in others’ opinions, and whether I’ve missed anything out. But for now - those were some thoughts from me on running ETL “in the cloud”.