Connecting OBIEE 11.1.1.9 to Hive, HBase and Impala Tables for a DW-Offloading Project

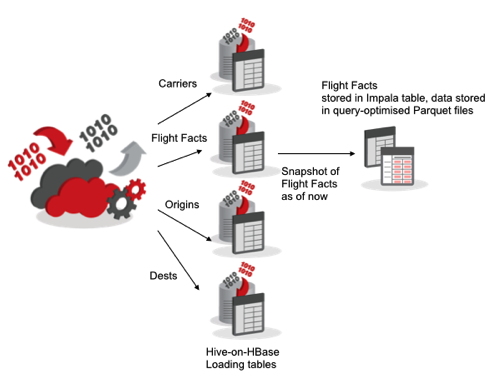

In two previous posts this week I talked about a client request to offload part of their data warehouse to Hadoop, taking data from a source application and loading it into Hive tables on Hadoop for subsequent reporting by OBIEE11g. In the first post I talked about hosting the offloaded data warehouse elements on Cloudera Hadoop CDH5.3. I covered how I used Apache Hive and Apache HBase to support insert/update/delete activity on the fact and dimension tables, and how we'd copy the Hive-on-HBase fact table data into optimised Impala tables stored in Parquet files to make sure reports and dashboards ran fast.

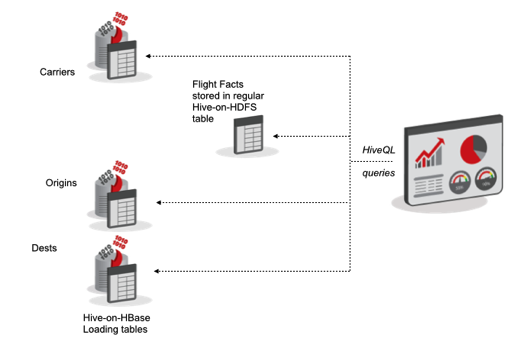

In the second post I went into detail on how we'd keep the Hive-on-HBase tables up to date with new and changed data from the source system. We used HiveQL bulk inserts to load the initial table data, and a Python script to handle subsequent inserts, updates and deletes by working directly with the HBase Client and the HBase Thrift Server. This leaves us with a set of fact and dimension tables stored as optimised Impala tables and updatable Hive-on-HBase tables. The final step is to connect OBIEE11g to them and see how they work for reporting.

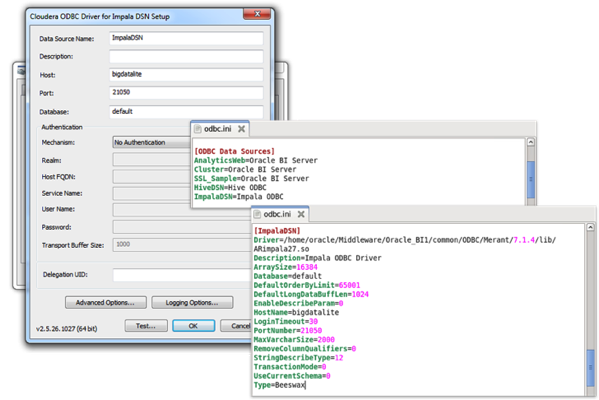

As I mentioned in another post a week or so ago, the new 11.1.1.9 release of OBIEE11g supports Cloudera Impala connections from Linux servers to Hadoop. Oracle ships the Linux Impala drivers as part of the Linux download, and the Windows drivers used on the Admin Tool workstation can be downloaded directly from Cloudera. Once you've got all the drivers and OBIEE software set up, it's just a case of setting up the ODBC connections in the Windows and Linux environments, and you should then be in a position to connect it all up.

On the Impala side, I first need to create a copy of the Hive-on-HBase table that I've been loading the source system's fact data into. Before doing that, I run the invalidate metadata command to refresh Impala's view of the Hive metastore.

```
[oracle@bigdatalite ~]$ impala-shell
[bigdatalite.localdomain:21000] > invalidate metadata;
[bigdatalite.localdomain:21000] > create table impala_flight_delays
                                > stored as parquet
                                > as select * from hbase_flight_delays;
```
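Because the Parquet table is a snapshot, it needs rebuilding whenever the Hive-on-HBase table changes. One way to script that is to generate the statements and feed them to `impala-shell`. The sketch below is a hypothetical Python helper (`snapshot_statements` is my own name, not part of any Cloudera or Oracle tooling). It assumes a simple drop-and-recreate refresh in the `default` database, and it finishes with `compute stats` so the planner has statistics for the new table.

```python
import re
from typing import List

# Table and database names are pasted into SQL text, so only allow plain identifiers.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def snapshot_statements(source: str, target: str, database: str = "default") -> List[str]:
    """Return Impala SQL that rebuilds `target` as a Parquet copy of `source`.

    Hypothetical helper: drop-and-recreate is an assumed refresh strategy.
    """
    for name in (source, target, database):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    src = f"{database}.{source}"
    tgt = f"{database}.{target}"
    return [
        # Refresh Impala's cached metastore entry for the Hive-on-HBase source table.
        f"invalidate metadata {src};",
        # Remove the previous snapshot, if there is one.
        f"drop table if exists {tgt};",
        # Copy the current HBase-backed rows into a Parquet-backed Impala table.
        f"create table {tgt} stored as parquet as select * from {src};",
        # Gather table and column statistics for the Impala planner.
        f"compute stats {tgt};",
    ]


if __name__ == "__main__":
    # Print a script that could be saved to a file and run with `impala-shell -f`.
    print("\n".join(snapshot_statements("hbase_flight_delays", "impala_flight_delays")))
```

Queries against `impala_flight_delays` would fail between the drop and the create. A production job might therefore prefer to load a new table and rename it into place.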

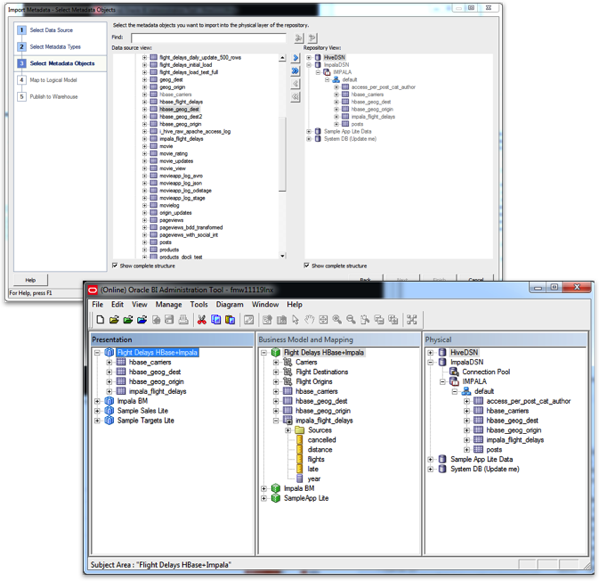

Next I import the Hive-on-HBase tables and the Impala table through the Impala ODBC connection. Only one of the tables (the main fact table snapshot copy) was created using Impala, but I still get the Impala speed benefit for the other three tables created in Hive (against the HBase source, no less). Once the table metadata is imported into the RPD physical layer, I can create a business model and subject area as I normally would, so my final RPD looks like this:

Now it's just a case of saving the repository online and creating some reports. If you're using an older version of Impala, you may need to disable the setting that requires a LIMIT clause on every ORDER BY. See the Impala docs for more details, but recent (CDH5+) versions work fine without this. You'll also need to go back into Impala and compute statistics for each of the tables, like this:

```
[bigdatalite.localdomain:21000] > compute stats default.impala_flight_delays;
Query: compute stats default.impala_flight_delays
+-----------------------------------------+
| summary                                 |
+-----------------------------------------+
| Updated 1 partition(s) and 8 column(s). |
+-----------------------------------------+
Fetched 1 row(s) in 2.73s
[bigdatalite.localdomain:21000] > show table stats impala_flight_delays;
Query: show table stats impala_flight_delays
+---------+--------+---------+--------------+---------+-------------------+
| #Rows   | #Files | Size    | Bytes Cached | Format  | Incremental stats |
+---------+--------+---------+--------------+---------+-------------------+
| 2514141 | 1      | 10.60MB | NOT CACHED   | PARQUET | false             |
+---------+--------+---------+--------------+---------+-------------------+
Fetched 1 row(s) in 0.01s
```
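Impala shows -1 in the `#Rows` column until statistics have been computed. A quick way to spot tables that still need `compute stats` is therefore to look for that value. The sketch below parses the boxed `show table stats` output in the format shown above. The function names are hypothetical, and the sketch assumes an HDFS-backed table such as `impala_flight_delays`. HBase-backed tables may report a different set of columns, in which case `header.index` raises `ValueError`.

```python
from typing import List


def row_counts(show_table_stats_output: str) -> List[int]:
    """Return the #Rows values from impala-shell's boxed SHOW TABLE STATS output."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in show_table_stats_output.splitlines()
        # Keep only table rows; skip +---+ borders, prompts, "Query:" and "Fetched" lines.
        if line.strip().startswith("|")
    ]
    if not rows:
        return []
    header, data = rows[0], rows[1:]
    col = header.index("#Rows")  # raises ValueError if there is no #Rows column
    return [int(row[col]) for row in data]


def stats_missing(show_table_stats_output: str) -> bool:
    """True if nothing could be parsed or any row still reports #Rows = -1."""
    counts = row_counts(show_table_stats_output)
    return not counts or any(n == -1 for n in counts)
```

For scripting, impala-shell's `-B` (delimited output) option gives output that is easier to parse than the boxed tables.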

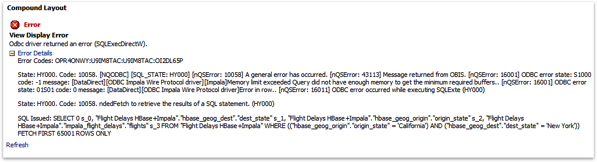

Computing statistics is good practice in general, because it gives the Impala query optimizer better information for building query plans. If you skip this step, you may also hit the error below in OBIEE.

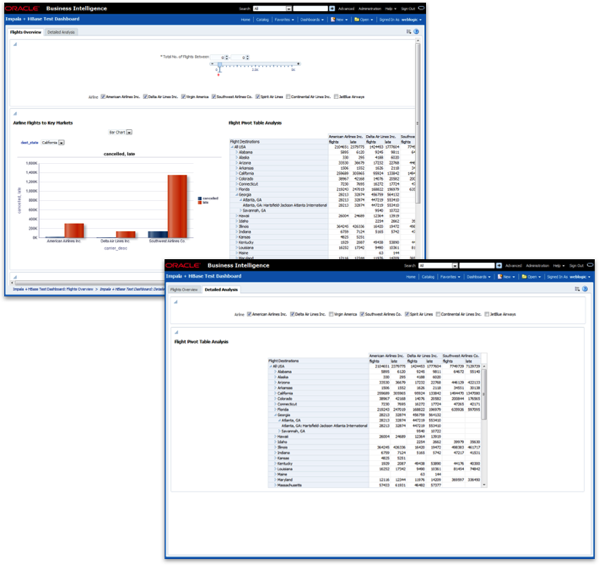

If you do hit this error, go back to the Impala Shell or Hue and compute statistics, and the error should go away the next time you run the analysis. Then, finally, you can create some analyses and dashboards, and you should find that the queries run fine against the various tables in Hadoop. Moreover, the response time is excellent if you use Impala as the main query engine.

I did a fair bit of testing of OBIEE 11.1.1.9 running against Cloudera Impala. I found that all of the main analysis features worked (prompts, hierarchies, totals and subtotals etc.), and response times were comparable with a well-tuned data warehouse, perhaps even approaching Exalytics-level speed. If you look in the nqquery.log file at the SQL that OBIEE sends to Impala, you can see that the queries get fairly complex, which is good, as I didn't hit any errors when running the dashboards. You can also see that the BI Server takes a simpler approach to creating subtotals and nested queries. It uses UNION ALL across separate GROUP BY subqueries rather than the GROUP BY … GROUPING SETS you get when using a full Oracle database:

```sql
select D1.c1 as c1,
       D1.c2 as c2,
       D1.c3 as c3,
       D1.c4 as c4,
       D1.c5 as c5,
       D1.c6 as c6,
       D1.c7 as c7,
       D1.c8 as c8,
       D1.c9 as c9,
       D1.c10 as c10,
       D1.c11 as c11,
       D1.c12 as c12
from
     (select 0 as c1,
             D1.c3 as c2,
             substring(cast(NULL as STRING ), 1, 1 ) as c3,
             substring(cast(NULL as STRING ), 1, 1 ) as c4,
             substring(cast(NULL as STRING ), 1, 1 ) as c5,
             'All USA' as c6,
             substring(cast(NULL as STRING ), 1, 1 ) as c7,
             1 as c8,
             substring(cast(NULL as STRING ), 1, 1 ) as c9,
             substring(cast(NULL as STRING ), 1, 1 ) as c10,
             D1.c2 as c11,
             D1.c1 as c12
      from
           (select sum(T44037.late) as c1,
                   sum(T44037.flights) as c2,
                   T43925.carrier_desc as c3
            from
                 hbase_carriers T43925 inner join
                 impala_flight_delays T44037 On (T43925.key = T44037.carrier)
            where ( T43925.carrier_desc = 'American Airlines Inc.' or T43925.carrier_desc = 'Delta Air Lines Inc.' or T43925.carrier_desc = 'Southwest Airlines Co.' or T43925.carrier_desc = 'Spirit Air Lines' or T43925.carrier_desc = 'Virgin America' )
            group by T43925.carrier_desc
           ) D1
      union all
      select 1 as c1,
             D1.c3 as c2,
             substring(cast(NULL as STRING ), 1, 1 ) as c3,
             substring(cast(NULL as STRING ), 1, 1 ) as c4,
             D1.c4 as c5,
             'All USA' as c6,
             substring(cast(NULL as STRING ), 1, 1 ) as c7,
             1 as c8,
             substring(cast(NULL as STRING ), 1, 1 ) as c9,
             D1.c4 as c10,
             D1.c2 as c11,
             D1.c1 as c12
      from
           (select sum(T44037.late) as c1,
                   sum(T44037.flights) as c2,
                   T43925.carrier_desc as c3,
                   T43928.dest_state as c4
            from
                 hbase_carriers T43925 inner join
                 impala_flight_delays T44037 On (T43925.key = T44037.carrier) inner join
                 hbase_geog_dest T43928 On (T43928.key = T44037.dest)
            where ( T43925.carrier_desc = 'American Airlines Inc.' or T43925.carrier_desc = 'Delta Air Lines Inc.' or T43925.carrier_desc = 'Southwest Airlines Co.' or T43925.carrier_desc = 'Spirit Air Lines' or T43925.carrier_desc = 'Virgin America' )
            group by T43925.carrier_desc, T43928.dest_state
           ) D1
      union all
      select 2 as c1,
             D1.c3 as c2,
             substring(cast(NULL as STRING ), 1, 1 ) as c3,
             D1.c4 as c4,
             D1.c5 as c5,
             'All USA' as c6,
             substring(cast(NULL as STRING ), 1, 1 ) as c7,
             1 as c8,
             D1.c4 as c9,
             D1.c5 as c10,
             D1.c2 as c11,
             D1.c1 as c12
      from
           (select sum(T44037.late) as c1,
                   sum(T44037.flights) as c2,
                   T43925.carrier_desc as c3,
                   T43928.dest_city as c4,
                   T43928.dest_state as c5
            from
                 hbase_carriers T43925 inner join
                 impala_flight_delays T44037 On (T43925.key = T44037.carrier) inner join
                 hbase_geog_dest T43928 On (T43928.key = T44037.dest and T43928.dest_state = 'Georgia')
            where ( T43925.carrier_desc = 'American Airlines Inc.' or T43925.carrier_desc = 'Delta Air Lines Inc.' or T43925.carrier_desc = 'Southwest Airlines Co.' or T43925.carrier_desc = 'Spirit Air Lines' or T43925.carrier_desc = 'Virgin America' )
            group by T43925.carrier_desc, T43928.dest_city, T43928.dest_state
           ) D1
      union all
      select 3 as c1,
             D1.c3 as c2,
             D1.c4 as c3,
             D1.c5 as c4,
             D1.c6 as c5,
             'All USA' as c6,
             D1.c4 as c7,
             1 as c8,
             D1.c5 as c9,
             D1.c6 as c10,
             D1.c2 as c11,
             D1.c1 as c12
      from
           (select sum(T44037.late) as c1,
                   sum(T44037.flights) as c2,
                   T43925.carrier_desc as c3,
                   T43928.dest_airport_name as c4,
                   T43928.dest_city as c5,
                   T43928.dest_state as c6
            from
                 hbase_carriers T43925 inner join
                 impala_flight_delays T44037 On (T43925.key = T44037.carrier) inner join
                 hbase_geog_dest T43928 On (T43928.key = T44037.dest and T43928.dest_city = 'Atlanta, GA')
            where ( T43925.carrier_desc = 'American Airlines Inc.' or T43925.carrier_desc = 'Delta Air Lines Inc.' or T43925.carrier_desc = 'Southwest Airlines Co.' or T43925.carrier_desc = 'Spirit Air Lines' or T43925.carrier_desc = 'Virgin America' )
            group by T43925.carrier_desc, T43928.dest_airport_name, T43928.dest_city, T43928.dest_state
           ) D1
     ) D1
order by c1, c6, c8, c5, c10, c4, c9, c3, c7, c2 limit 65001
```
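To make the UNION ALL pattern in this query easier to follow, here is a small Python illustration of what it computes. Each branch aggregates the same rows at a different grain (carrier; carrier and state; carrier, state and city). It tags the results with a level number, like the `c1` column, and fills the dimension columns that branch doesn't group by with NULL (`None` here). This is my own sketch of emulating grouping sets with a union of group-bys, not how the BI Server is implemented. The real query also applies a different drill filter in each branch (`dest_state = 'Georgia'`, `dest_city = 'Atlanta, GA'`), which this sketch leaves out.

```python
from collections import defaultdict
from typing import Any, Dict, List, Sequence, Tuple


def union_of_group_bys(rows: Sequence[Dict[str, Any]],
                       levels: Sequence[Sequence[str]],
                       measures: Sequence[str]) -> List[Tuple]:
    """Emulate GROUP BY GROUPING SETS by aggregating once per level and concatenating.

    Each output tuple is (level, *dimension values, *measure sums). Dimensions that a
    level does not group by come back as None, mirroring the NULL columns in the SQL.
    """
    # Every dimension used by any level, in first-seen order.
    all_dims = list(dict.fromkeys(d for level in levels for d in level))
    out: List[Tuple] = []
    for level_no, level in enumerate(levels):
        # One "select ... group by" branch: sum each measure per grouping key.
        sums: Dict[Tuple, List[Any]] = defaultdict(lambda: [0] * len(measures))
        for r in rows:
            acc = sums[tuple(r[d] for d in level)]
            for i, m in enumerate(measures):
                acc[i] += r[m]
        # "union all": append this branch's rows, padding unused dimensions with None.
        for key, acc in sums.items():
            values = dict(zip(level, key))
            out.append((level_no, *(values.get(d) for d in all_dims), *acc))
    return out
```

A single GROUPING SETS query lets the database scan the joined rows once for all levels. The union form repeats the join and scan per branch, trading some efficiency for SQL that any engine supporting UNION ALL can run.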

All in all, that's not bad for a data warehouse offloaded entirely to Hadoop. It's good to see such a system handling full updates and deletes as well as insert appends, and good to see OBIEE working against an Impala data source with such good response times. If any of this interests you as a potential customer, feel free to drop me an email at [email protected], or check out our Big Data Quickstart page on the website.