Oracle Big Data Discovery 1.1 Now GA, and Available as Part of BigDataLite 4.2.1

The new Oracle Big Data Discovery 1.1 release went GA a couple of weeks ago, and came with a bunch of features that address show-stoppers in the original 1.0 release: the ability to refresh and reload datasets from Hive, compatibility with the Cloudera CDH and Hortonworks HDP Hadoop platforms, Kerberos integration, and the ability to bring in datasets from remote JDBC data sources. If you’re new to Big Data Discovery, I covered the initial release in a number of blog posts over the past year or so:

- Introducing Oracle Big Data Discovery Part 1: “The Visual Face of Hadoop”

- Introducing Oracle Big Data Discovery Part 2: Data Transformation, Wrangling and Exploration

- Introducing Oracle Big Data Discovery Part 3: Data Exploration and Visualization

- Combining Oracle Big Data Discovery and Oracle Visual Analyzer on BICS

- Some Oracle Big Data Discovery Tips and Techniques

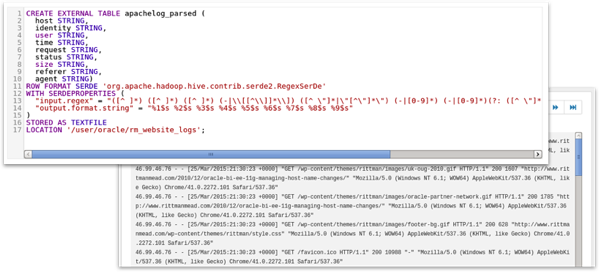

So let’s start by loading some data into Big Data Discovery so that we can explore what’s in it, see the range of attributes and their values, and do some basic data clean-up and enrichment. As with Big Data Discovery 1.0, you import (or “sample”) data into Big Data Discovery’s DGraph engine either via file upload or by using a command-line utility. Data in Hadoop has to be registered in the Hive HCatalog metadata layer, and I’ll start by importing a Hive table mapped to some webserver log files via a Hive SerDe.
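The table definition itself isn’t shown here, but a Hive table over raw webserver logs is typically declared with a regex-based SerDe, where each capture group in the regex becomes one column. As a rough sketch of that idea, the Python below splits an Apache combined-log-format line into columns the same way; the regex and the column names are assumptions for illustration, not the actual definition of `apachelog_parsed`.

```python
import re

# Hypothetical column list; the real apachelog_parsed table may differ.
COLUMNS = ["host", "identity", "user", "time", "request",
           "status", "size", "referer", "agent"]

# One capture group per column, in the style of a regex SerDe
# pattern for the Apache combined log format.
LOG_PATTERN = re.compile(
    r'^(\S+) (\S+) (\S+) \[([^\]]+)\] "([^"]*)" (\d{3}) (\S+) "([^"]*)" "([^"]*)"$'
)


def parse_line(line):
    """Return a dict of column -> string value, or None if the line doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    return dict(zip(COLUMNS, match.groups()))


if __name__ == "__main__":
    sample = ('192.0.2.10 - - [04/Sep/2015:14:20:43 -0400] '
              '"GET /blog/index.html HTTP/1.1" 200 5120 '
              '"http://www.example.com/" "Mozilla/5.0"')
    print(parse_line(sample))
```

Like a SerDe, this leaves every value as a string; typing and clean-up (such as turning `time` into a real timestamp) can happen later, which is what the BDD transformation step does.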

To import or sample this table into BDD’s DGraph engine, I use the following command to invoke the Big Data Discovery Data Processing engine, which reads the Hive table metadata, loads the Hive table data into the DGraph engine (either all rows or a representative sample), and processes and enriches the data, for example by adding geocoding:

```
[oracle@bigdatalite edp_cli]$ ./data_processing_CLI -t apachelog_parsed
```

This then runs as an Apache Spark job under YARN, whose progress you can track either from the console or through Cloudera Manager or Hue. Here’s the console output as the job is submitted:

```
[2015-09-04T14:20:43.792-04:00] [DataProcessing] [INFO] [] [org.apache.spark.Logging$class] [tid:main] [userID:oracle]
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: root.oracle
     start time: 1441390841404
     final status: UNDEFINED
     tracking URL: http://bigdatalite.localdomain:8088/proxy/application_1441385366282_0001/
     user: oracle
[2015-09-04T14:20:45.794-04:00] [DataProcessing] [INFO] [] [org.apache.spark.Logging$class] [tid:main] [userID:oracle] Application report for application_1441385366282_0001 (state: ACCEPTED)
```

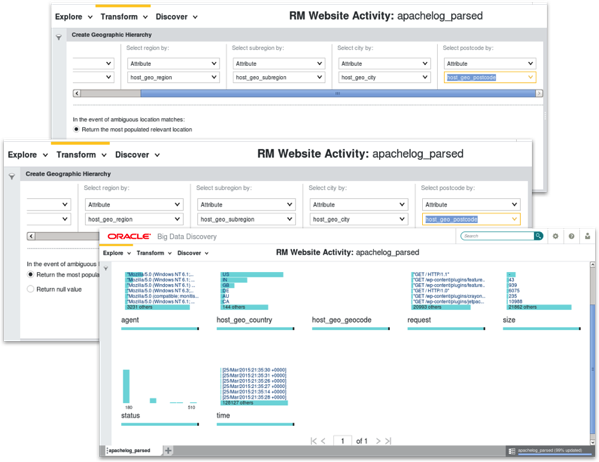
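If you’d rather script the monitoring than watch the console or Hue, one option is to pull the YARN application ID out of the console log and then ask the YARN ResourceManager for that application’s state through its REST API (`/ws/v1/cluster/apps/{appid}`). The sketch below assumes the ResourceManager is on `bigdatalite.localdomain:8088`, as the tracking URL above suggests, and the log regex is based only on the single “Application report” line shown; treat both as assumptions.

```python
import json
import re
import urllib.request

# Matches lines such as:
# "... Application report for application_1441385366282_0001 (state: ACCEPTED)"
APP_REPORT = re.compile(r"Application report for (application_\d+_\d+) \(state: (\w+)\)")


def latest_state(log_text):
    """Return (application_id, state) from the last application report line, or None."""
    matches = APP_REPORT.findall(log_text)
    return matches[-1] if matches else None


def rm_app_url(rm_address, app_id):
    """Build the ResourceManager REST URL for a single YARN application."""
    return f"http://{rm_address}/ws/v1/cluster/apps/{app_id}"


def fetch_app_state(rm_address, app_id, timeout=10):
    """Ask the ResourceManager for (state, finalStatus); needs network access to the RM."""
    with urllib.request.urlopen(rm_app_url(rm_address, app_id), timeout=timeout) as resp:
        app = json.load(resp)["app"]
    return app["state"], app["finalStatus"]


if __name__ == "__main__":
    log = ("[2015-09-04T14:20:45.794-04:00] [DataProcessing] [INFO] [] "
           "[org.apache.spark.Logging$class] [tid:main] [userID:oracle] "
           "Application report for application_1441385366282_0001 (state: ACCEPTED)")
    app_id, state = latest_state(log)
    print(app_id, state)
    print(rm_app_url("bigdatalite.localdomain:8088", app_id))
```

Polling `fetch_app_state` until the state reaches `FINISHED`, `FAILED` or `KILLED` would give a simple wait-for-completion step in a scheduled script.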

Going over to Big Data Discovery Studio, I can see the dataset within the catalog, load it into a project and start exploring and transforming it. In the screenshots below I’m cleaning up the date and time field to turn it into a timestamp, and arranging the automatically-derived country, city, region and state attributes into a geography hierarchy. BDD 1.1 comes with a bunch of other transformation enhancements, including new options for enrichment, the ability to tag values via a whitelist and so on; a full list of new features for BDD 1.1 can be found in My Oracle Support (MOS) Doc ID 2044712.1.

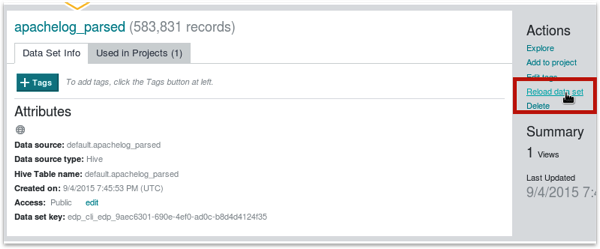
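In BDD these steps are done interactively in Studio’s transformation editor, but the underlying logic is simple enough to sketch outside the tool. The Python below is an illustration only, not BDD’s own transform code: it parses an Apache-style date/time string into a timezone-aware timestamp and rolls rows up into a country, region, city hierarchy. The input format and the attribute names `country`, `region` and `city` are assumptions.

```python
from collections import defaultdict
from datetime import datetime


def to_timestamp(apache_time):
    """Parse an Apache log time such as '04/Sep/2015:14:20:43 -0400'
    into a timezone-aware datetime (%b expects English month names)."""
    return datetime.strptime(apache_time, "%d/%b/%Y:%H:%M:%S %z")


def build_geo_hierarchy(rows):
    """Nest rows as country -> region -> city -> number of rows."""
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(int)))
    for row in rows:
        tree[row["country"]][row["region"]][row["city"]] += 1
    return tree


if __name__ == "__main__":
    print(to_timestamp("04/Sep/2015:14:20:43 -0400").isoformat())
    rows = [
        {"country": "US", "region": "GA", "city": "Atlanta"},
        {"country": "US", "region": "GA", "city": "Atlanta"},
        {"country": "GB", "region": "England", "city": "Brighton"},
    ]
    tree = build_geo_hierarchy(rows)
    print(tree["US"]["GA"]["Atlanta"])
```

The point of the hierarchy is drill-down: each level groups the one below it, so a visualisation can start at country and expand to region and city.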

Crucially, in BDD 1.1 you can now either refresh a dataset with new data from Hive (a full reload), or do an incremental update once you’ve selected an attribute as the update (identity) column. In the screenshot below I’m doing this for a dataset uploaded from a file, but you can also reload and update datasets from the command line, which opens up the possibility of scripting, scheduling and so on.

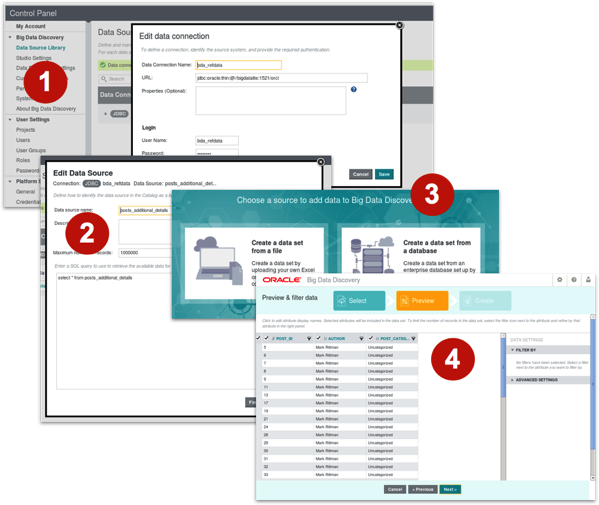
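The difference between the two refresh modes can be pictured with a small conceptual sketch. This is not how BDD implements them internally; it simply assumes a full reload replaces the dataset wholesale, while an incremental update behaves like an upsert keyed on the identity column. How BDD treats rows missing from an update batch isn’t covered here.

```python
def full_reload(new_rows):
    """Full reload: existing rows are discarded and replaced by the new rows."""
    return list(new_rows)


def incremental_update(current, new_rows, key):
    """Incremental update as an upsert on the identity column `key`:
    a new row with an existing identity value replaces the old row in place;
    a new row with an unseen identity value is appended."""
    merged = {row[key]: row for row in current}
    for row in new_rows:
        merged[row[key]] = row
    return list(merged.values())


if __name__ == "__main__":
    current = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
    new = [{"id": 2, "v": "B"}, {"id": 3, "v": "c"}]
    print(incremental_update(current, new, "id"))
    print(full_reload(new))
```

This is also why the identity column matters: it has to uniquely identify a record, or later rows silently overwrite earlier ones.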

You can also define JDBC data connections in the administration part of BDD Studio, and then type in SQL queries to define data sources that can be added into your project as datasets, loading their data directly into the DGraph engine rather than having to stage it in Hadoop beforehand.

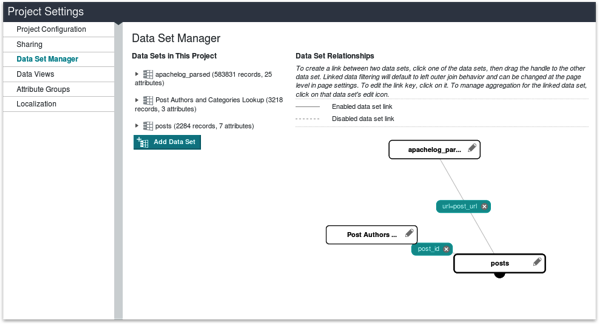
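Conceptually, a query-defined data source is just the result set of a SQL statement, column names and all. The sketch below shows that shape using Python’s DB-API with an in-memory SQLite database standing in for the remote JDBC source; in BDD the connection and query are configured in Studio instead. The `wp_posts` table is loosely modelled on WordPress’s posts table, and its rows are made-up sample data.

```python
import sqlite3


def rows_from_query(conn, sql):
    """Run a SQL query and return each result row as a dict keyed by column name."""
    cur = conn.execute(sql)
    columns = [desc[0] for desc in cur.description]
    return [dict(zip(columns, values)) for values in cur.fetchall()]


def demo_connection():
    """Build an in-memory SQLite database standing in for the remote JDBC source."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE wp_posts "
        "(id INTEGER PRIMARY KEY, post_title TEXT, post_name TEXT, post_type TEXT)"
    )
    conn.executemany(
        "INSERT INTO wp_posts VALUES (?, ?, ?, ?)",
        [(1, "BDD 1.1 now GA", "bdd-1-1-ga", "post"),
         (2, "About", "about", "page")],
    )
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    sql = "SELECT post_name, post_title FROM wp_posts WHERE post_type = 'post' ORDER BY id"
    for row in rows_from_query(conn, sql):
        print(row)
```

Filtering and column selection happen in the query, so only the rows and attributes you need end up in the dataset.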

Then, as with the initial release of Big Data Discovery, you can define joins between the datasets in a project based on common attributes. In this case I’m joining the page URLs in the webserver logs with the page and article data extracted from our WordPress install, sourced from both Hive and Oracle (via JDBC).
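As a rough picture of what such a join does, here is a minimal sketch that joins log records to article records on a shared page-URL attribute. A left outer join is assumed (every log row is kept; article attributes are added where a URL matches), and the attribute names `page_url`, `status` and `title` are hypothetical.

```python
def join_on(left, right, key):
    """Left outer join of two lists of dicts on a shared attribute.

    Assumes `key` is unique in `right` (one article per URL). Left rows with
    no match are returned unchanged, without the right-hand attributes."""
    index = {row[key]: row for row in right}
    return [{**row, **index.get(row[key], {})} for row in left]


if __name__ == "__main__":
    logs = [
        {"page_url": "/bdd-1-1-ga/", "status": "200"},
        {"page_url": "/missing/", "status": "404"},
    ]
    articles = [{"page_url": "/bdd-1-1-ga/", "title": "BDD 1.1 now GA"}]
    for row in join_on(logs, articles, "page_url"):
        print(row)
```

Building a dictionary index over the smaller dataset keeps the join a single pass over the log rows, which matters when the log side is the large one.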

Other new features in BDD 1.1 include the ability to define “applications” (projects and datasets that are considered “production quality” and include details on how to refresh and incrementally load their datasets, presumably designed to ease migrations from Endeca Information Discovery), along with a number of other new features around usability, data exploration and security. You can download BDD 1.1 from Oracle’s eDelivery site, or get it pre-installed and configured as part of the new Big Data Lite 4.2.1 VirtualBox virtual machine.