Oracle Endeca Week : What is the Endeca MDEX Engine?

Yesterday, I kicked-off our Endeca week with an overview of Endeca's product line prior to the recent acquisition by Oracle, looking at Endeca's two main products: Endeca Infront, which powers the "faceted" search feature behind many e-commerce sites, and Endeca Latitude, an "agile" BI tool designed to create web-based applications against the Endeca MDEX engine, which also powers Infront. As a recap, here's the postings so far, and the ones coming up:

- Day 1 : So Just What is Endeca?

- Day 2 : What is the Endeca MDEX Engine?

- Day 3 : Where Does Endeca Fit with Oracle BI and DW?

- Day 4 : How Do You Develop Endeca Latitude Applications?

So what is MDEX, and how does it compare to Oracle products such as Oracle Database and Oracle Essbase?

First of all, it's worth understanding the design goals behind MDEX compared to, say, an Essbase cube or an Oracle relational database. Oracle databases are designed to store lots of detail-level data in the most space-efficient way possible, and with fast retrieval times for individual rows of data (a generalization, I know...); Essbase cubes are designed to pre-compute and aggregate lots of detail-level data and then provide slices of it quickly, making strong assumptions about the query paths that users will take. MDEX though was designed to support Endeca's "search and discovery" uses cases, where the user can search and filter arbitrarily, and get fast aggregated views returned back to them. As such, Endeca position MDEX as a hybrid search/analytical database designed for analysis of diverse, and fast-changing, data.

To make this possible, the underlying data structure is made up of data records (analogous to facts) made up of attributes that contain arbitrary sets of key/value pairs. These attributes can contain hierarchical data (i.e. XML element hierarchies) which gives end-users the ability to drill-into record sets using applications built using Endeca Studio. The main approach with MDEX is to try and take an agile approach and do away with as much of the data modelling process as possible, with the MDEX database being mostly schema-less and designed to derive its structure from the actual data that is loaded; the design-goal then was to make MDEX as much "load and go" as possible, to support Endeca's focus on data discovery vs. the traditional analysis that you perform with tools such as Oracle BI or Essbase.

Under the covers, MDEX started-off as entirely in-memory, with this evolving over time to use a column-store on disk coupled with an in-memory cache, to make the product more scaleable. The in-memory cache aims to keep available a working set of data required for user queries, in order to support sub-second response times, with the cache itself containing sections of the data columns in active use as well as re-usable intermediate query results. The actual MDEX data is then persisted in a column-based database on disk, and mapped I/O is used to treat additional disk as RAM to extend the capacity of the cache element. All-in-all, most MDEX databases come in under under a terabyte in size, with numbers of data records in the low hundreds of millions, with the main limitation on size being the amount of RAM you can provide for the cache; MDEX is designed provide as much query data as possible from the cache, but with limitations in RAM size even on large servers, in the end too-large a data set stored in the disk-based column-store database is going to lead to slower response times for queries, which really need to return data in under a second for the type of search/discovery applications Endeca supports.

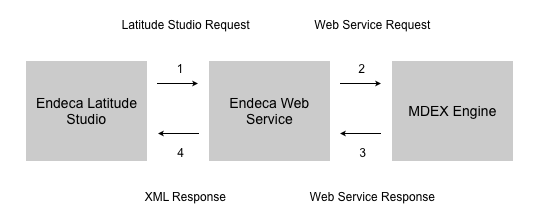

Once you've loaded data into MDEX, the data and metadata within it are then accessed via web service calls typically from Endeca Studio components. As such, there's no ODBC, JDBC or XML/A interface into MDEX, with communication between client and MDEX instead following the pattern below:

MDEX then provides sets of records out to the calling Endeca Studio application, which can contain dimensional drill structures based on the hierarchies contained with each record's attributes. This is not a formal dimensional model as you'd find with tools such as Essbase or Oracle BI Server, but it allows the user to explore and drill-into the dataset using Endeca's "faceted" search feature, with the MDEX engine being optimized for the support of very ad-hoc, search-based filtering. This is an interesting contrast with the use-cases supported by Essbase and the Oracle Database; Essbase is designed to pre-aggregate data, whereas MDEX assumes arbitrary filtering which makes pre-computing aggregates very difficult. In a way MDEX is more like a relational database, but aims to provide fast response times by caching the main working dataset and then storing the rest of the data in a compressed, column-store format, making un-cached queries also fast, something essential for data discovery-type applications where you can't make assumptions about what the user is going to query.

So, in summary, MDEX can be characterized in the following way:

- It's designed to be metadata and schema-light, with data stored in records made up of values and key/value pairs, which can contain hierarchies, giving us a form of dimensional model

- Storage is a combination of an in-memory cache coupled with a disk-based column-store database

- It's designed as "load and go", with little up-front data modeling and a design optimized for agile data discovery across disparate and jagged data sources

- Access is via web service calls, primarily from web-based applications written using Endeca Studio

- MDEX datasets are typically sub-terabyte, with the main limitation being on how much RAM cache you can provide

All interesting stuff, I'm sure you'll agree. For some additional background reading, here's some useful resources on the MDEX engine:

- Endeca's MDEX Technology (data sheet)

- In-Memory But Not Memory-Bound (Agile Analytics blog)

- How similar are faceted search and OLAP? See CIO Mag: 20 to Watch in 2010 (Agile Analytics blog)

- Vertical Stores for Vertical Web Search (Agile Analytics blog)

- Curt Monash on Endeca's product and MDEX technology

So where does this leave MDEX, and the rest of the Endeca product line, in relation to Oracle's current product set? Check back tomorrow for our thoughts on this.