OBIEE, ODI and Hadoop Part 2: Connecting OBIEE 11.1.1.7 to Hadoop Data Sources

In yesterday's post I looked at the key enabling technologies behind OBIEE and ODI's connectivity to Hadoop, and today I'll look at how OBIEE 11.1.1.7 can now access Hadoop data sources through two related technologies; Hive, and MapReduce.

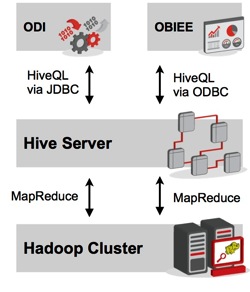

In my introduction to the topic I said that whilst writing MapReduce routines in Java, and then orchestrating them through other tools in the Apache Hadoop family could be quite complex technically, another tool called "Hive" provided an SQL-like query layer over Hadoop and MapReduce so that tools like OBIEE could access them. Rather than you having to write your own MapReduce routines in Java, for instance, Hive writes them for you, returning data to OBIEE and ODI via ODBC and JDBC drivers. The diagram below, also from yesterday's post, shows the data layers used in such an arrangement.

Under the covers, Hive has its own metadata layer, server engine and data store, with developers "loading" data into Hive "tables" which are then generally stored on the HDFS file system, just like any other data processed through MapReduce. Then, when a query is issued through Hive, the Hive Server dynamically generates MapReduce routines to query the underlying data, returning data to users in a similar way to an interactive database SQL session, like this:

markmacbookpro:~ markrittman$ ssh oracle@bigdatalite

oracle@bigdatalite's password:

Last login: Wed Apr 17 04:02:59 2013 from 192.168.2.200

=====================================================

=====================================================

Welcome to BigDataLite

run startx at the command line for X-Windows console

=====================================================

=====================================================

Host: bigdatalite.us.oracle.com [192.168.2.35]

[oracle@bigdatalite ~]$ hive

Hive history file=/tmp/oracle/hive_job_log_oracle_201304170403_1991392312.txt

hive> show tables;

OK

dwh_customer

dwh_customer_tmp

i_dwh_customer

ratings

src_customer

src_sales_person

weblog

weblog_preprocessed

weblog_sessionized

Time taken: 2.925 seconds

hive> select count(*) from src_customer;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_201303171815_0003, Tracking URL = http://localhost.localdomain:50030/jobdetails.jsp?jobid=job_201303171815_0003

Kill Command = /usr/lib/hadoop-0.20/bin/hadoop job -Dmapred.job.tracker=localhost.localdomain:8021 -kill job_201303171815_0003

2013-04-17 04:06:59,867 Stage-1 map = 0%, reduce = 0%

2013-04-17 04:07:03,926 Stage-1 map = 100%, reduce = 0%

2013-04-17 04:07:14,040 Stage-1 map = 100%, reduce = 33%

2013-04-17 04:07:15,049 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201303171815_0003

OK

25

Time taken: 22.21 seconds

hive>

In the example above, I connected to the Hive environment, listed out the "tables" available to me, and then ran a count of "rows" in the src_customers table which in the background, caused a MapReduce routine to be written and executed in the background by the Hive server. Hive has been described as the "Hadoop Data Warehouse", but it's not really a data warehouse as you and I would know it - you wouldn't typically use Hadoop and Hive to store customer transaction data, for example, but you might use it as a store of Facebook interactions, for example, or most popular pages or interaction paths through your website, and someone working in Web Analytics might want to interactively query that set of data in a more user-friendly manner than writing their own Java routines. So how does OBIEE gain access to this data, and what extra software or configuration pieces do you need to put in-place to make it happen?

If you want to have OBIEE 11g access Hadoop data, you're best going with the 11.1.1.7+ release as this is where it's most tested and stable. You'll need to configure drivers at two points; firstly at the server level (Hadoop access is only supported with Linux server installations of OBIEE 11.1.1.7) and then at the Windows-based Administration tool level. Let's start with the BI Administration tool first, based on the instructions in the 11.1.1.7 Metadata Repository Builder's Guide.

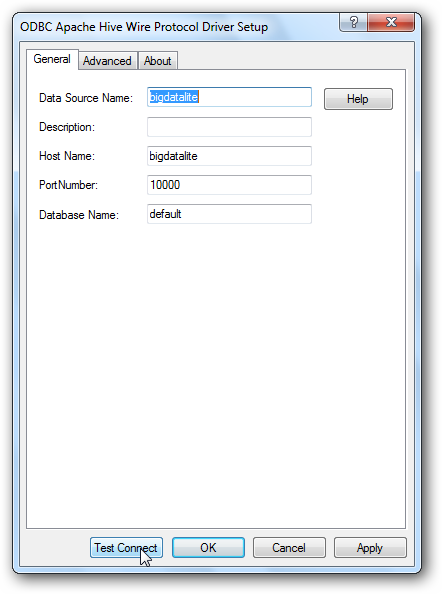

To have the BI Administration tool connect to a Hadoop/Hive data source, you'll need to download some ODBC drivers for Hadoop via a My Oracle Support download, DocID 1520733.1. This gives you a set of HiveODBC drivers along with a PDF explaining the installation process, and once you've installed the drivers, you'll need to open up the ODBC Data Source Administrator applet and create a new HiveODBC data source. In this instance, I call the datasource "bihdatalite" after the server name, and go with the default values for the other settings. Note that "default" is the name of the "database" within Hive, and the port number is the port that the Hive server is running on.

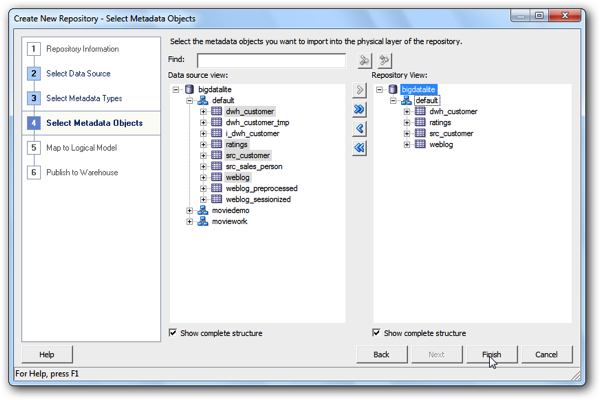

Now I can create a new repository offline, and connect to the Hive server via the HiveODBC connection to start importing table metadata into the RPD. Note that with the current implementation of this connectivity, whilst you can import tables from multiple Hive databases into the RPD, queries you issue can't span more than a single Hive database (i.e. you can't specify a schema name prefix for the table name, therefore can't join across two schemas).

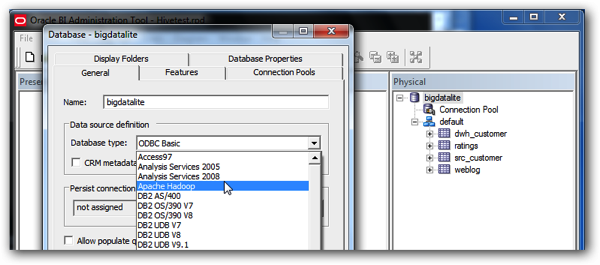

Then, once you've imported the Hive table metadata into the RPD, change the physical database type to "Apache Hadoop", from the default ODBC 3.5 setting that would have been added automatically by the metadata import process. Leave the connection pool call interface at ODBC2.0, put in any old username and password into the shared login details (or a valid username/password if Hive security is enabled), and then save the repository.

Then, once you've imported the Hive table metadata into the RPD, change the physical database type to "Apache Hadoop", from the default ODBC 3.5 setting that would have been added automatically by the metadata import process. Leave the connection pool call interface at ODBC2.0, put in any old username and password into the shared login details (or a valid username/password if Hive security is enabled), and then save the repository.

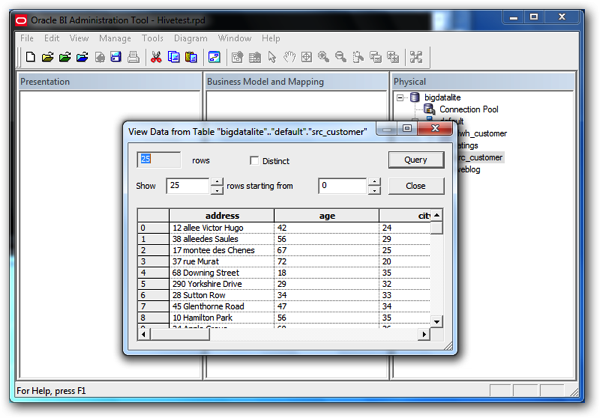

You should then be able to use the View Data feature in the BI Administration tool to view data in a particular Hive table, like this:

Now you need to move over to the server part of OBIEE, and configure the ODBC connection to Hive there too. OBIEE 11.1.1.7 comes with DataDirect drivers already installed that will connect to Hive, so it's just a case then of configuring a connection of the same name to the Hive datasource using OBIEE's odbi.ini file, like this:

[ODBC Data Sources]

AnalyticsWeb=Oracle BI Server

Cluster=Oracle BI Server

SSL_Sample=Oracle BI Server

bigdatalite=Oracle 7.1 Apache Hive Wire Protocol

[bigdatalite]

Driver=/u01/app/Middleware/Oracle_BI1/common/ODBC/Merant/7.0.1/lib/ARhive27.so

Description=Oracle 7.1 Apache Hive Wire Protocol

ArraySize=16384

Database=default

DefaultLongDataBuffLen=1024

EnableLongDataBuffLen=1024

EnableDescribeParam=0

Hostname=bigdatalite

LoginTimeout=30

MaxVarcharSize=2000

PortNumber=10000

RemoveColumnQualifiers=0

StringDescribeType=12

TransactionMode=0

UseCurrentSchema=0

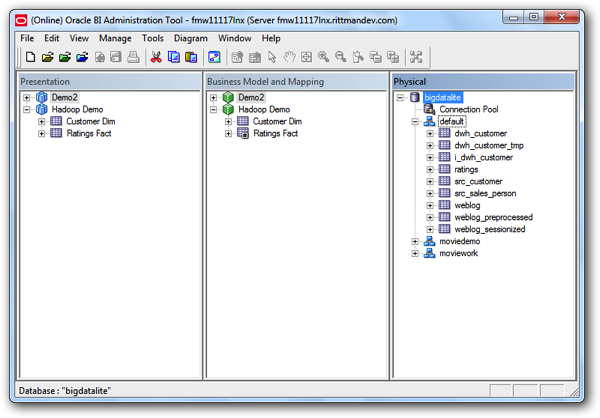

Note that you also need to configure OBIEE's OPMN feature to use the DataDirect 7.1 drivers rather than the default, older ones - see the docs for full details on this step. Then, as far as the RPD is concerned, you just need to make a business model out of the Hive table sources, upload it using EM so that its running online on your OBIEE server installation, and your RPD in the end should look similar to this:

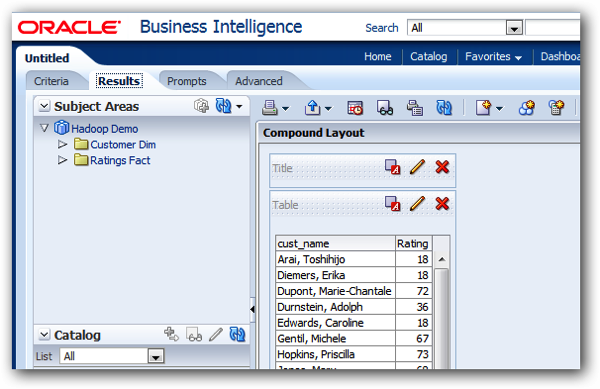

Then finally, you can create an OBIEE analysis using this data, and analyse it just like any other data source - except, of course, that there's quite a lot of lag and latency at the start of the query, as Hive spins up its Java environment, writes the MapReduce query, and then send the data back to OBIEE's BI Server.

So how do we get data into Hive in the first place, to create these tables that in the background, are access through Hadoop and MapReduce? Check back tomorrow, when I'll look at how Oracle Data Integrator can be used to load data into Hive, as well as perform other data integration tasks using Hadoop and other big data technologies.