OBIEE, ODI and Hadoop Part 3: A Closer Look at Hive, HDFS and Cloudera CDH3

In the first two parts of this series, I looked at the recently-added support for Apache Hadoop as a data source for OBIEE 11.1.1.7 and ODI 11.1.1.6, and explained how this Hadoop support is really enabled through a related technology called Hive. In the second part I showed how OBIEE 11.1.1.7 can report against "big data" sources using Hadoop and Hive, but all of this of course presupposes that we have data in Hive in the first place. So what actually is Hive, how do you load data into it, and can ODI help with this process?

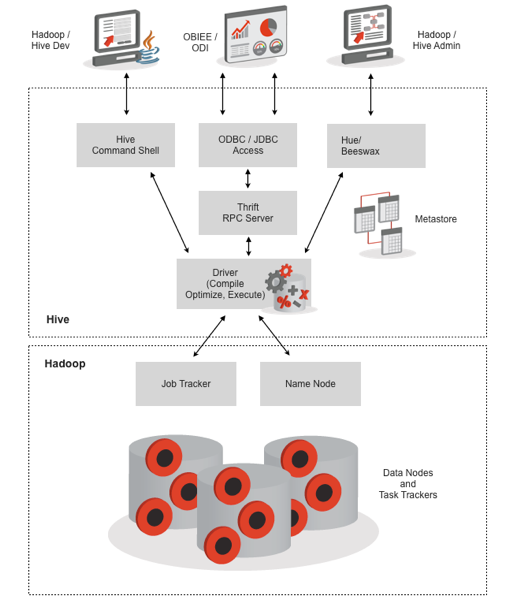

To take a few steps back, Apache Hive is a Hadoop-family project that provides a "data warehouse" layer over Hadoop, through a metadata layer not unlike OBIEE's RPD together with a SQL-like language called HiveQL. Coupled with ODBC and JDBC database drivers, BI tools like OBIEE use Hive to get access to big data sources, as the HiveQL language that Hive uses is very similar to the SQL used to access databases such as Oracle, SQL Server or MySQL. Delving a bit deeper into the Hive product architecture, as shown in the diagram below, Hive has a number of components including a "database engine", a metadata store, APIs for client access, and a link through to Hadoop to actually load, process and retrieve data in HDFS (Hadoop Distributed File System).

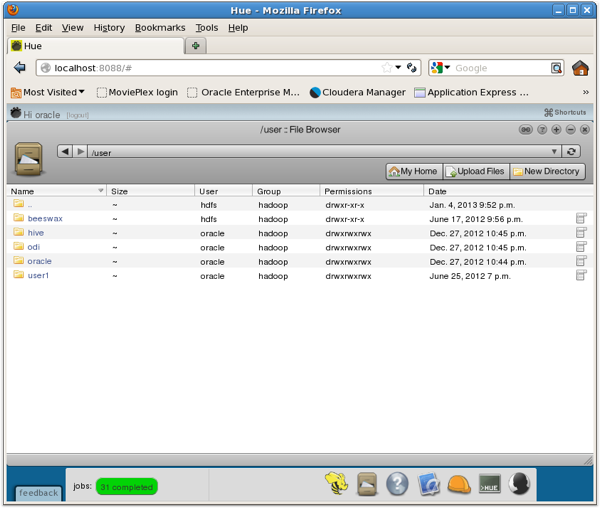

So what's HDFS then? HDFS is a fault-tolerant, distributed filesystem that's a core part of Apache Hadoop, and stores the data that MapReduce jobs then process via job trackers, task trackers and all the other Hadoop paraphernalia. HDFS is accessed through a URI (URL) rather than through your Linux filesystem browser, but distributions such as Cloudera's CDH3 and CDH4 ship with tools such as Hue, shown below, that provide a web-based interface into HDFS so that you can browse HDFS like a regular OS-level filesystem.

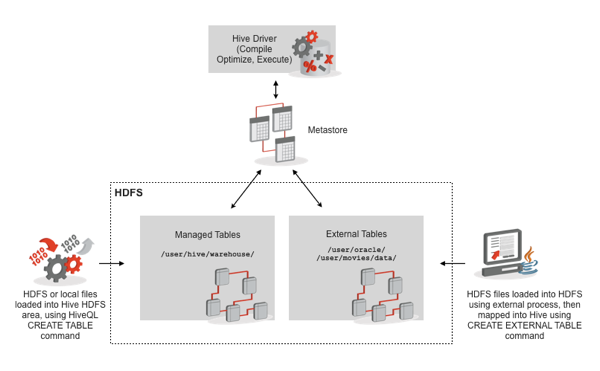
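
To make the URI-based addressing a bit more concrete, here's a minimal Python sketch that splits an HDFS location into its parts. The URI is the one that appears in the Hive LOAD DATA output later in this article; the helper name `split_hdfs_uri` is my own invention, and the code only parses the string rather than talking to a real cluster.

```python
from urllib.parse import urlparse

def split_hdfs_uri(uri):
    """Split an HDFS URI into (scheme, namenode host, path)."""
    parts = urlparse(uri)
    if parts.scheme != "hdfs":
        raise ValueError("not an HDFS URI: " + uri)
    return parts.scheme, parts.netloc, parts.path

# URI taken from the Hive output shown later in the article.
scheme, namenode, path = split_hdfs_uri(
    "hdfs://localhost.localdomain/user/hive/warehouse/movie_ratings"
)
print(scheme, namenode, path)
```

The path portion is what you'd see when browsing the same location through Hue.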

Notice how there's a "user" folder like we'd get with Linux, and within that folder there's a home folder for Hive? Generally, the data you manage using Hive is loaded into a directory structure under the "hive" user, using data taken either from another directory area in HDFS or from external files. Hive's data is still in file form and accessed via MapReduce and Hadoop, but it's in a directory area away from everything else. You can, however, tell Hive to create tables using data held elsewhere in HDFS, analogous to Oracle's external tables feature, which then skips the data loading process and just maps table structures onto files held elsewhere in the Hadoop filesystem.
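
As a rough sketch of the difference between the two table types, the Python below generates the HiveQL DDL for a managed table and for an external one. The function name `create_table_ddl`, the table name `ratings_ext` and the `/user/oracle/movielens_src` location are assumptions made for illustration, and tying the EXTERNAL keyword to the presence of a location is a simplification of mine, not a Hive rule.

```python
def create_table_ddl(table, columns, location=None, delimiter="\\t"):
    """Build HiveQL DDL; in this sketch, passing a location makes the table EXTERNAL."""
    cols = ", ".join(f"{name} {hive_type}" for name, hive_type in columns)
    keyword = "CREATE EXTERNAL TABLE" if location else "CREATE TABLE"
    ddl = (f"{keyword} {table} ({cols}) "
           f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}'")
    if location:
        # External tables map onto files that stay where they are in HDFS.
        ddl += f" LOCATION '{location}'"
    return ddl

columns = [("user_id", "string"), ("movie_id", "string"),
           ("rating", "float"), ("tmstmp", "string")]

# Managed table: Hive stores the data under its own warehouse directory.
print(create_table_ddl("movie_ratings", columns))

# External table: hypothetical name and HDFS directory, for illustration only.
print(create_table_ddl("ratings_ext", columns,
                       location="/user/oracle/movielens_src"))
```

Dropping a managed table removes its data along with the metadata, whereas dropping an external table leaves the underlying files in place, which is worth bearing in mind when choosing between the two.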

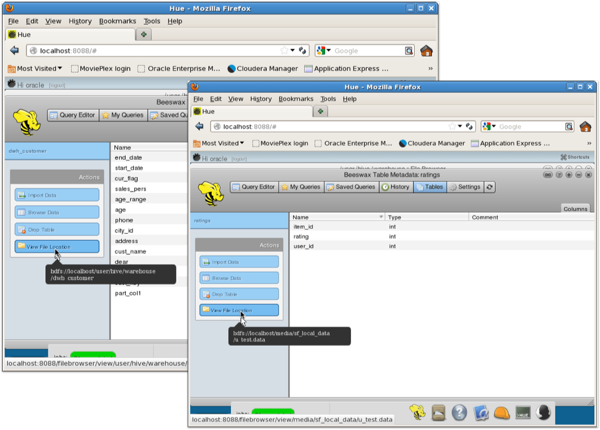

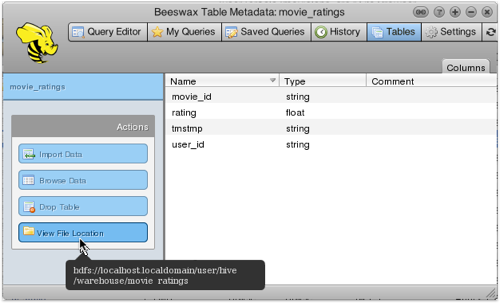

In most cases when we're considering OBIEE accessing Hadoop data via Hive, the data would have been loaded into Hive-managed tables beforehand, though it's possible that Hive table metadata could have been mapped onto other data in HDFS. In your own Hive implementation, assuming you've got Hue installed along with Beeswax, a table browser for Hive that usually comes with Hue, you can see where each individual table within your Hive metastore is actually held. In the two screenshots above, the dwh_customer Hive table is a managed table and has its data stored within the /user/hive/warehouse/ HDFS directory, whilst the ratings table has its data stored outside of Hive's directory structure, but still within the HDFS managed filesystem.

So how does one create a Hive table, load data into it and get it ready for OBIEE access, and can ODI help with this, as we asked earlier? Before we get into ODI then, let's take a look at how a Hive table is created and loaded, and then we'll see how ODI does the same job.

With thanks to the ODI product development team's David Allan, who put together some great Hive and ODI examples in this blog post, let's start by creating a Hive table against the same movie ratings data shown in the right-hand screenshot above, but this time with the data actually loaded into Hive's directory structure (i.e. a "managed" table). From the Hive command shell, I type in the following commands to create the managed table, after SSH'ing into the VM running Hive:

officeimac:~ markrittman$ ssh oracle@bigdatalite

Warning: Permanently added the RSA host key for IP address '192.168.2.35' to the list of known hosts.

oracle@bigdatalite's password:

Last login: Mon Apr 22 10:59:07 2013 from 192.168.2.47

=====================================================

=====================================================

Welcome to BigDataLite

run startx at the command line for X-Windows console

=====================================================

=====================================================

Host: bigdatalite.us.oracle.com [192.168.2.35]

[oracle@bigdatalite ~]$ hive

Hive history file=/tmp/oracle/hive_job_log_oracle_201304250732_1523047910.txt

hive> create table movie_ratings (user_id string

, movie_id string

, rating float

, tmstmp string)

row format delimited fields terminated by '\t';

OK

Time taken: 3.809 seconds

hive>
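
The "row format delimited fields terminated by '\t'" clause tells Hive how to carve each line of the underlying file into columns when the table is read. The Python sketch below mimics that idea for the four columns defined above. The sample line is made up for illustration, and unlike this sketch, Hive itself returns NULL for a field it can't cast rather than raising an error.

```python
# Column names and types copied from the CREATE TABLE statement above.
COLUMNS = [("user_id", str), ("movie_id", str), ("rating", float), ("tmstmp", str)]

def parse_row(line, delimiter="\t"):
    """Split one delimited line and cast each field to its column type."""
    fields = line.rstrip("\n").split(delimiter)
    return {name: cast(value) for (name, cast), value in zip(COLUMNS, fields)}

# Hypothetical sample line in tab-delimited form, for illustration only.
print(parse_row("196\t242\t3\t881250949\n"))
```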

At this point the table is created but there's no data in it; that part comes in a moment. I can see the table structure and its empty state from the Hive command-line:

hive> describe movie_ratings;

OK

user_id string

movie_id string

rating float

tmstmp string

Time taken: 0.168 seconds

hive> select count(*) from movie_ratings;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_201303171815_0021, Tracking URL = http://localhost.localdomain:50030/jobdetails.jsp?jobid=job_201303171815_0021

Kill Command = /usr/lib/hadoop-0.20/bin/hadoop job -Dmapred.job.tracker=localhost.localdomain:8021 -kill job_201303171815_0021

2013-04-25 07:40:51,581 Stage-1 map = 0%, reduce = 0%

2013-04-25 07:40:56,617 Stage-1 map = 0%, reduce = 100%

2013-04-25 07:40:58,640 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201303171815_0021

OK

0

Time taken: 12.931 seconds

hive>

and also from the Beeswax web UI:

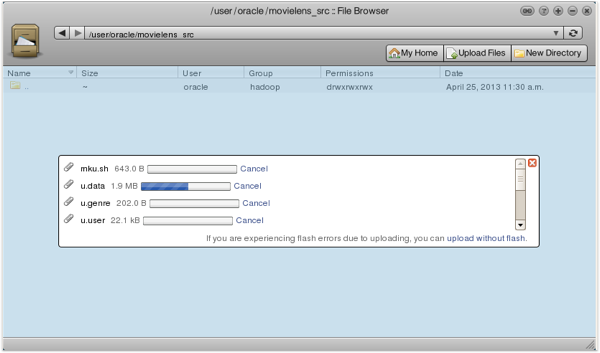
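
It's worth noticing that even a count against an empty table launched a MapReduce job with a single reducer, which is why it took around thirteen seconds to return zero. As a toy illustration of the general shape of such a job (and not Hive's actual execution plan), the Python below has each "mapper" count the rows in its own split and one "reducer" add up the partial counts. The function names and sample splits are my own.

```python
from functools import reduce

def map_phase(split):
    """Each mapper emits a partial row count for its own split of the file."""
    return sum(1 for _ in split)

def reduce_phase(partial_counts):
    """A single reducer adds the partial counts into the final total."""
    return reduce(lambda a, b: a + b, partial_counts, 0)

# Hypothetical splits of a small file; an empty table would have no rows at all.
splits = [["row1", "row2", "row3"], ["row4", "row5"], []]
total = reduce_phase(map_phase(s) for s in splits)
print(total)
```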

So how do we get the data into this table, without any tools such as ODI? I can either load data straight from files on my local workstation, or I can upload them, for example using Hue, into the HDFS filesystem first.

Now I can use the HiveQL LOAD DATA command to load one of these HDFS files into Hive, and then count how many rows have been loaded, like this:

hive> load data inpath '/user/oracle/movielens_src/u.data'

> overwrite into table movie_ratings;

Loading data to table default.movie_ratings

Deleted hdfs://localhost.localdomain/user/hive/warehouse/movie_ratings

OK

Time taken: 0.341 seconds

hive> select count(*) from movie_ratings;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_201303171815_0022, Tracking URL = http://localhost.localdomain:50030/jobdetails.jsp?jobid=job_201303171815_0022

Kill Command = /usr/lib/hadoop-0.20/bin/hadoop job -Dmapred.job.tracker=localhost.localdomain:8021 -kill job_201303171815_0022

2013-04-25 08:14:24,159 Stage-1 map = 0%, reduce = 0%

2013-04-25 08:14:32,340 Stage-1 map = 100%, reduce = 0%

2013-04-25 08:14:42,420 Stage-1 map = 100%, reduce = 33%

2013-04-25 08:14:43,428 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201303171815_0022

OK

100000

Time taken: 26.32 seconds

hive>

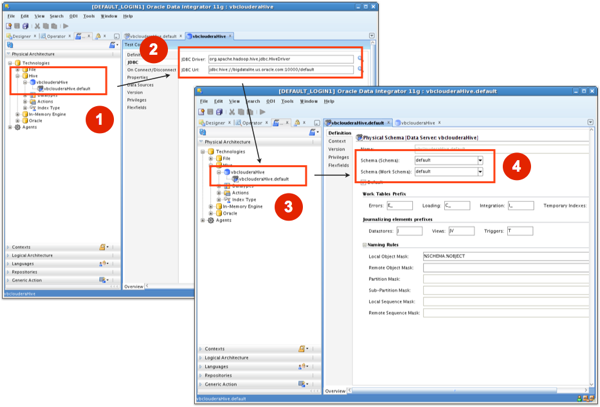
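
The two loading routes mentioned earlier differ only by a keyword in the LOAD DATA statement: adding LOCAL reads the file from the filesystem of the machine running the Hive client, whereas without it the path is resolved in HDFS and the file is moved into the table's directory. Here's a small Python sketch that builds both variants. The helper `load_data_stmt` and the local path are hypothetical, while the HDFS path is the one I used above.

```python
def load_data_stmt(path, table, local=False, overwrite=False):
    """Build a HiveQL LOAD DATA statement as a string."""
    stmt = "LOAD DATA "
    if local:
        # LOCAL: read from the Hive client's filesystem instead of HDFS.
        stmt += "LOCAL "
    stmt += f"INPATH '{path}' "
    if overwrite:
        # OVERWRITE: replace any data already in the table.
        stmt += "OVERWRITE "
    stmt += f"INTO TABLE {table}"
    return stmt

# Same statement as the one typed into the Hive shell above.
print(load_data_stmt("/user/oracle/movielens_src/u.data", "movie_ratings",
                     overwrite=True))

# Hypothetical local file path, for illustration only.
print(load_data_stmt("/home/oracle/movielens_src/u.data", "movie_ratings",
                     local=True, overwrite=True))
```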

So how does this process look when using ODI to do the Hive data loading? Let's start by importing the Hive table metadata for the movie_ratings table I just created from the Hive command-line shell, by going over to the Topology navigator in ODI 11.1.1.6. Note that you'll need to configure ODI to connect to your Hive, HDFS and Hadoop environment beforehand, using the Oracle Data Integrator for Hadoop documentation as a guide; this adapter is an extra-cost license option on top of base ODI Enterprise Edition.

Hive has its own technology type within the Topology navigator, and you create the connection through to Hive using the HiveJDBC driver, first adding the connection to the Hive server and then specifying the particular Hive database / namespace, in this case selecting the "default" database for my Hive system.

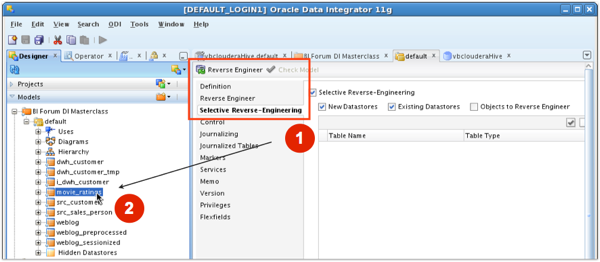

Now I can reverse-engineer the Hive table structures into a Designer navigator model, just like any other relational table structure.

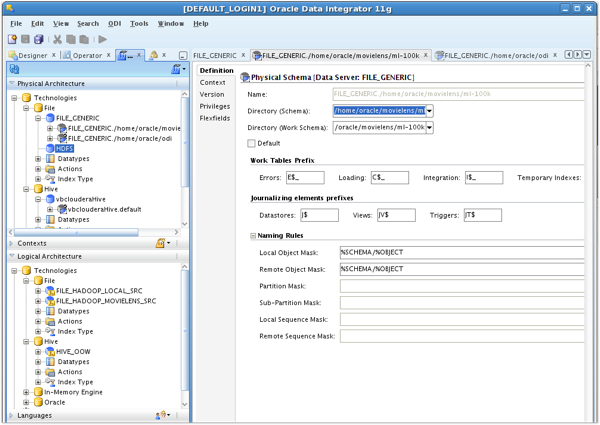

Within the ODI Topology navigator you can then create File technology connections either to files held in HDFS or, more likely with ODI, to files on your workstation or server filesystem, like this:

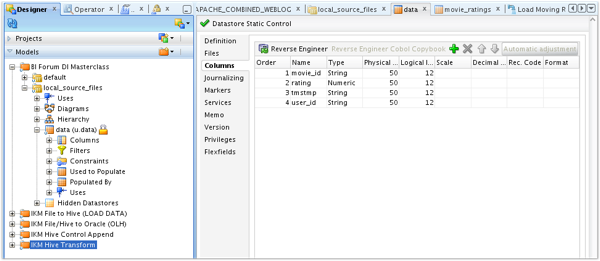

and then add the file datastores to the Designer navigator Model list, entering the correct delimiter information and reversing the column definitions into the datastore definition.

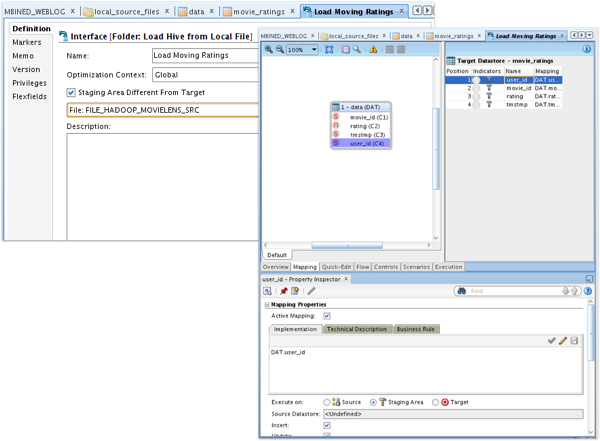

Now it's a case of creating an interface to load the Hive table. In this instance, I map each of the source file "columns" into the Hive table's columns, as the source file is delimited with an easily-usable structure.

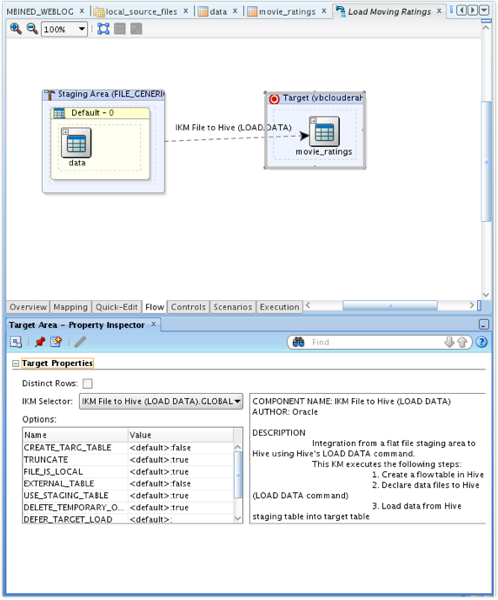

Then, over in the Flows tab for the interface, I make sure the IKM File to Hive knowledge module is selected, keep the default values for the KM options (more on these in a moment), and then save the interface.

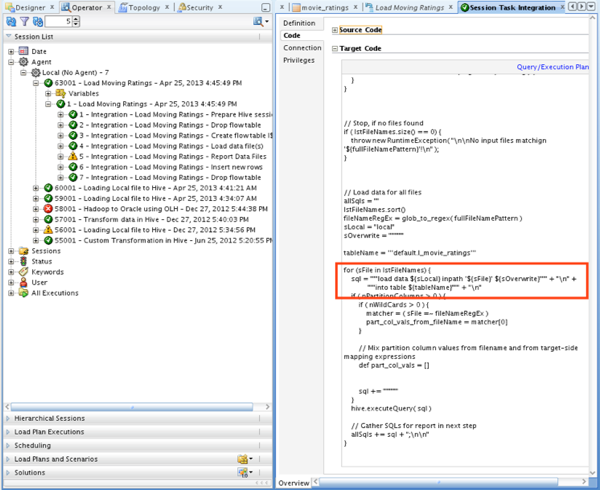

Now it's a case of running the interface and checking the results. Notice, in the Operator navigator code panel, the LOAD DATA command that ODI generates dynamically, similar to the one I wrote manually earlier on in the article.

Going back to my Hive command-line session, I can see that there are now 100,000 rows in the movie_ratings Hive table:

hive> select count(*) from movie_ratings;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_201303171815_0024, Tracking URL = http://localhost.localdomain:50030/jobdetails.jsp?jobid=job_201303171815_0024

Kill Command = /usr/lib/hadoop-0.20/bin/hadoop job -Dmapred.job.tracker=localhost.localdomain:8021 -kill job_201303171815_0024

2013-04-25 16:59:12,275 Stage-1 map = 0%, reduce = 0%

2013-04-25 16:59:18,346 Stage-1 map = 100%, reduce = 0%

2013-04-25 16:59:29,467 Stage-1 map = 100%, reduce = 33%

2013-04-25 16:59:30,475 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201303171815_0024

OK

100000

Time taken: 27.251 seconds

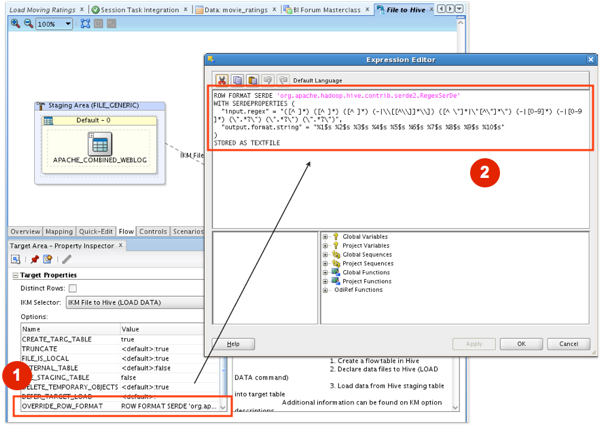

Now in many cases the data going into a Hive table isn't neatly arranged into columns within delimited files; it could be, for example, web log data that you'll need to parse using regular expressions or other APIs or standard parsers. When that's the case, you can use an option with the IKM File to Hive knowledge module to override the normal column-to-column mappings and instead use an expression, something Oracle have done in their demo environment for parsing these types of log files.
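
As a rough illustration of the kind of regular-expression parsing involved, the Python below maps each capture group in a pattern to a named column, which is broadly what a regex-based row format does for Hive. The pattern is a simplified one for Apache common log format and the column names are my own; neither is the expression used in Oracle's demo environment.

```python
import re

# Simplified pattern for Apache common log format; an assumption made for
# illustration, not the expression used in Oracle's demo environment.
LOG_PATTERN = re.compile(
    r'(\S+) (\S+) (\S+) \[([^\]]+)\] "([^"]*)" (\d{3}) (\S+)'
)
COLUMNS = ["host", "identity", "user", "time", "request", "status", "size"]

def parse_log_line(line):
    """Map each regex capture group to a column name.

    Returns None when the line does not match the pattern.
    """
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    return dict(zip(COLUMNS, match.groups()))

# Made-up sample log line, for illustration only.
sample = ('127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] '
          '"GET /apache_pb.gif HTTP/1.0" 200 2326')
print(parse_log_line(sample))
```

Every captured value comes back as a string here, so any casting to numeric types would be a separate step.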

"ROW FORMAT SERDE" is a reference to Hive's "Serializer - Deserializer", or row-formatting feature, that gives you the ability to use regular expressions and other data manipulation techniques to, in this case, allocate incoming file data to the proper columns in the target hive table.

So now we're at the point where we can use ODI to populate the Hive tables that OBIEE in turn uses to access Hadoop data sources. But what if the data we want to load into Hive isn't in the format or shape we need, and we need to join, filter or otherwise work with Hive data and tables before we can report on it? And what if we want to get data out of Hive and into regular tables, if a relational data store makes more sense than Hadoop for a particular reporting requirement? Check back tomorrow for the final part in this series, where we'll answer these remaining questions.