Oracle BI Apps 11.1.1.7.1 – GoldenGate Integration - Part 4: Initial Load and Replication

This is the final post in my series on Oracle BI Apps 11.1.1.7.1 and GoldenGate Integration. If you have been following along up to this point, we have the Source Dependent Data Store schema set up and ready to accept data from the OLTP source via replication, the GoldenGate installations are complete on both the source and target servers, and the GoldenGate parameter files are set up and ready to roll. Before the replication is started, an initial load of data from source to target must be performed.

Initial Load

As I mentioned in my previous posts, I plan on performing the initial load of the SDS a slightly different way than described in the Oracle BI Applications documentation. Using the process straight out of the box, we must schedule downtime for the source application, as we do not want to skip any transactions that occur while data is being copied from the source to the SDS target. With a slight customization to the OBIA-delivered scripts, we can ensure the initial load and replication startup will provide a contiguous flow of transactions to the SDS schema.

Oracle BI Applications Scripts

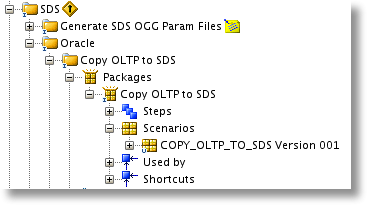

As with the other setup processes for the SDS schema and GoldenGate parameter files, there is an ODI Scenario that can be executed to generate the initial load scripts. In ODI Studio, browse to BI Apps Project > Components > SDS > Oracle > Copy OLTP to SDS. Expand Packages > Copy OLTP to SDS > Scenarios and you will find the Scenario “COPY_OLTP_TO_SDS Version 001”.

The Scenario calls an ODI Procedure named "Copy SDS Data". When executed, it generates a script with an insert statement for each target SDS table, using a select over a database link to the OLTP source. The link must be created manually and named exactly DW_TO_OLTP, as the ODI Procedure has the database link name hard-coded. This means the link must be redefined for each additional source if multiple GoldenGate OLTP-to-SDS replication processes are set up.

```sql
--Drop and recreate the database link.
drop database link DW_TO_OLTP;

create database link DW_TO_OLTP
connect to SYSADM identified by SYSADM
using 'PSDEMO';

--test the link.
select * from dual@DW_TO_OLTP;
```

The standard process would then be to execute the Scenario to generate the insert statements, schedule downtime for the OLTP application, and run the initial load scripts. Rather than go through those steps, let's look at how to eliminate the source system unavailability with a slight change to the code.

Zero Downtime

The initial load process will be customized to use the Oracle database flashback query capability, selecting the source data as it existed at a specific point in time, identified by the source SCN (system change number). Flashback query reconstructs those rows from undo data, so the source database needs enough undo retention to cover the duration of the load. Before the initial load is run, the GoldenGate extract process will be started to capture any transactions that occur during the data load. Finally, once the initial load completes, the GoldenGate replicat process will be started so that it applies only the transactions committed after the initial load SCN, eliminating the chance to skip or duplicate transactions from the source.
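To see why starting the extract before the load, and the replicat with an SCN filter after it, neither skips nor duplicates a transaction, here is a toy Python model. It is a sketch under simplifying assumptions, not GoldenGate or Oracle code: a table is a dictionary, each committed change is a hypothetical (scn, key, value) tuple, `state_as_of` plays the role of the flashback query and `apply_after` plays the role of the replicat's SCN filter. It also ignores transactions that were already open when the extract started.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical change record: (commit SCN, primary key, new value or None for a delete).
Change = Tuple[int, str, Optional[str]]


def apply_change(table: Dict[str, str], key: str, value: Optional[str]) -> None:
    """Apply one insert/update (value given) or delete (value None) in place."""
    if value is None:
        table.pop(key, None)
    else:
        table[key] = value


def state_as_of(changes: List[Change], scn: int) -> Dict[str, str]:
    """Rebuild the table as of an SCN, like a flashback query (AS OF SCN)."""
    table: Dict[str, str] = {}
    for change_scn, key, value in sorted(changes, key=lambda c: c[0]):
        if change_scn <= scn:
            apply_change(table, key, value)
    return table


def apply_after(table: Dict[str, str], trail: List[Change], after_scn: int) -> Dict[str, str]:
    """Apply only trail records committed after after_scn, like an AFTERCSN start."""
    result = dict(table)
    for change_scn, key, value in sorted(trail, key=lambda c: c[0]):
        if change_scn > after_scn:
            apply_change(result, key, value)
    return result


# Committed source changes (made-up data).
source_history: List[Change] = [
    (90, "A", "a1"),
    (95, "B", "b1"),
    (105, "A", "a2"),  # committed while the initial load is running
    (110, "C", "c1"),
    (120, "B", None),  # delete committed after the load SCN
]
extract_start_scn = 100  # the extract is started first ...
initial_load_scn = 108   # ... then the SCN for the flashback load is captured

# The trail holds everything committed after the extract started.
trail = [c for c in source_history if c[0] >= extract_start_scn]

sds = state_as_of(source_history, initial_load_scn)  # initial load
sds = apply_after(sds, trail, initial_load_scn)      # replicat with SCN filter
print(sds)  # {'A': 'a2', 'C': 'c1'}
```

Change 105 is both in the snapshot and in the trail; the SCN filter is what stops it from being applied twice, which on a real SDS table could mean a duplicate-key error for an insert. Capturing the load SCN before the extract is running would instead open a window whose changes appear in neither the snapshot nor the trail, which is why the extract is started first.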

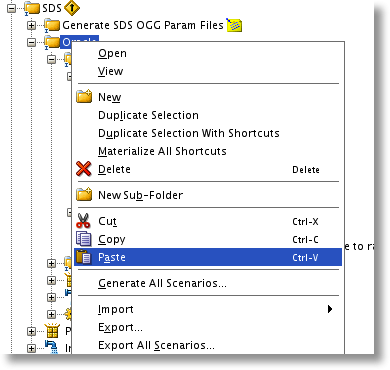

To perform the customizations, I recommend copying the entire "Copy OLTP to SDS" folder and pasting it in a new location. I simply pasted it in the same folder as the original and renamed it "RM Copy OLTP to SDS". One thing to note is that the Scenario will not be copied, since it must have a unique name throughout the work repository. We will generate the Scenario with a new name after we make our changes.

Open up the ODI Procedure "Copy SDS Data" from the copied directory. Click on the "Details" tab to review the steps. We will need to modify the step "Copy Data", which generates the DML script to move data from source to target. A review of the code will show that it uses the dictionary views on the source server, across the database link, to get all table and column names that are to be included in the script. The construction of the insert statement is the bit of code we will need to modify, adding the Oracle database flashback query syntax.

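```sql
...
-- Build the truncate statement for the SDS target table.
l_trunc_stmt := 'truncate table <$=jmodelSchema$>.' || col_rec.table_name;

-- Build a direct-path INSERT ... SELECT from the source table over the database link.
-- The "as of scn" clause makes the select a flashback query; ODI substitutes the
-- value of the INITIAL_LOAD_SCN variable for #INITIAL_LOAD_SCN.
l_sql_stmt := 'INSERT /*+ APPEND */ INTO <$=jmodelSchema$>.' || col_rec.table_name || ' (' || rtrim(l_column_list, ', ') || ') ' ||
              'SELECT ' || rtrim(l_column_expr, ', ') || ' FROM ' || col_rec.table_name || '@<%=DBLinkName%> as of scn #INITIAL_LOAD_SCN';
...
```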

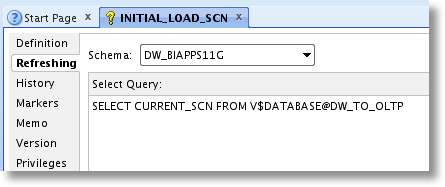

As you can see, #INITIAL_LOAD_SCN is a placeholder for an ODI Variable. I chose to use a variable to refresh the SCN from the source rather than hard-code the SCN value. I created a variable called INITIAL_LOAD_SCN and set its query on the Refreshing tab to run from the data warehouse over the database link, capturing the current SCN of the source database with a query such as "select current_scn from v$database@DW_TO_OLTP".

The user set up to connect to the OLTP source will need to be granted the "select any dictionary" privilege temporarily, in order to allow the select from V$DATABASE. The privilege can be revoked once the initial load is complete.

```sql
SQL> grant select any dictionary to SYSADM;
```
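The statements that the modified "Copy Data" step builds for each table can be sketched in Python as follows. This is a hypothetical helper, not ODI code: the `<$=jmodelSchema$>`, `<%=DBLinkName%>` and #INITIAL_LOAD_SCN substitutions become plain parameters, the column list doubles as the select expression list, and the schema name, tables and SCN in the usage example are made up. What it shows is that every table's SELECT carries the same SCN, so all SDS tables are loaded from one consistent snapshot of the source.

```python
from typing import List


def build_copy_statements(schema: str, table: str, columns: List[str],
                          dblink: str, scn: int) -> List[str]:
    """Return the truncate and flashback INSERT ... SELECT for one SDS table."""
    column_list = ", ".join(columns)
    truncate_stmt = f"truncate table {schema}.{table}"
    insert_stmt = (
        f"INSERT /*+ APPEND */ INTO {schema}.{table} ({column_list}) "
        f"SELECT {column_list} FROM {table}@{dblink} as of scn {scn}"
    )
    return [truncate_stmt, insert_stmt]


# Hypothetical schema, tables and SCN; the same SCN is used for every table.
initial_load_scn = 1234567
tables = {"PS_JOB": ["EMPLID", "EFFDT"], "PS_DEPT_TBL": ["DEPTID"]}
for table, columns in tables.items():
    for stmt in build_copy_statements("SDS_PSFT", table, columns, "DW_TO_OLTP", initial_load_scn):
        print(stmt + ";")
```

Refreshing the SCN once, in a variable, rather than calling for the current SCN inside each statement is what keeps the tables mutually consistent.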

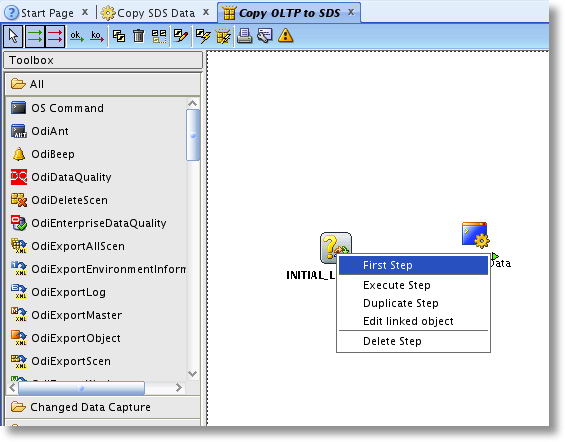

Now that the Variable is set and the Procedure code has been modified, we just need to put it all together in a Package and generate a Scenario. The Package "Copy OLTP to SDS" is already set up to call the Procedure "Copy SDS Data", so we can simply add the ODI Variable as a refresh step at the beginning of the Package.

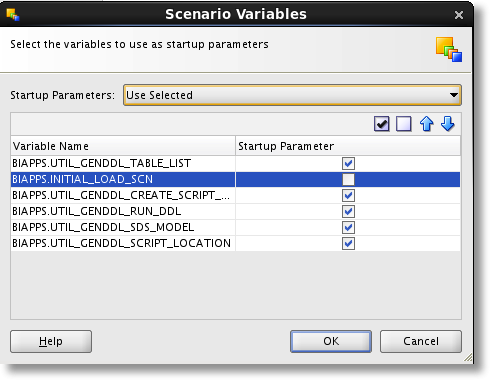

After saving the Package, we need to generate a Scenario to execute. When generating, be sure to set all Variables except for INITIAL_LOAD_SCN as Startup Variables, as their values will be set manually during the execution of the Scenario. Also, remember to provide a different name than the original Scenario.

GoldenGate Startup and Script Execution

All of the pieces are in place to kick off the initial load of the Source Dependent Data Store and fire up the GoldenGate replication. Even though the goal is zero downtime for the OLTP application, it is best to complete the process during a "slow" period, when few transactions are being processed, if possible.

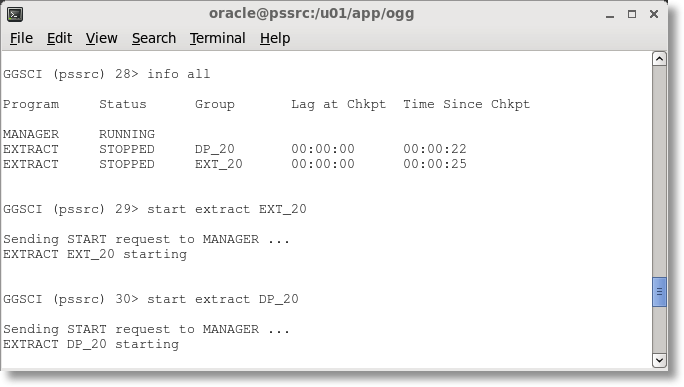

First, let's get the GoldenGate extract and data pump processes running and capturing source transactions. On the source server, browse to the GoldenGate directory and run the GGSCI application. Ensure the Manager is running, and execute the "start extract" command for each of the processes that need to be kicked off.

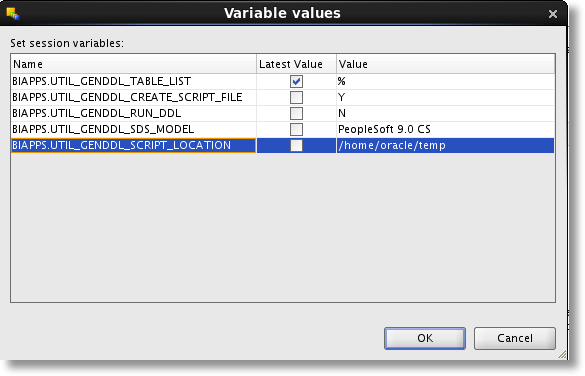

Now that transactions are flowing into the source trail and across the network to the target trail, we can execute the Scenario to generate the initial load script files. When the Scenario is executed, a prompt appears, allowing you to enter the appropriate value for each variable. If necessary, the script can be limited to specific tables by entering a comma-delimited list in the TABLE_LIST variable; we'll just use a wildcard value to generate the script for all tables. The other options are whether to generate a script file (Y or N) and whether to execute the DML during the execution of the Scenario (even though the Variable is named RUN_DDL). I have chosen to create a script file and run it manually.
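The TABLE_LIST behaviour described above, either a wildcard or a comma-delimited list, can be illustrated with a small Python sketch. It is hypothetical and not the ODI procedure's code: the wildcard character ("%" here), the case-insensitive matching and the table names are assumptions, so check the variable's default value in your own repository.

```python
from typing import List


def select_tables(table_list: str, available: List[str], wildcard: str = "%") -> List[str]:
    """Resolve a TABLE_LIST-style value against the list of SDS tables.

    Assumptions: a value equal to the wildcard selects every table; anything
    else is a comma-delimited list of table names, matched case-insensitively,
    with unknown names ignored.
    """
    value = table_list.strip()
    if value == wildcard:
        return list(available)
    wanted = {name.strip().upper() for name in value.split(",") if name.strip()}
    return [table for table in available if table.upper() in wanted]


sds_tables = ["PS_JOB", "PS_DEPT_TBL", "PS_LOCATION_TBL"]
print(select_tables("%", sds_tables))                    # all three tables
print(select_tables("ps_job, PS_DEPT_TBL", sds_tables))  # ['PS_JOB', 'PS_DEPT_TBL']
```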

The script, named "BIA_SDS_Copy_Data_<session_number>.sql", will disable constraints, drop indexes, and truncate each table in the SDS prior to loading the data from the source system. After executing the copy data script, we will want to run the "BIA_SDS_Schema_Index_DDL_<session_number>.sql" script to recreate the indexes.

```sql
SQL> @BIA_SDS_Copy_Data_885500.sql
SQL> @BIA_SDS_Schema_Index_DDL_880500.sql
```

One thing to note: the SDS Copy Data script disables the primary key constraints to speed up the insert, but the SDS Schema Index DDL script creates the constraints with an ALTER TABLE statement rather than re-enabling the existing ones. To work around this bug, I opened the Copy Data script in SQL Developer, copied all of the lines that disable the constraints, and pasted them into a new window. A simple find and replace switched the "disable" keyword to "enable", and I then executed the script against the SDS tables.
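The find and replace workaround can also be scripted. The Python sketch below assumes each disable statement sits on its own line in the form ALTER TABLE owner.table DISABLE CONSTRAINT name; that format, along with the sample script and object names, is an assumption rather than a copy of the generated file, so compare the output against the real script before running it.

```python
import re
from typing import List

# Assumed shape of the generated lines: "ALTER TABLE <owner>.<table> DISABLE CONSTRAINT <name>;"
DISABLE_RE = re.compile(
    r"^(\s*alter\s+table\s+\S+\s+)(disable)(\s+constraint\s+.*)$", re.IGNORECASE
)


def enable_statements(script_text: str) -> List[str]:
    """Return an ENABLE CONSTRAINT statement for every DISABLE CONSTRAINT line."""
    statements = []
    for line in script_text.splitlines():
        match = DISABLE_RE.match(line)
        if match:
            # Swap only the keyword, keeping its case so the output matches the script.
            keyword = "ENABLE" if match.group(2).isupper() else "enable"
            statements.append(match.group(1) + keyword + match.group(3))
    return statements


copy_data_script = """\
ALTER TABLE SDS_PSFT.PS_JOB DISABLE CONSTRAINT PS_JOB_PK;
truncate table SDS_PSFT.PS_JOB;
alter table sds_psft.ps_dept_tbl disable constraint ps_dept_tbl_pk;
"""
print("\n".join(enable_statements(copy_data_script)))
```

Matching the whole statement, rather than replacing every occurrence of "disable" in the file, avoids touching lines such as the truncate statements.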

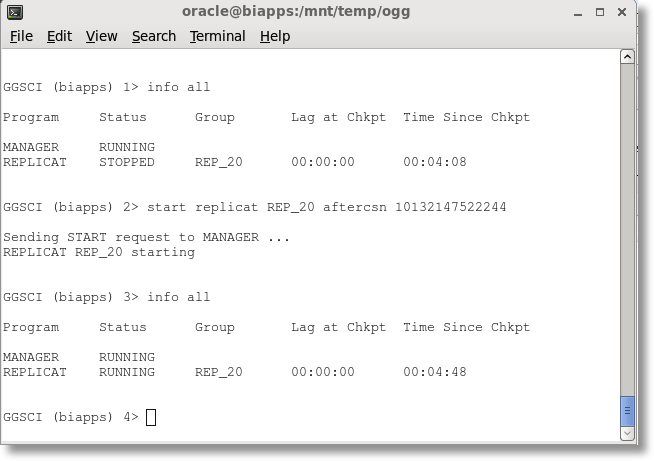

After copying the data, recreating the indexes in the SDS and enabling the PK constraints, we can finally start the GoldenGate replicat process on the target server. Again, log in to GGSCI and ensure the Manager process is running. This time, we start the process with the AFTERCSN option of the "start replicat" command (for example, "start replicat <replicat_name>, aftercsn <initial_load_scn>"), ensuring the replicat applies only the transactions in the trail file that were committed after the initial load SCN.

We now have our initial load of data to the SDS schema completed and GoldenGate replication started, all without any impact on the source OLTP application. The next time the Source Dependent Extract (SDE) Load Plan is executed, it will behave just as if it were running directly against the source database, only faster, since it pulls data from the SDS schema on the same server as the data warehouse.

Be on the lookout for more blog posts on OBIA 11.1.1.7.1 in the future. And if you need a fast-track to Oracle BI Applications implementation, feel free to drop us a line here at Rittman Mead.

Oracle BI Apps 11.1.1.7.1 – GoldenGate Integration

1. Introduction

2. Setup and Configuration

3. SDS and Parameter File Setup

4. Initial Load and Replication