End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.2 : Hive Table Joins, Aggregation and Loading

In this series of posts, I’m going to be looking at an end-to-end ETL process on Oracle Big Data Appliance, using Hadoop technologies to do the data manipulation and Oracle Data Integrator 12c to orchestrate the process. Over the five posts, I’ll be landing web server log data on the Hadoop cluster using Flume, loading that data into a Hive table, transforming and enhancing the dataset, and then loading the final dataset into an Oracle database using one of the Oracle Big Data Connectors. The first post in the list below has a schematic for the overall process, and over the rest of the week I’ll be adding the links in for the remaining posts in the series.

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.1 : Flume to Initial Hive Table

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.2 : Hive Table Joins, Aggregation and Loading

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.3 : Enhance with Oracle Reference Data via Sqoop, and CKMs

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.4 : Transforming Hive Data using Python & Streaming

- End-to-End ODI12c ETL on Oracle Big Data Appliance Pt.5 : Bulk Unload to Oracle

So in today’s step, I want to take the initial Hive table containing the full set of log data and do three things: first, extract the page details from the “request” column in the incoming Hive table; then join that to some reference data, also on the BDA, that’ll let me add the author and title of each post; and finally aggregate and project just certain columns from the dataset, so I’ve got a Hive table of just page accesses by IP address, with the author and title details.

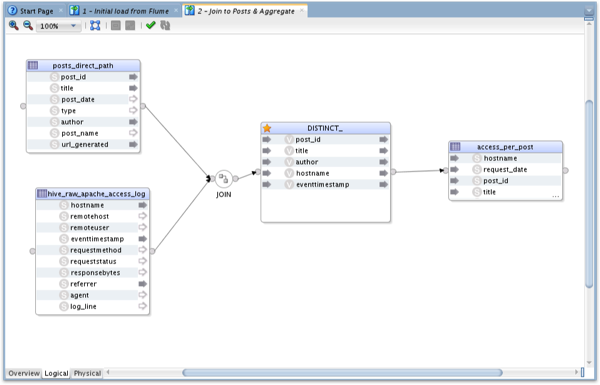

In fact this is the easiest of the five steps to set up and bring together, as we’re just using basic ODI functionality, albeit with Hive tables rather than regular database tables. In the screenshot below you can see the incoming weblog Hive table, with the individual columns split out in the previous step via the RegEx SerDe. I just join it to the table containing post and author details, apply a distinct on the join output and then load the results into a third Hive table.

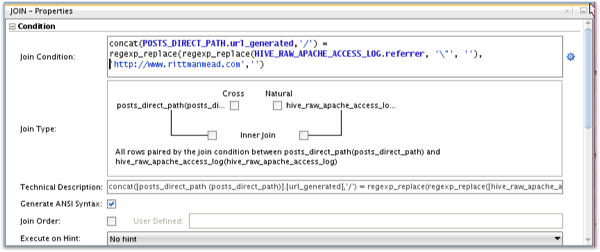

The join between the two tables is a bit complex, because I need to extract just the URL details out of the request field (which also contains the transport method and other metadata), but it’s no different from joining two regular database tables. Also, I did need to check the Generate ANSI Syntax checkbox, as Hive expects table joins to be in this format rather than the “Oracle” format.

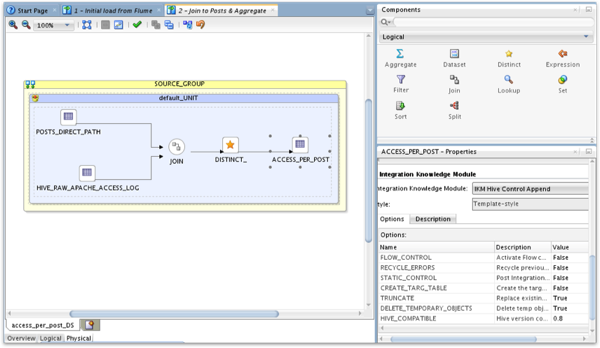
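To make the logic of that join a bit more concrete, here’s a minimal Python sketch of what the mapping is doing conceptually: pull the URL out of the request string, match it against the post reference data, and keep only distinct rows. This isn’t the HiveQL that ODI generates; the column names, sample rows and the `extract_path` and `join_and_distinct` helpers are all made up for illustration, and I’m assuming an inner join, so requests that don’t match a post are dropped.

```python
from typing import Optional

def extract_path(request: str) -> Optional[str]:
    """Return the URL part of a request line like 'GET /some/page/ HTTP/1.1'."""
    parts = request.split()
    # A well-formed request line is: method, URL, protocol
    return parts[1] if len(parts) >= 2 else None

# Hypothetical rows standing in for the incoming weblog Hive table
weblog_rows = [
    {"host": "10.0.0.1", "request": "GET /2014/05/first-post/ HTTP/1.1"},
    {"host": "10.0.0.1", "request": "GET /2014/05/first-post/ HTTP/1.1"},
    {"host": "10.0.0.2", "request": "GET /2014/06/second-post/ HTTP/1.1"},
    {"host": "10.0.0.3", "request": "GET /favicon.ico HTTP/1.1"},
]

# Hypothetical reference data: post URL -> title and author
posts_by_url = {
    "/2014/05/first-post/": {"title": "First Post", "author": "Author A"},
    "/2014/06/second-post/": {"title": "Second Post", "author": "Author B"},
}

def join_and_distinct(rows, posts):
    """Join log rows to posts on the extracted URL and de-duplicate the result."""
    result = set()
    for row in rows:
        path = extract_path(row["request"])
        post = posts.get(path)
        if post is None:
            continue  # assumed inner join: unmatched requests are dropped
        result.add((row["host"], path, post["title"], post["author"]))
    return sorted(result)

page_accesses = join_and_distinct(weblog_rows, posts_by_url)
for access in page_accesses:
    print(access)
```

The set does the job of the distinct component in the mapping, so the repeated hit from the first IP address only appears once in the output.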

Going over to the Physical part of the mapping, I set the KM to IKM Hive Control Append, turn on the feature to enable table truncation prior to load, and I’m ready to go.

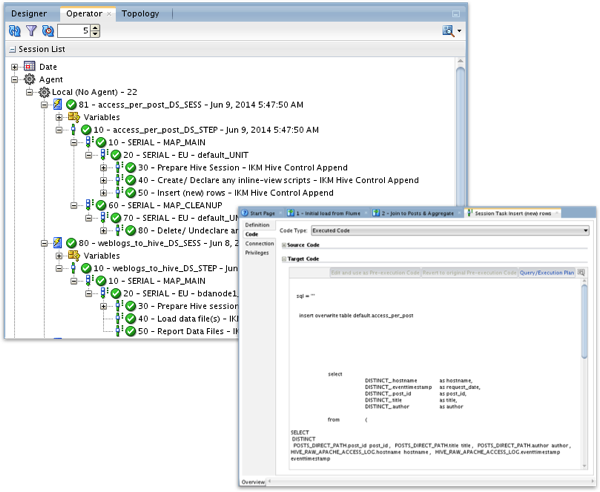
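The reason for turning on truncation is that it makes the load repeatable: without it, each run of the mapping would append another copy of the same rows to the target table. As a rough Python sketch of that behaviour (the `load_target` function and the list standing in for the target Hive table are purely illustrative, not anything the KM exposes):

```python
def load_target(target, new_rows, truncate=True):
    """Append new_rows to target, optionally emptying target first."""
    if truncate:
        target.clear()  # equivalent in spirit to truncating the target table
    target.extend(new_rows)
    return target

rows = [("10.0.0.1", "/2014/05/first-post/")]

# With truncation, running the load twice leaves one copy of the data
truncated_target = []
load_target(truncated_target, rows, truncate=True)
load_target(truncated_target, rows, truncate=True)

# Without truncation, the second run duplicates the rows
appended_target = []
load_target(appended_target, rows, truncate=False)
load_target(appended_target, rows, truncate=False)

print(len(truncated_target), len(appended_target))
```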

Then, when I execute the mapping, I can see its progress in the Operator navigator, and the code view shows me the HiveQL commands that the KM has generated to move data around the BDA.

So far so good, and as I said, this was the easy bit. For the next transformation I want to bring in some additional reference data from an Oracle database, so the question for me is whether I need to land that data into the BDA before doing the transformation, or whether I can create a join between the Oracle table and my Hive table. Check back tomorrow for the next installment...