Options for Enabling ODI11g+12c Standalone Agents for High-Availability (or ... Why JEE Agents are the Best Option)

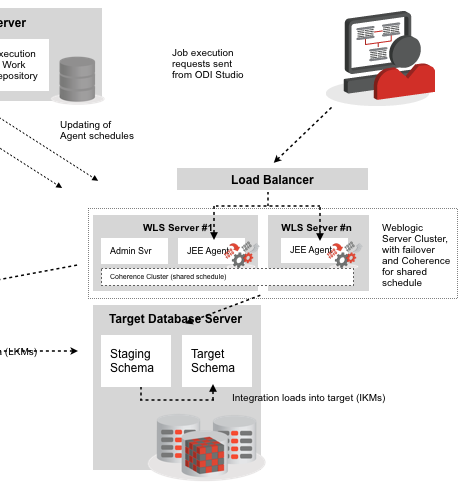

A few years ago we posted some articles on the blog around Oracle Data Integrator ETL restartability and resilience, and making ODI’s agents highly-available. The context around those posts was a large data integration project we were doing for a client who was deploying Oracle Fusion Middleware across the entire enterprise, and they wanted us to use the new ability within ODI11g to deploy agents within WebLogic Server Managed Servers to provide true high-availability for their ETL platform. ODI11g in this topology uses Oracle Coherence to provide a middleware-based data grid that holds a shared record of the agent schedule, so that another agent can pick up and resume tasks in the schedule if the main agent falls over mid-way through execution. The diagram below shows this topology, which was then carried forward into ODI12c and can be used now through a feature called “JEE Agents”.

And this is our standard recommendation to clients who want to make their ODI agents as highly-available as possible, so that there’s always an active agent ready to receive execution or schedule requests, and schedules keep running even if the agent running them falls over mid-way through execution. But the JEE Agent approach has some significant prerequisites:

- You need to deploy them within Oracle WebLogic Server Enterprise Edition, with at least two machines in a cluster within the WebLogic domain, which can be expensive for clients without the required WebLogic Server licenses or who haven’t got suitable hardware available

- You also need Oracle Coherence for the shared record of ODI schedules that each JEE agent can get access to, and the client may not have a license for Coherence in their current WebLogic/Fusion Middleware license deal

- The customer might also, understandably, want to properly understand what they can do with just Standalone Agents before they shell out for WebLogic Server Enterprise Edition, Coherence and a bunch of new servers

For anyone new to Oracle Data Integrator, agents are Java processes that either sit within WebLogic Server clusters or directly on source and target servers, and take the instructions within your ODI mappings, packages and load plans and execute them as SQL instructions sent to databases, web service calls or whatever other interactions your ETL processes require. Agents can either receive instructions ad-hoc from the ODI Studio application or via command-line or web service calls, or they can be assigned one or more schedules that they then execute at the appropriate time to move data into your data warehouse or between various applications. ODI11g has two types of agents: JEE agents as mentioned earlier, and what are called Standalone Agents. Standalone Agents run in a plain Java VM and are started from the command-line; they don’t require WebLogic Server and are mainly used because of their small footprint (you can run them directly on the database server that’ll be performing the Load and Transform part of an ELT job, for example), but they don’t have the HA capabilities of agents running in WebLogic Server and they don’t have access to all the “enterprise” Java features provided to agents running within WebLogic Server.

Borkur and I worked with just such a customer recently, and it was a useful opportunity to dig into exactly how Standalone Agents handle failover, and how close you can get to JEE agents if you’d like some degree of high-availability for your ODI setup. As this was a new implementation of ODI for the customer and they were currently using OBIEE11g, their first thought was to deploy ODI11g until such time as they could upgrade their entire Fusion Middleware stack to 12c. As we were only talking about Standalone Agents, though, my advice was that there was no harm in deploying ODI12c instead of the 11g version, as it didn’t really involve any WebLogic integration, and they could then benefit from the improved developer features in ODI12c such as flow-based mappings and deployment specifications.

High-Availability for ODI11g Standalone Agents - OPMN and “Cross-Wiring” to Create a Single Physical/Logical Standalone Agent

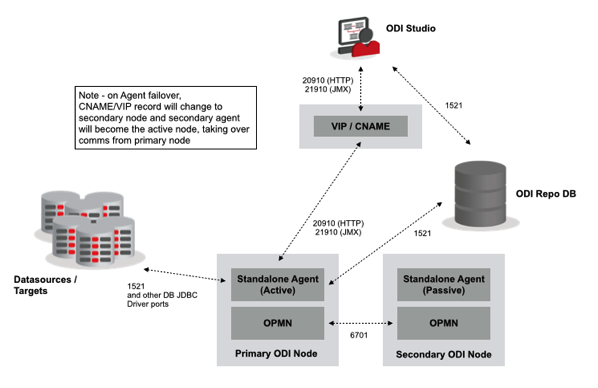

So let’s start with Standalone Agents within an ODI11g deployment. In this instance, the customer had two datacenters and wanted to put an agent in each to provide some degree of HA and disaster recovery, with one agent at any one time being the “primary” agent that received job execution requests and was assigned the scheduled daily load. We were also able to deploy OPMN (Oracle Process Manager and Notification Server), installed as part of the Oracle Web Tier Utilities download and the recommended way to provide some degree of high-availability for ODI11g Standalone Agents.

What we thought would be interesting here, though, was to follow John Goodwin’s instructions on cross-wiring two Standalone Agent + OPMN installs to provide active/passive failover across two server nodes. John’s article is about agents running Hyperion data loading jobs, but it’s just as applicable to our situation, so we went with the topology below to try out active/passive failover for our ODI11g Standalone Agents across two nodes.

To start with, each Standalone Agent is registered with its local OPMN instance so that opmnctl can be used to stop, start and restart it. In our setup, the primary ODI node (odi11g-node1.rittmandev.com) currently has the active Standalone Agent instance running, as I can see by using the opmnctl command-line utility:

```
[oracle@odi11g-node1 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   21891 | Alive
ohs1                             | OHS                |     N/A | Down
```

If I switch over to the second node (odi11g-node2.rittmandev.com), I’m currently leaving that agent disabled, as it’s the “passive” part of my active/passive arrangement.

```
[oracle@odi11g-node2 ~]$ cd /home/oracle/Middleware/Oracle_WT1/instances/instance1/bin
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |     N/A | Down
ohs1                             | OHS                |     N/A | Down
```
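Because this walkthrough keeps checking whether the agent is Alive, Init or Down, that check is easy to script. Here is a minimal Python sketch that parses `opmnctl status` output, assuming the pipe-separated `ias-component | process-type | pid | status` layout shown in the transcripts. The function names are hypothetical, and in practice you would feed it the captured output of `./opmnctl status` rather than the sample string.

```python
from typing import Dict, NamedTuple, Optional


class OpmnProcess(NamedTuple):
    """One row of the `opmnctl status` process table."""
    component: str
    process_type: str
    pid: Optional[int]  # None when opmnctl reports N/A
    status: str


def parse_opmnctl_status(output: str) -> Dict[str, OpmnProcess]:
    """Parse `opmnctl status` text into rows keyed by ias-component.

    Assumes four pipe-separated columns: ias-component | process-type | pid | status.
    Separator lines use '+' rather than '|', so they are skipped automatically.
    """
    rows: Dict[str, OpmnProcess] = {}
    for line in output.splitlines():
        cells = [cell.strip() for cell in line.split("|")]
        # Ignore anything that is not a four-column row, and the header row itself.
        if len(cells) != 4 or cells[0] == "ias-component":
            continue
        component, process_type, pid, status = cells
        rows[component] = OpmnProcess(
            component=component,
            process_type=process_type,
            pid=int(pid) if pid.isdigit() else None,
            status=status,
        )
    return rows


def agent_is_alive(output: str, component: str = "ODI11_Standalone") -> bool:
    """True if the named component is reported as Alive."""
    row = parse_opmnctl_status(output).get(component)
    return row is not None and row.status == "Alive"


SAMPLE = """\
Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   21891 | Alive
ohs1                             | OHS                |     N/A | Down
"""

if __name__ == "__main__":
    print(parse_opmnctl_status(SAMPLE)["ODI11_Standalone"])
    print(agent_is_alive(SAMPLE))
```

Treating `Init` as "not alive" is a deliberate choice here: during a restart the agent is not yet ready to accept requests.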

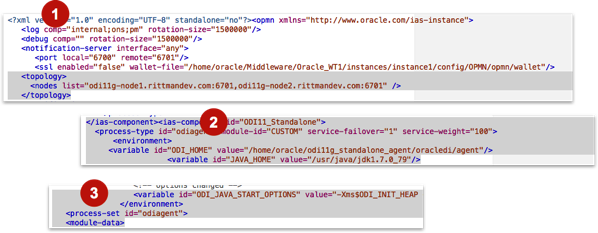

The clever part now, and as detailed in John Goodwin’s article, is registering each OPMN server with the other server in the active/passive setup, so that if one goes down, OPMN brings up the other one. This method doesn’t seem to be documented in the ODI11g documentation and later on I’ll explain why I think this is, but to “cross-wire” the OPMN servers in this way you have to make a few manual changes to each OPMN server’s opmn.xml configuration file, in my case located at /home/oracle/Middleware/Oracle_WT1/instances/instance1/config/OPMN/opmn/opmn.xml:

- First you create a new `<topology></topology>` section within the file that lists out the hosts that have OPMN installed that you want to include in the failover cluster (use the remote 6701 port rather than the local 6700 port for this)

- Next you add the `service-failover="1"` and `service-weight` settings to the `<process-type></process-type>` section for the agent you want to fail over
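The two manual opmn.xml edits just described can also be scripted. The sketch below uses Python's standard `xml.etree.ElementTree` against a heavily cut-down, hypothetical opmn.xml. It assumes that the host list lives in a `<nodes list="host:6701,...">` element inside `<topology>` under `<notification-server>`, and that the agent's `<process-type>` has `id="odiagent"` (the process type shown by opmnctl above); check both against your own file before relying on anything like this. Keep a backup too, since ElementTree does not preserve comments or the original formatting.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional

# Hypothetical, heavily trimmed opmn.xml for illustration only; a real file
# contains many more elements and attributes than this.
SAMPLE_OPMN = """\
<opmn>
  <notification-server interface="any">
    <port local="6700" remote="6701"/>
  </notification-server>
  <process-manager>
    <ias-instance id="instance1">
      <ias-component id="ODI11_Standalone">
        <process-type id="odiagent"/>
      </ias-component>
    </ias-instance>
  </process-manager>
</opmn>
"""


def local_name(tag: str) -> str:
    """Drop any '{namespace}' prefix so lookups work with or without one."""
    return tag.rsplit("}", 1)[-1]


def child(parent: ET.Element, name: str) -> Optional[ET.Element]:
    """First direct child with the given local name, if any."""
    return next((e for e in parent if local_name(e.tag) == name), None)


def cross_wire(xml_text: str, hosts: Iterable[str], weight: int,
               remote_port: int = 6701) -> ET.Element:
    """Add the failover topology and process-type flags (assumed layout)."""
    root = ET.fromstring(xml_text)
    ns = root.tag[: root.tag.index("}") + 1] if root.tag.startswith("{") else ""

    # 1. <topology><nodes list="host1:6701,host2:6701"/></topology>
    server = next(e for e in root.iter() if local_name(e.tag) == "notification-server")
    topology = child(server, "topology")
    if topology is None:
        topology = ET.SubElement(server, ns + "topology")
    nodes = child(topology, "nodes")
    if nodes is None:
        nodes = ET.SubElement(topology, ns + "nodes")
    nodes.set("list", ",".join(f"{host}:{remote_port}" for host in hosts))

    # 2. service-failover / service-weight on the agent's process-type.
    agent = next(e for e in root.iter()
                 if local_name(e.tag) == "process-type" and e.get("id") == "odiagent")
    agent.set("service-failover", "1")
    agent.set("service-weight", str(weight))
    return root


if __name__ == "__main__":
    root = cross_wire(SAMPLE_OPMN,
                      ["odi11g-node1.rittmandev.com", "odi11g-node2.rittmandev.com"],
                      weight=100)  # illustrative value only
    print(ET.tostring(root, encoding="unicode"))
```

The function is idempotent: running it twice rewrites the existing `<nodes>` element rather than adding a second one. The weight value is purely illustrative; check the OPMN documentation for how weights are compared across nodes.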

To test this, I kill the agent process on the primary node. OPMN restarts it once locally, but after I kill it a second time the agent is left down on that node:

```
[oracle@odi11g-node1 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   22064 | Alive
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node1 bin]$ kill -9 22064
[oracle@odi11g-node1 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   22147 | Init
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node1 bin]$ kill -9 22147
[oracle@odi11g-node1 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |     N/A | Down
ohs1                             | OHS                |     N/A | Down
```

Then, moving over to the secondary node’s OPMN instance, you can see that its agent has now changed from disabled to running:

```
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |     N/A | Down
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   10263 | Alive
ohs1                             | OHS                |     N/A | Down
```
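The sequence in these transcripts (restart the agent locally a limited number of times, then hand it to the other node) can be summarised as a small state model. This is a toy Python simulation to make that sequence explicit, not a description of how OPMN is implemented. The restart limit `max_local_restarts` and the rule that the remaining node with the highest weight takes over are assumptions for illustration, not values read from this setup.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    host: str
    weight: int              # stands in for the opmn.xml service-weight value
    local_failures: int = 0  # agent crashes seen on this node so far


@dataclass
class FailoverModel:
    """Toy model of one agent shared by cross-wired OPMN nodes (not OPMN itself)."""
    nodes: List[Node]
    max_local_restarts: int = 2  # assumption for illustration, not an OPMN default
    active: Optional[str] = None
    log: List[str] = field(default_factory=list)

    def _best(self) -> Optional[str]:
        """Highest-weight node that has not used up its local restarts."""
        eligible = [n for n in self.nodes if n.local_failures <= self.max_local_restarts]
        return max(eligible, key=lambda n: n.weight).host if eligible else None

    def start(self) -> None:
        self.active = self._best()
        self.log.append(f"start on {self.active}")

    def agent_crashed(self) -> None:
        """Record a crash of the active agent and decide where it runs next."""
        if self.active is None:
            return  # nothing running anywhere
        node = next(n for n in self.nodes if n.host == self.active)
        node.local_failures += 1
        if node.local_failures <= self.max_local_restarts:
            self.log.append(f"restart on {node.host}")
        else:
            # Local restarts exhausted: move the agent to the best remaining node.
            self.active = self._best()
            self.log.append(f"fail over to {self.active}")


if __name__ == "__main__":
    model = FailoverModel([Node("odi11g-node1.rittmandev.com", weight=101),
                           Node("odi11g-node2.rittmandev.com", weight=100)])
    model.start()
    for _ in range(3):
        model.agent_crashed()
    print("\n".join(model.log))
```

What the model deliberately leaves out is the DNS side: the "active" host changes, but nothing here tells ODI Studio or the Master Repository about it.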

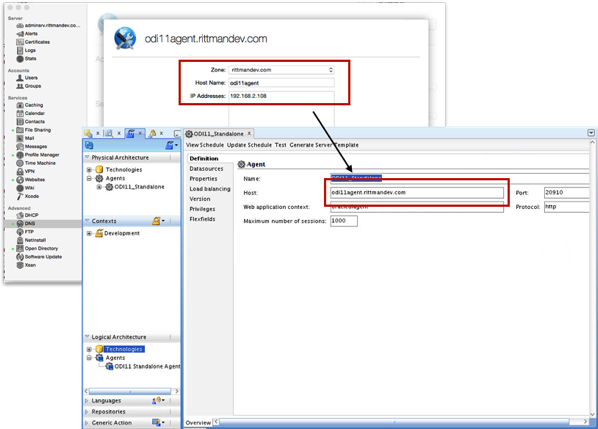

… which is pretty cool. But there are a couple of drawbacks with this approach. The first is that whilst OPMN can move the active agent between two or more server nodes, it doesn’t update the agent’s hostname in the ODI Master Repository. This means you either need to hack around with the ODI Master Repository tables to update its record of the hostname each time the agent fails over, or you do what I did and create a CNAME or VIP entry in the DNS server’s records so that the agent is reached by a virtual hostname, in our case odi11agent.rittmandev.com.

The problem, though, is that you need a means of updating this CNAME or VIP record when OPMN fails over the agent to the secondary node; otherwise any further requests to this agent through ODI Studio will be directed to the failed node, not the one that’s now running the active Standalone Agent. In practice you could set up something that pings both agents and updates the CNAME record in your DNS zone when the primary agent moves, but this involves working with your organization’s network or sysadmin team, which can sometimes be a challenge.
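As a rough illustration of the "something that pings both agents" idea, here is a Python sketch that probes each node with a plain TCP connection to the agent's listener port and, if the CNAME no longer points at a live agent, builds an input script for the BIND `nsupdate` tool to repoint it. Everything here is an assumption for illustration: port 20910 is the usual ODI agent default but should be checked against your agent's configuration, the 60-second TTL is arbitrary, and it assumes your DNS servers accept dynamic updates at all (many corporate setups will not, which is where the network team comes in).

```python
import socket
from typing import Callable, Optional, Sequence

AGENT_PORT = 20910  # assumption: the agent's listener port; check your agent config


def tcp_probe(host: str, port: int = AGENT_PORT, timeout: float = 3.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or name lookup failed
        return False


def choose_target(nodes: Sequence[str], current: str,
                  probe: Callable[[str], bool]) -> Optional[str]:
    """Keep the current CNAME target while it answers, else the first live node."""
    if probe(current):
        return current
    for node in nodes:
        if node != current and probe(node):
            return node
    return None  # nothing answering: leave DNS alone and alert someone instead


def nsupdate_script(alias: str, target: str, ttl: int = 60) -> str:
    """Build nsupdate input that replaces the alias's CNAME with target.

    Trailing dots make both names fully qualified.
    """
    return "\n".join([
        f"update delete {alias}. CNAME",
        f"update add {alias}. {ttl} CNAME {target}.",
        "send",
        "",
    ])
```

In use, something like cron would call `choose_target(nodes, current, tcp_probe)` and, when the answer differs from the current target, pipe `nsupdate_script("odi11agent.rittmandev.com", target)` into `nsupdate` with whatever key or authentication your DNS servers require. A successful TCP connect only shows that something is listening on the port, so a production check would want to go further than this.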

The other issue is what happens when an ad-hoc or scheduled job is running when the active agent goes down. Let’s check this now by creating a Load Plan and then running it through what’s currently the active agent:

I’ll now kill the process, and let OPMN restart it on that server node:

```
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   10263 | Alive
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node2 bin]$ kill -9 10263
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   10452 | Alive
ohs1                             | OHS                |     N/A | Down
```

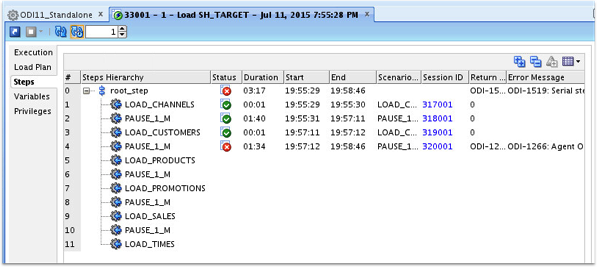

Notice how the pid (process ID) has now changed for the agent, which indicates that OPMN has restarted it on that node. Going back to ODI Studio, after a while I can see that the restarted agent has marked the load plan execution as failed:

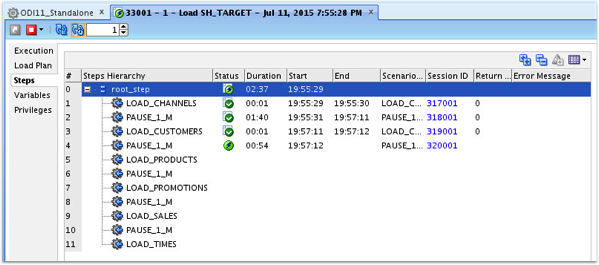

If I restart the load plan execution, though, a new load plan execution instance is created which automatically skips the steps that ran successfully before.
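To make that restart behaviour concrete, here is a toy Python model of what happens: the restart creates a new execution instance that carries over the steps already marked successful and only runs the rest. The step names and the `run_step` callback are hypothetical, and ODI's real restart behaviour is configurable per load plan step, so treat this as an illustration of the idea rather than a description of ODI internals.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Execution:
    """One load plan execution instance (a 'run' of the load plan)."""
    run_number: int
    status: Dict[str, str] = field(default_factory=dict)  # step name -> Done/Error


def execute(steps: List[str], run_step: Callable[[str], bool],
            previous: Optional[Execution] = None) -> Execution:
    """Run serial steps; on a restart, carry over steps that already succeeded."""
    run = Execution(run_number=1 if previous is None else previous.run_number + 1)
    for step in steps:
        if previous is not None and previous.status.get(step) == "Done":
            run.status[step] = "Done"  # skipped: already completed by the earlier run
            continue
        if run_step(step):
            run.status[step] = "Done"
        else:
            run.status[step] = "Error"
            break  # a serial plan stops at the first failed step
    return run


if __name__ == "__main__":
    # Hypothetical steps; the first run fails at load_fact_sales (e.g. agent killed).
    steps = ["stage_customers", "load_dim_customer", "load_fact_sales"]
    first = execute(steps, lambda s: s != "load_fact_sales")
    second = execute(steps, lambda s: True, previous=first)
    print(first)
    print(second)
```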

If I kill the agent’s process three times in-a-row though, like this:

```
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   10452 | Alive
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node2 bin]$ kill -9 10452
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   10557 | Init
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node2 bin]$ kill -9 10557
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |   10602 | Init
ohs1                             | OHS                |     N/A | Down

[oracle@odi11g-node2 bin]$ kill -9 10602
[oracle@odi11g-node2 bin]$ ./opmnctl status

Processes in Instance: instance1
---------------------------------+--------------------+---------+---------
ias-component                    | process-type       |     pid | status
---------------------------------+--------------------+---------+---------
ODI11_Standalone                 | odiagent           |     N/A | Down
ohs1                             | OHS                |     N/A | Down
```

the other agent will become the active agent, but it may take some time before it syncs up correctly with the Master Repository, because the CNAME created to point to the active node might take a while to change or get updated. In my case, the load plan continued to display as “running” for a couple of minutes more, until it was marked as failed.

What is good to see, though, is that the agent OPMN has failed over to still has the schedule assigned to it. As far as the Master Repository is concerned it’s still the same logical/physical agent, even though OPMN has moved it to a new node, because we access it via the special odi11agent.rittmandev.com virtual hostname.

So using OPMN and this active/passive failover setup has the advantage of making sure there’s always an agent ready to receive ad-hoc job requests or run jobs on the schedule, but it requires you to maintain a CNAME or Virtual IP for the agent, and to restart failed mappings, to make it work. So how does this all look with ODI12c?

High-Availability for ODI12c Standalone Agents - Node Manager and an Active/Active Setup

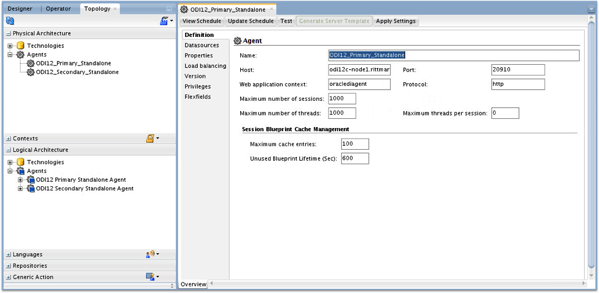

ODI12c has the same Standalone Agents and JEE Agents that ODI11g does, but introduces a third type of agent, Co-Located Agents, that confuses things a bit more. Moreover, ODI12c Standalone Agents are managed by Node Manager, not OPMN, which has been removed from the overall Fusion Middleware 12c architecture. Node Manager in this case runs within a lightweight pseudo-domain especially for ODI12c Standalone Agents, while the new Co-Located Agents run within a full WebLogic domain but outside of a WebLogic Server Managed Server.

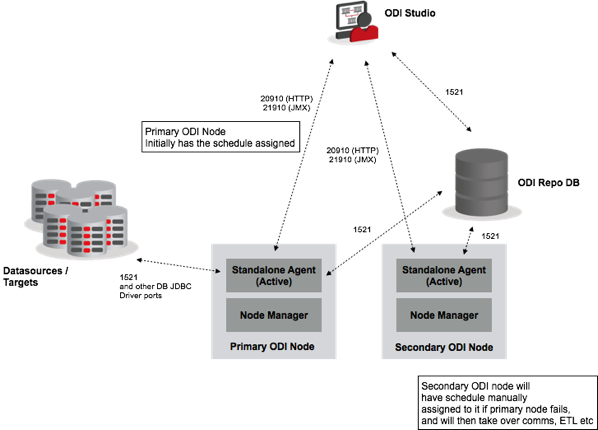

So what this means in-practice is that each node’s Standalone Agent with an ODI12c install is managed by Node Manager rather than OPMN, but as Node Manager outside of a full WebLogic domain can’t link-up with another Node Manager for an active/passive high-availability setup, each Standalone Agent has to be active all the time (or as much as possible) in what in this case is now an active/active HA setup.

Within ODI Studio and the Master Repository topology, then, each agent is registered separately, and it’s the operator’s choice which agent jobs are sent to and which agent runs the daily ETL schedule. You could of course set these agents up in a load-balancing cluster with a parent or master agent accepting all the job execution requests, but then you’d need to make that master agent highly-available too (and decide where it would run), and you’re back to the same issue again.

Each agent in this instance can either be started via the agent.sh utility, or via Node Manager like this (edited for brevity):

```
[oracle@odi12c-node1 bin]$ ./startComponent.sh ODI12_Primary_Standalone
Starting system Component ODI12_Primary_Standalone ...

Initializing WebLogic Scripting Tool (WLST) ...
...
Welcome to WebLogic Server Administration Scripting Shell

Type help() for help on available commands

Reading domain from /home/oracle/odi12c/user_projects/domains/base_domain

Please enter Node Manager password:
Connecting to Node Manager ...
Successfully Connected to Node Manager.
Starting server ODI12_Primary_Standalone ...
Successfully started server ODI12_Primary_Standalone ...
Successfully disconnected from Node Manager.

Exiting WebLogic Scripting Tool.

Done
```

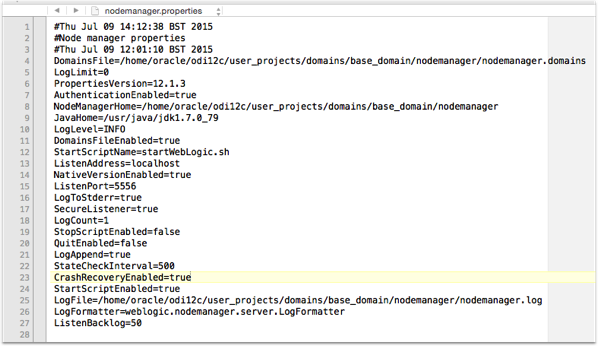

By default, like ODI11g Standalone Agents and OPMN, Node Manager won’t automatically restart an agent under its control if it falls over. If, however, you set the CrashRecoveryEnabled setting to “true” in the /home/oracle/odi12c/user_projects/domains/base_domain/nodemanager/nodemanager.properties file as shown in the screenshot below, ODI12c Standalone Agents that were started by Node Manager will get auto-restarted if the process fails.
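If you have several nodes to configure, the `nodemanager.properties` change can be scripted rather than made by hand. This is a minimal Python sketch that assumes the file uses simple `key=value` lines (the usual Java properties layout); `set_property` is a hypothetical helper, and you will most likely need to restart Node Manager before it picks up the change.

```python
import re
from pathlib import Path


def set_property(path: Path, key: str, value: str) -> bool:
    """Set key=value in a properties file, appending it if absent.

    Returns True if the file was changed. Commented-out lines (starting
    with '#') are left alone because they do not match the key pattern.
    """
    lines = path.read_text().splitlines()
    pattern = re.compile(rf"^\s*{re.escape(key)}\s*[=:]")
    new_line = f"{key}={value}"
    for i, line in enumerate(lines):
        if pattern.match(line):
            if line.strip() == new_line:
                return False  # already set; nothing to do
            lines[i] = new_line
            break
    else:
        lines.append(new_line)  # key not present yet
    path.write_text("\n".join(lines) + "\n")
    return True


if __name__ == "__main__":
    props = Path("/home/oracle/odi12c/user_projects/domains/base_domain"
                 "/nodemanager/nodemanager.properties")
    if props.exists() and set_property(props, "CrashRecoveryEnabled", "true"):
        print("Updated; restart Node Manager to apply.")
```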

As you can see in the Terminal session screenshot below, Node Manager picks up the fact that the ODI12c Standalone Agent process it started is no longer running (by checking running processes against lock files the system component created on startup), then automatically restarts it so that it’s running again at the end.

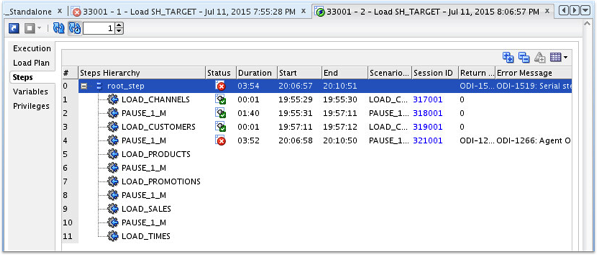

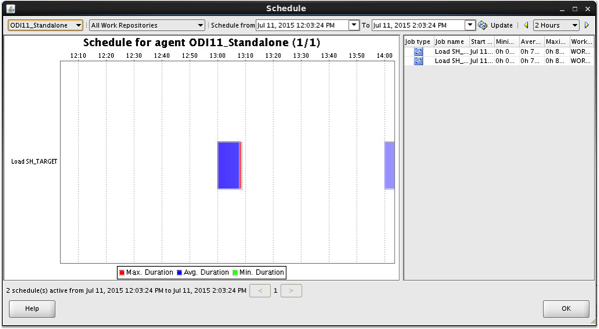

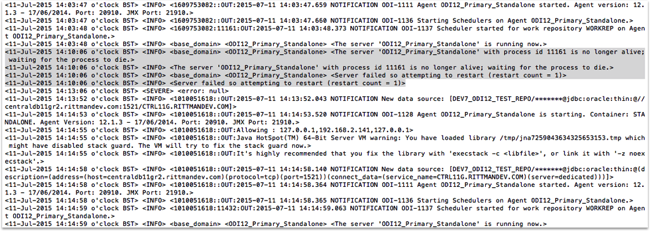

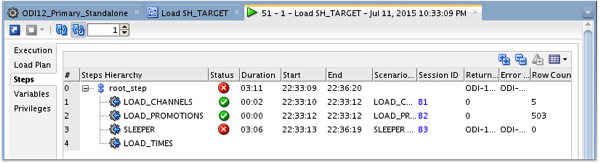
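The crash-detection step described above can be approximated in a few lines, which may also be useful for your own monitoring. This Python sketch reads a process ID from a pid file and checks whether that process still exists, using the POSIX convention that sending signal 0 only tests for existence. The pid file and its single-integer format are assumptions for illustration; they are not the actual lock files Node Manager writes for system components.

```python
import os
from pathlib import Path
from typing import Optional


def pid_from_file(path: Path) -> Optional[int]:
    """Return the pid recorded in path, or None if missing, unreadable or invalid."""
    try:
        pid = int(path.read_text().strip())
    except (OSError, ValueError):
        return None
    return pid if pid > 0 else None


def process_alive(pid: int) -> bool:
    """POSIX liveness check: signal 0 tests that the pid exists and sends nothing."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def needs_restart(pid_file: Path) -> bool:
    """True if a process we started (pid file present) is no longer running.

    No pid file means nothing was started, so there is nothing to recover.
    """
    pid = pid_from_file(pid_file)
    return pid is not None and not process_alive(pid)
```

A monitoring loop could call `needs_restart()` every few seconds and re-run the start command when it returns True, which is roughly what CrashRecoveryEnabled gives you without any extra scripting.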

As with ODI11g’s Standalone Agents and OPMN, load plans that are running when an ODI12c Standalone Agent fails then fail themselves, as shown in the screenshot below:

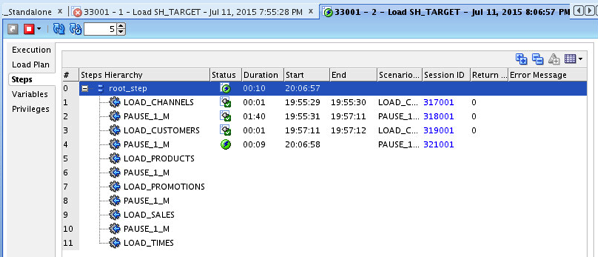

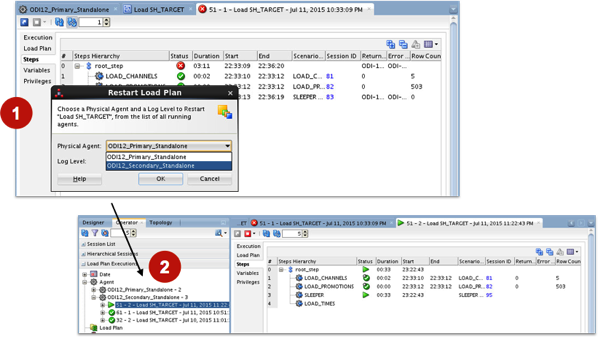

You can in fact restart failed load plans with either active agent; in this case I’m restarting it with the secondary Standalone Agent, simulating a complete failure of the first node rather than a process failure that Node Manager could automatically deal with.

Where things do get complicated with this active/active setup is when the agent you’ve assigned the schedule to fails. Unlike ODI11g, where there was just a single logical agent, the second agent here is completely independent of the first and will need to have the schedule assigned to it if the primary agent fails. The primary agent, once its server node is running again, will then need to have the schedule taken away from it; otherwise you’ll have two agents acting as the scheduler, each one running its own copy of the daily data load. One way around this would be to have the scheduling done by a third-party scheduler, which would either access the two active/active agents through a load-balancer and round-robin approach, or try to use one agent where possible, falling over to the other one.
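The second option for an external scheduler (use one agent where possible, fall over to the other) boils down to a preference-ordered dispatch. Here is a minimal Python sketch. `is_available` and `submit` are hypothetical hooks standing in for however your scheduler checks an agent and starts a load plan on it (for example via the command-line or web service calls mentioned earlier), and `ODI12_Secondary_Standalone` is an invented name for the second agent.

```python
from typing import Callable, Optional, Sequence


def dispatch_with_fallback(agents: Sequence[str],
                           is_available: Callable[[str], bool],
                           submit: Callable[[str], None]) -> Optional[str]:
    """Submit the job to the first available agent, in preference order.

    Because only the external scheduler decides where the job runs, neither
    ODI agent has the schedule assigned to it, so a recovered primary cannot
    start a second copy of the daily load on its own.
    """
    for agent in agents:
        if is_available(agent):
            submit(agent)
            return agent
    return None  # no agent up: alert an operator rather than retrying blindly


if __name__ == "__main__":
    agents = ["ODI12_Primary_Standalone", "ODI12_Secondary_Standalone"]
    chosen = dispatch_with_fallback(
        agents,
        is_available=lambda a: a != "ODI12_Primary_Standalone",  # simulate primary down
        submit=lambda a: print(f"starting daily load on {a}"),
    )
    print(chosen)
```

The design choice is that the schedule lives in exactly one place, outside ODI, which sidesteps the need to move the schedule between agents by hand.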

Conclusions - And why JEE Agents, WebLogic and Coherence Aren’t Such Overkill, After All...

Once you’ve been through all of this and looked at the limitations of a Standalone Agent-only setup, you can sort-of see why Oracle went down the WebLogic and Coherence route for their fully HA-capable agent topology. Node Manager can work across multiple servers when you run it within a WebLogic Server domain, and using Coherence to store a local (middleware-level) copy of the schedule means that all of the agents can keep track of what’s been executed and where they are in the schedule. Coupled with a load balancer and a Virtual IP that provides a consistent address for all of the JEE agents in the cluster, it’s a bit more engineering, but you can see why it was needed. In the meantime, the customer went with 12c in the end and put in place manual processes to deal with Standalone Agent failure in this active/active setup, which should be enough to tide them over until the next budget round, when they can put additional hardware and the JEE Agent setup into place.