Taking a Closer Look at Knowledge Modules in ODI12c - Component-Style and Multi-Connect KMs

Another question that came up from the ODI12c Bootcamp Course I’m delivering for a client in London at the moment is how to choose between the different knowledge modules that come with ODI12c. What with the choice now between template-style KMs and the new component-style KMs, the new option of multi-connect KMs, and the general question around which KM you pick within a KM type when building a table mapping, I thought it’d be interesting to take a closer look at how knowledge modules work with ODI12c and how you go about making the right choice of KM when creating a mapping.

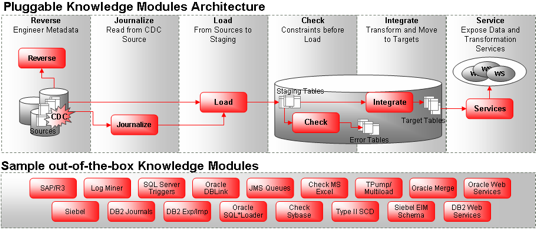

As a quick primer, Oracle Data Integrator up until the recent 12c release had six types of knowledge module you could use in a data mapping:

- Load Knowledge Modules, for loading source data out of the source database server and into a staging table typically on the target database platform

- Integrate Knowledge Modules, for taking that staging data and integrating (inserting, updating, merging etc) it into the target table

- Reverse Knowledge Modules, for reverse-engineering the table metadata from a source system

- Check Knowledge Modules, for performing data quality checks on source and target tables

- Journalise Knowledge Modules, for setting up change data capture on a source table or table set

- Service Knowledge Modules, for exposing tables or other datastore as CRUD-type web services

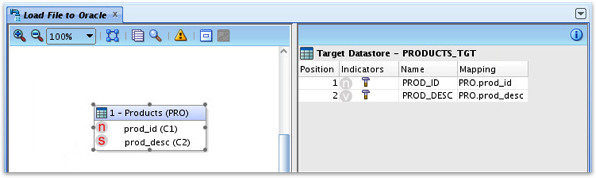

Using ODI11g as an example, when you created a new mapping you selected an LKM for extracting data out of your source database, an IKM for integrating the results into the target table, and optionally a CKM or JKM if you needed to run data quality checks or use table journalization (CDC). In all cases you had to first import the knowledge module definitions into the ODI11g Work Repository and your project before you could use them. To take an example, an ODI11g mapping where the source was a file and the target, an Oracle database, might look like this as a Mapping diagram:

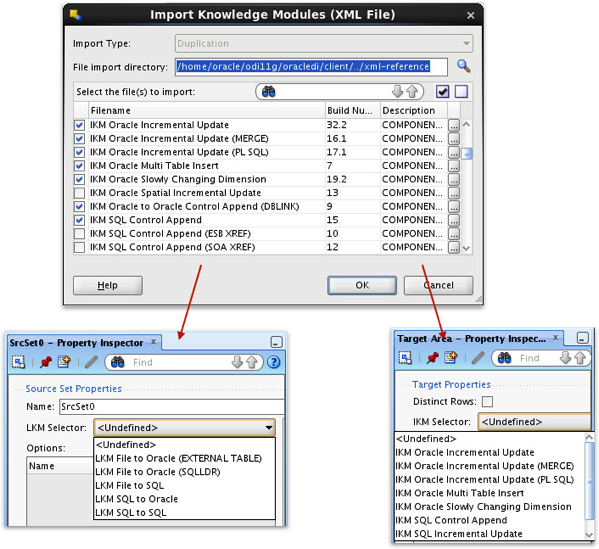

Looking at the Flow diagram, at the start there are no knowledge modules to select from as none have yet been imported:

I therefore import a selection of IKM, LKM and other KMs for the technologies I’m using, and then I’m able to assign an LKM and IKM to my flow diagram.

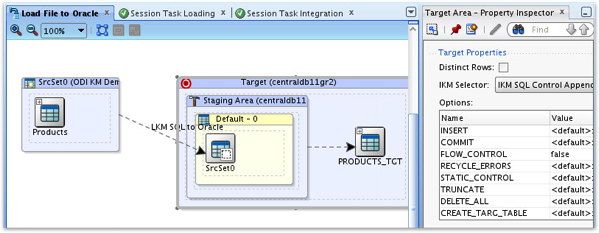

For both Load Knowledge Modules (LKMs) and Integration Knowledge Modules (IKMs), you have a number of options ranging from generic JDBC/SQL-type modules that connect to a source and then transfer the data using JDBC batch routines, through to highly-specialized ones that leverage particular platform technologies. I typically start the prototyping phase of my ODI projects by selecting the simplest, most generic LKM and IKM I can find, and once I’ve got the mapping logic correct I then shift to ones that use more of the underlying database’s features - the docs also have a nice guide for making your KM selection. For example, I might assign the LKM SQL to Oracle knowledge module to the source table and the IKM SQL Control Append one to the target, like this:

In this case, ODI will first create a Java routine that extracts the rows of data from the file via a JDBC connection and then loads that data into a staging table on the target database server. Then, that staging data will be integrated into the target table using a regular SQL INSERT statement.
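The two-step flow just described (load into a staging table, then integrate with a set-based INSERT) can be sketched outside ODI as follows. This is only an illustration of the pattern, not the code ODI generates: it assumes an in-memory SQLite database standing in for the target server, and the `customers` table, its columns and the inline CSV data are all hypothetical. The `C$_` prefix on the staging table follows ODI's naming convention for loading tables.

```python
import csv
import io
import sqlite3

# Hypothetical source "file"; in ODI this would be a file datastore.
SOURCE_FILE = io.StringIO("id,name\n1,Alice\n2,Bob\n3,Carol\n")


def run_mapping(conn, source):
    """Mimic the LKM-then-IKM flow: stage the rows, then integrate them."""
    cur = conn.cursor()

    # Target table (assumed to already exist in a real warehouse).
    cur.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT)")

    # "LKM" step: create a loading table on the target server and
    # batch-insert the rows read from the source.
    cur.execute('DROP TABLE IF EXISTS "C$_CUSTOMERS"')
    cur.execute('CREATE TABLE "C$_CUSTOMERS" (id INTEGER, name TEXT)')
    rows = [(int(r["id"]), r["name"]) for r in csv.DictReader(source)]
    cur.executemany('INSERT INTO "C$_CUSTOMERS" (id, name) VALUES (?, ?)', rows)

    # "IKM" step: a single set-based INSERT from staging into the target.
    cur.execute('INSERT INTO customers (id, name) SELECT id, name FROM "C$_CUSTOMERS"')

    # Clean up the staging table once the data is integrated.
    cur.execute('DROP TABLE "C$_CUSTOMERS"')
    conn.commit()
    return cur.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = sqlite3.connect(":memory:")
loaded = run_mapping(conn, SOURCE_FILE)
print(loaded)
```

The point of the split is that the row-by-row work happens only once, when loading the staging table; everything after that is plain SQL running inside the target database.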

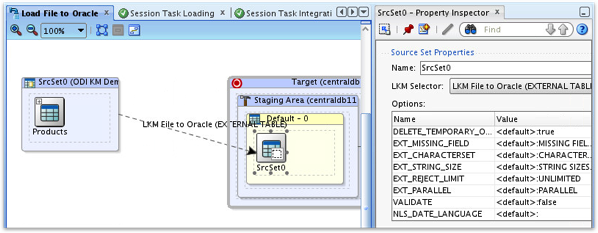

Of course I could do this more efficiently using an Oracle External Table. Let’s select the LKM File to Oracle (EXTERNAL TABLE) load knowledge module and also change the IKM to IKM Oracle Incremental Update. Note that this would only work if the target database server could see the file we’re loading in via this mechanism; if the file had to stay on a remote server then I’d have to stick with the IKM SQL Control Append and ODI would effectively ignore the request to use an Oracle External Table.
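To make the difference between an append-style and an incremental-update-style IKM concrete, here is a minimal sketch of the incremental pattern: update target rows whose key already exists and whose values have changed, then insert the rows whose key is new. It assumes SQLite in place of Oracle, and the `customers` and `stg_customers` tables and their contents are hypothetical. It illustrates the idea only and is not the SQL that IKM Oracle Incremental Update generates.

```python
import sqlite3


def incremental_update(conn):
    """Apply staged rows to the target: update changed rows, insert new ones."""
    cur = conn.cursor()

    # Step 1: update rows that already exist (matched on the key column id)
    # and whose non-key column differs from the staged value.
    cur.execute(
        "UPDATE customers "
        "SET name = (SELECT s.name FROM stg_customers s WHERE s.id = customers.id) "
        "WHERE EXISTS (SELECT 1 FROM stg_customers s "
        "              WHERE s.id = customers.id AND s.name <> customers.name)"
    )
    updated = cur.rowcount

    # Step 2: insert staged rows whose key is not yet in the target.
    cur.execute(
        "INSERT INTO customers (id, name) "
        "SELECT s.id, s.name FROM stg_customers s "
        "WHERE NOT EXISTS (SELECT 1 FROM customers t WHERE t.id = s.id)"
    )
    inserted = cur.rowcount

    conn.commit()
    return updated, inserted


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE stg_customers (id INTEGER, name TEXT);
    INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO stg_customers VALUES (1, 'Alice'), (2, 'Robert'), (3, 'Carol');
    """
)
updated, inserted = incremental_update(conn)
final_rows = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
print(updated, inserted, final_rows)
```

A control-append IKM would simply insert all three staged rows, which is why it suits initial loads and truncate-and-reload targets, whereas the incremental pattern suits targets that are refreshed repeatedly.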

With ODI12c, things are a bit different due to the introduction of another type of knowledge module: “Component-Style” Knowledge Modules. Component-style KMs were introduced with ODI12c for a few reasons. They made migration of mappings and projects from OWB easier, as OWB mappings are made up of lots of arbitrarily-arranged mapping operator components that can be combined into all types of data flow, and they also made it possible for Oracle to create lots more of these granular mapping components and use them across all technology types. For example, as Oracle’s David Allen talks about in this comment on one of our previous blog posts and in this follow-up blog of his own, Oracle could create a generic Table Function component-style KM and have it apply a SQL table function for Oracle sources, or run a Pig relation through an arbitrary Pig Latin script as I did in a more recent blog post.

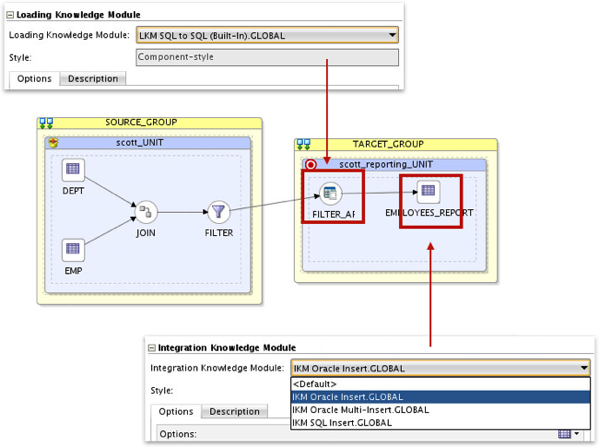

These new component-style KMs come built-in to ODI12c, which means that you don’t actually have to import any template-style KMs to get started with a mapping; in the example below from my blog post earlier today on ODI12c and Oracle Streams, I can run the mapping I’ve just created by just selecting from the built-in component-style KMs that ship with ODI12c out-of-the-box.

So should we now use component-style KMs when creating mappings and avoid using the old template-style ones? The docs don’t say this explicitly, but my impression is that component-style KMs are the way Oracle wants to take things forward. In most cases ODI will automatically select suitable component-style LKMs and IKMs when you create the physical mapping, and this is usually the best option. The only time I switch to a template-style IKM or LKM is if I’m using a platform technology that component-style KMs don’t yet cover, or I’m working on some edge-case; by default, though, I go with component-style LKMs and IKMs. Of course you still need to import JKMs and other KM types, and presumably Oracle will extend component KMs beyond IKMs and LKMs over time, but that’s my recommendation for now.

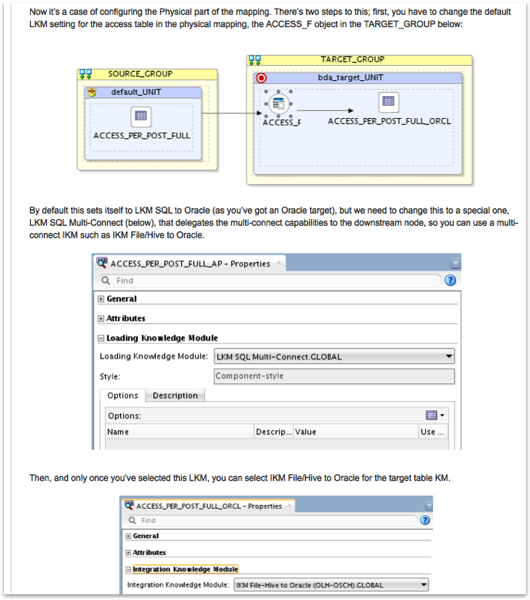

So now that we’ve covered what component-style KMs are, there’s another new KM concept that came along with ODI12c: “Multi-Connect” KMs. Multi-Connect KMs are a special type of template-style KM that allows the staging area to be on a separate data server to the target data warehouse, whereas most template-style KMs assume the staging and target data schemas are on the same data server. I used a multi-connect IKM File-Hive to Oracle knowledge module towards the end of another article where I used Oracle Loader for Hadoop to export data out of Hadoop and into an Oracle Database. Normally when you use an IKM the staging and target areas are on the same database, but in this case the staging table for the mapping was on the Hadoop (Hive) side. I therefore had to select LKM SQL Multi-Connect as the load knowledge module, whereupon I could then select IKM File-Hive to Oracle (OLH/OSCH) as the integration knowledge module.

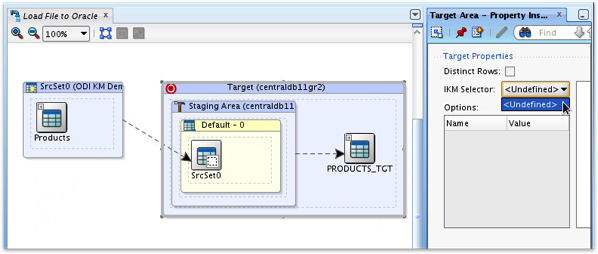

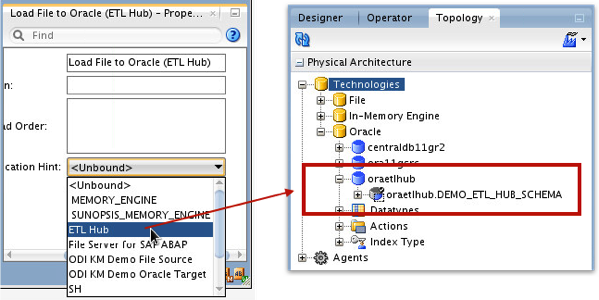

Similarly, with our ODI12c mapping, if I wanted to move the staging area to the source database, or more commonly to a separate “ETL-hub”-style database where the customer wants a more ETL (compared to ELT)-style integration setup, I can request that the staging location for the mapping moves to this hub database using a new feature in the logical mapping editor:

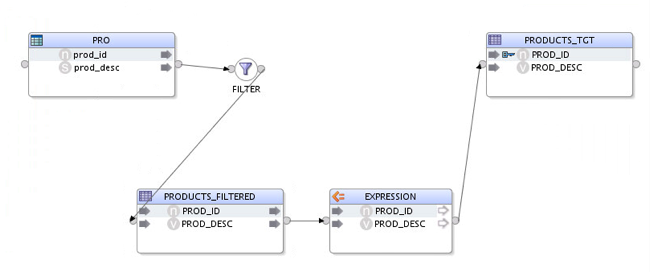

Then in the mapping I can add some transformations that take place on the ETL hub database, something a customer would most probably do to avoid licensing ODI on their full data warehouse database server.

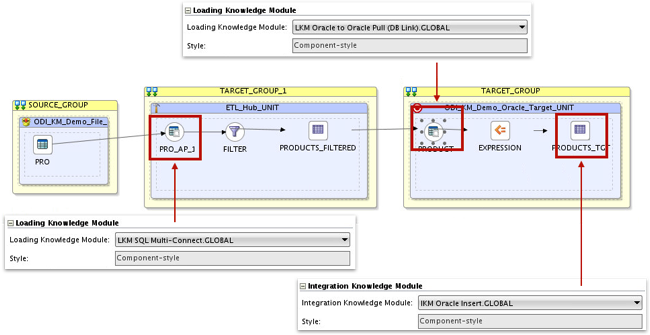

Then when I switch to the Physical mapping view I can see this additional execution unit with the transformations in it. I first select the LKM SQL Multi-Connect to bring the file data in, and then I can select an LKM and IKM combination that supports multi-connect but uses an Oracle-specific technology (in this case, dblinks) to move the data from the ETL hub to the target database.

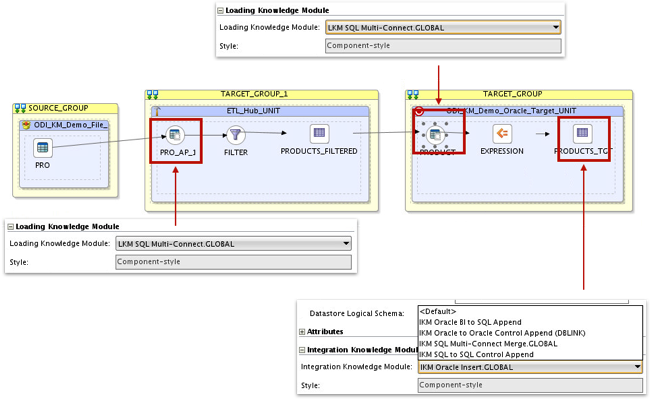

Or, if I didn’t like using dblinks or the ETL hub was on a non-Oracle platform, I could use the component-style LKM SQL Multi-Connect at the access point and then a mono-source component-style IKM to do the data integration. Note, however, that whenever we use generic JDBC connections and batch-extraction to bring data across to the target platform the data flows through the agent, which won’t be as efficient as using the multi-connect template-style KM that transfers data using SQL*Net and dblinks.
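The trade-off between the dblink route and the generic JDBC route can be written down as a small decision helper. This is purely a sketch of the reasoning above and not part of ODI or its SDK: the `DataServer` class, the `choose_transfer` function and the strings it returns are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataServer:
    """Hypothetical stand-in for an ODI data server definition."""
    name: str
    technology: str  # e.g. "ORACLE", "HIVE", "FILE"


def choose_transfer(staging, target, dblinks_allowed=True):
    """Pick how rows move from the staging server to the target server."""
    # Staging and target on the same data server: nothing to transfer.
    if staging.name == target.name:
        return "none"
    # Both ends are Oracle and dblinks are acceptable: move the data
    # database-to-database, without routing rows through the agent.
    if (
        staging.technology == "ORACLE"
        and target.technology == "ORACLE"
        and dblinks_allowed
    ):
        return "dblink"
    # Anything else falls back to generic JDBC, where rows flow through the agent.
    return "jdbc-via-agent"


etl_hub = DataServer("ETL_HUB", "ORACLE")
warehouse = DataServer("DWH", "ORACLE")
hive = DataServer("HADOOP", "HIVE")

print(choose_transfer(etl_hub, warehouse))         # Oracle to Oracle
print(choose_transfer(etl_hub, warehouse, False))  # dblinks not wanted
print(choose_transfer(hive, warehouse))            # non-Oracle staging
```

In practice the choice also depends on which KMs exist for the technologies involved, so treat this as a way to frame the decision rather than a rule ODI applies for you.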

So - a few thoughts on the new knowledge module setup in ODI12c, and what these new component KMs and multi-connect KMs are actually for. You can find more articles on ODI12c and data integration over on our blog, if you’re interested in reading more about ODI12c development and internals.