Using Streams with ODI12c for Oracle-to-Oracle Change Data Capture

Although Oracle GoldenGate replaced Oracle Streams a couple of years ago as the recommended data replication and change data capture technology for Oracle databases, many customers still on Oracle Database 11gR2 or earlier still use Streams for Oracle-to-Oracle change data capture as it works and compared to GoldenGate doesn’t require any additional licensing. Oracle’s GoldenGate Statement of Direction paper from 2014 states that streams in Oracle 11gR2 will continue to be supported but no future versions of the Oracle Database will come with Streams included, but if you’re on 11gR2 and you just want to trickle-feed capture between two Oracle databases it’s an interesting option.

I covered Oracle-to-Oracle data replication using Streams a few times in the past including this OTN article on ODI and Change Data Capture back from before 2007 or so, this article on OWB11gR2 and Change Data Capture from 2010 and once from back in 2006 that went into the details of setting up asynchronous hotlog change data capture with the new “Paris” OWB10gR2 release. We’re now on the 12c release of Oracle Data Integrator and I’m teaching our ODI12c Bootcamp course to a client next week who’s particularly interested in using Streams with ODI12c, so I thought it’d be worth taking a look at this feature in more detail to see if much has changed since the earlier articles.

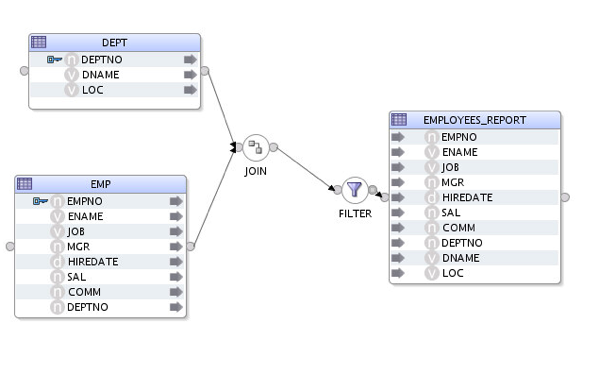

Let’s start then with an ODI12c 12.1.3 install with a regular mapping set-up to copy and join the DEPT and EMP tables from one Oracle database into a denormalized table in another Oracle Database. Both are Oracle Database 11gR2 (11.2.0.3) and the initial mapping looks like this:

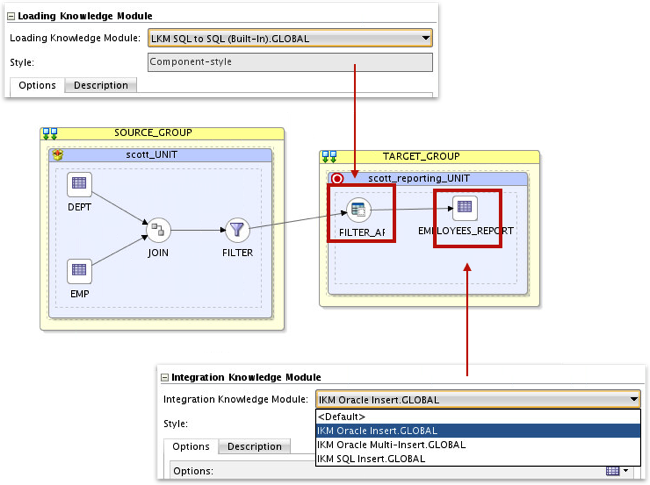

One thing that many ODI developers don’t know about the 12c release is that it comes with a set of “component-style” knowledge modules built-into the tool, which you can use straightaway to get a mapping running without having to select and import IKMs, LKMs and other KMs from the ODI Studio filesystem. In my case the Physical mapping looks like the screenshot below with two execution units (one for each Oracle Database server) and a number of built-in component-style KMs available for selection. I choose the LKM SQL to SQL (Built-in) load knowledge module which uses a generic JDBC connection to load source records into the staging table on the target server, and then the IKM Oracle Insert integration knowledge module to take that staging data and integrate it into the target table.

I then run this mapping and see that ODI extracted data from the source database using a Java routine and batch transfers into the Oracle staging table, and then integrated the contents of that staging table into the target Oracle table. I could of course improve this by using the LKM Oracle to Oracle (DBLink) knowledge module and thereby avoid loading in two steps, but what I’d instead like to do is use Oracle Streams to trickle-feed new and changed data from my source tables over to the target database server, as shown in the diagram below.

In the OWB and Asynchronous Change Data Capture article i linked to earlier in the post, setting up change data capture involved quite a few steps; the database had to be put into archivelog mode, the GLOBAL_NAMES parameter had to be set and a whole bunch of PL/SQL procedures had to be called to set up the source-to-target connection. Once it’s running, Streams takes transactions off of the redo log files on the source database and send them across the network to the target database server in a similar way to how GoldenGate sends transactions in the trail file across to target database servers - except it’s Oracle-to-Oracle only and in my experience is a lot more fragile than GoldenGate, which is why we and most other customers switched to GoldenGate when it came out.

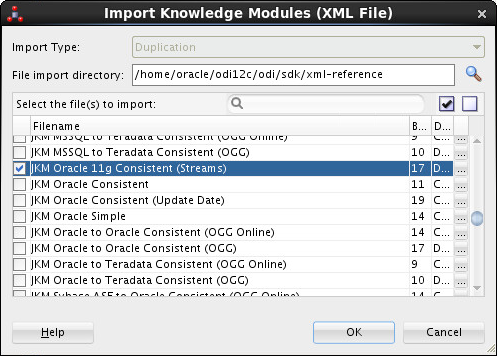

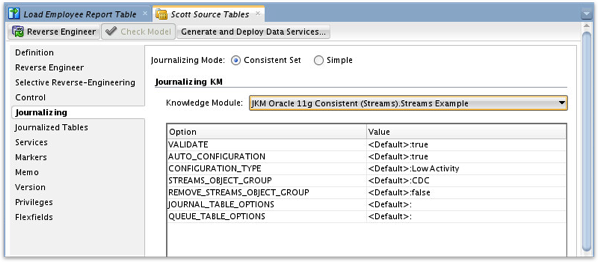

ODI12c comes with a number of change data capture or “journalizing” knowledge modules that use either database triggers, UPDATE_DATE fields, Oracle Streams or GoldenGate to replicate data from source system to the target data warehouse. The journalizing knowledge module we’ll use, JKM Oracle 11g Consistent (Streams) is a template-style KM that needs to be imported first from the filesystem where ODI Studio was installed, as shown in the screenshot below - note also when you do this yourself that there’s a big “deprecated” notice next to it, saying that it could be removed at any time (presumably in-favour of the GoldenGate-based ones)

ODI and the JKM Oracle 11g Consistent (Streams) KM takes a more “fck it, let’s just to it” approach to database configuration than my OWB10gR2 version did, automatically configuring the source database for Streams by running all of the setup PL/SQL routines leaving you just to put the database in archivelog mode if it’s not already, and granting the connecting user (in my case, SYSTEM) streams administrator privileges. Moving over to SQLPlus on the source database I therefore run the setup commands listed in the KM notes like this:

SQL*Plus: Release 11.2.0.3.0 Production on Sun Jul 26 06:34:44 2015

Copyright (c) 1982, 2011, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> grant dba to system;

Grant succeeded.

SQL> begin

2 dbms_streams_auth.grant_admin_privilege(

3 grantee => 'system',

4 grant_privileges => true);

5 end;

6 /

PL/SQL procedure successfully completed.

SQL> shutdown immediate;

Database closed.

Database dismounted.

ORACLE instance shut down.

SQL> startup mount;

ORACLE instance started.

Total System Global Area 2471931904 bytes

Fixed Size 2230872 bytes

Variable Size 570426792 bytes

Database Buffers1895825408 bytes

Redo Buffers 3448832 bytes

Database mounted.

SQL> alter database archivelog;

Database altered.

SQL> alter database open;

Database altered.

Next I’ll go back to ODI Studio and enable the source Model for journalising by double-clicking on the model in ODI Studio and then selecting the JKM from the Journalizing tab, like this:

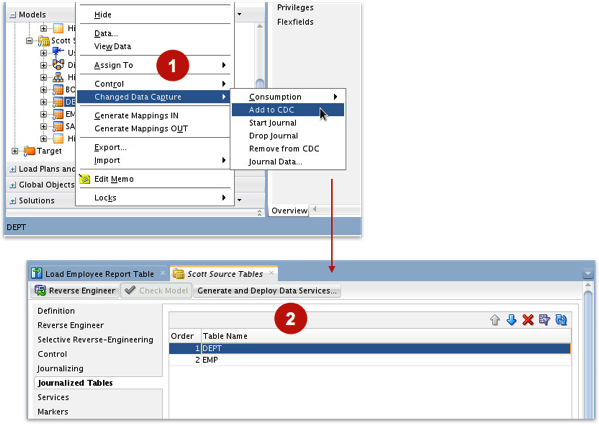

I then right-click on the EMP and DEPT tables within the source model and select Changed Data Capture > Add to CDC, where I can fine-tune the replication order so that new departments (DEPTNO) employees link to will always have been created before the employee record hits.

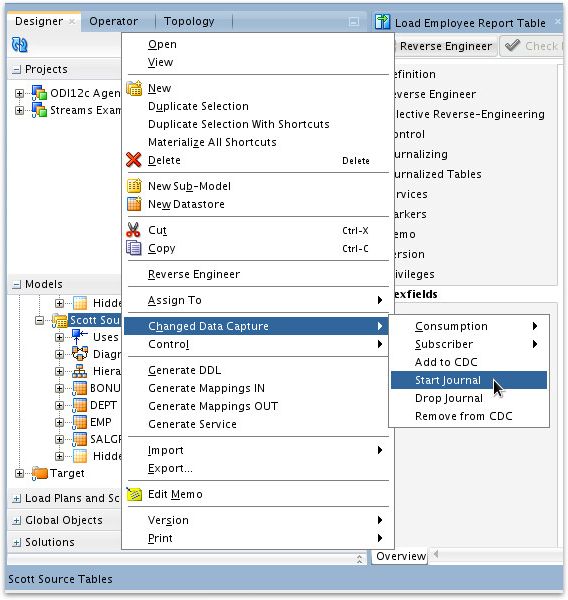

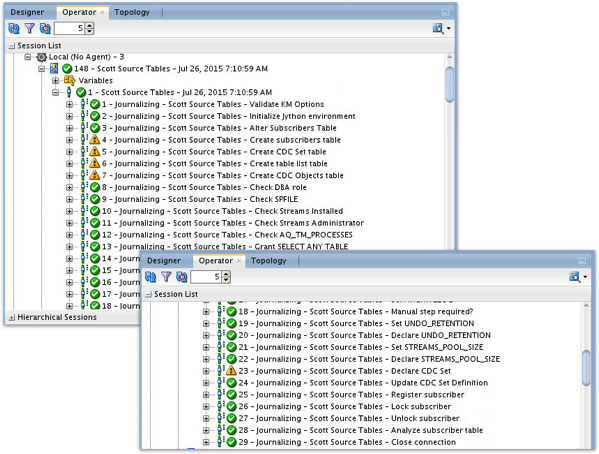

It’s at this next stage, when I enable journalizing, that Streams is set-up on the source and target database servers and all the supporting tables and views are created. To enable journalizing I click on the model, not the individual tables, and select Changed Data Capture > Start Journal, like this:

If you’ve read any of our previous posts on ODI and changed data capture you’ll realise that this setup process is that same regardless of the underlying replication technology, which makes it easy to start with database-centric CDC technologies such as this and then move to GoldenGate later on without lots of rework or re-training. For now though lets run this setup process using the local agent and then check the Operator navigator to see what it did (and whether it worked…)

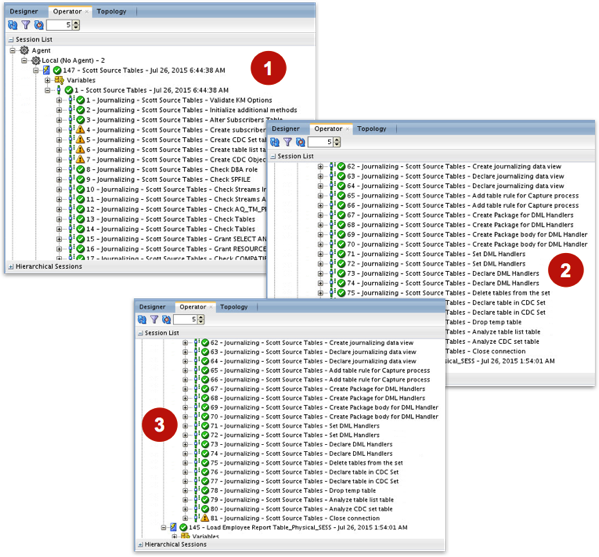

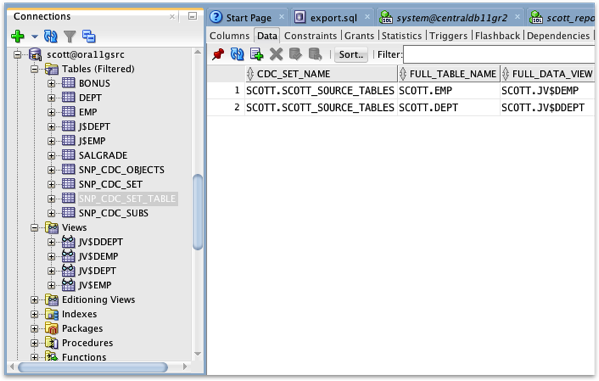

And it did work. Enabling journalising with the Oracle Streams JKM involves quite a few setup steps including checking that all the database settings and parameters are enabled correctly, then running the various DBMS_STREAMS and other packages to setup the capture and transmission process. Then, as with all of the ODI JKMs a set of J$ tables are created to hold the primary keys of new and changed records coming from the source system, along with JV$ views that join those primary keys to the full incoming replicated rows - this blog post by the Oracle ODI team explains the background to JKMs very well if you want to understand them in more detail. Looking at the source SCOTT schema in SQL*Developer I can see the CDC and J$/JV$ tables and views created in the schema; if I didn’t want these tables created in the actual data schema I could have specified a different schema as the WORK schema when I created the database connection in ODI Studio prior to this exercise.

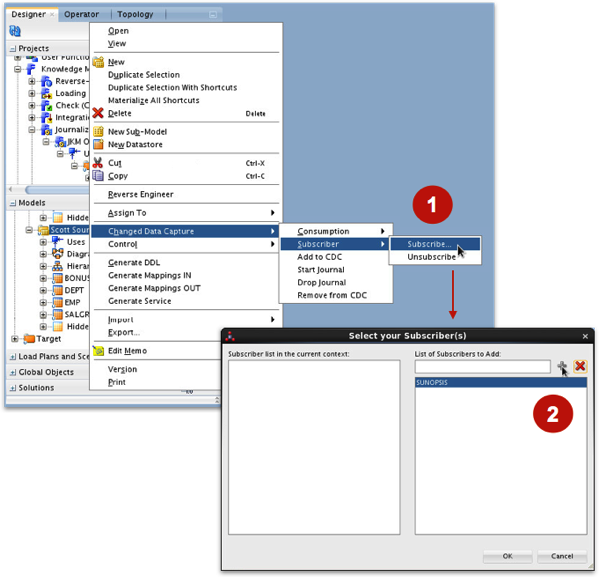

Next I have to define one or more “subscribers’ to the journals; for these more advanced “consistent set JKMs” of which the Oracle Streams one is one, you can define multiple consumers or “subscribers” to the changed data so that one can be further down the queue than the other (Simple JKMs only allow a single subscriber). I call my subscriber “SUNOPSIS” as this is the default subscriber name ODI adds to the mappings downstream.

Pressing OK after adding the subscriber again brings up the prompt to select an agent, and going over to the Operator navigator I can see that another set of steps have run, again doing some streams setup but also adding details of the journal subscribers to the tables created on the source database.

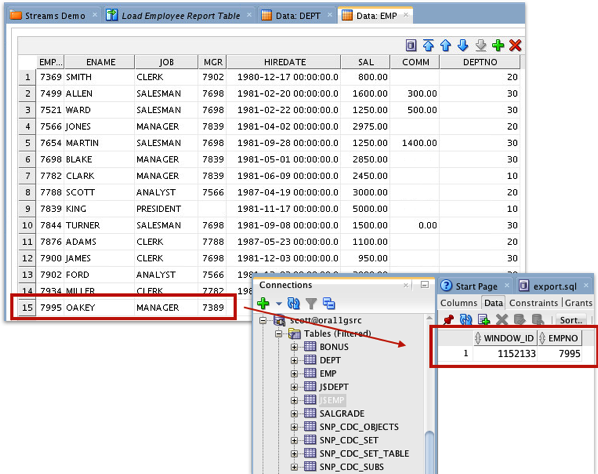

I can check that journalising is now working by using the View Data… feature in the Designer navigator to insert new rows into the EMP and DEPT tables, and then checking the J$ tables in the source database schema to see if the rows’ primary keys have been added - which they have been.

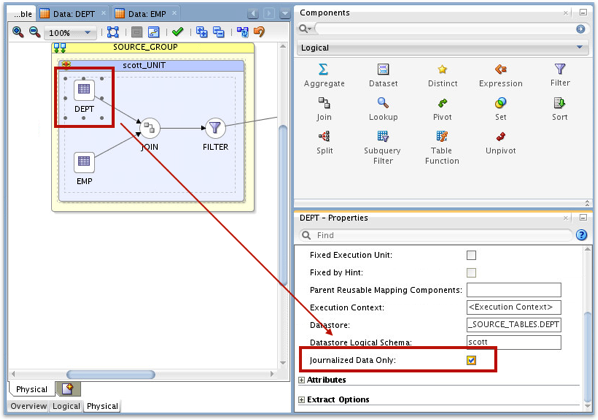

To now just read journalised new and changed data into my mapping, all I do then is go to the Physical mapping diagram, select the first source table and check the Use Journalized Data Only checkbox, then do the same for the other table (note it is the table source you select, not the access point for that table into the target execution block).

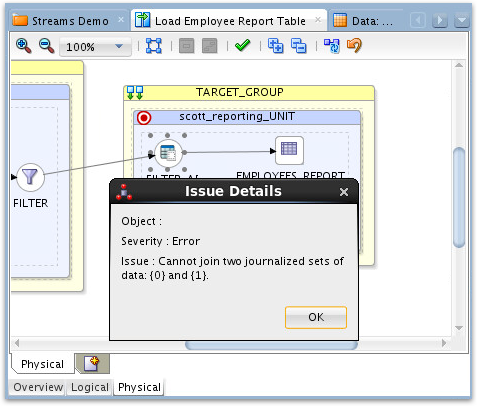

So now I’ll run the mapping and check the results in the Operator navigator … but instead, I get an error:

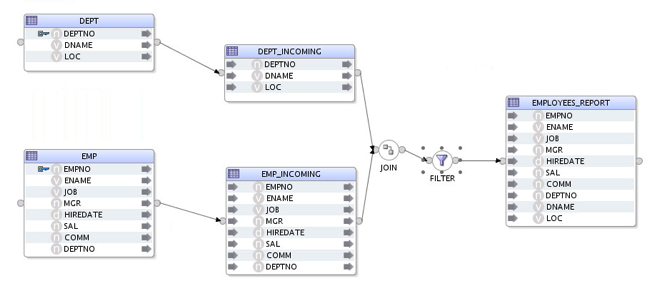

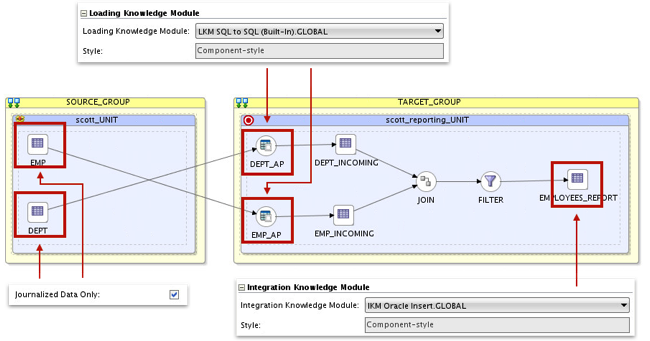

This is because, given the way that ODI handles CDC and journalising, it can’t allow two journalised tables to be directly joined in a mapping - we think this is because ODI can’t guarantee both tables are in the same update “state” and therefore makes you copy their data into a staging or intermediary table before you can do the join. I therefore amend the mapping to load the journalised tables into staging tables on the target database server, amend the joins and filter to reference the staging tables, and then join their contents to then filter and load into the target reporting table, like this:

With the Physical mapping details, the two incoming Oracle staging tables are loaded by the same LKM SQL to SQL component-style mapping as we used before to extract data from the two journalised tables, and the Journalized Data Only flag is still set for the source tables, as you can see below.

What this also highlights is a key difference between the way I trickle-fed transactions across from my source database back with the OWB and Changed Data Capture article back at the start of this post, and the way ODI’s JKMs do it; in the OWB example I set up an actual trickle-feed process outside of OWB which transferred changed data across the network to my target data warehouse, whereapon I then read those change tables and used them to update the target DW tables in my data warehouse.

And this is actually how the GoldenGate KMs work with ODI - a GoldenGate replication process copies new and changed data from the source database to the target and ODI then reads from these change tables, whereas the Streams (and other non-GoldenGate) JKMs create the change capture tables back on the source database (the various J$ and JV$ tables I reviewed using SQL*Developer earlier on), with ODI then reading from those remote change tables and bringing across the new and changed data to the target database server. This I guess makes things easier to set up - you don’t have to worry about configuring the target database for streams replication - but it does mean that you still incur the network traffic every-time you micro-batch the changes across the network rather than spreading that traffic transaction-by-transaction.

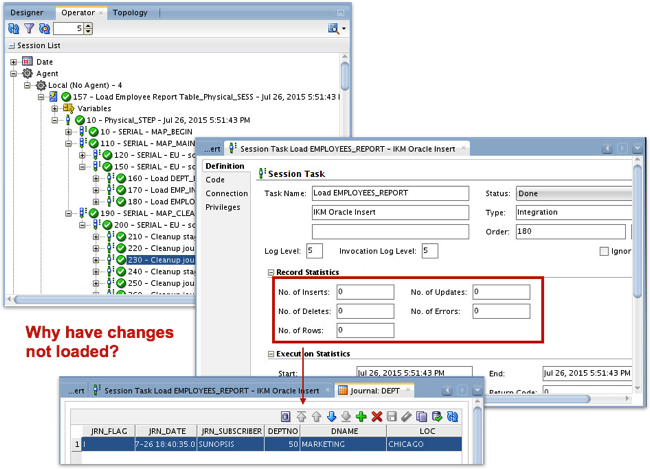

Anyway, back to ODI and this time, when I run the mapping it works, though looking at the Operator navigator again I can see that no new data came across, and the journal data is still waiting to be consumed afterwards. Why is this?

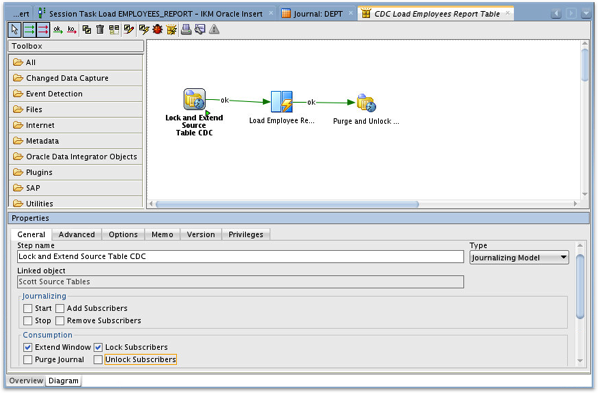

If you’ve only used the Simple CDC JKMs from Oracle before this is normally all you need to do, but with Consistent Set ones such as this one, or the GoldenGate JKMs, you need to lock the subscriber view of the journalised data and extend the CDC window before you can access the journal records; for the Simple JKMs the IKM (Integration Knowledge Module) takes care of the unlock, extend, purge and lock operations for you automatically in the background, whereas with Consistent Set ones you typically do this as part of a wider ODI package as shown in the screenshot below, with the first and last tasks created by dragging and dropping the journalized model onto the package canvas and selecting Journalizing Model as the type (the subscriber name, “SUNOPSIS” in this case is typed-in below those settings and is off-screen in the screenshot)

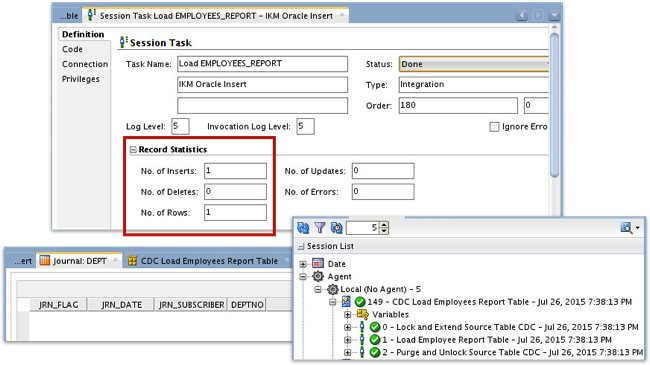

Now when I run this package, as opposed to the mapping on its own, I get the row of new data I was expecting and the journal table is now empty, as I was the only subscriber.

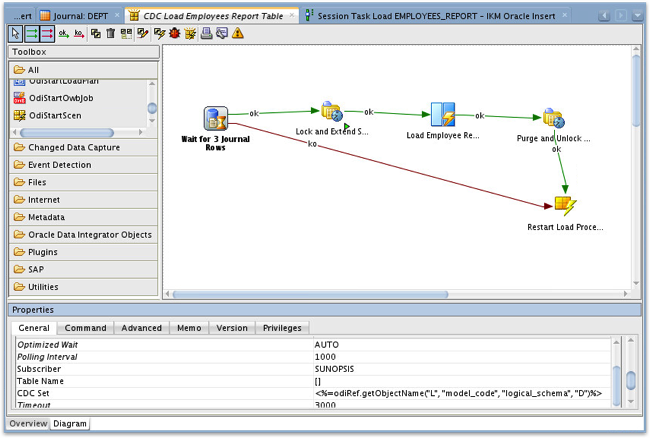

Finally, if I was looking for real-time continuous loading into this target table, I could wrap the package in an event-detection loop that waits for in this case ten seconds for three journal rows to be written, then either processes the three as soon as the third arrives or loops around again every ten seconds (obviously in-reality you’d want to put in a mechanism to halt the loop if needed, but in my case I’ll just kill the job from the Operator navigator when I want it to stop)

So that’s the basics of using ODI with Oracle Streams for Oracle-to-Oracle changed data capture; if you’re just copying data between two Oracle databases and you’re on 11gR2 or earlier this might be an option, but long-term you’ll need to think about GoldenGate as Oracle aren’t developing Streams beyond the 11gR2 release. Note also that all the streams activity happens over on the source database server so you still need this additional step to copy the journaled data across to the target data warehouse, but it’s still a fairly non-invasive way to capture changes on the source Oracle database and it does have the considerable advantage (compared to GoldenGate) of being free-to-use.