Oracle Exalytics, Oracle R Enterprise and Endeca Part 3 : Flight Delays Analysis using OBIEE, Endeca and Oracle R Enterprise on Exalytics

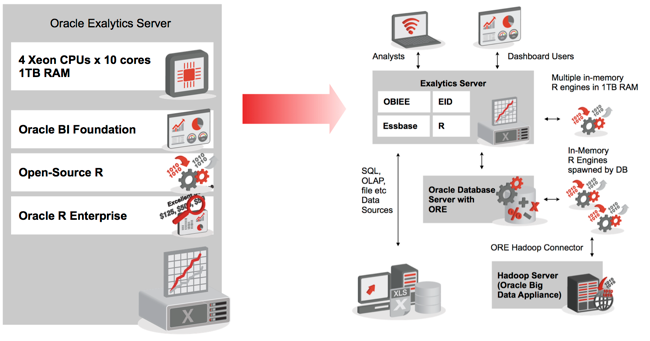

So far in this series we've looked at Oracle's BI, Big Data and Advanced Analytics strategy and seen how Exalytics can benefit both Endeca Information Discovery and Oracle R Enterprise through its 40 CPU cores and 1TB of RAM. For a recap on the two earlier postings in this series the links are provided below, and in this final posting we'll look at an example where all three tools are brought together on the Exalytics platform to provide different types of "big data" analysis, with big data defined as data of high volume, high variety and high velocity:

- Oracle Exalytics, Oracle R Enterprise and Endeca Part 1 : Oracle's Analytics, Engineered Systems, and Big Data Strategy

- Oracle Exalytics, Oracle R Enterprise and Endeca Part 2 : Oracle Endeca, the Advanced Analytics Option and Oracle Exalytics

- Oracle Exalytics, Oracle R Enterprise and Endeca Part 3 : Flight Delays Analysis using OBIEE, Endeca and Oracle R Enterprise on Exalytics

In this example, the big data bit is slightly tenuous as the data we're using is transactional data (numbers of flights, numbers of cancellations etc) accompanied by some unstructured/semi-structured data (reasons for flight delays). There's no sensor data, and no data arriving at high velocity, but the dataset is large (around 123m rows) and diverse (sort of), and Oracle R Enterprise could easily connect to a Hadoop cluster via the Oracle R Connector for Hadoop if required. All of this could also run on a regular server, but if it's hosted on Exalytics then we additionally benefit from the 1TB of RAM and 40 cores that this hardware can bring to bear on the analysis, with the R client running on Exalytics along with Endeca Information Discovery, and the Exalytics server then connecting via its two InfiniBand ports to an Exadata server or an Oracle Big Data Appliance, or to other data sources via its 10Gb and 1Gb Ethernet interfaces.

The flight delays dataset is a fairly well-known set of public data made available by the Bureau of Transportation Statistics, Research and Innovative Technology Administration within the United States Department of Transportation, and in its full incarnation contains 123m rows of non-stop US domestic flight legs. For each flight leg it contains the source and destination airports, operator, and aircraft type, whilst for delays it holds the type and duration of delay, the delay reason and other supporting numeric and textual information. If you've seen the standard Exalytics Airlines demo, this is the dataset it uses, but it can also be used by Endeca and Oracle R Enterprise, as we'll see in this post.

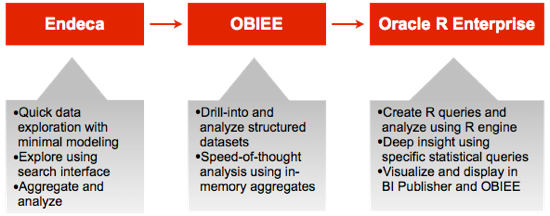

So given the three Exalytics tools we'll be using for this example (OBIEE, Endeca Information Discovery and Oracle R Enterprise), at a high level what is each tool good at? A good starting point would be to say:

- OBIEE is good for dashboard analysis of structured data (numeric + attribute with a clearly-defined data model), together with ad-hoc analysis, scorecards and other traditional BI-type analysis

- Oracle Endeca Information Discovery is good for the initial exploration and discovery of the data set, allowing us to quickly bring in disparate structured and unstructured data and then aggregate and analyse it, usually as the precursor to more structured dashboard analysis using OBIEE

- R, and Oracle R Enterprise, is good at providing deep insight into specific questions, such as "are some airports more prone to delays than others", and "for American Airlines, how has the distribution of delays for departures and arrivals evolved over time?"

If we take this model of Endeca first to initially discover the full dataset, then OBIEE for answers to the questions we've now defined, and R/ORE to dig deeper into specific topics, our BI approach on Exalytics would look something like this:

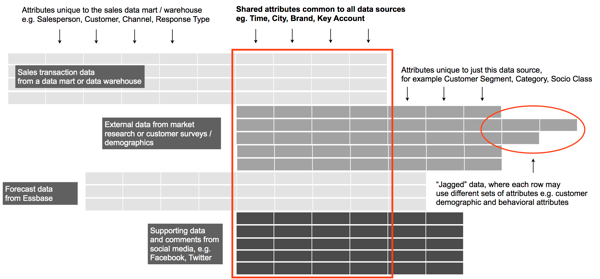

So let's start now with the Endeca element. If you read my series of postings on Endeca just after the Oracle acquisition, you'll have read how one of the main use-cases for Endeca Latitude and the MDEX engine (now known as Oracle Endeca Information Discovery and the Endeca Server, respectively) was in situations where you had a whole range of potentially interesting data that you wanted to load up and quickly analyse, but you didn't want to spend an inordinate amount of time creating a conformed dimensional data model. Instead, the key-value pair database loads data up as records, each of which contains a number of attributes that effectively carry their own schema. What you often end up with is what Endeca termed a "jagged database", where each record has at least one attribute in common with the others (typically more than one, as shown in the diagram below), but records that originate from different source systems or database tables may have different attribute sets from each other, or even from other records in the same dataset. The net effect is that upfront data modelling is minimised, and you don't need to reject incoming data just because it doesn't fit into your conformed data model. The diagram below shows a conceptual view of such an Endeca Server datastore: the first incoming set of rows contains sales transaction data made up of dimension IDs and some attributes unique to sales data, while the next set of rows contains market research information that shares some key values with the previous dataset but also has its own unique attributes, which may or may not be present in all of its records.

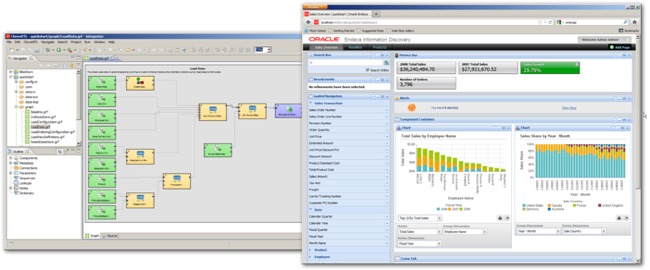
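To make the "jagged" idea a little more concrete, here is a minimal Python sketch of records that each carry their own set of attributes. It is only an illustration of the concept, not how the Endeca Server stores data internally; the attribute names (`product_id`, `sale_amount`, `survey_score` and so on) and the helper functions are hypothetical.

```python
from collections import Counter

# Hypothetical records: each one is a bag of attribute/value pairs with no fixed schema.
# The first two look like sales transactions, the last two like market research rows.
records = [
    {"product_id": 101, "store_id": 7, "sale_amount": 250.0, "sale_date": "2012-03-01"},
    {"product_id": 102, "store_id": 7, "sale_amount": 80.0},
    {"product_id": 101, "survey_score": 4, "comment": "Good value"},
    {"product_id": 102, "survey_score": 2},
]


def attribute_coverage(recs):
    """Count how many records carry each attribute."""
    return Counter(attr for rec in recs for attr in rec)


def shared_attributes(recs):
    """Attributes present in every record (the keys that tie the sources together)."""
    return set.intersection(*(set(rec) for rec in recs))


def filter_records(recs, **criteria):
    """Keep records matching all criteria; a record lacking an attribute simply doesn't match."""
    return [r for r in recs if all(r.get(k) == v for k, v in criteria.items())]


coverage = attribute_coverage(records)
common = shared_attributes(records)
product_101 = filter_records(records, product_id=101)

print("Attribute coverage:", dict(coverage))
print("Shared by all records:", common)
print("Records for product 101:", product_101)
```

Nothing is rejected at load time here: the sales and survey rows sit side by side, and it is only when you filter or aggregate that the differing attribute sets matter.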

Endeca Server datastores (as their databases are called) are created and loaded via web service calls, typically constructed using Endeca Information Discovery Integrator, an ETL tool built on the Eclipse/CloverETL open-source platform and enhanced with specific components for Endeca Server administration. Once the datastore is loaded, the front-end dashboard application is created using Endeca Information Discovery Studio, with the two GUI tools looking as in the screenshots below. For more details of the Endeca Information Discovery development process, see this series of postings that I put together earlier in the year, where I go through an end-to-end development process using the Quickstart/Bikestore Endeca demo dataset, and the set of videos on YouTube that takes you through the process with narrative explaining what's going on.

Where the Endeca Server differentiates itself from OBIEE's BI Server and its traditional RDBMS sources, and Essbase and other multi-dimensional OLAP servers, is that it's got a bunch of features and capabilities for analysing textual data and extracting meaning, sentiment and other semantics from it. Using Integrator or direct web service calls to the Endeca Server, incoming unstructured data can be analysed using features such as:

- Keyword search, boolean search, parametric search, wildcard search, dimension search and dimension filters

- Dimension precedence rules

- Numeric range, geospatial, date/time and security filters

- Spell correction/suggestion, and “do you mean”-type alternative presentation

- Find similar, and 1 and 2-way synonyms

- Stemming and lemmatisation

- Keyword-in-context snippeting

- Results clustering, relevance ranking, sorting and paging

- Support for multiple languages

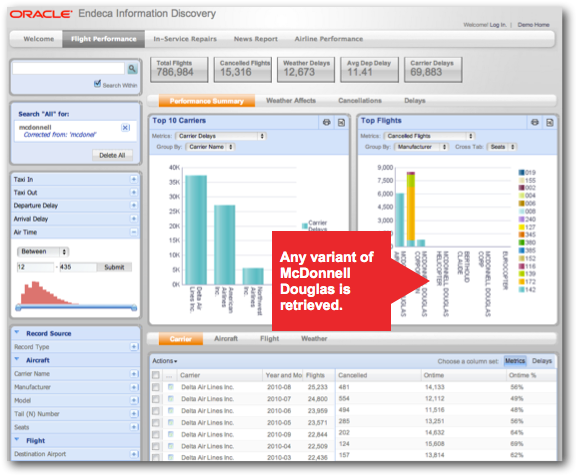

So what do Endeca Information Discovery dashboards look like once they're created, and connected to a suitable Endeca Server datastore? In the example of the flight delays data we're using across the various tools, there are a number of unique features that EID brings to the dataset, starting with the first dashboard we'll look at, below.

The flight delays dataset contains lots of free-form text, so that there are, for example, many different mis-spellings of McDonnell Douglas, an aircraft manufacturer. After being loaded into the Endeca Server datastore and then processed using the Endeca Server's document analysis capabilities, cleaned-up and standardised versions of these mis-spellings are used to populate a manufacturer attribute that groups all of them together, for easy analysis.
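As a rough illustration of the kind of clean-up described above, the following Python sketch groups free-form manufacturer values under a standardised name using fuzzy string matching from the standard library's `difflib` module. This is a stand-in technique chosen for illustration and is not how the Endeca Server's document analysis works; the canonical list, the sample values and the `standardise` helper are all assumptions.

```python
import difflib
from collections import defaultdict

# Hypothetical list of standardised manufacturer names.
CANONICAL = ["McDonnell Douglas", "Boeing", "Airbus"]


def standardise(raw, canonical=CANONICAL, cutoff=0.7):
    """Map a free-form value to its closest canonical name, or return it unchanged."""
    lookup = {name.lower(): name for name in canonical}
    cleaned = raw.strip()
    matches = difflib.get_close_matches(cleaned.lower(), list(lookup), n=1, cutoff=cutoff)
    return lookup[matches[0]] if matches else cleaned


# Hypothetical raw values as they might appear in free-form text fields.
raw_values = [
    "McDonnell Douglas", "MCDONNELL DOUGLAS", "Mcdonnel Douglas",
    "McDonell-Douglas", "Boeing", "Boing", "Cessna",
]

# Group the raw spellings under the standardised manufacturer attribute.
grouped = defaultdict(list)
for value in raw_values:
    grouped[standardise(value)].append(value)

for manufacturer, spellings in grouped.items():
    print(manufacturer, "<-", spellings)
```

Values with no sufficiently close canonical match (such as "Cessna" here) are left as they are rather than being forced into the wrong group; the `cutoff` threshold controls how aggressive the matching is.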

I mentioned earlier that one of the main uses of Endeca Information Discovery is searching across the entire dataset to find attributes and records of interest, which will then form the focus of the more structured data model that we'll then use as a data source for OBIEE. In the screenshot below, the Value Search feature is initially used to display all occurrences of the typed-in value in all attributes in the recordset, with the search highlighting attributes as the search term is typed in. In addition, what's termed a record search can then be performed that takes an attribute value and uses it to filter the displayed set of records based on groups of attributes called "search interfaces". As the set of records is narrowed down by the record search, graphs and other visuals on the dashboard page immediately show metric numbers aggregated for this record set, showing the dual search/analytic capabilities of the Endeca Server. When run on the Exalytics platform, all of this potentially takes place much quicker as the Endeca Server can parallelise search operations as well as any indexing that needs to take place in the datastore. The 1TB of RAM on the server can also be useful as the Endeca Server will try and keep as much of the analysis dataset in memory as is possible, with the disk-based column store database more there as a persistence store.

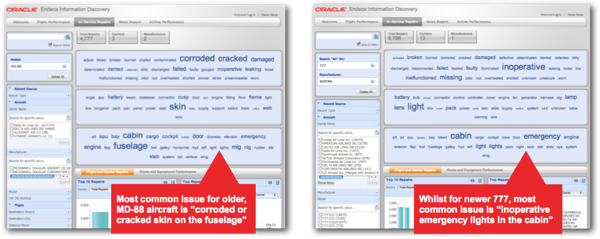
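The difference between a value search and a record search can be sketched in a few lines of Python. This is a conceptual illustration only, assuming a tiny in-memory list of records; the attribute names, the `aircraft_info` search interface and the helper functions are hypothetical, and the real Endeca Server does this against its indexed column store rather than by scanning a list.

```python
# Hypothetical flight-delay records; attribute names are illustrative only.
records = [
    {"carrier": "Delta", "aircraft": "MD-88", "origin": "ATL",
     "delay_reason": "hydraulic leak", "delay_minutes": 45},
    {"carrier": "Delta", "aircraft": "Boeing 777", "origin": "ATL",
     "delay_reason": "cabin light inoperative", "delay_minutes": 20},
    {"carrier": "American", "aircraft": "MD-88", "origin": "DFW",
     "delay_reason": "delta in fuel load paperwork", "delay_minutes": 15},
]

# A "search interface" is modelled here as a named group of attributes.
SEARCH_INTERFACES = {"aircraft_info": ["aircraft", "delay_reason"]}


def value_search(recs, term):
    """Return {attribute: matching values} for every text attribute containing the term."""
    term = term.lower()
    hits = {}
    for rec in recs:
        for attr, value in rec.items():
            if isinstance(value, str) and term in value.lower():
                hits.setdefault(attr, set()).add(value)
    return {attr: sorted(values) for attr, values in hits.items()}


def record_search(recs, term, interface):
    """Keep records where any attribute in the search interface contains the term."""
    term = term.lower()
    attrs = SEARCH_INTERFACES[interface]
    return [r for r in recs if any(term in str(r.get(a, "")).lower() for a in attrs)]


def total_delay(recs):
    """Aggregate a metric over whatever record set is currently selected."""
    return sum(r["delay_minutes"] for r in recs)


value_hits = value_search(records, "delta")           # which attributes mention "delta"?
md88 = record_search(records, "md-88", "aircraft_info")  # narrow the record set
md88_delay = total_delay(md88)                        # metrics follow the narrowed set

print(value_hits)
print(len(md88), "records,", md88_delay, "delay minutes")
```

The value search reports where a term occurs across all attributes, while the record search narrows the working set of records, and any metrics are then recalculated over that narrower set.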

Finally, the text search and analysis features in the Endeca Server are useful for pulling out themes and sentiments from the incoming data; in the screenshot below, we can see that MD-88 aircraft are typically involved in delays that are down to the age of the aircraft, whilst delays involving the newer Boeing 777 are more often down to issues such as lights not working, crew areas not being serviceable and so on.

Armed with all of this information and a subsequent better understanding of the data available to us, we can now start thinking about a more structured data model for use with OBIEE.

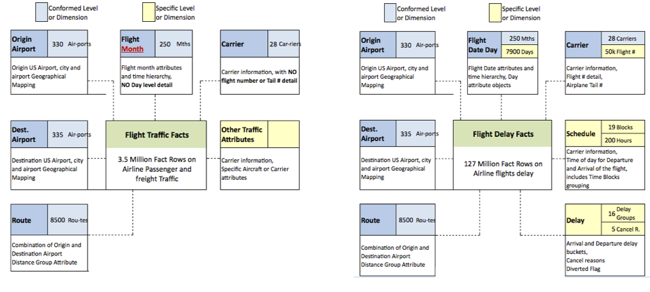

The flight delays dataset, once you look into it in more detail, really contains two main star schemas we're interested in. One is based around a flight leg fact dimensioned by carrier, flight month, origin and destination airport, route and so forth; the other is based around the actual flight delays, sharing some of these dimensions but also adding the reason for the delay, as in the diagram below:

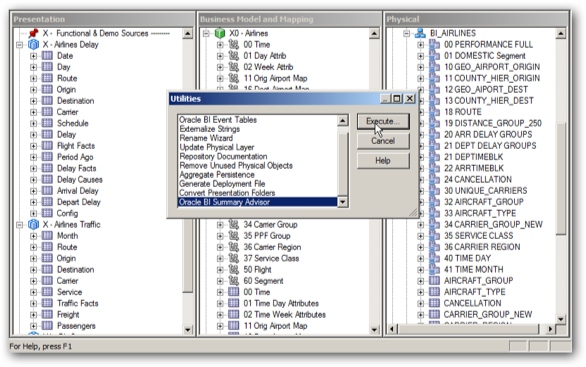
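As a rough sketch of what these two star schemas might look like, the Python below builds a much-simplified version in an in-memory SQLite database and runs one aggregate query against each fact table. All table names, column names and sample rows are hypothetical and heavily cut down; the real model would have more dimensions (route, aircraft type and so on) and would live in the Oracle Database rather than SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimensions shared by both facts (names are illustrative only)
CREATE TABLE dim_carrier (carrier_id INTEGER PRIMARY KEY, carrier_name TEXT);
CREATE TABLE dim_airport (airport_id INTEGER PRIMARY KEY, airport_code TEXT);
-- Dimension used only by the delay fact
CREATE TABLE dim_delay_reason (reason_id INTEGER PRIMARY KEY, reason TEXT);

-- Fact 1: flight legs
CREATE TABLE fact_flight_leg (
    carrier_id    INTEGER REFERENCES dim_carrier(carrier_id),
    origin_id     INTEGER REFERENCES dim_airport(airport_id),
    dest_id       INTEGER REFERENCES dim_airport(airport_id),
    flight_month  TEXT,
    flights       INTEGER,
    cancellations INTEGER
);

-- Fact 2: flight delays, sharing carrier and airport, adding delay reason
CREATE TABLE fact_flight_delay (
    carrier_id    INTEGER REFERENCES dim_carrier(carrier_id),
    origin_id     INTEGER REFERENCES dim_airport(airport_id),
    reason_id     INTEGER REFERENCES dim_delay_reason(reason_id),
    flight_month  TEXT,
    delay_minutes INTEGER
);

INSERT INTO dim_carrier VALUES (1, 'American Airlines'), (2, 'Delta');
INSERT INTO dim_airport VALUES (1, 'DFW'), (2, 'ATL');
INSERT INTO dim_delay_reason VALUES (1, 'Weather'), (2, 'Maintenance');
INSERT INTO fact_flight_leg VALUES
    (1, 1, 2, '2008-01', 120, 3),
    (2, 2, 1, '2008-01', 150, 1);
INSERT INTO fact_flight_delay VALUES
    (1, 1, 1, '2008-01', 40),
    (1, 1, 2, '2008-01', 25),
    (2, 2, 2, '2008-01', 10);
""")

# One query per star: flights by carrier, and delay minutes by carrier and reason.
flights_by_carrier = conn.execute("""
    SELECT c.carrier_name, SUM(f.flights)
    FROM fact_flight_leg f
    JOIN dim_carrier c ON c.carrier_id = f.carrier_id
    GROUP BY c.carrier_name
    ORDER BY c.carrier_name
""").fetchall()

delay_by_reason = conn.execute("""
    SELECT c.carrier_name, r.reason, SUM(d.delay_minutes)
    FROM fact_flight_delay d
    JOIN dim_carrier c ON c.carrier_id = d.carrier_id
    JOIN dim_delay_reason r ON r.reason_id = d.reason_id
    GROUP BY c.carrier_name, r.reason
    ORDER BY c.carrier_name, r.reason
""").fetchall()

print(flights_by_carrier)
print(delay_by_reason)
```

Because the carrier and airport dimensions are shared (conformed) between the two facts, the same dimension attributes can be used to slice either set of measures.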

This dimensional model would then map fairly easily into an Oracle BI Repository semantic model, with a single data source and business model and with two subject areas, one for each star schema. As we're running on Exalytics though, we can then generate some aggregate recommendations, based either on database optimiser statistics (if the system is otherwise unused by end-users), or on actual query patterns taken from the usage tracking and summary statistics tables maintained by the BI Server. To generate these recommendations and then create a script for their implementation, you'd use the Oracle BI Summary Advisor, which is only available on Exalytics systems.

Full details on what happens when you use the Summary Advisor are in this previous blog post and my article on the topic for Oracle Magazine, but once you've generated your aggregates and created your dashboards and analyses, your dashboards would look something like the screenshots below. Note that whilst these examples are focusing on Exalytics, a cut-down version of the Flight Delays data, along with dashboards and analyses, is available as part of SampleApp v207, along with the R dashboards that we'll see later on.

What OBIEE does well here is display, in a very rich graphical form, lots of aggregated data with supporting attributes to enable slice-and-dice, analysis, KPIs, scorecards and maps. When run on Exalytics, all of the prompts have their "Apply" buttons removed so that changes in parameter values are reflected in the dashboard immediately, whilst the TimesTen in-memory database ensures that response-times are within the sub-second range, even when the underlying dataset has millions of detail-level rows within it.

So now on to R, and Oracle R Enterprise. R is typically used to answer more in-depth, focused questions using more advanced statistical functions than you'd get in regular SQL, such as:

- Are some airports more prone to delays than others? Are some days of the week likely to see fewer delays than others? And are these differences significant?

- How do arrival delay distributions differ for the best and worst 3 airlines compared to the industry? Moreover, are there significant differences among airlines?

- For American Airlines, how has the distribution of delays for departures and arrivals evolved over time?

- How do average annual arrival delays compare across select airlines, and what is the underlying trend for each airline?

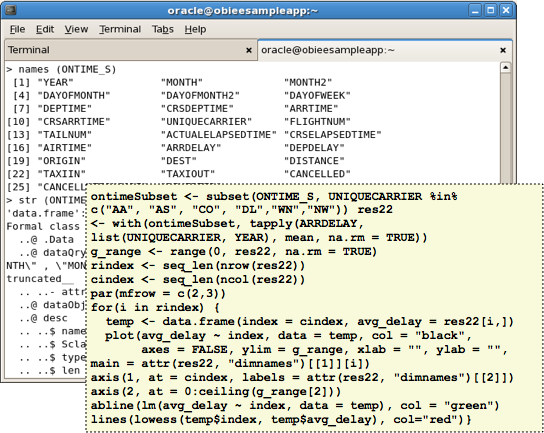
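To give a feel for what "are these differences significant?" means in practice, here is a small sketch of a two-sample permutation test on the difference in mean delay between two airports. It is written in Python using only the standard library rather than in R, the delay figures are made up for illustration, and a permutation test is just one reasonable choice of test, not necessarily what the ORE examples use.

```python
import random
from statistics import mean


def permutation_test(a, b, n_permutations=2000, seed=42):
    """Two-sided permutation test on the difference in means.

    Returns an estimated p-value: the share of random relabellings whose
    absolute mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_permutations + 1)


# Hypothetical departure delays (minutes) sampled at two airports.
airport_a = [5, 7, 6, 8, 5, 9, 6, 7]
airport_b = [20, 25, 22, 30, 19, 27, 24, 21]

p_value = permutation_test(airport_a, airport_b)
print(f"Mean delay A: {mean(airport_a):.1f}, B: {mean(airport_b):.1f}, p = {p_value:.4f}")
```

A small p-value suggests the gap between the two airports is unlikely to be down to chance alone; with samples that overlap heavily the p-value would be much closer to 1.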

To analyse the airlines dataset using R, luckily enough a cut-down version of the dataset (ONTIME_S) comes pre-installed with Oracle R Enterprise; ONTIME_S is also described in this Oracle R Enterprise Blog post, where you can see examples of R functions being used on the dataset. To work with the flight delays dataset, you'd go through a process of creating "frames" within ORE using data from the Oracle Database, and then create R scripts to manipulate the dataset and provide answers to your questions. Teaching R is outside the scope of this posting, but the screenshots below show the ONTIME_S dataset being loaded up in the R client that's included in SampleApp v207, along with an R script that provides one of the analyses used in the dashboard I'll show in a moment.

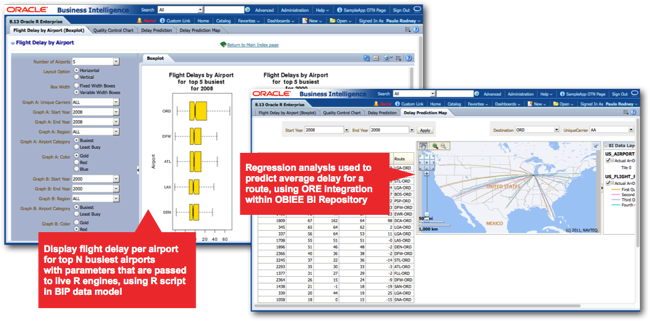

Scripts created using R can be utilised within Oracle BI in a couple of main ways. R scripts stored within the Oracle database using ORE can be referenced directly using BI Publisher, with R's XML output then being used to create an image that can be displayed using RTF templates; alternatively, you can reference R scripts held within ORE directly within OBIEE's BI Repository, as PL/SQL functions similar to regular ones such as AVG, LAG/LEAD and REGEXP (with details explained in a training PDF on Operationalizing R Scripts on the Oracle website). The OBIEE SampleApp v207 comes with a set of dashboards that show how both types of output might look, with the dashboard page on the left displaying an embedded, parameterised BI Publisher report showing flight delays per airport calculated live by R engines on the Exalytics server. The dashboard page on the right, by contrast, shows a regression analysis calculated using functions referenced in the BI Repository RPD, displaying the output as both a table and an interactive map.
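For readers who haven't come across it, the sort of regression behind a per-airline trend can be sketched as an ordinary least-squares fit of average annual arrival delay against year. The Python below is an illustrative stand-in for what the R scripts would compute, not the SampleApp code itself; the airline names and delay figures are invented, and `linear_trend` is a hypothetical helper.

```python
def linear_trend(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x; returns (slope, intercept)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical average annual arrival delays (minutes) per airline.
years = [2004, 2005, 2006, 2007, 2008]
avg_arrival_delay = {
    "Airline A": [8.0, 8.5, 9.0, 9.5, 10.0],   # delays creeping up
    "Airline B": [12.0, 11.0, 10.0, 9.0, 8.0],  # delays improving
}

trends = {name: linear_trend(years, delays) for name, delays in avg_arrival_delay.items()}

for name, (slope, _intercept) in trends.items():
    direction = "worsening" if slope > 0 else "improving"
    print(f"{name}: {slope:+.2f} minutes per year ({direction})")
```

The slope is the "underlying trend" for each airline: the change in average delay, in minutes, for each additional year.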

So, it was a bit of a whistle-stop tour but hopefully it sets out the different types of analysis made available by Oracle Endeca Information Discovery, OBIEE and Oracle R Enterprise, and how you might use one, two or all of them on a typical BI project. I've left out Essbase of course which also has a role to play, and the "big data" element is a bit superficial as I'm not doing anything with Hadoop, MapReduce and so on. But hopefully it gives you a flavour of the different tools and how they might benefit from being run on the Exalytics platform. For more information on Rittman Mead and Endeca, check out the Rittman Mead Endeca homepage, whilst for more information on Exalytics, check out our Exalytics resource centre, where you can also read about our Exalytics Test Centre in London, UK, where we can prototype this sort of analysis using our own, dedicated Exalytics server, working in conjunction with our OBIEE, Endeca and R consulting and implementation team.