ODI 11g in the Enterprise Part 4: Build Automation and Devops using the ODI SDK, Groovy and ODI Tools

Happy New Year! In this five-part series over the Christmas break, we've been looking at some of the more enterprise-orientated features in Oracle Data Integrator 11g, starting off with its support for multi-dimensional, message-based and big data sources and targets, and yesterday looking at its integration with Oracle Enterprise Data Quality. Today we're going to look at how a typical ODI 11g project is deployed in terms of repositories and environments, and at how the ODI SDK ("Software Development Kit") allows us to automate tasks such as creating new repositories, defining projects, interfaces and other project elements, or performing any other task that you can currently do with the ODI Studio graphical interface. As a quick recap before we start, here are the links to the other postings in this series:

- ODI11g in the Enterprise Part 1: Beyond Data Warehouse Table Loading

- ODI11g in the Enterprise Part 2 : Data Integration using Essbase, Messaging, and Big Data Sources and Targets

- ODI 11g in the Enterprise Part 3: Data Quality and Data Profiling using Oracle EDQ

- ODI 11g in the Enterprise Part 4: Build Automation and Devops using the ODI SDK, Groovy and ODI Tools

- ODI 11g in the Enterprise Part 5: ETL Resilience and High-Availability

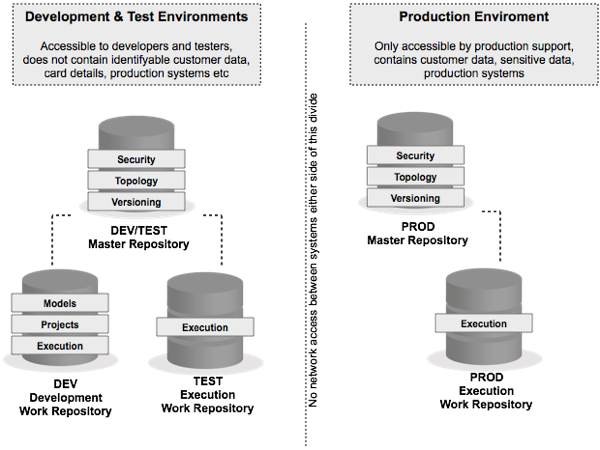

Once a project goes beyond the initial stage of discovery, prototyping and first deployment, most organisations want to create separate development, test and production environments so that developers can develop code without impacting production systems. Typically, the production environment sits on a separate network to development and test, and ODI developers do not have the ability to deploy code to production, instead using a production support team to create separation between the roles. Because of this, production typically has its own ODI master repository which then links to a production execution work repository, whilst development and test share the same master repository, like this:

The DEV repository is a regular "development" work repository, linked to the shared master repository, and supports the creation, editing and deleting of any ODI project element. Once development has completed for a particular part of the project, the relevant packages are compiled as scenarios, exported from the development repository and then imported into the test "execution" work repository, which only allows scenario imports, not full development. Then, once testing completes, the tested scenarios are exported out of the test repository, passed to production support, and then imported into the production execution work repository. This production execution work repository has its own master repository, in the production environment, as a single master across all environments would not work due to network restrictions between DEV/TEST and PROD.

Of course all of this takes quite a bit of setting up and administration if you do it all manually using ODI Studio, and there's scope for operator error at the various stages. A better approach to creating new environments, configuring them and then migrating project artefacts through repositories and environments is to use scripting, ideally in conjunction with the release management tools such as Jenkins, Ant and Maven that are commonly used in larger organisations. In fact this scripting of development operations tasks now has its own name, "DevOps", which is all about automating the provisioning and configuring of development environments, and which came about because organisations want to adopt increasingly agile ways of developing software, using techniques such as continuous integration to quickly identify whether changes introduced to the build have actually broken it. So how can we use some of these DevOps, agile and continuous integration ideas with ODI, so our favourite data integration tool can play a proper, first-class role in enterprise development projects? Let's take a look now.

We'll start with moving project elements out of the development work repository and into the test execution work repository. There are various methods for doing this, but our standard approach when promoting objects between environments is to:

- Optionally, create a new version of the package (if you use ODI's built-in version control system)

- Create new scenarios for each of the packages you're looking to promote, which encapsulate everything each package will need to run in a separate execution work repository

- Export the scenarios to the filesystem as XML files, then hand these XML files to production support

- Production support then import these XML files into the production execution work repository, which then creates these new scenarios (containing packages and related objects) ready for running in PROD

As well as doing these tasks manually using the ODI Studio GUI, you can also use a set of ODI Tools (utilities) to do the same from the command-line (or from within an ODI procedure, if you want to). Tasks these tools cover include:

- Exporting scenarios to XML files (OdiExportScen and OdiExportAllScen)

- Importing scenarios and other objects from XML file exports (OdiImportScen, OdiImportObject)

- Generating scenarios from packages, interfaces etc (OdiGenerateAllScen)

as well as other tools to export logs, other objects, delete scenarios and so forth. When run from the command-line, ODI Tools use the [ODI_Home]/oracledi/agent/bin/startcmd.bat|.sh batch or shell script file, which uses a particular standalone agent to execute your requests. For example, to use the OdiImportObject tool to import a previously exported scenario XML file into a production execution repository, you might use a command like:

```bat
cd c:\oracle\product\11.1.1\Oracle_ODI_2\oracledi\agent\bin
startcmd.bat OdiImportObject -FILE_NAME=c:\Test_Build_Files\SCEN_LOAD_PROD_DIM_Version_001.xml -WORK_REP_NAME=PROD_EXECREP -IMPORT_MODE=INSERT_UPDATE
```

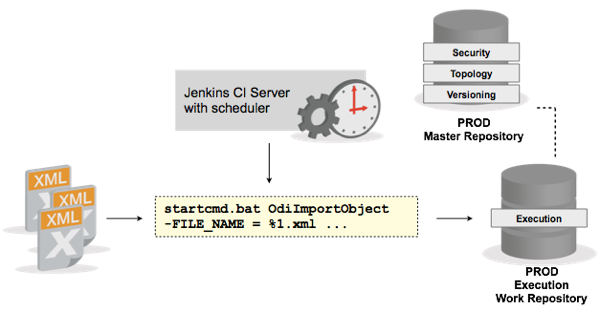

where the ODI Home that you're running startcmd.bat from is one associated with an agent that is declared in the production master repository, and PROD_EXECREP is the name of the execution work repository associated with this master repository that you want to import the scenario into. Now, armed with this ODI Tool and the others that are available to you, you could automate the deployment of new versions of your project by iterating through the packages and scenarios and moving them one-by-one into your production repository, for example using an approach like the one suggested in this blog post by Uli Bethke. But many larger organisations now use what are called "build-automation" tools to automate the process of creating software releases, and it'd be handy if ODI could work with these tools to allow its project artefacts to be integrated into a build release, as per the diagram below:

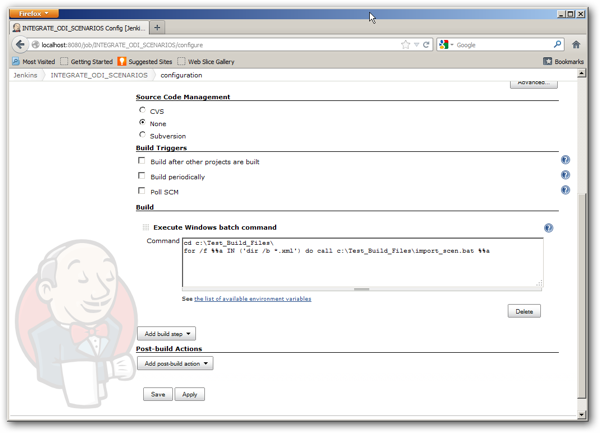

Using a tool like the aforementioned Jenkins, a popular open-source build automation tool that's freely downloadable, you can do this by having one of the build instructions call OdiImportObject or OdiImportScen from a Windows batch file or Unix shell script. The job can then either periodically poll a folder for new scenario export files or, even better, detect uploads to a source control system and continuously integrate new export files into a current version of the build. In the screenshot below, we've configured a Jenkins job to poll a folder for ODI export files on a schedule, with each run of the job going through all XML files in the directory and passing them to another batch file that calls the OdiImportObject tool.

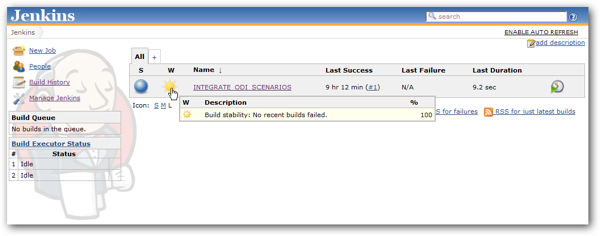
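To make the folder-polling job a bit more concrete, here's a minimal sketch of what the Unix shell equivalent of that batch file might look like. It isn't the script from our Jenkins job: the `ODI_AGENT_BIN` path and the `import_exports` function name are hypothetical, the repository name and import mode simply mirror the command shown earlier (check the ODI Tools reference for the modes your version accepts), and it assumes `startcmd.sh` returns a non-zero exit code when a tool call fails.

```shell
#!/bin/sh
# Sketch: import every scenario export found in a drop folder.
# ODI_AGENT_BIN is a hypothetical install path; WORK_REP_NAME and IMPORT_MODE
# mirror the command shown earlier in this post.
ODI_AGENT_BIN="${ODI_AGENT_BIN:-/u01/app/oracle/odi/oracledi/agent/bin}"
WORK_REP_NAME="${WORK_REP_NAME:-PROD_EXECREP}"
IMPORT_MODE="${IMPORT_MODE:-INSERT_UPDATE}"

# import_exports DIR
# Calls OdiImportObject once per *.xml file in DIR and returns non-zero if
# any call failed, so the build server can mark the job as failed.
# Assumes startcmd.sh exits non-zero when the tool reports an error.
import_exports() (
    dir="$1"
    total=0
    failed=0
    for f in "$dir"/*.xml; do
        [ -e "$f" ] || continue   # unmatched glob stays literal: skip it
        total=$((total + 1))
        if "$ODI_AGENT_BIN/startcmd.sh" OdiImportObject \
                "-FILE_NAME=$f" \
                "-WORK_REP_NAME=$WORK_REP_NAME" \
                "-IMPORT_MODE=$IMPORT_MODE"; then
            echo "imported: $f"
        else
            echo "FAILED:   $f"
            failed=$((failed + 1))
        fi
    done
    echo "$failed of $total import(s) failed"
    [ "$failed" -eq 0 ]
)
```

A Jenkins "Execute shell" build step could source this file and call `import_exports /path/to/export/folder`; because the function's return code reflects whether any import failed, the build goes red as soon as one export file can't be loaded.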

In the screenshot below, we're looking at the state of a particular project, and we can see that as of the last build, the process completed successfully with no imports erring or otherwise causing the build to fail.

A major advantage of using a build, or "continuous integration" server like Jenkins is that you can link it to a source control system such as Subversion or Git, and then tell it to automatically import any new project artefacts into a separate continuous integration, or "smoke test" environment that you can execute test scripts against continuously to see if a recent change has "broken the build". This smoke test or continuous integration environment exists separately from DEV, TEST and PROD and is particularly useful in large, complex and agile projects where multiple developers are updating and adding code all of the time. The CI environment then gives you an early warning about a code commit that's "broken the build", detected by continuously running a set of regression test scripts, giving you the confidence that, as long as these scripts are still passing, your code is in a fit state to ship.
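As a rough illustration of the "regression test scripts" idea, the sketch below runs a list of test scenarios in the CI environment and fails if any of them fail. It's an assumption-laden example rather than something from our build: it uses the agent's `startscen.sh` script (not covered above) in its `<scenario_name> <scenario_version> <context_code>` form, assumes that script returns a non-zero exit code when the session ends in error, and the `ODI_AGENT_BIN` path, the `CI` context code, the list file format and the `run_smoke_tests` name are all hypothetical.

```shell
#!/bin/sh
# Sketch: run a list of regression-test scenarios and fail the build if any
# of them fails. ODI_AGENT_BIN and CONTEXT_CODE are hypothetical defaults.
ODI_AGENT_BIN="${ODI_AGENT_BIN:-/u01/app/oracle/odi/oracledi/agent/bin}"
CONTEXT_CODE="${CONTEXT_CODE:-CI}"

# run_smoke_tests LIST_FILE
# LIST_FILE holds one "SCENARIO_NAME VERSION" pair per line; blank lines and
# lines starting with # are skipped. Returns non-zero if any scenario fails.
# Assumes startscen.sh exits non-zero when the session ends in error.
run_smoke_tests() (
    list="$1"
    failed=0
    while read -r name version; do
        case "$name" in
            ''|'#'*) continue ;;
        esac
        # stdin is redirected so the scenario run cannot consume the list
        if "$ODI_AGENT_BIN/startscen.sh" "$name" "$version" "$CONTEXT_CODE" < /dev/null; then
            echo "PASS $name $version"
        else
            echo "FAIL $name $version"
            failed=$((failed + 1))
        fi
    done < "$list"
    echo "$failed scenario(s) failed"
    [ "$failed" -eq 0 ]
)
```

Called as `run_smoke_tests smoke_tests.txt` from a build step that runs after the import step, this gives the build server a single pass/fail signal for the whole regression set, while the PASS/FAIL lines in the console log show which scenario broke.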

You can also go a couple of steps further, using tools such as Ant and Maven to incorporate build instructions for ODI into a larger build plan encompassing Java code, database DDL scripts and other project artefacts, with tools such as Jenkins having built-in support for Maven build pipelines. I guess you could also create shell scripts or batch files that use the OdiGenerateAllScen and OdiExportAllScen tools to generate the scenarios and extract the XML export files directly out of the ODI development work repository, but we use the manual process of having the developer generate the XML file as a way of signifying "this bit of code is now ready to incorporate into the daily build". Your own mileage will of course vary.
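If you did want to script the export side as well, a sketch along the following lines might be a starting point. It chains the OdiGenerateAllScen and OdiExportAllScen tools listed earlier; the parameter names used here (`-PROJECT`, `-MODE`, `-TODIR`, `-FROM_PROJECT`, `-FORCE_OVERWRITE`) are our recollection of the ODI 11g Tools reference and should be checked against your version before use, and the `ODI_AGENT_BIN` path and `export_project_scenarios` name are hypothetical. The ODI Home would need to be one whose agent is declared against the development repositories.

```shell
#!/bin/sh
# Sketch: regenerate all scenarios for a project in the development work
# repository, then export them to a folder as XML files.
# ODI_AGENT_BIN is a hypothetical install path; the tool parameters are
# assumptions to verify against the ODI Tools reference.
ODI_AGENT_BIN="${ODI_AGENT_BIN:-/u01/app/oracle/odi/oracledi/agent/bin}"

# export_project_scenarios PROJECT_ID OUT_DIR
# PROJECT_ID is the project's internal ID in the work repository.
# Stops and returns non-zero if scenario generation fails, so that stale
# scenarios are not exported.
export_project_scenarios() (
    project_id="$1"
    out_dir="$2"
    mkdir -p "$out_dir" || exit 1
    "$ODI_AGENT_BIN/startcmd.sh" OdiGenerateAllScen \
        "-PROJECT=$project_id" \
        "-MODE=REPLACE" || exit 1
    "$ODI_AGENT_BIN/startcmd.sh" OdiExportAllScen \
        "-TODIR=$out_dir" \
        "-FROM_PROJECT=$project_id" \
        "-FORCE_OVERWRITE=yes"
)
```

For example, `export_project_scenarios 1001 /build/odi_exports` (with 1001 standing in for a real project ID) would leave the export files in a folder that the import job can then pick up.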

So that takes care of automated builds and continuous integration, but what about tasks such as creating new work repositories, or populating existing master repository topologies with data source connections, contexts and other metadata elements required to stand up a new development or testing environment? For this, we can use the ODI Software Development Kit (SDK) API introduced with ODI11g to perform all of the tasks normally done using the ODI Studio GUI, used in combination either with regular Java code, or through the Java scripting environment called "Groovy".

The ODI SDK is covered pretty well in the online documentation, and there are also a number of blog posts and articles on the internet that can give you a good introduction to this API, including:

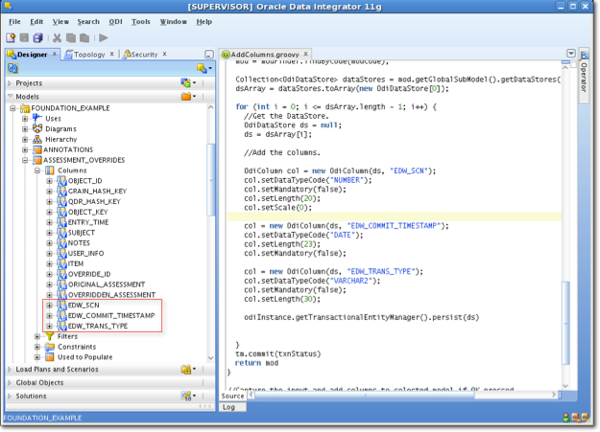

- "Oracle Data Integrator 11g Groovy: Add Columns to a Datastore" - Rittman Mead's Michael Rainey

- "ODI 11g - Getting Groovy with ODI" - Oracle's David Allan, who also wrote the following ODI SDK blog posts:

    - "ODI 11g – Insight to the SDK"

    - "ODI 11g - Scripting the Model and Topology"

    - "ODI 11g – More accelerator options" - scripts to auto-create and auto-map interfaces

- ODI SDK Code Samples website

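As a simple example, the following Groovy script uses the SDK to create a new project within a transaction and commit it to the work repository:

```groovy
import oracle.odi.domain.project.OdiProject
import oracle.odi.core.persistence.transaction.support.DefaultTransactionDefinition

// odiInstance is supplied by ODI Studio's Groovy console for the connected repository
// Start a transaction against the repository
txnDef = new DefaultTransactionDefinition()
tm = odiInstance.getTransactionManager()
txnStatus = tm.getTransaction(txnDef)

// Create a project with the given name and code, then persist and commit it
project = new OdiProject("Project For Demo", "PROJECT_DEMO")
odiInstance.getTransactionalEntityManager().persist(project)
tm.commit(txnStatus)
```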

Java applications that use the ODI SDK typically have to be compiled into a Java class before they can be executed, but recent versions of ODI11g have included a new script interpreter for Groovy, an interpreted (rather than compiled) scripting environment for Java that you can run from within ODI Studio, and that allows you to create ODI SDK utility scripts to perform basic administration tasks. Michael Rainey's blog post showed how you can use Groovy and the ODI SDK to add columns to datastores within a model, with the code itself looking like this within ODI's Groovy editor.

If you're familiar with OWB's OMB+ scripting environment, this is all very similar except it's more standards-based, using a standard Java API format for the SDK, and Java (together with Groovy, if appropriate) to orchestrate the process. Typically, the ODI SDK would be used with Groovy for small, admin-type tasks, with the full compiled Java environment used for more complex applications, such as integration of ODI functions into a wider Java application.

So - that's a few ideas on build automation and scripting for ODI. In the final posting tomorrow, we'll look at high-availability considerations and the new Load Plan feature, and see how these features can be used to create highly-resilient ETL processes for mission-critical enterprise applications.