ODI 11g in the Enterprise Part 5: ETL Resilience and High-Availability

In this final part of our five part series on ODI 11g in the Enterprise, we'll take a look at a topic that's become increasingly important as ODI and ETL becomes more mainstream; data integration and loading routines that were once considered "nice to have", are now mission-critical as they support operational systems that price products, segment customers, manage supply chains and combat fraud. Before we do that though, here's a quick recap of the links to the previous postings in this series:

- ODI11g in the Enterprise Part 1: Beyond Data Warehouse Table Loading

- ODI11g in the Enterprise Part 2 : Data Integration using Essbase, Messaging, and Big Data Sources and Targets

- ODI 11g in the Enterprise Part 3: Data Quality and Data Profiling using Oracle EDQ

- ODI 11g in the Enterprise Part 4: Build Automation and Devops using the ODI SDK, Groovy and ODI Tools

- ODI 11g in the Enterprise Part 5: ETL Resilience and High-Availability

So what features exist within ODI to make data integration routines resilient and highly-available, and what design patterns and development approaches can we use to create code that's inherently more resilient and "fails gracefully"?

When I think about how an ETL process can fail, there's two main scenarios I'm thinking of:

- The ETL process itself fails, because some unexpected data comes through or there's a problem with the ETL logic, or there's some issue with the source or target database such as disk space running out, a network connection failing etc.

- The server process that's running the ETL process fails; in the case of ODI, this would be the agent failing, or the ODI master or work repository failing

For a failure within the actual ETL process, what I want is for that process to fail gracefully, or even better take corrective action and then resume. At least if it fails gracefully though, there's more chance of production support fixing the issue, rather than one of my team getting a phone call at 2am in the morning to reconstitute the ETL process. For ODI infrastructure failures, ideally I want there to be some redundancy or failover, so we don't ever get a call. Let's take a look at these two scenarios in turn, starting with failures within the ETL process itself.

Stewart Bryson wrote an excellent conference presentation and paper a couple of years ago entitled "The Three R's of Data Warehouse Fault Tolerance", with the "R's" referring to resuming (typically after an out-of-space disk allocation error); restarting (rolling-back the ETL process after a catastrophic error, so it can be run again); and restoring (backing up and recovering the large datasets typically stored in a data warehouse). The presentation focused mostly on Oracle database features such as resumable space allocation, flashback table and database, and whether a data warehouse should run in archivelog mode or not, but it gives us a good starting point to think about similar issues with ODI data integration processes.

So what happens to a typical ODI package when an out-of-disk-space error is reported by the target database, for example an Oracle 11gR2 database? Let's take a quick look, by creating a copy of the SH.SALES table in a new schema, and allocating that schema a tablespace and datafile that's too small to load all of the table's rows.

conn / as sysdba

create tablespace sales_ts logging datafile 'sales_ts.dbf' size 50m;

alter database datafile 'sales_ts.dbf' resize 100m;

create user dw_spacetest identified by password default tablespace sales_ts;

grant connect, resource to dw_spacetest;

create table dw_spacetest.sales as select * from sh.sales where 1=0;

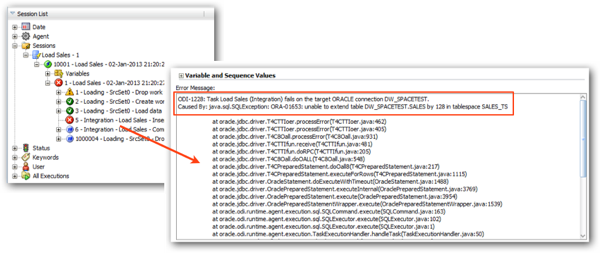

I then create a new ODI 11g project, set up the two data servers and physical/logical schemas, then put together a simple interface that loads from the source table into target, using a control append IKM (so that I don't need to set up a primary key on the SALES table, which doesn't have one by default). When I execute the interface, it quickly fails with an out-of-disk-space error.

If I then resize the datafile to make it a maximum of 100MB in size, and then restart the failed session, this works but the whole set of steps are executed again, re-inserting all rows back into the table - something that could take a fair bit of time on a real data warehouse fact table load. The ODI Operator doesn't show the old failed session though, instead replacing it with the one that's now completed successfully.

So what if we use Stewart's resumable space allocation idea, by first granting the "alter session" and "resumable" privileges to the target database account using SQL*Plus:

conn / as sysdba

grant alter session to dw_spacetest;

grant resumable to dw_spacetest;

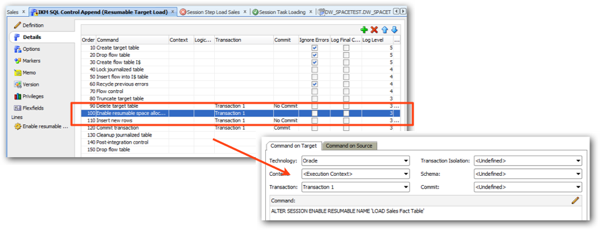

and then adding an extra step into a customised version of the IKM Control Append knowledge module to enable resumable space allocation for this session:

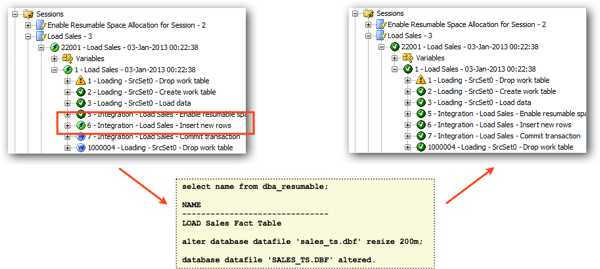

Now, when I re-run the interface with this custom knowledge module, when the session hits the point where the tablespace datafile runs out of space, the session just hangs. You can then detect the suspended session using the DBA_RESUMABLE view, allowing the administrator to extend the datafile and the session to automatically resume and complete successfully.

Of course you could set the tablespace datafile to auto extend automatically anyway, but some organisations don't allow this and anyway, the datafile might have reached its auto extend limit. In practice, it would make sense to set up a monitoring system to periodically check the DBA_RESUMABLE system table, or you could set a trigger against it to raise an alert, but the key thing here is that the session resumes from where it left off, avoiding a complete reload of the table in question.

The second "R" of Stewart's presentation referred to restartability, when an ETL process fails so catastrophically that you need to in-effect "rewind the database" to its state prior to the load. If all you are doing is truncating a few tables and then inserting fresh data into them, all you'd typically need to do is just re-run the load process from the start, but if you're inserting and updating rows, or otherwise making changes that can't be easily "un-picked", the Oracle database "flashback" technologies allow you to return individual tables, row versions and even the whole database to a particular point-in-time. Flashback database requires you to be running your database in archivelog mode, but flashback table works with both archivelog and noarchivelog-mode databases and is relatively easy to work with.

In the next example then, we're going to use the flashback table feature to roll-back changes to the SALES table we're loading, if an error occurs during the load process. We'll create a two-stage process to update the table; the first one will execute some SQL code to update a sample set of rows, and the second will attempt a data load, but will fail due to an out-of-disk-space error (resumable space allocation won't be enable in this instance). What we want to then happen is an automatic flashback table operation on the SALES table so that it's returned to its original state; to do this, we'll use a new feature introduced with recent releases of ODI11g called a load plan.

Load Plans were introduced with the 11.1.1.5 release of ODI, and are an alternative to packages for when you need to sequence a series of interfaces and other steps together into a hierarchical set of load steps. I covered load plans in an article for Oracle Magazine a few months ago ("Lighten the Load", September/October 2012), and they introduce a couple of new features that improve on what's possible with packages;

- They make it clearer what tasks are to be run serially, or in parallel, compared to packages which bury parallel execution away under the "synchronous/asynchronous" setting available for scenarios)

- You can declare exceptions, steps that are executed when a particular load plan step fails, which can be used to try and recover a situation, or run a process that corrects or cleanses your data

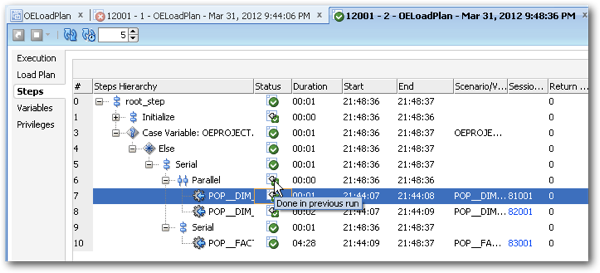

- Load plans can be configured to restart at a particular point rather than right back at the beginning

… all of which sound very useful in the context of ETL process resilience.

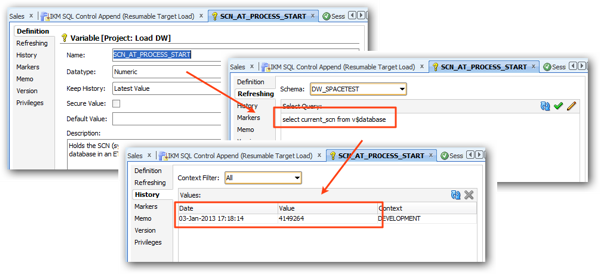

Load plans are actually considered "executable objects" and are the only other type of project artefact that you can load into an execution work repository, and as we'll see in a moment they go well beyond scenarios in terms of visibility of failed processes, restart points and so on. To set this example up, I'll first create a project variable that I'll use to store the current SCN (system change number) before we start the table update; then, when we declare the load plan exception, we'll use this SCN with the flashback table command to return the table to its previous state prior to the ETL process running. To get the variable update to run using non-DBA credentials, you'll need to ensure that the target database username has SELECT access on the V$_DATABASE data dictionary view.

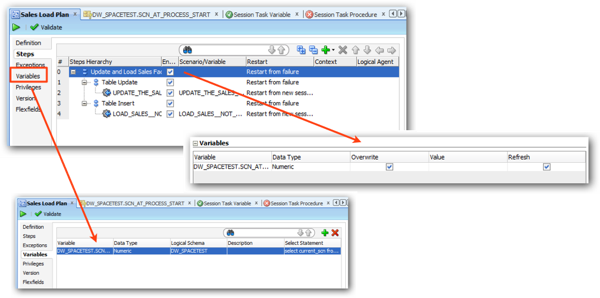

I then set up an ODI procedure that updates a number of rows in the target sales table, and create a new load plan that includes a reference to the variable, refreshes it when the root step runs, and then adds the procedure and the modified interface in to perform the table update and load.

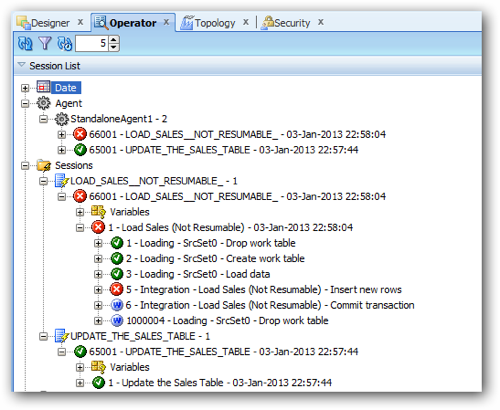

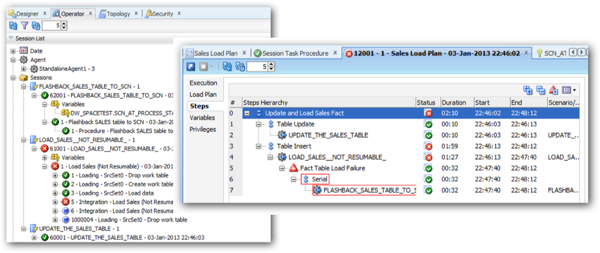

When I run the load plan, as you would expect, the first step completes successfully, whilst the final one fails with an out of space error.

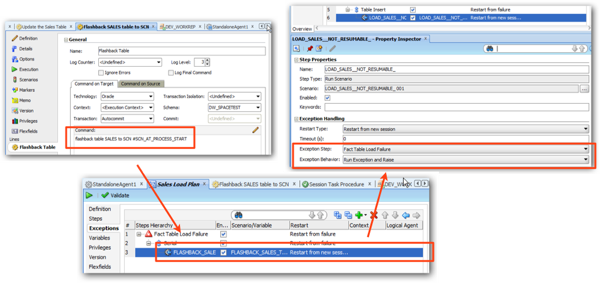

This is where the load plan exception comes in, which we'll set up so that when this step fails, the load plan flashes the SALES table back to its previous state, prior to the update and the insert - by default, the update would still be there, as that part of the load plan completed and committed, leaving the table in a half-loaded state. To create the exception, I first create another procedure that runs the flashback table command, and references the variable that I refreshed using the root step in the load plan. Then, I create the exception in the load plan, reference the procedure and then configure the final table loading load plan step to run the exception steps if it fails.

Let's run the load plan again, this time with the exception enabled and the flashback table set set up. This time, when the final step fails, the exception is raised and the flashback table command is executed, returning the target back to its original state.

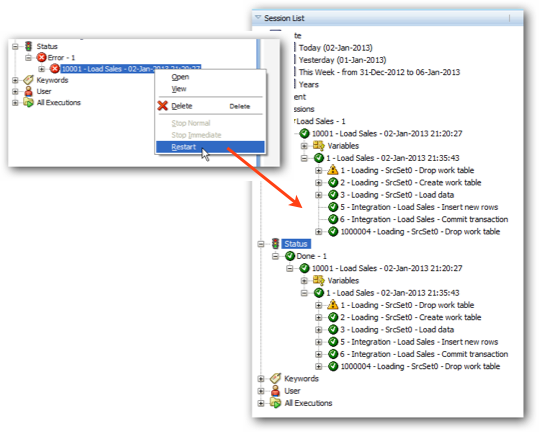

Of course flashing the table back to its original state is a pretty drastic action to take as an automatic step, so you could instead have the exception raise an alert, or perform a task that might then allow the process to complete successfully. When you configure the step's exception handling, you can make it so that the load plan skips the steps that executed successfully when it re-runs, picking up either at the task that failed, or that task's parent task, as I demonstrated in the Oracle Magazine article linked to earlier, and with it's load plan execution after the initial exception raise shown below.

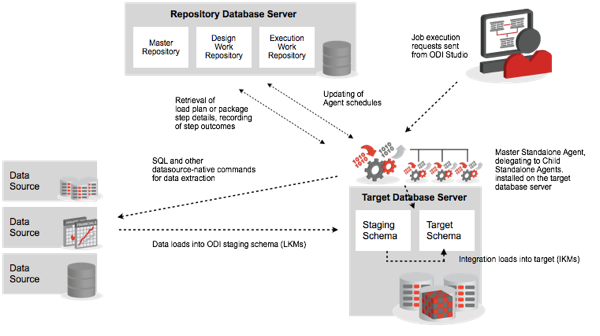

Moving on to the second type of process failure that I mentioned at the start of the article, the other main way that an ODI ETL process can fail is if the actual ODI agent infrastructure goes wrong; for example, an agent process may fail during the processing of a job execution, or the master and work repositories may become unavailable, because of a database corruption or crash. Let's take a look now at what a standard ODI environment might look like that uses standalone runtime agents to process job executions, in this case using the agent load balancing feature that allows you to define a master runtime agent, against which you schedule jobs and send ad-hoc execution requests, and a number of child runtime agents that actually run the integration tasks.

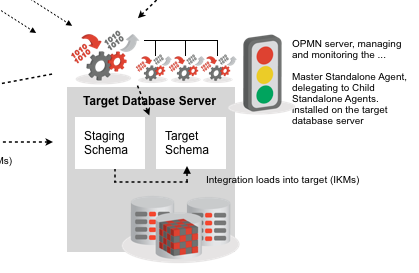

This is the most basic of ODI infrastructure setups, and having more than one standalone runtime agent running on the target server ensures that, if one of the child agents crashes, there will be others (hopefully) who can take up the rest of the tasks in the job execution. But if an agent goes down, you'll have to manually restart it, which is useful in the early days of a project when you want to know about problems with your system, but later on once the system is in production, you just want the agent to be restarted with the minimum of fuss. You can set up automatic restart for agents using another piece of Oracle technology called Oracle Process Manager and Notification server (OPMN) which many of you will know from its role in OBIEE 11g's architecture, but OPMN does not come pre-installed with ODI and you have to set it up manually (and it may involve an extra license cost, as part of the Oracle Web Tier product). Once you have this in place, OPMN then starts, stop, monitors and restarts the individual standalone runtime agents, ensuring they are restarted (if possible) if they crash.

The other main issue with this type of setup is that you've got a fairly big dependency on the master/parent standalone runtime agent, in that it's the one that you've assigned the job execution schedule to. If this agent fails and can't be restarted, you've effectively lost your job schedule as the child agents don't have any scheduled jobs assigned to them directly; this isn't such an issue if you've using an external scheduler such as BMC's Control-M, as the master/parent standalone runtime agent won't have it's own internal schedule - the external scheduler will just send jobs across on an ad-hoc basis according to its own internal scheduled job list. But if you want to use ODI's scheduler, and also if you want to improve on the lightweight Java hosting environment that standalone agents use, this is where the new JEE agents and their WebLogic Server integration come in, introduced with the 11g release of ODI.

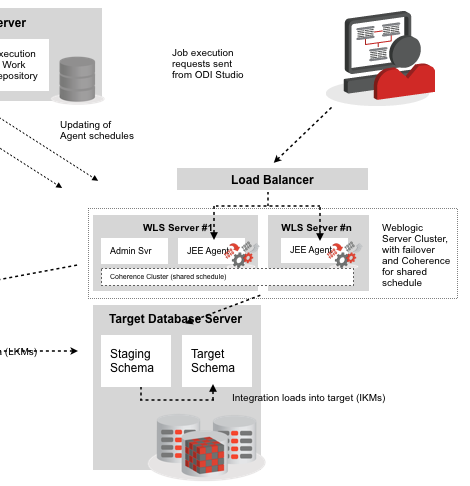

When you set up WebLogic Server and install the JEE agents via the ODI weblogic domain template, as well as the ODI elements you also configure Oracle Coherence, an in-memory clustered data cache used in WebLogic Java applications to hold a shared, highly-available copy of the agent schedule. Along with the WebLogic elements, you also need to set up and configure a separate load balancer for accessing the JEE runtime agents, so that your agent and target database infrastructure now looks like this:

In this arrangement, there is no longer a parent/master agent or any child agents; each agent is equal, and when the agents start up, one of them elects to be the agent responsible for the schedule, reading its list of scheduled jobs from the coherence cache. Job requests and new schedules are then sent to the load balancer, rather than any individual agent, and if the agent that is responsible for the schedule goes down, another one picks up its responsibilities and takes over running the schedule (there are some exceptions to this schedule failover though, see the High Availability for Oracle Data Integrator docs for details). If an individual WebLogic managed server goes own (analogous to the unmanaged JVMs that standalone agents use) then the WebLogic admin server should detect the failure, restart it, and if all else fails migrate the process to another available managed server in the cluster. This additional schedule and process protection is the main reason that you'd implement JEE agents and WebLogic within ODI (though you also get better support for data sources, plus the ability to run Enterprise Manager and ODI Console, pretty useful features), though of course you do need to balance this against the additional cost of WebLogic Server Enterprise Edition licenses, if your organisation doesn't already have licenses.

So, there we have it with ETL resilience and high-availability for ODI when deployed "in the enterprise". That's it also for the series, and hopefully it's been useful. If you're at the BIWA Summit 2013 next week in San Francisco, I'm presenting on this topic there, so if you've got any feedback or ideas prior to the presentation, add them as comments to this post.