Call OpenAI from Oracle Query

Introduction

The Old meets the New

ChatGPT's impact on our daily lives has been surprisingly significant in the short time since its release. Reshaping work practices, OpenAI's ChatGPT is expected to drive further change, even disruption in the coming years. Meanwhile, relational databases, the workhorses of data storage for decades, have survived countless attempts to make them redundant. Can the old and the new work together?

About OpenAI API

OpenAI offers a versatile API that grants access to a variety of AI models. While many focus on text processing and generation, others are tailored for image inputs. Beyond that, specialized models can convert text to speech (TTS), or process images to generate text. Furthermore, some models accept both text and image inputs, producing images as output. This broad capability makes the OpenAI API a powerful tool for diverse AI applications.

The Cost of Using AI

OpenAI's API operates on a pay-as-you-go token-based pricing model, with costs varying between models. Think of tokens as units representing the quantity of processed text (approximately 750 words per 1000 tokens). For pricing details, visit the OpenAI website: https://openai.com/pricing.

Model costs range significantly. Here is a cost comparison:

- Low-cost models: Older models like gpt-3.5-turbo-0125 can start at $0.50 per million input tokens and $1.50 per million output tokens.

- High-cost models: Newer, powerful models like gpt-4-32k can cost $60.00 per million input tokens and $120.00 per million output tokens.

While manually generating enough input to incur significant costs is time-consuming, the real expense lies in processing large datasets. Using OpenAI on extensive database records can quickly become costly if not carefully managed

The Limitations of Using AI

Relational databases, with their structured data, seem ideal as inputs for text-based AI models. Tasks like data interpretation, enrichment, classification, and summarisation offer exciting possibilities. However, it is crucial to manage expectations.

While impressive, purely generative models like GPT-3.5 have limitations when integrating with business data. For more robust solutions, consider exploring Retrieval Augmented Generation (RAG) models. Data scientist Iain Read's blog offers a deeper exploration of the risks associated with relying solely on generative models for this purpose and the opportunities when using RAG models.

This does not mean purely generative models lack utility. For quick prototyping, proof-of-concept creation and for some limited business use cases, they can be valuable tools. Proceed to the next chapter for further exploration.

Integrating Oracle DB with OpenAI

From Reviews to Insights: Building a Customer Sentiment Classifier

Let us consider a practical example: classifying customer reviews for our product stored in an Oracle Database table. Our goal is to understand the sentiment behind these reviews - are they positive, negative, or neutral? This is a perfect task for AI.

Accessing OpenAI API

Before you can start using the OpenAI API, you will need to create an account and will likely need to purchase tokens to use the API. Afterwards, you will need to create an API key. The OpenAI API documentation can be found here.

Options for Integration

To call the OpenAI API from Oracle DB, we need to make an HTTP request (including API key and payload) and parse the JSON response. This could be done with the Oracle PL/SQL UTL_HTTP package. However, I decided to write my integration function in Java, compile a jar and upload it to my Oracle database. (Java is so much nicer than PL/SQL!)

The easy way of adding a Java function to Oracle DB is by using this statement (no jar required):

CREATE OR REPLACE AND COMPILE JAVA SOURCE NAMED "HelloJavaClass" AS

// Java class definition goes hereThe next and last step before using the class function above would be creating a wrapper like this:

CREATE OR REPLACE FUNCTION factorial(n IN NUMBER) RETURN NUMBER AS

LANGUAGE JAVA NAME 'HelloJavaClass.helloFunction(int) return int';

/However, this method limits us to the standard Java SDK. To enable the use of custom libraries, compile a JAR file and upload it to the database - this is the approach I chose. This also allows for code in Scala or other JVM languages.

Step 1: The Java Class

The analyseSentiment function accepts a customer review as input and (typically) returns a sentiment: Positive, Negative or Neutral.

import javax.json.Json;

import javax.json.JsonArray;

import javax.json.JsonObject;

import java.io.*;

import java.net.HttpURLConnection;

import java.net.URL;

import java.nio.charset.StandardCharsets;

public class OpenAI {

public static String analyseSentiment(String prompt, String apiKey, String modelName) throws IOException {

String openAiEndpoint = "https://api.openai.com/v1/chat/completions";

URL requestUrl = new URL(openAiEndpoint);

HttpURLConnection connection = (HttpURLConnection) requestUrl.openConnection();

connection.setRequestMethod("POST");

connection.setRequestProperty("Content-Type", "application/json");

connection.setRequestProperty("Authorization", "Bearer " + apiKey);

connection.setDoOutput(true);

String systemContent = "You are a helpful assistant who prefers concise, single word answers.";

String userContent = "Is this customer review positive, negative or neutral: '" + prompt + "'";

String requestJson = "{\"model\": \"" + modelName + "\", " +

"\"messages\": [" +

"{\"role\": \"system\", \"content\": \"" + systemContent + "\"}," +

"{\"role\": \"user\", \"content\": \"" + userContent + "\"}" +

"]," +

"\"max_tokens\": 30}";

try (OutputStream outputStream = connection.getOutputStream()) {

byte[] input = requestJson.getBytes(StandardCharsets.UTF_8);

outputStream.write(input, 0, input.length);

}

StringBuilder response = new StringBuilder();

try (BufferedReader reader = new BufferedReader(

new InputStreamReader(connection.getInputStream(), StandardCharsets.UTF_8)

)) {

String responseLine;

while ((responseLine = reader.readLine()) != null) {

response.append(responseLine.trim());

}

}

if (connection.getResponseCode() == HttpURLConnection.HTTP_OK) {

JsonObject returnJsonObject = Json.createReader(new StringReader(response.toString())).readObject();

JsonArray choicesArray = returnJsonObject.getJsonArray("choices");

JsonObject firstChoice = choicesArray.getJsonObject(0);

JsonObject message = firstChoice.getJsonObject("message");

return message.getString("content");

} else {

throw new IllegalArgumentException("HTTP response code not 200. Response: " + response.toString());

}

}

}Compile the Java class and create a JAR file.

Step 3: Create Schema and Tables

I used the following scripts to set up the schema and the input and output tables and their data:

Schema setup.

Execute as DB admin:

CREATE USER OPENAI_TEST IDENTIFIED BY OPENAI_TEST

DEFAULT TABLESPACE USERS

QUOTA UNLIMITED ON USERS;

GRANT create session TO OPENAI_TEST;

GRANT create table TO OPENAI_TEST;

GRANT create view TO OPENAI_TEST;

GRANT create any trigger TO OPENAI_TEST;

GRANT create any procedure TO OPENAI_TEST;

GRANT create sequence TO OPENAI_TEST;

GRANT create synonym TO OPENAI_TEST;

BEGIN

dbms_java.grant_permission(

'OPENAI_TEST',

'SYS:java.net.SocketPermission',

'api.openai.com:443',

'connect,resolve'

);

END;Input Table Creation and Setup.

Execute as OPENAI_TEST:

CREATE TABLE FEEDBACK_INPUT (

"ID" NUMBER(10,0) PRIMARY KEY,

"CUSTOMER_FIRST_NAME" VARCHAR(100 CHAR) NOT NULL,

"CUSTOMER_LAST_NAME" VARCHAR(100 CHAR) NOT NULL,

"FEEDBACK" VARCHAR(1000 CHAR) NOT NULL

);

INSERT INTO FEEDBACK_INPUT VALUES (1, 'Xavier', 'Lopez', 'Impressed as always!');

INSERT INTO FEEDBACK_INPUT VALUES (2, 'Emily', 'Nguyen', 'Very bad! Never again!');

INSERT INTO FEEDBACK_INPUT VALUES (3, 'Mason', 'Patel', 'Awesome, best product ever! 10/10');

INSERT INTO FEEDBACK_INPUT VALUES (4, 'Sophia', 'Garcia', 'I suppose I will buy this amazing product again.');

INSERT INTO FEEDBACK_INPUT VALUES (5, 'Liam', 'Kumar', 'I suppose you expect me to buy this ''amazing'' product again...');

INSERT INTO FEEDBACK_INPUT VALUES (6, 'Isabella', 'Smith', 'I suppose you expect me to buy this amazing product again...');

INSERT INTO FEEDBACK_INPUT VALUES (7, 'Oliver', 'Jones', 'All was good until the product caught fire and my house burned down.');

INSERT INTO FEEDBACK_INPUT VALUES (8, 'Ava', 'Brown', 'Five stars!');

INSERT INTO FEEDBACK_INPUT VALUES (9, 'William', 'Martinez', '*');

INSERT INTO FEEDBACK_INPUT VALUES (10, 'Charlotte', 'Lee', '*****');

INSERT INTO FEEDBACK_INPUT VALUES (11, 'James', 'Gupta', 'Have you ever tried your own products?');

INSERT INTO FEEDBACK_INPUT VALUES (12, 'Amelia', 'Kim', '10/10');

INSERT INTO FEEDBACK_INPUT VALUES (13, 'Isaac', 'McDonald', 'Amazing! I will recommend your amazing product to all my enemies!');Output Table Creati0n.

CREATE TABLE FEEDBACK_OUTPUT (

"ID" NUMBER(10,0) PRIMARY KEY,

"CUSTOMER_FIRST_NAME" VARCHAR(100 CHAR) NOT NULL,

"CUSTOMER_LAST_NAME" VARCHAR(100 CHAR) NOT NULL,

"FEEDBACK" VARCHAR(1000 CHAR) NOT NULL,

"SENTIMENT" VARCHAR(50 CHAR) NOT NULL,

"MODEL" VARCHAR(100 CHAR) NOT NULL

);Step 3: Upload Jar to Oracle DB

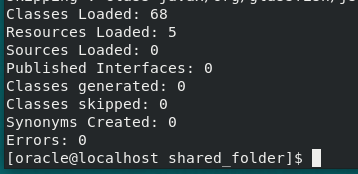

While JAR loading can be done via SQL*Plus, typically it takes a few attempts to get it right and I could not get it to produce informative error messages. What worked much better was the loadjava command from the command line, especially with the -verbose option:

loadjava -thin -user OPENAI_TEST/OPENAI_TEST@localhost:1521/ORCLPDB1 -verbose -resolve -force /mnt/shared_folder/OpenAI4OracleDB.jarThe command will display informative error messages if any and will give a summary at the end:

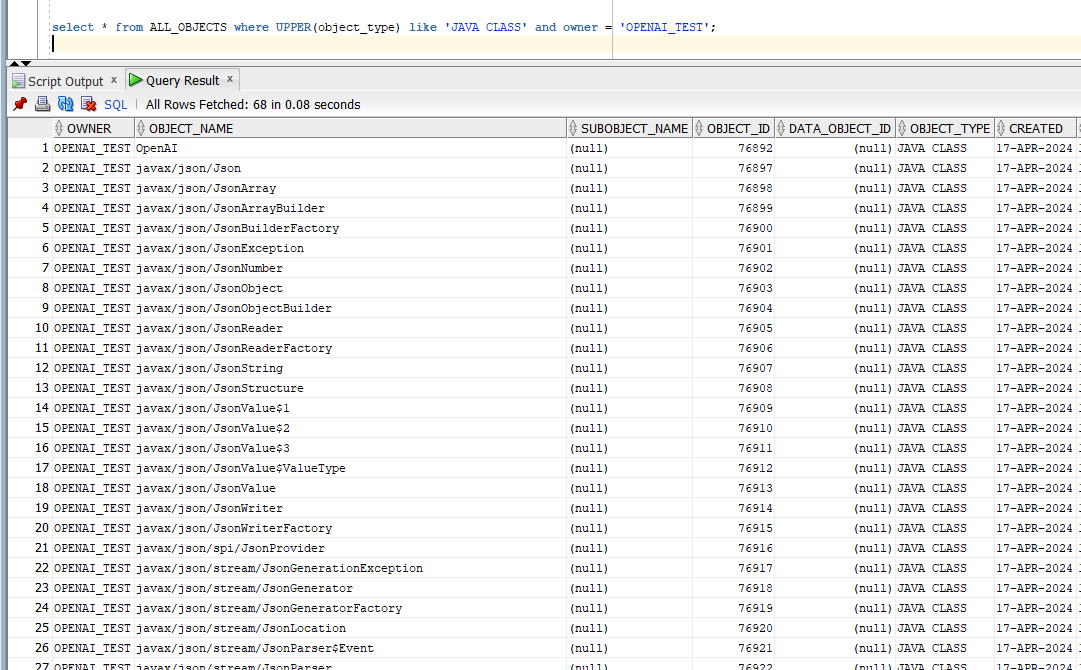

After uploading the JAR, verify that the database recognizes the new classes:

select * from ALL_OBJECTS where UPPER(object_type) like 'JAVA CLASS' and owner = 'OPENAI_TEST';Our OpenAI class is at the top of the list here:

Step 4: Create Wrapper PL/SQL Function

With the classes successfully loaded, create a PL/SQL wrapper for the analyseSentiment function in the OpenAI class.

CREATE OR REPLACE FUNCTION OPENAI_ANALYSE_SENTIMENT(prompt IN VARCHAR, apiKey IN VARCHAR, modelName IN VARCHAR) RETURN VARCHAR AS

LANGUAGE JAVA NAME 'OpenAI.analyseSentiment(java.lang.String, java.lang.String, java.lang.String) return java.lang.String';Important Note: When referencing Java data types in the wrapper:

- Use

intfor integers (e.g.,MyMaths.addition(int, int) return int). - Use the full class path

java.lang.Stringfor String values.

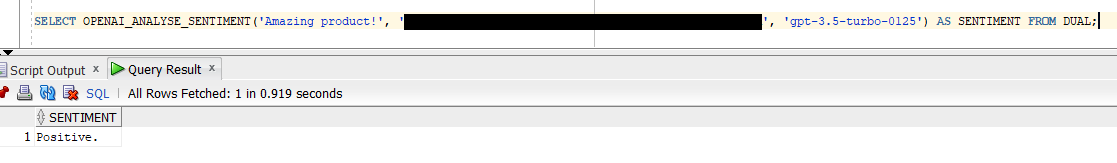

The function is now ready for use. Let's test it (replace API_KEY with your own key):

SELECT OPENAI_ANALYSE_SENTIMENT('Amazing product!', 'API_KEY', 'gpt-3.5-turbo-0125') AS SENTIMENT FROM DUAL;The result should look like this:

And I agree - "Amazing product!" does indeed sound like a positive review.

Step 5: OpenAI with Oracle Table Data

Let us analyse the reviews in the FEEDBACK_INPUT table. Important notes:

- Expect some delay: Even with a turbo model, responses will not be instantaneous.

- Record-by-record analysis: We are processing records individually. (I could not find a good way of batching records with my chosen model.)

- Cost awareness: Be mindful of API call costs, especially with large datasets.

- Model choice: We are using

gpt-3.5-turbo-0125for its affordability.

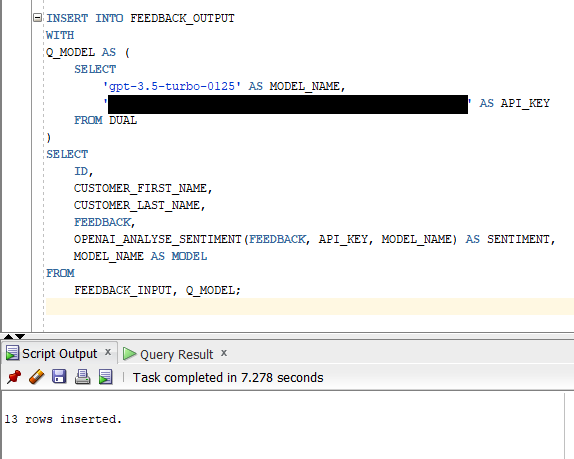

INSERT INTO FEEDBACK_OUTPUT

WITH

Q_MODEL AS (

SELECT

'gpt-3.5-turbo-0125' AS MODEL_NAME,

'API_KEY' AS API_KEY -- replace this with your own API key

FROM DUAL

)

SELECT

ID,

CUSTOMER_FIRST_NAME,

CUSTOMER_LAST_NAME,

FEEDBACK,

OPENAI_ANALYSE_SENTIMENT(FEEDBACK, API_KEY, MODEL_NAME) AS SENTIMENT,

MODEL_NAME AS MODEL

FROM

FEEDBACK_INPUT, Q_MODEL;This is what it looks like in SQL Developer:

The end result in the FEEDBACK_OUTPUT table looks like this:

I noticed that the output is inconsistent. For example, the "10/10" feedback was incorrectly marked as "Neutral" in my earlier attempts. Also, it did not always recognise sarcasm in the review records 6 and 13. Sarcasm is not recognised for the review record 11.

The result above looks good - GPT-3.5 is clearly quite capable of classifying review sentiment despite being a cheap model.

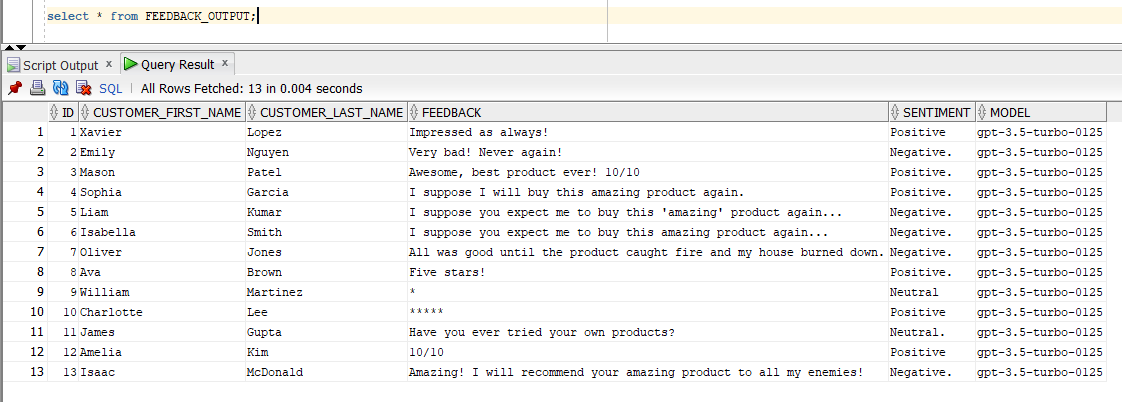

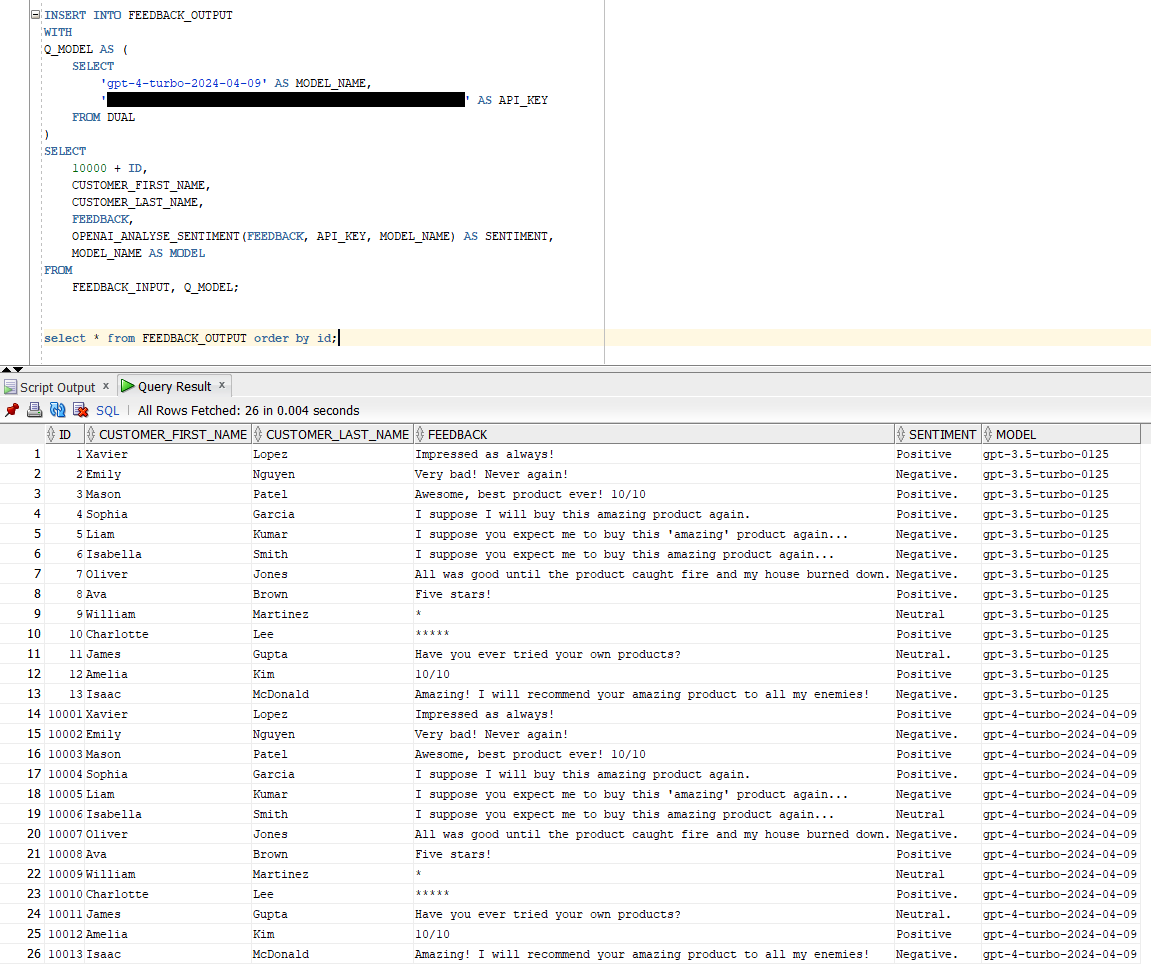

Now let us try the same with a more recent model, gpt-4-turbo-2024-04-09, which is also more expensive.

The results do not differ by much. In fact, the only difference is the review 6: I suppose you expect me to buy this amazing product again... , which is recognised as Neutral by the GPT-4 and as Negative by GPT-4.5 - because of detected sarcasm. I am not entirely sure which model is right. The review 11 Have you ever tried your own products? is not recognised as anger or sarcasm by either model, therefore not marked as Negative.

AI's arrival marks a new era of possibilities. While we are still exploring its applications, the AI's role will be to enhance existing technologies, not replace them.