ODI 11g in the Enterprise Part 3: Data Quality and Data Profiling using Oracle EDQ

In this special Christmas Break series of postings on Oracle Data Integrator 11g, we've been looking at how ODI has moved beyond being just a relational data movement tool to one that can connect to multi-dimensional databases such as Oracle Essbase, or perform a bulk-data movement role within an Oracle Fusion Middleware-based SOA environment. In yesterday's posting we saw how recent extensions to ODI 11g have now given it the ability to connect to Hadoop clusters and run MapReduce jobs via Apache Hive, and how it can make use of the new Oracle Loader for Hadoop connector to move data out of Hadoop and into the data warehouse "proper". Here's a quick recap of the links to those postings, and the remaining two in the series.

- ODI11g in the Enterprise Part 1: Beyond Data Warehouse Table Loading

- ODI11g in the Enterprise Part 2 : Data Integration using Essbase, Messaging, and Big Data Sources and Targets

- ODI 11g in the Enterprise Part 3: Data Quality and Data Profiling using Oracle EDQ

- ODI 11g in the Enterprise Part 4: Build Automation and Devops using the ODI SDK, Groovy and ODI Tools

- ODI 11g in the Enterprise Part 5: ETL Resilience and High-Availability

In all of these discussions though there's an implicit assumption that the data you're processing, integrating and reporting on is trustworthy, and you can rely on it's quality and consistency - but what if you can't? How many otherwise technically sound projects with clear business needs have failed at the last minute because the data just wasn't up to the job, an outcome often made much worse by the fact it's often the end-users who discover this (and usually after everyone's assured you at the project start that "we don't have an issue with data quality")? This issue of data quality, data profiling and data cleansing is now addressed by the second new enterprise feature that Oracle Data Integrator 11g comes with - integration with a new Oracle product called Oracle Enterprise Data Quality.

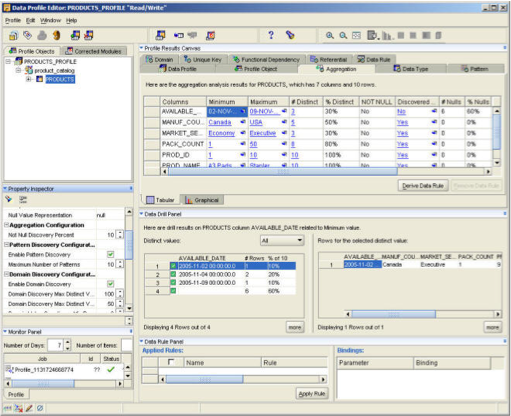

We're now coming up to the tenth anniversary of this blog soon, and readers who've been there from the start will probably know of various Oracle "data quality" products that have been released over the years. The first such product I used to any great extent was the Data Quality Option for Oracle Warehouse Builder 10g, a pay-extra option that provided tools for profiling data for datatypes, data distribution, correlations and other characteristics and statistics, which you could then use to automatically generate OWB mappings that applied data rules and corrected the data. I covered this feature in an article for OTN in 2006 and was a big fan of it at the time, but it was effectively sunsetted along with OWB when Oracle went on to acquire Sunopsis, and so didn't get developed any further. The screenshot below shows the Data Profile Editor in OWB 10gR2 with a series of tabs showing various statistics on the profiled data set, and panels to the left and bottom for deriving data rules, data domains and patterns.

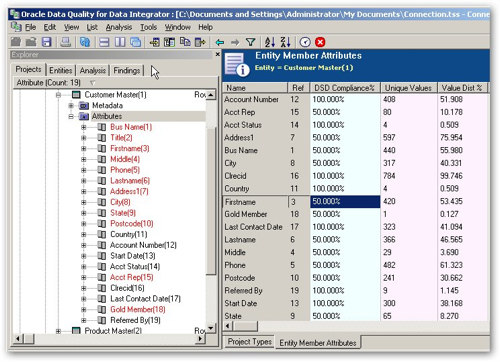

OWB's data quality features were a pretty good first attempt at building this type of functionality into an Oracle ETL tool, but when the focus moved to Oracle Data Integrator Oracle took a different approach, licensing and reselling products and technology from Trillium rather than building out their own functionality. Oracle Data Profiling and Quality for Oracle Data Integrator (ODQ) was a standalone product with some fairly loose integration with ODI that went beyond OWB's features in terms of core profiling, matching and cleansing capabilities, but didn't have the same close integration in terms of automatically generating data corrections and so forth. I covered ODQ in this blog post back in 2009 and compared it with OWB, but I guess this product was a dead-end for Oracle as they didn't own the IP and there were limited options for integrating it closed with ODI, let along products such as E-Business Suite, Siebel or the new Fusion Apps. The screenshot below shows ODQ displaying the output of a data profiling exercise, with the blog post mentioned before going through a typical profiling/correction lifecycle culminating with the ODQ job being called from within an ODI package.

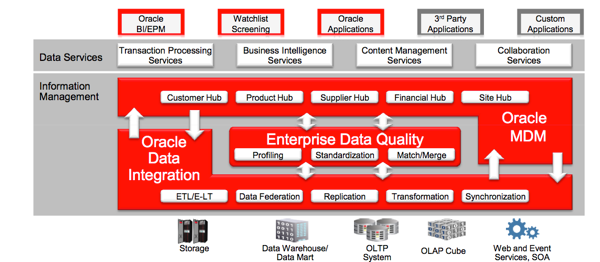

So that now takes us to the present, where around a year ago Oracle completed the acquisition of Datanomic, a specialist data quality tool vendor that finally gave Oracle "best of breed" DQ capabilities but where it owned the IP. Together with the Solver Creek Systems acquisition before it, the plan now is for Datanomic's main tools, Dn:Director (now renamed "Oracle Enterprise Data Quality" and Dn:Sentry (now renamed "Oracle Watchlist Screening") to form the core of Oracle's data quality offering, sitting within Fusion Middleware 11g along with ODI, Goldengate and Oracle Data Services Integrator, as shown in the Oracle graphic below.

As with most data quality tools, Oracle Enterprise Data Quality provides a number of key functions, including data profiling and exploration, text parsing, match/merge/enrich, but also a fairly well-developed framework for defining and managing DQ cases and then monitoring their resolution. Built, like the rest of Oracle Fusion Middleware, on Java technology and deployable on WebLogic Server, Oracle EDQ is a fairly easy fit into the general FMW11g framework though as we'll see later, integration with ODI is at a fairly early stage at the moment. The Oracle graphic below, from the recent Openworld conference, sets out the key capabilities of the core Oracle EDQ platform.

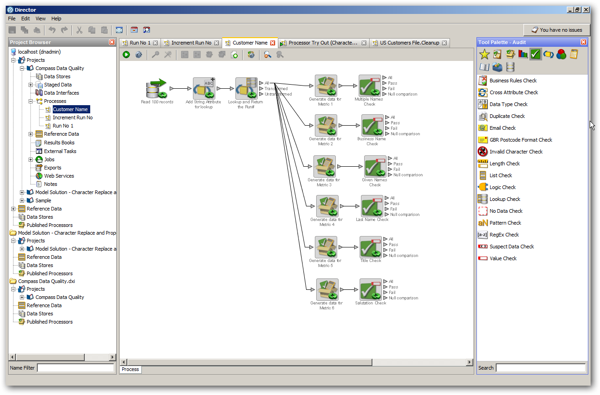

So how does Oracle EDQ work in practice? Well our own Koen Vantomme put together an introductory blog post for EDQ back in August which went through the basics, but in essence as with most DQ tools you've got a repository, a server engine that runs the profiling and executes any data cleansing, and a set of client tools that are aimed at the end-user or data steward. The screenshot below shows a typical project in the main EDQ tool called Director, with a project folder on the left-hand side, a mapping canvas in the middle and a palette of operators available for use on the right.

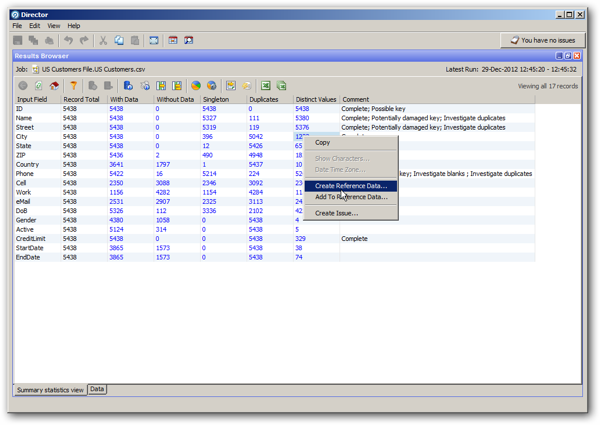

The screenshot below is the equivalent of the OWB and ODQ data profiling output screen, with the user then able to create data domain, data rules and other derived bits of metadata based on the output of the profiling job.

Oracle EDQ is actually a pretty good product, going far beyond anything Oracle's had before in terms of managing the data exploration and management process, support for specific vertical and subject area requirements, data auditing capabilities and support for a wide range of data sources. Two presentations on the OTN website set out the key product capabilities and features, and EDQ's statement of direction, and material we've seen separately sets out how EDQ is being integrated with Endeca, and embedded in the Fusion Applications to provide platform-level DQ capabilities, so I think Oracle have finally settled on their DQ technology of choice after a number of false starts and more tactical solutions. I'll be covering EDQ in more detail in the New Year along with some of my colleagues from Rittman Mead, and we're seeing EDQ being licensed as part of wider Fusion Middleware deals at a number of customer sites, so I think we'll see more of this product than we did with ODQ and OWB's Data Quality Option in the past.

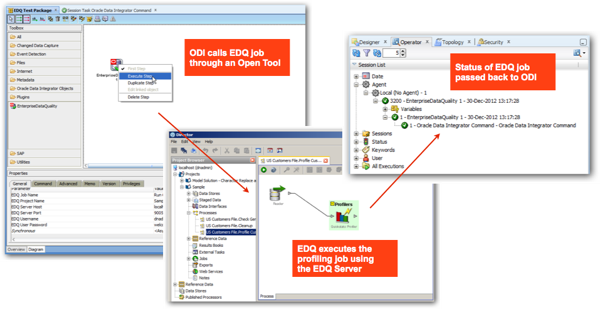

Where EDQ does fall a bit short at the moment, like ODQ before it, is integration with ODI itself. This is of course understandable as Oracle have only just taken on the product, but like ODQ before it the only real integration is the ability to call EDQ jobs from within an ODI package via a new Enterprise Data Quality Open Tool, with the EDQ job being executed either synchronously or asynchronously, and the job status passed back to ODI as with any other package step.

Over time though, Oracle's plans are to package EDQ in a number of ways to integrate and enhance ODI, with a data sheet on the Oracle website titled "Oracle Enterprise Data Quality Products for Oracle Data Integrator" setting out two ODI-related products that are in fact two licensing options for subsets of the overall EDQ platform. As well as the Open Tool though, of course EDQ and ODI can share the same application server type (WebLogic Server) and the EDQ result (staging / snapshot) schema can be on the same database as where ODI resides, reducing the amount of data movement, and EDQ job calls can be included in ODI KMs, to for example cleans data on an ODI "$" table directly.

So this is all great news for the ODI developer … but, what happens when ODI gets accepted into your wider middleware project, and it comes to release time? How do we package up ODI code elements and release them as part of a wider deployment, and can we automate any of the setup, versioning and other project lifecycle elements similar to what's going on with the "DevOps" movement? Check back with the next instalment in this series, where we'll answer just that question.