GoldenGate and Oracle Data Integrator - A Perfect Match... Part 2: Replicate to Staging and Foundation

It has been quite a hectic month since my last post, GoldenGate and Oracle Data Integrator - A Perfect Match... Part 1: Introduction. Plenty of client work and the delivery of two ODI 11g Bootcamp training courses have kept me busy. In the previous post, I described how the Oracle Reference Data Warehouse Architecture could be used to support a real-time data warehouse implementation. I also introduced the integration between ODI 11g and Oracle GoldenGate. In this post, I will describe the implementation and configuration of GoldenGate via ODI 11g at a high level. The detailed implementation has already been covered in a previous post, Extreme BI: Capturing Change with Oracle GoldenGate and ODI: Part 2 (Implementation), so here I will just focus on the highlights. In the final post in the series, I will switch the focus to ODI Change Data Capture (CDC), walking through several options for using CDC in downstream ETL processes.

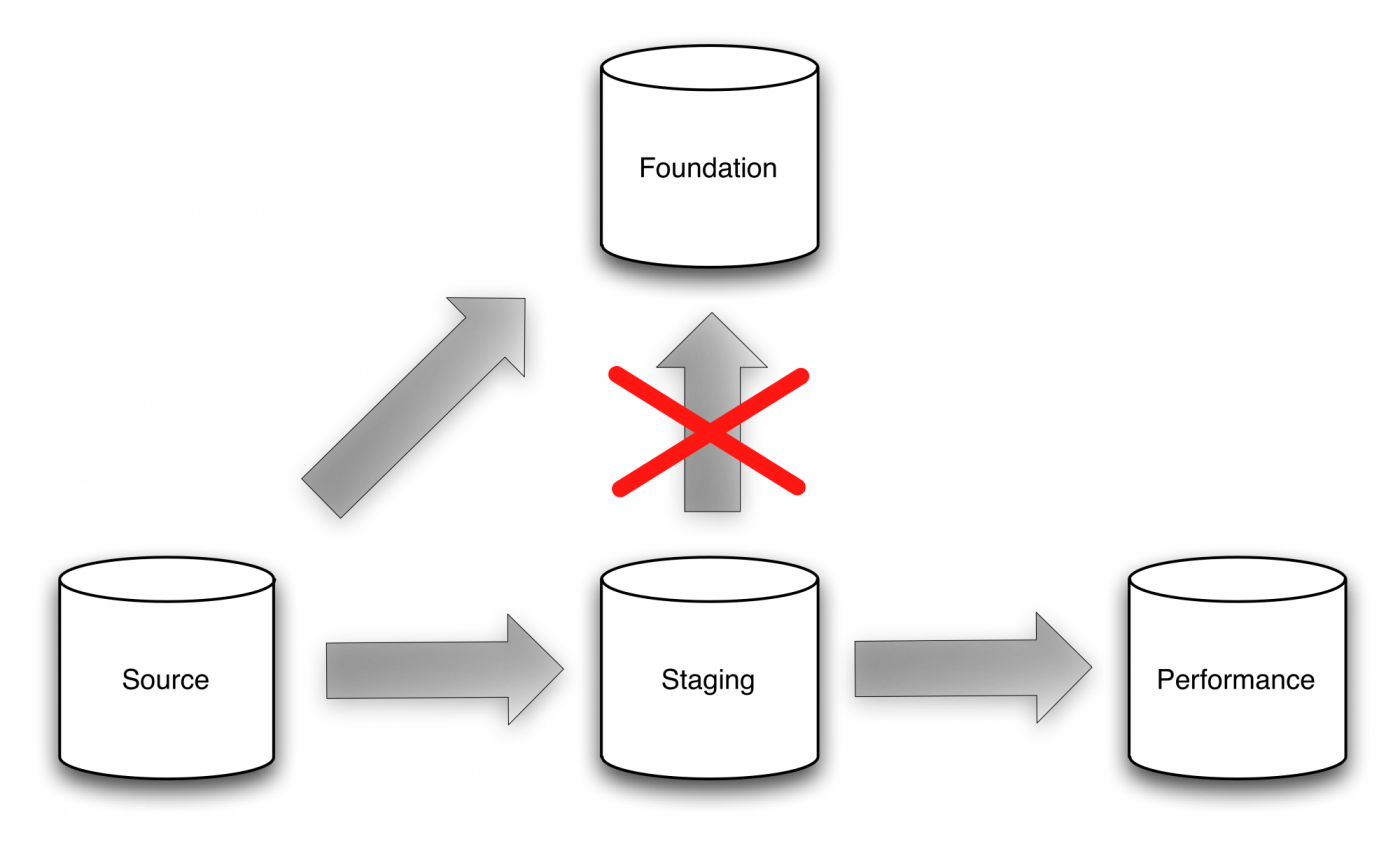

Oracle GoldenGate and Oracle Data Integrator will be used to load each layer of the Oracle Reference Data Warehouse Architecture. GoldenGate is implemented to capture changes on the source and replicate data to the Staging layer (fully replicated source data) database tables. GoldenGate will also load the Foundation layer (transactional history) directly from the source, as the product is able to capture changes once, and then distribute the change rows to multiple locations in parallel without any additional impact to the source or target systems. The need for ETL mappings to incrementally load Foundation from Staging is eliminated, along with the added maintenance. Finally, ODI Interfaces are built to execute ETL mappings that will load the Access and Performance layer.

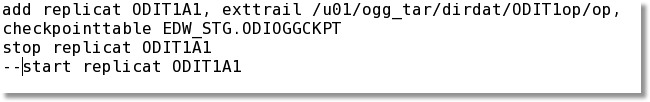

The key to the ODI and GoldenGate integration is a Journalizing Knowledge Module in ODI named “JKM Oracle to Oracle Consistent (OGG)”. The JKM, which will be applied to the Staging and Foundation Models, must be slightly modified to allow the capture of historical transactional data in the Foundation schema. The addition of the parameter “INSERTALLRECORDS” to the replicat parameter file loading the Foundation schema will apply every transaction as an insert into the target, regardless of whether it was an insert, update, or delete on the source. The addition of data warehouse audit columns, listed below, will allow the tracking of transactional history in each table.

EDW_SCN (System Change Number)

EDW_COMMIT_TIMESTAMP (when the change was committed)

EDW_TRANS_TYPE (DML type for the transaction: insert, update, delete)

EDW_ROW_RANK (Intra-commit ranking for each transaction)
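To make the effect of applying every change as an insert more concrete, here is a minimal Python sketch of a Foundation-style history table. It is a toy in-memory model, not GoldenGate itself: the `customer_id` and `name` columns are hypothetical, and ordering rows by `EDW_SCN` and then `EDW_ROW_RANK` is my assumption about how the audit columns would be used to replay history.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ChangeRow:
    """One source change as it might land in a Foundation table."""
    customer_id: int            # hypothetical business key
    name: Optional[str]         # hypothetical payload column
    edw_scn: int                # System Change Number
    edw_commit_timestamp: str   # when the change was committed
    edw_trans_type: str         # "INSERT", "UPDATE", or "DELETE"
    edw_row_rank: int           # intra-commit ranking


def apply_as_inserts(history: List[ChangeRow], changes: List[ChangeRow]) -> None:
    """Append every change; nothing is updated or deleted in place."""
    history.extend(changes)


def current_state(history: List[ChangeRow]) -> Dict[int, ChangeRow]:
    """Replay the history in (SCN, row rank) order to get the latest row per key."""
    latest: Dict[int, ChangeRow] = {}
    for row in sorted(history, key=lambda r: (r.edw_scn, r.edw_row_rank)):
        if row.edw_trans_type == "DELETE":
            latest.pop(row.customer_id, None)
        else:
            latest[row.customer_id] = row
    return latest


history: List[ChangeRow] = []
apply_as_inserts(history, [
    ChangeRow(1, "Acme", 100, "2012-01-01 10:00:00", "INSERT", 1),
    ChangeRow(2, "Globex", 100, "2012-01-01 10:00:00", "INSERT", 2),
    ChangeRow(1, "Acme Corp", 105, "2012-01-01 10:05:00", "UPDATE", 1),
    ChangeRow(2, None, 110, "2012-01-01 10:10:00", "DELETE", 1),
])
```

After these four source changes the history holds four rows, one per change, while `current_state(history)` contains only customer 1 with its updated name. The full trail of inserts, updates, and deletes stays available for auditing.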

Journalizing within Oracle Data Integrator allows ETL developers to use the change records, stored in the J$ change tables, as the source of the ODI Interface. The JKM will set up GoldenGate processes to replicate the change data from the source to the change tables. When a change occurs in the source, the primary key and changed data are stored in the change table, along with the transaction DML type. Using the change data allows for near real-time processing of ETL mappings when loading downstream data warehouse tables.
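The idea of driving a downstream load from change records can be sketched in a few lines of Python. This is only an illustration of the pattern, not ODI's generated code: the `JournalEntry` fields, the `"I"`/`"D"` type codes, and the dictionaries standing in for the staging and target tables are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class JournalEntry:
    """A simplified stand-in for one row of a J$ change table."""
    pk: int                 # primary key of the changed source row
    dml_type: str           # assumed codes: "I" = insert/update, "D" = delete
    consumed: bool = False  # set once the downstream load has handled the row


def process_journal(
    journal: List[JournalEntry],
    staging: Dict[int, dict],
    target: Dict[int, dict],
) -> int:
    """Apply only the pending changes to the target; return how many were handled."""
    processed = 0
    for entry in journal:
        if entry.consumed:
            continue
        if entry.dml_type == "D":
            target.pop(entry.pk, None)
        else:
            row = staging.get(entry.pk)
            if row is not None:
                target[entry.pk] = dict(row)  # copy the current staging row
        entry.consumed = True
        processed += 1
    return processed


staging = {1: {"name": "Acme Corp"}, 3: {"name": "Initech"}}
target = {1: {"name": "Acme"}, 2: {"name": "Globex"}}
journal = [JournalEntry(1, "I"), JournalEntry(2, "D"), JournalEntry(3, "I")]
handled = process_journal(journal, staging, target)
```

Only the three changed keys are touched, which is what makes frequent, small incremental loads practical. Running `process_journal` a second time does nothing, because every entry has been marked as consumed.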

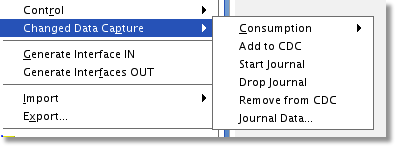

To set up the integration between ODI and GoldenGate, begin by creating the ODI Models and Datastores for the Staging and Foundation tables. I will typically reverse engineer the source tables first, then copy them to the Staging and Foundation Models. This approach will ensure the column names and data types remain consistent with the source. I then execute a Groovy script to create the additional data warehouse audit columns in each of the Foundation Datastores. Now that we have the Models set, apply the customized “JKM Oracle to Oracle Consistent (OGG)” to the Staging and Foundation Models and set the options appropriately, as described in the aforementioned “Extreme BI: Capturing Change with GoldenGate…” blog post. From the tree structure under the Model, right-click each Datastore and choose “Add to CDC”. Then, choose “Start Journal” at the Model level. Based on the options set in the JKM, the Start Journal process will create the ODI CDC framework (“J$” change tables, “JV$” change views, etc.) and generate the GoldenGate parameter files, configuration batch scripts (called Obey files), and instructions on how to complete the setup.
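The Groovy script mentioned above works against the ODI Datastores and is not reproduced here. As a rough database-side analogue, the following Python sketch generates `ALTER TABLE` statements that add the four audit columns to a list of Foundation tables. The data types, the `EDW_FND` schema name, and the table names are assumptions for illustration only.

```python
from typing import Dict, List

# Audit column names come from the post; the data types are assumed.
AUDIT_COLUMNS: Dict[str, str] = {
    "EDW_SCN": "NUMBER",
    "EDW_COMMIT_TIMESTAMP": "TIMESTAMP",
    "EDW_TRANS_TYPE": "VARCHAR2(10)",
    "EDW_ROW_RANK": "NUMBER",
}


def audit_column_ddl(schema: str, tables: List[str]) -> List[str]:
    """Build one ALTER TABLE statement per Foundation table."""
    column_list = ", ".join(
        f"{name} {data_type}" for name, data_type in AUDIT_COLUMNS.items()
    )
    return [f"ALTER TABLE {schema}.{table} ADD ({column_list})" for table in tables]


# Hypothetical schema and table names.
for statement in audit_column_ddl("EDW_FND", ["CUSTOMER", "ORDERS"]):
    print(statement)
```

Keeping the column definitions in one place means every Foundation table receives the same audit columns, which is the same goal the scripted approach in ODI serves.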

GGSCI> start replicat ODIT1A aftercsn 123456

GoldenGate has been set up via ODI and is now replicating source changes to the Staging and Foundation schema tables. It will also load the “J$” change tables for use with ODI change data capture. In Part 3, the final post in the series, I will discuss how to meet some real-time ETL challenges using various development methods and Journalizing within Oracle Data Integrator.

GoldenGate and Oracle Data Integrator - A Perfect Match...

1. Introduction

2. Replicate to Staging and Foundation

3. Real-time ETL Challenges