Why ODI, DW and OBIEE Developers Should Be Interested in Hadoop

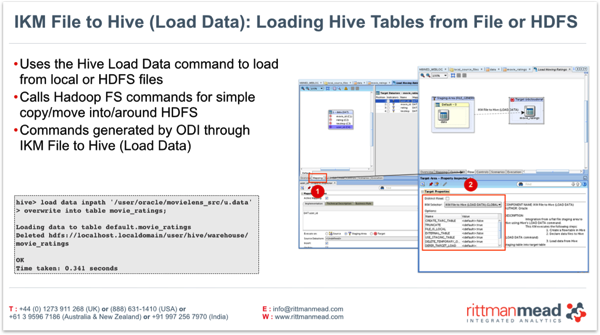

Over the past few months I’ve been posting a number of articles about Hadoop, and how you can connect to it from ODI and OBIEE. From an ODI perspective, I covered Hadoop as one of a number of new data sources ODI11g could connect to, then looked at how it leveraged Hive to issue SQL-like data extraction commands to Hadoop, and how it used Oracle Hadoop connector tools to transfer Hadoop data into the Oracle Database, and directly work with data in HDFS files. For OBIEE, I went through the background to Hadoop, Hive and the other “big data” technologies, stepped through a typical Hive query session, then showed how OBIEE 11.1.1.7 could connect to Hadoop through its newly-added Hive adaptor, then finally built a proof-of-concept OBIEE connection through to Cloudera Impala, then extended that to a multi-node Hadoop cluster.

But why all this interest in Hadoop - what’s it really got to do with OBIEE and ODI, and why should you as developers be interested in what’s probably yet another niche BI/DW datasource? Well, in my opinion Hadoop is the classic disruptive technology: it’s cheap, and it started off with far less functionality than regular relational databases, but it’s improving fast. For BI and DW developers it offers both massive potential benefits - significantly lower TCO for basic DW work, and support for lots of modern, internet-scale use-cases - and a threat, in that if we don’t understand it and see how it can benefit our customers and end-users, we risk being left behind as technology moves on.

To my mind, there are two main ways in which Hadoop, Hive, HDFS and the other big-data ecosystem technologies are used, in the BI/DW context:

- Standalone, with their own query tools, database tools, query languages and so forth - your typical “data scientist” use case, originating from customers such as Facebook, LinkedIn etc. In this context, there’s typically no Oracle footprint, users are pretty self-sufficient, any output we see is in the form of “insights”, marketing campaigns etc.
- Alongside more mainstream, for example Oracle, technologies. In this instance, Hadoop, Hive, HDFS, NoSQL etc are used as complementary, and supporting, technologies to enhance existing Oracle-based data warehouses, capture processes, BI systems. In some cases, Hadoop-type technologies can replace more traditional relational ones, but mostly they’re used to make BI&DW systems more scaleable, cheaper to run, able to work with a wider range of data sources and so forth. This is the context in which Hadoop can be relevant to more traditional Oracle BI, ETL and DW developers.

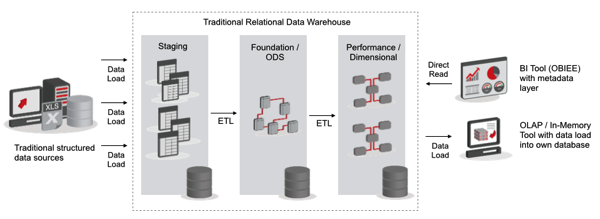

To understand how this happened, let’s go through a bit of a history lesson. Five years ago or so, your typical DW+BI architecture looked like this:

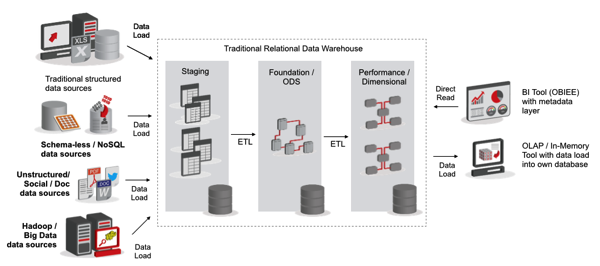

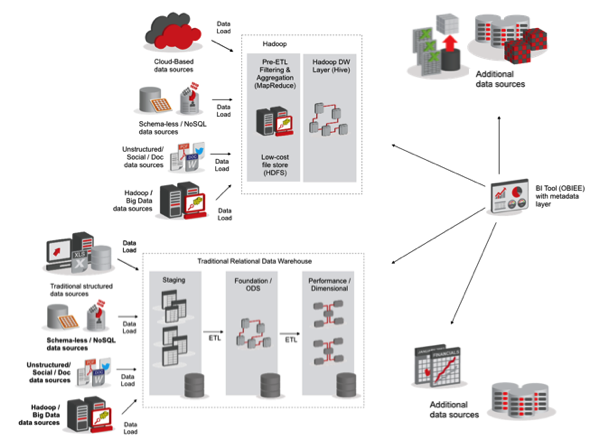

The data warehouse was typically made up of three layers - staging, foundation/ODS and performance/dimensional - with data stored in relational databases, and some use made of OLAP servers or some of the newer in-memory tools like QlikView. But over the intervening years, the scale and types of data sources have increased, with customers now looking to store data from unstructured and semi-structured sources in their data warehouse, take in feeds from social media and other “streaming” sources, and access data in cloud systems typically via APIs, rather than traditional ETL loading. So now we end up with a data warehouse architecture that looks like this:

But this poses challenges for us. From an ETL perspective, how do we access these non-traditional sources, and once we’ve accessed them, how do we process them efficiently? The scale and “velocity” of some of these sources can be challenging for traditional ETL processes that expect to log every transformation in a database with transactional integrity and multi-version concurrency control. In other cases it doesn’t make sense to try and impose a formal data structure on incoming data as we’re capturing it; instead, we give it structure when we finally need it, or when we choose to access it in a query.
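To make that last point about giving data its structure only when we query it a bit more concrete, here's a minimal Python sketch. It's an illustration only, not how Hive or any particular tool does it: the raw lines, the field names and the `read_with_schema` helper are all made up for the example. The idea is that the incoming feed is stored exactly as it arrived, and the structure is applied only at read time.

```python
import json

# Hypothetical raw feed, stored as it arrived with no schema enforced on write.
RAW_LINES = [
    '{"user": "alice", "action": "click", "ms": 120}',
    '{"user": "bob", "action": "view"}',
    'not-json-at-all',
    '{"user": "alice", "action": "click", "ms": "95", "extra": "ignored"}',
]


def read_with_schema(lines, schema):
    """Apply a schema at query time (hypothetical helper, for illustration).

    `schema` maps a field name to a converter function. Lines that are not
    valid JSON objects are skipped; fields missing from a record become None;
    fields not named in the schema are ignored.
    """
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # malformed input is tolerated, not rejected at load time
        if not isinstance(record, dict):
            continue
        row = {}
        for field, convert in schema.items():
            value = record.get(field)
            row[field] = convert(value) if value is not None else None
        yield row


# The "schema" lives with the query, not with the stored data.
CLICK_SCHEMA = {"user": str, "ms": int}
rows = list(read_with_schema(RAW_LINES, CLICK_SCHEMA))
for row in rows:
    print(row)
```

A different query could read the same raw lines with a different schema, which is the flexibility that's hard to get when the structure has to be fixed at load time.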

And then came “Hadoop”, and its platform and tool ecosystem. At its core, Hadoop is a framework for processing, in a massively-parallel and low-cost fashion, large amounts of data using simple transformation building blocks - filtering (mapping) and aggregating (reducing). Hadoop and MapReduce came out of the US West Coast Internet scene as a way of processing web and behavioural data in the same massively-distributed way that companies provided web search and other web 2.0 activities, and a core part of it was that it was (a) open-source, like Linux and (b) cheap, both in being open-source but also because it was designed from the outset to run on low-cost, commodity hardware that’s expected to fail. Pretty much the opposite of Oracle’s business model, but also obviously very attractive to anyone looking to lower the TCO of their data warehouse system.
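As a rough illustration of those two building blocks, here's a minimal single-process Python sketch of the map, shuffle and reduce steps. It's an assumption-laden toy rather than the Hadoop API: the `map_phase`, `shuffle` and `reduce_phase` functions and the sample web log are invented for the example, and a real cluster would run the map and reduce steps in parallel across many nodes.

```python
from collections import defaultdict


def map_phase(records, mapper):
    """Run the mapper over every record; each call may emit zero or more (key, value) pairs."""
    for record in records:
        yield from mapper(record)


def shuffle(pairs):
    """Group emitted values by key (on a real cluster this happens between nodes)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups, reducer):
    """Run the reducer once per key over all of that key's values."""
    return {key: reducer(key, values) for key, values in groups.items()}


# Hypothetical web log of (url, http_status) records.
LOG = [
    ("/home", 200),
    ("/home", 200),
    ("/cart", 500),
    ("/cart", 200),
    ("/home", 404),
]


def successful_hits(record):
    """Mapper: filter out failed requests and emit (url, 1) for the rest."""
    url, status = record
    if status == 200:
        yield (url, 1)


def total(key, values):
    """Reducer: aggregate the counts for one url."""
    return sum(values)


hits = reduce_phase(shuffle(map_phase(LOG, successful_hits)), total)
print(hits)
```

Because the mapper looks at one record at a time and the reducer looks at one key at a time, the work can be split across as many cheap machines as you have, which is where the low cost and the scale come from.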

So as I said - the Hadoop pioneers went out and built their systems without much reference to vendors such as Oracle, IBM, Microsoft and the like, and being blunt, they won’t have much time for traditional Oracle BI&DW developers like ourselves. But for those customers who are heavily invested in Oracle technology, and who see advantages in deploying Hadoop and big data technologies to make their systems more flexible, scaleable and cheaper to run - that’s where ODI and OBIEE’s connectivity to these technologies becomes interesting.

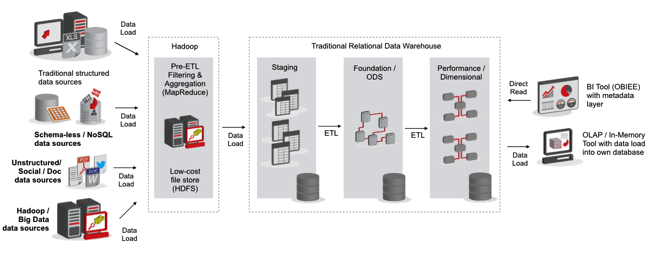

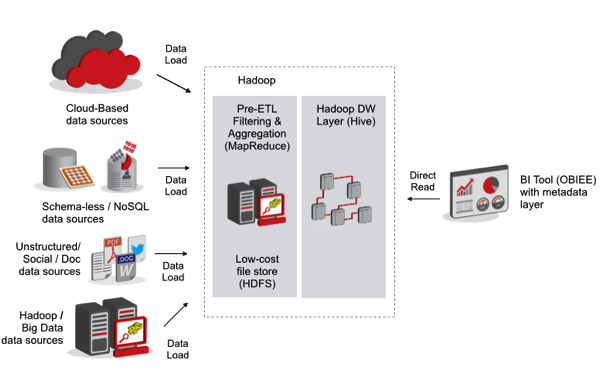

To take the example of customers who are looking to deploy Hadoop technologies to enhance their Oracle data warehouse - a typical architecture going down this route would look like this:

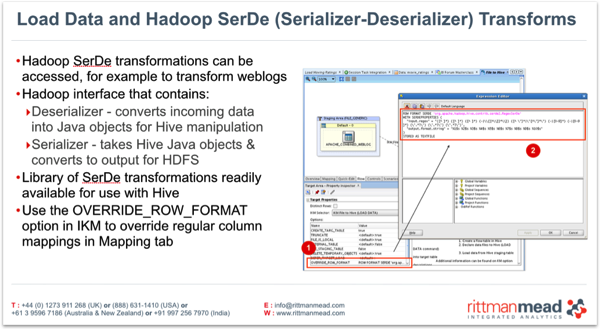

In this example, we’re using HDFS - Hadoop Distributed File System - as a pre-staging area for the data warehouse, storing incoming files cheaply and with built-in fault tolerance, to the point where storage is so cheap that you might as well keep data you’re not interested in now but think might be interesting in the future. Using Oracle Direct Connector for HDFS, you can set up Oracle Database external tables that map onto HDFS files just like files on any other file system, so you can extract from and otherwise work with these files without worrying about writing MapReduce jobs. Through the Oracle Data Integrator Application Adapter for Hadoop, you can connect ODI to these table sources as well, and work with them just like any other topology source, as I show in the slide below from my upcoming UKOUG Tech’13 session on ODI, OBIEE and Hadoop that’s running in a couple of weeks’ time in Manchester:

As well as storing data, you can also do simple filtering and transformation on that data, using the Hadoop framework. Most upfront data processing you do as part of an ETL process involves filtering out data you’re not interested in, joining data sets, grouping and aggregating data, and other large-scale data transformation tasks, before you then load it into the foundation/ODS layer and do more complex work. And this simple filtering and transformation is what Hadoop does best, on cheap hardware or even in the cloud - and if your customer is already invested in ODI and runs the rest of their ETL process using it, it’s relatively simple to add Hadoop capabilities to it, using ODI to orchestrate the data processing steps but using Hadoop to do the heavy lifting, as my slide below shows:

Now some customers, and of course Hadoop vendors, say that in reality you don’t even need the Oracle database if you’re going to build a data warehouse, or more realistically a data mart. Now that’s a bigger question and probably one that depends on the particular customer and circumstances, but a typical architecture that takes this approach might look like this:

In this case, ODI again has capabilities to transform data entirely within Hadoop - with ODI acting as the ETL framework and co-ordinator, but Hadoop doing the heavy lifting - and there’s always the ability to get the data out of Hadoop and into a main Oracle data warehouse, if the Hadoop system is more of a data mart or department-specific analysis environment. But whichever way - in most cases the customer is going to want to continue to use their existing BI tool, particularly if their BI strategy involves bringing together data from lots of different systems, as you can do with OBIEE’s federated query capability - giving you an overall architecture that looks like this:

So it’s this context that makes OBIEE’s connectivity to Hadoop so important; I’m not saying that someone creating a Hadoop system from scratch is going to go out and buy OBIEE as their query tool - more typically, they’ll use other open-source tools or create models in tools like R; or they might go out and buy a lightweight data visualisation tool like Tableau and use that to connect solely to their Hadoop source. But the customers we work with have typically got much wider requirements for BI, have a need for an enterprise metadata model, recognise the value of data and report governance, and (at least at present) access most of their data from traditional relational and OLAP sources. But they will still be interested in accessing data from Hadoop sources, and OBIEE’s new capability to connect to this type of data, together with closer integration with Endeca and its unstructured and semi-structured sources, addresses this need.

So there you have it - that’s why I think OBIEE and ODI’s ability to connect to Hadoop is a big deal, and it’s why I think developers using those tools should be interested in how it works, and should try and set up their own Hadoop systems to see it in action. As I said, I’ll be covering this topic in some detail at the UKOUG Tech’13 Conference in Manchester in a couple of weeks’ time, so if you’re there on the Sunday come along and I’ll try and explain how I think it all fits together.