Introducing Oracle Big Data Discovery Part 1: "The Visual Face of Hadoop"

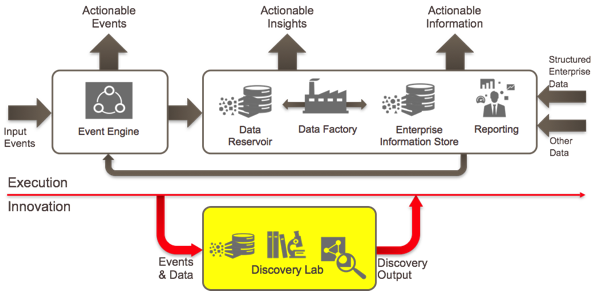

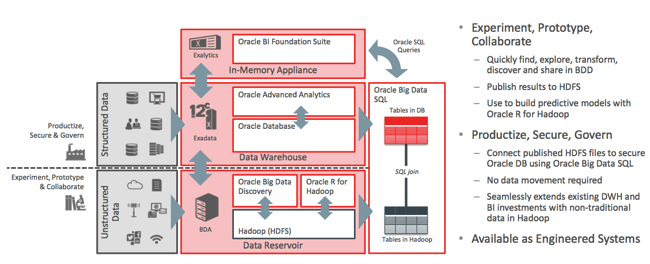

Oracle Big Data Discovery was released last week, the latest addition to Oracle's big data tools suite that includes Oracle Big Data SQL, ODI and its Hadoop capabilities, and Oracle GoldenGate for Big Data 12c. Introduced by Oracle as “the visual face of Hadoop”, Big Data Discovery combines the data discovery and visualisation elements of Oracle Endeca Information Discovery with data loading and transformation features built on Apache Spark to deliver a tool aimed at the “Discovery Lab” part of the Oracle Big Data and Information Management Reference Architecture.

Most readers of this blog will probably be aware of Oracle Endeca Information Discovery, based on the Endeca Latitude product acquired as part of the Endeca acquisition. Oracle positioned Endeca Information Discovery (OEID) in two main ways: on the one hand as a data discovery tool for textual and unstructured data that complemented the more structured analysis capabilities of Oracle Business Intelligence, and on the other hand as a fast click-and-refine data exploration tool similar to Qlikview and Tableau.

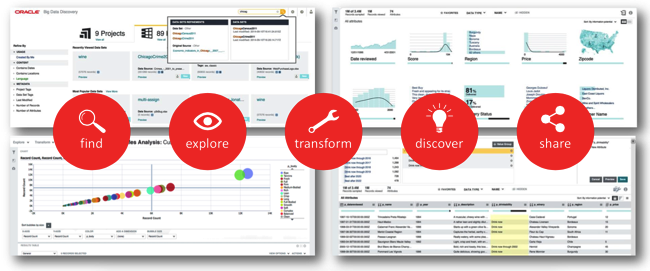

The problem for Oracle, though, was that data discovery against files and documents is a bit of a “solution looking for a problem” and doesn’t have a naturally huge market (especially considering the license cost of OEID Studio and the Endeca Server engine that stores and analyzes the data). Qlikview and Tableau, meanwhile, are significantly cheaper than OEID (at least at the start) and are more focused on BI-type tasks, making OEID a good tool but not one with a mass market. To address this, whilst OEID will continue as a standalone tool, the data discovery and unstructured data analysis parts of OEID are making their way into this new product called Oracle Big Data Discovery, whilst the fast click-and-refine features will surface as part of Visual Analyzer in OBIEE12c.

More importantly, Big Data Discovery will run on Hadoop, making it a solution for a real problem: how to catalog, explore, refine and visualise the data in the data reservoir. This is where data has been landed that might be in schema-on-read databases and might need further analysis and understanding, and where users need large-scale tooling to extract the nuggets of information that in time make their way into the “Execution” part of the Big Data and Information Management Reference Architecture. As someone who’s admired the technology behind Endeca Information Discovery but sometimes struggled to find real-life use-cases or customers for it, I’m really pleased to see its core technology applied to a problem space that I’m encountering every day with Rittman Mead’s customers.

In this first post, I’ll look at how Big Data Discovery is architected and how it works with Cloudera CDH5, the Hadoop distribution we use with our customers (Hortonworks HDP support is coming soon). In the next post I’ll look at how data is loaded into Big Data Discovery and then cataloged and transformed using the BDD front-end; then finally, we’ll take a look at exploring and analysing data using the visual capabilities of BDD, which evolved from the Studio tool within OEID. Oracle Big Data Discovery 1.0 is now GA (Generally Available), but as you’ll see in a moment you do need a fairly powerful setup to run it, at least until such time as Oracle release a compact install version running on a VM.

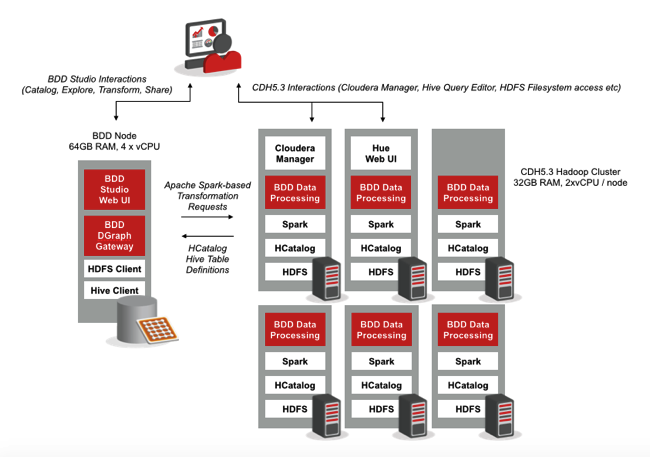

To run Big Data Discovery you’ll need access to a Hadoop install, which in most cases will consist of 6 to 18 or so Hadoop nodes running Cloudera CDH5.3 (the minimum is 3 or 4 nodes, but 6 is the minimum we use). BDD generally runs on its own server nodes and can itself be clustered, but for our setup we ran 1 BDD node alongside 6 CDH5.3 Hadoop nodes, looking like this:

Oracle Big Data Discovery is made up of three component types, highlighted in red in the above diagram. Two of these typically run on their own dedicated BDD nodes, and the third runs on each node in the Hadoop cluster (though there are various install types, including all on one node for demo purposes):

- The Studio web user interface, which combines the faceted search and data discovery parts of Endeca Information Discovery Studio with a lightweight data transformation capability

- The DGraph Gateway, which brings Endeca Server search/analytics capabilities to the world of Hadoop, and

- The Data Processing component that runs on each of the Hadoop nodes, and uses Hive’s HCatalog feature to read Hive table metadata and Apache Spark to load and transform data in the cluster

The Studio component can run across several nodes for high-availability and load-balancing, while the DGraph element can run on a single node as I’ve set it up, or in a cluster with a single “leader” node and multiple “follower” nodes, again for enhanced availability and throughput. The DGraph part then works alongside Apache Spark to run intensive search and analytics on subsets of the whole Hadoop dataset, with sample sets of data being moved into the DGraph engine and any resulting transformations then being applied to the whole Hadoop dataset using Apache Spark. All of this then runs as part of the wider Oracle Big Data product architecture, which uses Big Data Discovery and Oracle R for the discovery lab and Oracle Exadata, Oracle Big Data Appliance and Oracle Big Data SQL to take discovery lab innovations to the wider enterprise audience.
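To make the sample-then-apply idea a little more concrete, here is a minimal sketch in plain Python. It is not BDD's API and uses neither DGraph nor Spark; the function names, the `city` field and the sample size are all hypothetical, chosen only to illustrate the pattern of working out a transformation on a small sample and then replaying it over the full dataset.

```python
import random


def draw_sample(records, sample_size, seed=0):
    """Pick a reproducible random subset of the full dataset.

    Stands in for the sample set that is moved into the discovery engine.
    """
    rng = random.Random(seed)
    if sample_size >= len(records):
        return list(records)
    return rng.sample(records, sample_size)


def trim_and_upper_city(record):
    """A hypothetical transformation worked out interactively on the sample."""
    cleaned = dict(record)  # copy so the input record is left untouched
    cleaned["city"] = record["city"].strip().upper()
    return cleaned


def apply_to_all(records, transformations):
    """Replay the recorded transformations over every record.

    In the architecture described above this step would be a Spark job
    on the cluster; here it is just a loop (an assumption for illustration).
    """
    result = []
    for record in records:
        for transform in transformations:
            record = transform(record)
        result.append(record)
    return result


# Hypothetical "full" dataset with untidy city values.
full_dataset = [{"id": i, "city": f"  city{i % 3} "} for i in range(1000)]

# 1. Explore and refine against a small sample.
sample = draw_sample(full_dataset, sample_size=50)
preview = apply_to_all(sample, [trim_and_upper_city])

# 2. Once the result looks right, apply the same steps to everything.
transformed = apply_to_all(full_dataset, [trim_and_upper_city])
```

The point of the design is that the expensive, interactive work only ever touches the sample, while the full dataset is processed once, in bulk, with steps that have already been validated.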

So how does Oracle Big Data Discovery work in practice, and what’s a typical workflow? How does it give us the capability to make sense of structured, semi-structured and unstructured data in the Hadoop data reservoir, and how does it look from the perspective of an Oracle Endeca Information Discovery developer, or an OBIEE/ODI developer? Check back for the next parts in this three-part series, where I’ll first look at the data transformation and exploration capabilities of Big Data Discovery, and then look at how the Studio web interface brings data discovery and data visualisation to Hadoop.