OpenAI Gives Vision to Oracle DB, Part 1: Preparing the Image Data

Image Data in Oracle DB

OpenAI's newly released GPT-4o model, with its ground-breaking multimodal processing capabilities, has generated significant interest in the AI community. Building on my previous exploration of integrating OpenAI's text-based models with Oracle database queries, this series of articles will delve into utilizing GPT-4o's image recognition capabilities within the same framework. We will investigate the methodologies involved in passing image data from an Oracle database query and receiving a corresponding textual response from GPT-4o.

While the storage of binary data in Oracle databases through the BLOB data type is well-established, the prevalence of images in standard database tables remains relatively uncommon. This may be attributed to the historically limited capabilities for analysing and classifying such data. However, with the advent of advanced image recognition technologies, the integration of images within databases presents new opportunities. In this article, we will explore the foundational steps of preparing image data for storage in Oracle databases, a domain that may be uncharted territory even for seasoned Oracle developers.

Use Python

Python, with its extensive libraries for database connectivity and image manipulation, offers an efficient solution for automating the preparation, optimisation and insertion of multiple image files from a folder into an Oracle database table, eliminating the need for tedious manual processes.

Preparing Image Data

While images optimized for viewing typically require larger file sizes, higher resolutions and colour depth, these attributes can often be reduced for efficient image recognition by AI models without significant impact on accuracy.

Following optimization, images must be encoded in base64 format for transmission via web service requests to the OpenAI model.

Python facilitates efficient JPEG image scaling, quality adjustment, and base64 encoding.

Source Data and Target DB Table

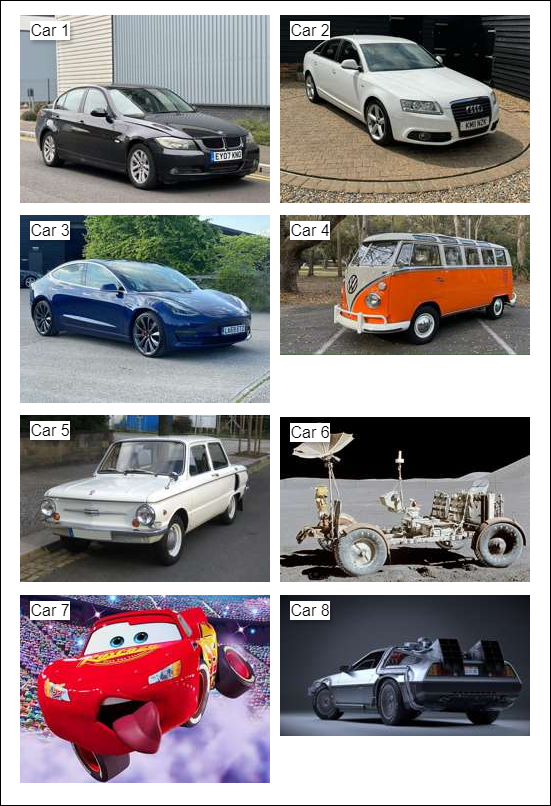

For our source data, we will use a diverse set of eight car images sourced from the internet. A representative example, in its original size, is shown below:

Our objective is to employ the OpenAI GPT-4o model to identify the make, model, and colour of each car. Additionally, we will track the number of tokens consumed during analysis to gauge the cost associated with OpenAI API calls.

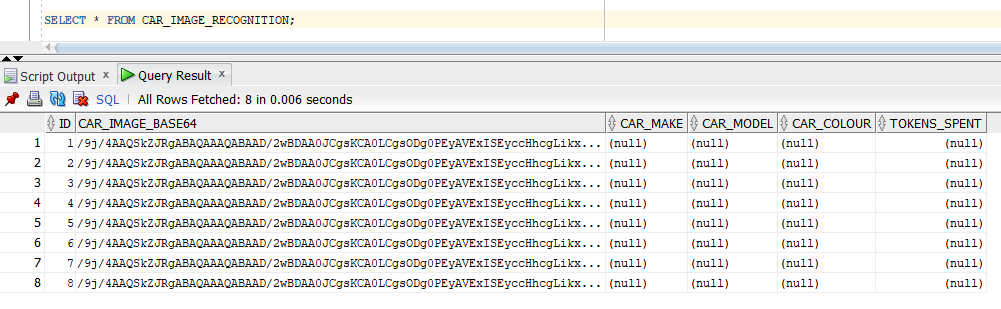

To store the results, we have created the following Oracle database table:

CREATE TABLE CAR_IMAGE_RECOGNITION (

ID NUMBER(10,0) PRIMARY KEY,

CAR_IMAGE_BASE64 CLOB NOT NULL,

CAR_MAKE VARCHAR(250 CHAR) NULL,

CAR_MODEL VARCHAR(250 CHAR) NULL,

CAR_COLOUR VARCHAR(250 CHAR) NULL,

TOKENS_SPENT NUMBER(10,0) NULL

);In this blog post, we will focus on populating the first two columns of the CAR_IMAGE_RECOGNITION table. The remaining columns will be addressed in the next blog post.

Writing the Python Script

We will use the following Python packages to prepare our image data and insert it into the Oracle database:

Pillowpackage - for manipulating JPEG files.base64package - for converting binary content to base64 text content.oracledbpackage - for accessing the Oracle database. (Please note that thecx_Oraclepackage is now obsolete and has been superseded by theoracledbpackage.)

We start by setting up database access parameters, JPEG image root folder and JPEG file optimisation attributes:

db_user = 'OPENAI_TEST'

db_password = 'OPENAI_TEST'

db_dsn = 'localhost:1521/ORCL'

image_folder = './images'

optimised_image_max_size = (250, 250) # image will be scaled down so that it does not go outside this box

optimised_image_jpg_quality = 60 # jpeg quality score

use_optimised_images = True # if set to False, the original, non-optimised images will be inserted into the Oracle table- The maximum image dimension of 250 pixels, while maintaining aspect ratio, does not necessitate square images.

- A JPEG compression quality score of 60, while introducing visible compression artifacts, typically maintains sufficient image fidelity for recognition purposes.

- A

use_optimized_imagesflag is introduced to optionally disable image optimization, enabling comparison of AI model efficiency between original and optimised images.

optimise_images function

def optimise_images():

print('-= Optimise JPG Image size and quality for Image Recognition =-')

print(f'Image folder: {image_folder}')

summary_original_image_size = 0

summary_optimised_image_size = 0

files_processed = 0

for filename in os.listdir(image_folder):

if filename.endswith('.jpg') and not filename.endswith('.optimised.jpg'):

print(f'\nProcessing file {filename}:')

original_image_path = os.path.join(image_folder, filename)

optimised_image_path = original_image_path[:-4] + '.optimised.jpg'

with Image.open(original_image_path) as img:

img.thumbnail(optimised_image_max_size)

img.save(optimised_image_path, 'JPEG', quality=optimised_image_jpg_quality)

original_image_size = os.path.getsize(original_image_path)

optimised_image_size = os.path.getsize(optimised_image_path)

print(f'\tOriginal size :{original_image_size} ({original_image_size/1024} KB)')

print(f'\tOptimised size :{optimised_image_size} ({optimised_image_size/1024} KB)')

print(f'\tSize reduction: {round(optimised_image_size / original_image_size * 100, 1)}%')

summary_original_image_size += original_image_size

summary_optimised_image_size += optimised_image_size

files_processed += 1

print('\nSUMMARY:')

print(f'\tNumber of files processed: {files_processed}')

print(f'\tTotal original file size: {summary_original_image_size / 1024} KB')

print(f'\tTotal optimised file size: {summary_optimised_image_size / 1024} KB')

print(f'\tTotal size improvement: {round(summary_optimised_image_size / summary_original_image_size * 100, 1)}%')

print('\n')- The function selects all JPEG images within the "images" folder, applies transformations using the Pillow package, and saves the modified versions with the

.optimised.jpgextension in the same directory. - The function, upon saving the optimised image, compares the original and optimized JPEG file sizes to determine the percentage improvement achieved.

establish_connection function

def establish_connection():

return oracledb.connect(user=db_user, password=db_password, dsn=db_dsn)- An Oracle database connection is established via the

oracledbpackage. - Notably, the

connectfunction accommodates awallet_locationparameter if database access necessitates a wallet.

insert_image function

def insert_image(connection, cursor, record_id, image_data):

delete_sql = 'DELETE FROM CAR_IMAGE_RECOGNITION where ID = :id'

cursor.execute(delete_sql, id=record_id)

connection.commit()

insert_sql = 'INSERT INTO CAR_IMAGE_RECOGNITION (ID, CAR_IMAGE_BASE64) VALUES (:id, :img_data)'

clob_var = cursor.var(oracledb.CLOB)

clob_var.setvalue(0, image_data.decode('utf-8')) # Decode to string - required for CLOB

cursor.execute(insert_sql, id=record_id, img_data=clob_var)

connection.commit()- Prepared image data, now in base64 text format, is inserted into the

CAR_IMAGE_RECOGNITIONtable using the CLOB datatype. - The CLOB datatype is used due to the base64 encoding of the image data - when passed to this function, the data is no longer binary.

insert_images function

def insert_images():

with establish_connection() as connection:

cursor = connection.cursor()

print('-= Insert images into Oracle database =-')

record_id = 1

for filename in os.listdir(image_folder):

if (use_optimised_images and filename.endswith('.optimised.jpg')) or (not use_optimised_images and filename.endswith('.jpg') and not filename.endswith('.optimised.jpg')):

image_path = os.path.join(image_folder, filename)

with open(image_path, 'rb') as image_file:

raw_image_data = image_file.read()

base64_image_data = base64.b64encode(raw_image_data) # binary to base64 conversion required because image is passed as a string to the OpenAI API

insert_image(connection, cursor, record_id, base64_image_data)

print(f'\tInserted image: {filename}, raw size: {len(raw_image_data)}, base64 size: {len(base64_image_data)}, in Tokens: {len(base64_image_data) // 4}')

record_id = record_id+1

cursor.close()- Optimized JPEG files within the image root folder are iterated over.

- The

base64package is employed to convert binary file content into base64 format. - Each image is inserted into the Oracle database using the

insert_imagefunction. - Inserted image sizes are displayed before and after base64 conversion, along with estimated OpenAI token usage (note that this estimation does not accurately reflect actual OpenAI token consumption, which typically is much lower).

Running the Script

To run the process end-to-end, execute these two functions:

optimise_images()

insert_images()

print('Done.')The Result

The console output, when running the script above, looks like this:

-= Optimise JPG Image size and quality for Image Recognition =-

Image folder: ./images

Processing file car_01.jpg:

Original size :75942 (74.162109375 KB)

Optimised size :8288 (8.09375 KB)

Size reduction: 10.9%

Processing file car_02.jpg:

Original size :199448 (194.7734375 KB)

Optimised size :9283 (9.0654296875 KB)

Size reduction: 4.7%

Processing file car_03.jpg:

Original size :243207 (237.5068359375 KB)

Optimised size :8879 (8.6708984375 KB)

Size reduction: 3.7%

Processing file car_04.jpg:

Original size :458438 (447.693359375 KB)

Optimised size :7669 (7.4892578125 KB)

Size reduction: 1.7%

Processing file car_05.jpg:

Original size :193411 (188.8779296875 KB)

Optimised size :6711 (6.5537109375 KB)

Size reduction: 3.5%

Processing file car_06.jpg:

Original size :194297 (189.7431640625 KB)

Optimised size :7822 (7.638671875 KB)

Size reduction: 4.0%

Processing file car_07.jpg:

Original size :58734 (57.357421875 KB)

Optimised size :10164 (9.92578125 KB)

Size reduction: 17.3%

Processing file car_08.jpg:

Original size :686737 (670.6416015625 KB)

Optimised size :4431 (4.3271484375 KB)

Size reduction: 0.6%

SUMMARY:

Number of files processed: 8

Total original file size: 2060.755859375 KB

Total optimised file size: 61.7646484375 KB

Total size improvement: 3.0%

-= Insert images into Oracle database =-

Inserted image: car_01.optimised.jpg, raw size: 8288, base64 size: 11052, in Tokens: 2763

Inserted image: car_02.optimised.jpg, raw size: 9283, base64 size: 12380, in Tokens: 3095

Inserted image: car_03.optimised.jpg, raw size: 8879, base64 size: 11840, in Tokens: 2960

Inserted image: car_04.optimised.jpg, raw size: 7669, base64 size: 10228, in Tokens: 2557

Inserted image: car_05.optimised.jpg, raw size: 6711, base64 size: 8948, in Tokens: 2237

Inserted image: car_06.optimised.jpg, raw size: 7822, base64 size: 10432, in Tokens: 2608

Inserted image: car_07.optimised.jpg, raw size: 10164, base64 size: 13552, in Tokens: 3388

Inserted image: car_08.optimised.jpg, raw size: 4431, base64 size: 5908, in Tokens: 1477

Done.

Process finished with exit code 0

The optimization process significantly compressed the 8 car images, resulting in a total file size reduction to 3% of the original. All optimized images are under 10 KB. The estimated token count, as we will see in my next blog post, proved inaccurate and exceeded actual usage, rendering the figures in the output above unreliable for image data.

Let us take a look at the database table... As we can see, the data has been successfully loaded:

How do the optimized images appear? Let us take a look:

Note that while the images are sufficiently large to identify the make and the model, it cannot be used to reliably read number plates, which is possible from the original images.

The Next Steps

The image data, now size-optimized and converted to base64 format for compatibility with OpenAI models, has been successfully inserted into the Oracle database. This completes our data preparation stage, paving the way for analysis using the OpenAI's new GPT-4o model. The process of passing this data to the model and interpreting its insights will be the focus of my next blog post.