Oracle Exalytics Week : The Case for Exalytics

OBIEE 11.1.1.6 was released last week, and what with all the news on the new features, and support for version control in the BI Administration Tool, the thing that most excited me about the release wasn't what the release specifically contained, but instead what it enabled - Oracle Exalytics In-Memory Machine. The team here at Rittman Mead are particularly excited about Exalytics and what it means for Oracle BI systems, so this week we're going to run a five-part series on Exalytics, going into what it does, where it fits in with the Oracle BI architecture, how it provides the reported performance gains, and how some of the new Exalytics-specific tools work. Here's the week's agenda, and I'll add the links in as week post each article:

- Oracle Exalytics Week : The Case for Exalytics

- Oracle Exalytics Week : Introducing Oracle Exalytics In-Memory Machine

- Oracle Exalytics Week : Exalytics & TimesTen - Under the Covers

- Oracle Exalytics Week : The Summary Advisor

- Oracle Exalytics Week : How Does it Perform, and How can I Test It?

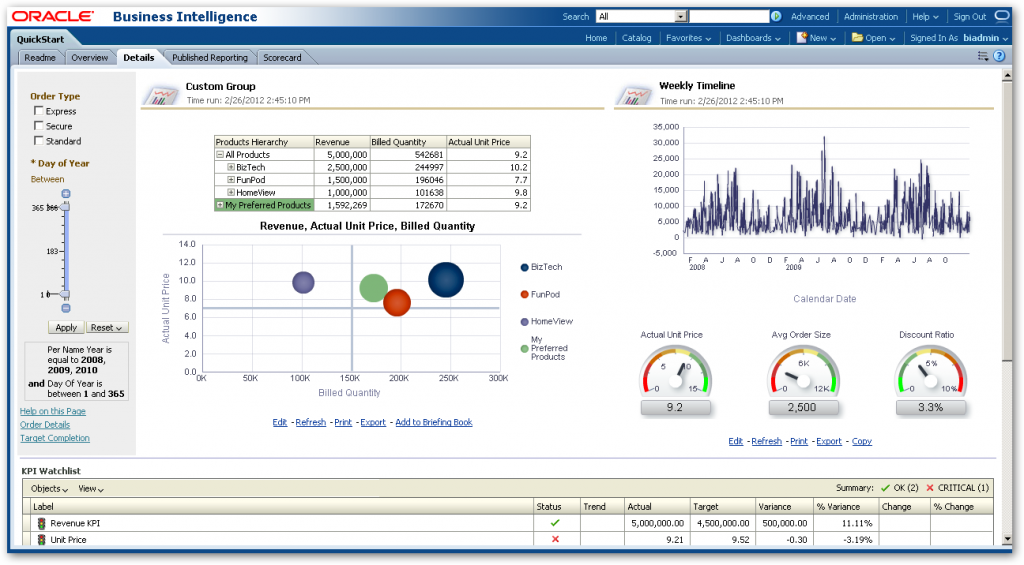

So let's start by understanding why Oracle put together Exalytics. For anyone who's new to Oracle BI, Oracle Business Intelligence 11g provides a set of web-based tools for creating reports, ad-hoc analyses, dashboards, alerts and other BI objects. Using a set of servers such as the Oracle BI Server, Oracle BI Presentation Server and the Oracle BI Scheduler, rich-interactive dashboards can be created that source their data from a range of Oracle, and non-Oracle, data sources.

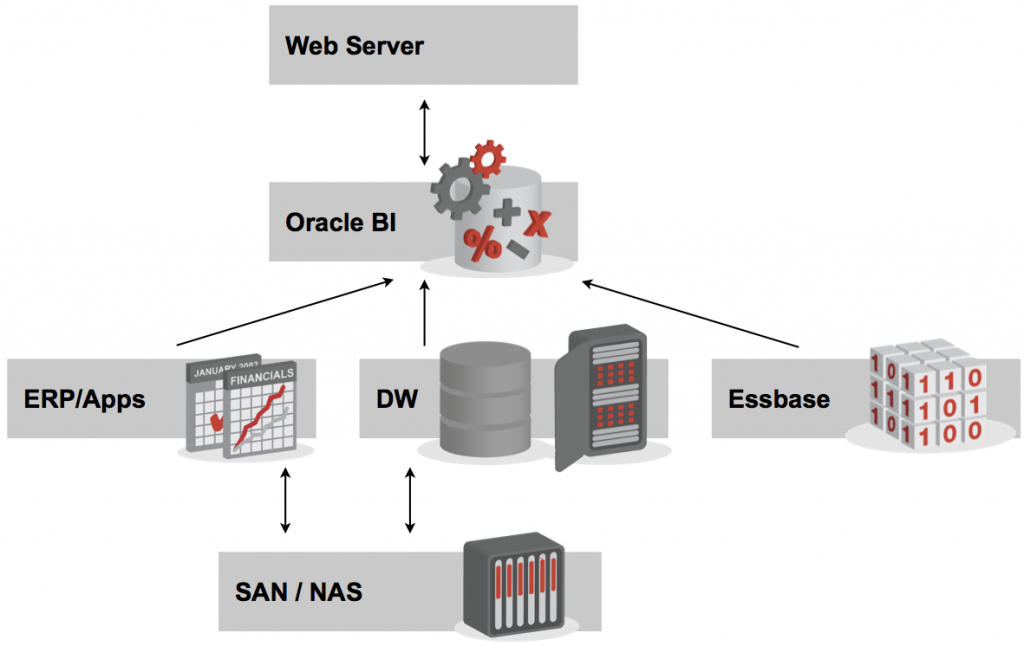

Oracle Business Intelligence provides a number of features above and beyond what you get with a data warehouse, or reports created using Excel. Oracle BI provides the query and presentation element within a full BI and DW deployment, with the most visible manifestation being the dashboards, and catalogs of reports, ad-hoc analyses, alerts, KPIs and scorecards that you can create with the system. Under the covers, the BI Server component within Oracle BI provides additional calculation and data federation capabilities, as well as aggregate navigation, connections to multi-dimensional databases such as Oracle Essbase and Oracle OLAP, and security that is based on WebLogic/Fusion Middleware 11g application roles and policies, that you can use to restrict access to your reporting data regardless of where it came from. The diagram below shows BI within the context of a full Oracle BI and DW technology stack:

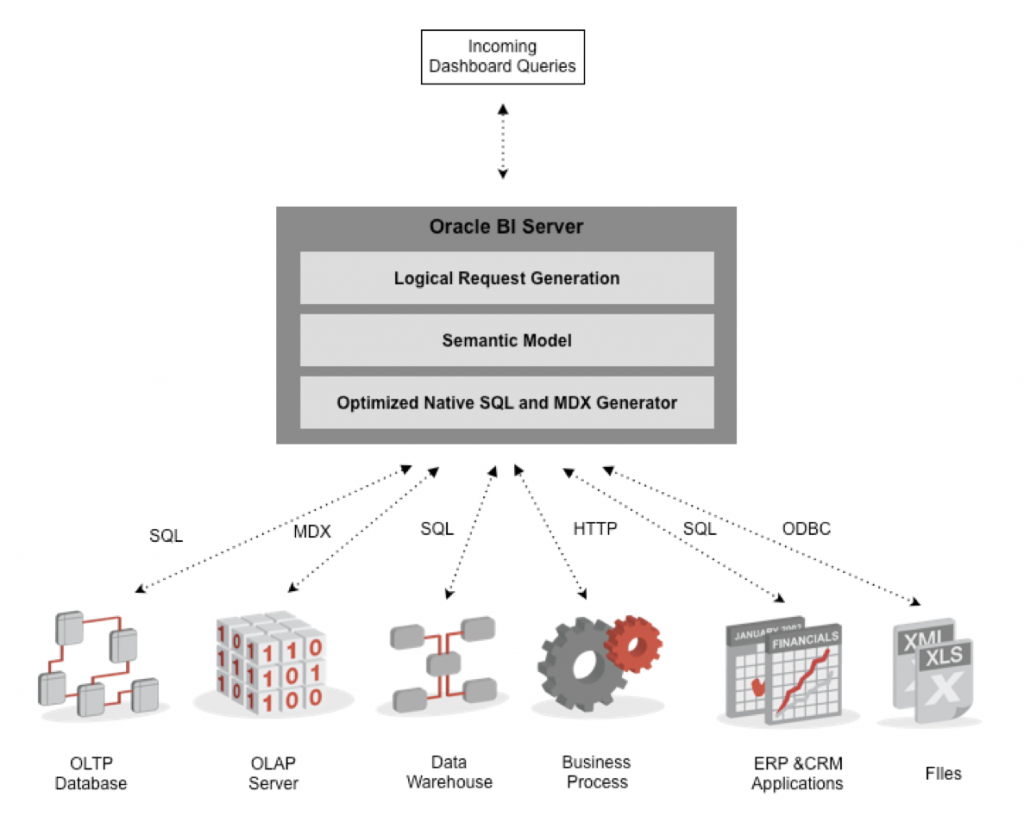

So how does Oracle BI access and return data to end-users, via the dashboard? If you read my series of postings a couple of years ago on the internals of the Oracle BI Server (parts one, two and three are online here), you'll know that the BI Server uses the Oracle BI Repository to translate incoming "logical" SQL requests into physical SQL, MDX, HTTP and other calls to the underlying data sources, which then return data that the BI Server combines, further manipulates, and then sends back to the dashboard in the form of analyses. The diagram below shows the data from to, and from the BI Server component within Oracle Business Intelligence.

So, in any BI system, and with Oracle BI specifically, where does the "heavy lifting" happen? Your aim, as a developer or DBA should be to return data to users with consistent, sub-second response times, but large data volumes and unpredictable queries can make this job tough. When you analyze the response time for a query, using the log files from OBIEE or diagnostic data from the Oracle database or Enterprise Manager, you'll find that most of the time goes in:

- The database returning the query results

- Storage returning data to the database server

- Network bottlenecks between the storage server and the database server

Database and storage tuning can help with this, and certainly Oracle Exadata can help with filtering and aggregating very large sets of detail-level data, but even with this in place query times can be erratic. Depending on what data is accessed and over what time period, queries can still take 30 seconds, or three minutes, or whatever to return, which is an improvement on the 30 minutes + you experience before tuning and Exadata, but it's not the consistent, sub-second response times that users will require if they are to explore and navigate the data on their dashboards, and response time only gets worse the more concurrent users you add. So what solutions exist to solve this type of requirement?

Readers of this blog will already have a number of BI performance solutions in mind when considering this type of requirement. You could:

- Buy an Exadata box, or lots of Exadata boxes, as several of our clients have done, but this is an expensive option

- Add in materialized views, more indexes, partitioning schemes and so on, but these also increase the complexity of loading and managing the database, and there's diminishing returns after a while

- Or you could use OLAP technologies such as Oracle Essbase, Oracle OLAP or Microsoft SQL Server, but that's a whole new technology to learn and manage, and it's not going to scale to the size of your data warehouse

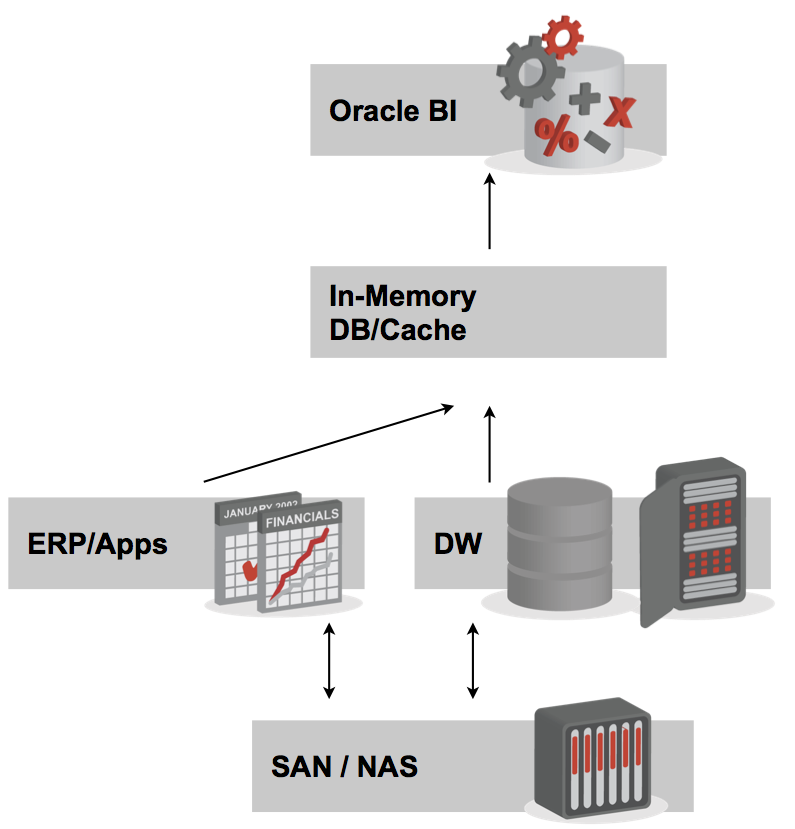

A new option though, brought about by falls in hardware costs and in-particular, memory costs, is to use some sort of in-memory database, or cache, to speed up your users's queries and dashboards.

By moving some or all of your reporting data into RAM, you can potentially get sub-second response times, consistent response times, and a technology that doesn't require a while load of administration or management. That's what Oracle Exalytics In-Memory Machine is, and I'll go through the product overview in tomorrow's posting in this series.